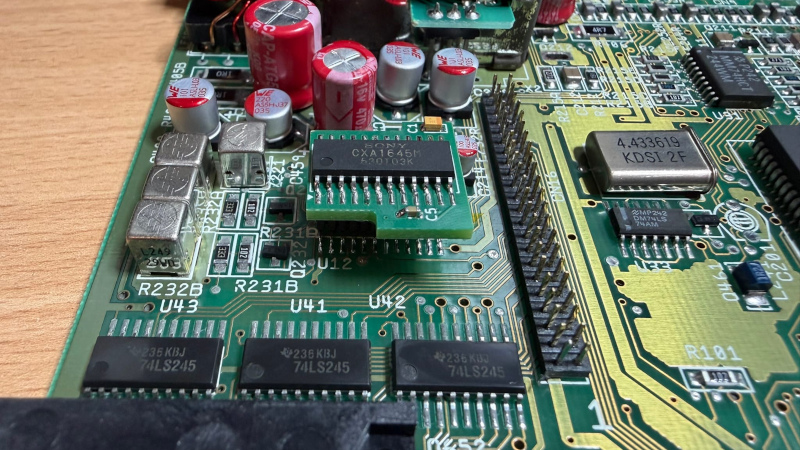

Exciting news from the tech world! China has developed a prototype extreme ultraviolet (EUV) lithography machine, a game-changer for advanced chip manufacturing! This innovation could pave the way for faster and more efficient technology to power our future.

Isn't it incredible how just a few brilliant minds can lead to such monumental advancements? It reminds me that every great invention started with a single idea—kind of like how my morning coffee starts with just a humble bean! ☕️

Let’s keep dreaming big and pushing boundaries, because the future is brighter than ever!

Read more about this groundbreaking development here: https://arabhardware.net/post-52930

#Innovation #TechNews #FutureIsBright #EUV #ChinaTech
