WWW.WSJ.COM
Swept Away Review: The Avett Brothers Set Sail on Broadway
Directed by Michael Mayer and featuring a fierce star turn from John Gallagher Jr., this musical draws on the band's songs to depict the misbegotten voyage of a 19th-century ship.

-

WWW.WSJ.COM
René Magritte Painting Sells for a Record-Breaking $121.2 Million
The surrealist painter joins a small group of artists whose work has sold for over $100 million.

-

ARSTECHNICA.COM
The key moment came 38 minutes after Starship roared off the launch pad

Turning point

SpaceX wasn't able to catch the Super Heavy booster, but Starship is on the cusp of orbital flight.

Stephen Clark, Nov 19, 2024 11:57 pm

The sixth flight of Starship lifts off from SpaceX's Starbase launch site at Boca Chica Beach, Texas. Credit: SpaceX.

SpaceX launched its sixth Starship rocket Tuesday, proving for the first time that the stainless steel ship can maneuver in space and paving the way for an even larger, upgraded vehicle slated to debut on the next test flight.

The only hiccup was an abortive attempt to catch the rocket's Super Heavy booster back at the launch site in South Texas, something SpaceX achieved on the previous flight October 13. The Starship upper stage flew halfway around the world, reaching an altitude of 118 miles (190 kilometers) before plunging through the atmosphere for a pinpoint slow-speed splashdown in the Indian Ocean.

The sixth flight of the world's largest launcher, standing 398 feet (121.3 meters) tall, began with a lumbering liftoff from SpaceX's Starbase facility near the US-Mexico border at 4 pm CST (22:00 UTC) Tuesday. The rocket headed east over the Gulf of Mexico propelled by 33 Raptor engines clustered on the bottom of its Super Heavy first stage.

A few miles away, President-elect Donald Trump joined SpaceX founder Elon Musk to witness the launch. The SpaceX boss became one of Trump's closest allies in this year's presidential election, giving the world's richest man extraordinary influence in US space policy. Sen. Ted Cruz (R-Texas) was there, too, among other lawmakers. Gen.
Chance Saltzman, the top commander in the US Space Force, stood nearby, chatting with Trump and other VIPs.

Elon Musk, SpaceX's CEO, President-elect Donald Trump, and Gen. Chance Saltzman of the US Space Force watch the sixth launch of Starship Tuesday. Credit: Brandon Bell/Getty Images

From their viewing platform, they watched Starship climb into a clear autumn sky. At full power, the 33 Raptors chugged more than 40,000 pounds of super-cold liquid methane and liquid oxygen per second. The engines generated 16.7 million pounds of thrust, 60 percent more than the Soviet N1, the second-largest rocket in history.

Eight minutes later, the rocket's upper stage, itself also known as Starship, was in space, completing the program's fourth straight near-flawless launch. The first two test flights faltered before reaching their planned trajectory.

A brief but crucial demo

As exciting as it was, we've seen all that before. One of the most important new things engineers desired to test on this flight occurred about 38 minutes after liftoff.

That's when Starship reignited one of its six Raptor engines for a brief burn to make a slight adjustment to its flight path. The burn only lasted a few seconds, and the impulse was small, just a 48 mph (77 km/hour) change in velocity, or delta-V, but it demonstrated the ship can safely deorbit itself on future missions.

With this achievement, Starship will likely soon be cleared to travel into orbit around Earth and deploy Starlink internet satellites or conduct in-space refueling experiments, two of the near-term objectives on SpaceX's Starship development roadmap.

Launching Starlinks aboard Starship will allow SpaceX to expand the capacity and reach of its commercial consumer broadband network, which, in turn, provides revenue for Musk to reinvest into Starship.
Orbital refueling is an enabler for Starship voyages beyond low-Earth orbit, fulfilling SpaceX's multibillion-dollar contract with NASA to provide a human-rated Moon lander for the agency's Artemis program. Likewise, transferring cryogenic propellants in orbit is a prerequisite for sending Starships to Mars, making real Musk's dream of creating a settlement on the red planet.

Artist's illustration of Starship on the surface of the Moon. Credit: SpaceX

Until now, SpaceX has intentionally launched Starships to speeds just shy of the blistering velocities needed to maintain orbit. Engineers wanted to test the Raptor's ability to reignite in space on the third Starship test flight in March, but the ship lost control of its orientation, and SpaceX canceled the engine firing.

Before going for a full orbital flight, officials needed to confirm Starship could steer itself back into the atmosphere for reentry, ensuring it wouldn't present any risk to the public with an unguided descent over a populated area. After Tuesday, SpaceX can check this off its to-do list.

"Congrats to SpaceX on Starship's sixth test flight," NASA Administrator Bill Nelson posted on X. "Exciting to see the Raptor engine restart in space, major progress towards orbital flight. Starship's success is Artemis' success. Together, we will return humanity to the Moon & set our sights on Mars."

While it lacks the pizazz of a fiery launch or landing, the engine relight unlocks a new phase of Starship development. SpaceX has now proven the rocket is capable of reaching space with a fair measure of reliability. Next, engineers will fine-tune how to reliably recover the booster and the ship, and learn how to use them.

Acid test

SpaceX appears well on the way to doing this. While SpaceX didn't catch the Super Heavy booster with the launch tower's mechanical arms Tuesday, engineers have shown they can do it. The challenge of catching Starship itself back at the launch pad is more daunting.
The ship starts its reentry thousands of miles from Starbase, traveling approximately 17,000 mph (27,000 km/hour), and must thread the gap between the tower's catch arms within a matter of inches.

The good news here is SpaceX has now twice proven it can bring Starship back to a precision splashdown in the Indian Ocean. In October, the ship settled into the sea in darkness. SpaceX moved the launch time for Tuesday's flight to the late afternoon, setting up for splashdown shortly after sunrise northwest of Australia.

The shift in time paid off with some stunning new visuals. Cameras mounted on the outside of Starship beamed dazzling live views back to SpaceX through the Starlink network, showing a now-familiar glow of plasma encasing the spacecraft as it plowed deeper into the atmosphere. But this time, daylight revealed the ship's flaps moving to control its belly-first descent toward the ocean. After passing through a deck of low clouds, Starship reignited its Raptor engines and tilted from horizontal to vertical, making contact with the water tail-first within view of a floating buoy and a nearby aircraft in position to observe the moment.

The ship made it through reentry despite flying with a substandard heat shield. Starship's thermal protection system is made up of thousands of ceramic tiles to protect the ship from temperatures as high as 2,600 degrees Fahrenheit (1,430 degrees Celsius).

Kate Tice, a SpaceX engineer hosting the company's live broadcast of the mission, said teams at Starbase removed 2,100 heat shield tiles from Starship ahead of Tuesday's launch. Their removal exposed wider swaths of the ship's stainless steel skin to super-heated plasma, and SpaceX teams were eager to see how well the spacecraft held up during reentry.
In the language of flight testing, this approach is called exploring the corners of the envelope, where engineers evaluate how a new airplane or rocket performs in extreme conditions.

"Don't be surprised if we see some wackadoodle stuff happen here," Tice said. There was nothing of the sort. One of the ship's flaps appeared to suffer some heating damage, but it remained intact and functional, and the harm looked to be less substantial than damage seen on previous flights.

Many of the removed tiles came from the sides of Starship where SpaceX plans to place catch fittings on future vehicles. These are the hardware protuberances that will catch on the top side of the launch tower's mechanical arms, similar to fittings used on the Super Heavy booster.

"The next flight, we want to better understand where we can install catch hardware, not necessarily to actually do the catch but to see how that hardware holds up in those spots," Tice said. "Today's flight will help inform, does the stainless steel hold up like we think it may, based on experiments that we conducted on Flight 5?"

Musk wrote on his social media platform X that SpaceX could try to bring Starship back to Starbase for a catch on the eighth test flight, which is likely to occur in the first half of 2025.

"We will do one more ocean landing of the ship," Musk said. "If that goes well, then SpaceX will attempt to catch the ship with the tower."

The heat shield, Musk added, is a focal point of SpaceX's attention. The delicate heat-absorbing tiles used on the belly of the space shuttle proved vexing to NASA technicians. Early in the shuttle's development, NASA had trouble keeping tiles adhered to the shuttle's aluminum skin. Each of the shuttle tiles was custom-machined to fit on a specific location on the orbiter, complicating refurbishment between flights.
Starship's tiles are all hexagonal in shape and agnostic to where technicians place them on the vehicle.

"The biggest technology challenge remaining for Starship is a fully & immediately reusable heat shield," Musk wrote on X. "Being able to land the ship, refill propellant & launch right away with no refurbishment or laborious inspection. That is the acid test."

This photo of the Starship vehicle for Flight 6, numbered Ship 31, shows exposed portions of the vehicle's stainless steel skin after tile removal. Credit: SpaceX

There were no details available Tuesday night on what caused the Super Heavy booster to divert from its planned catch on the launch tower. After detaching from the Starship upper stage less than three minutes into the flight, the booster reversed course to begin the journey back to Starbase.

Then, SpaceX's flight director announced the rocket would fly itself into the Gulf, rather than back to the launch site: "Booster offshore divert."

The booster finished off its descent with a seemingly perfect landing burn using a subset of its Raptor engines. As expected after the water landing, the booster, itself 233 feet (71 meters) tall, toppled and broke apart in a dramatic fireball visible to onshore spectators.

In an update posted to its website after the launch, SpaceX said automated health checks of hardware on the launch and catch tower triggered the aborted catch attempt. The company did not say what system failed the health check. As a safety measure, SpaceX must send a manual command for the booster to come back to land in order to prevent a malfunction from endangering people or property.

Turning it up to 11

There will be plenty more opportunities for more booster catches in the coming months as SpaceX ramps up its launch cadence at Starbase.
Gwynne Shotwell, SpaceX's president and chief operating officer, hinted at the scale of the company's ambitions last week.

"We just passed 400 launches on Falcon, and I would not be surprised if we fly 400 Starship launches in the next four years," she said at the Barron Investment Conference.

The next batch of test flights will use an improved version of Starship designated Block 2, or V2. Starship Block 2 comes with larger propellant tanks, redesigned forward flaps, and a better heat shield.

The new-generation Starship will hold more than 11 million pounds of fuel and oxidizer, about a million pounds more than the capacity of Starship Block 1. The booster and ship will produce more thrust, and Block 2 will measure 408 feet (124.4 meters) tall, stretching the height of the full stack by a little more than 10 feet.

Put together, these modifications should give Starship the ability to heave a payload of up to 220,000 pounds (100 metric tons) into low-Earth orbit, about twice the carrying capacity of the first-generation ship. Further down the line, SpaceX plans to introduce Starship Block 3 to again double the ship's payload capacity.

Just as importantly, these changes are designed to make it easier for SpaceX to recover and reuse the Super Heavy booster and Starship upper stage. SpaceX's goal of fielding a fully reusable launcher builds on the partial reuse SpaceX pioneered with its Falcon 9 rocket. This should dramatically bring down launch costs, according to SpaceX's vision.

With Tuesday's flight, it's clear Starship works. Now it's time to see what it can do.

Updated with additional details, quotes, and images.

Stephen Clark, Space Reporter. Stephen Clark is a space reporter at Ars Technica, covering private space companies and the world's space agencies. Stephen writes about the nexus of technology, science, policy, and business on and off the planet.


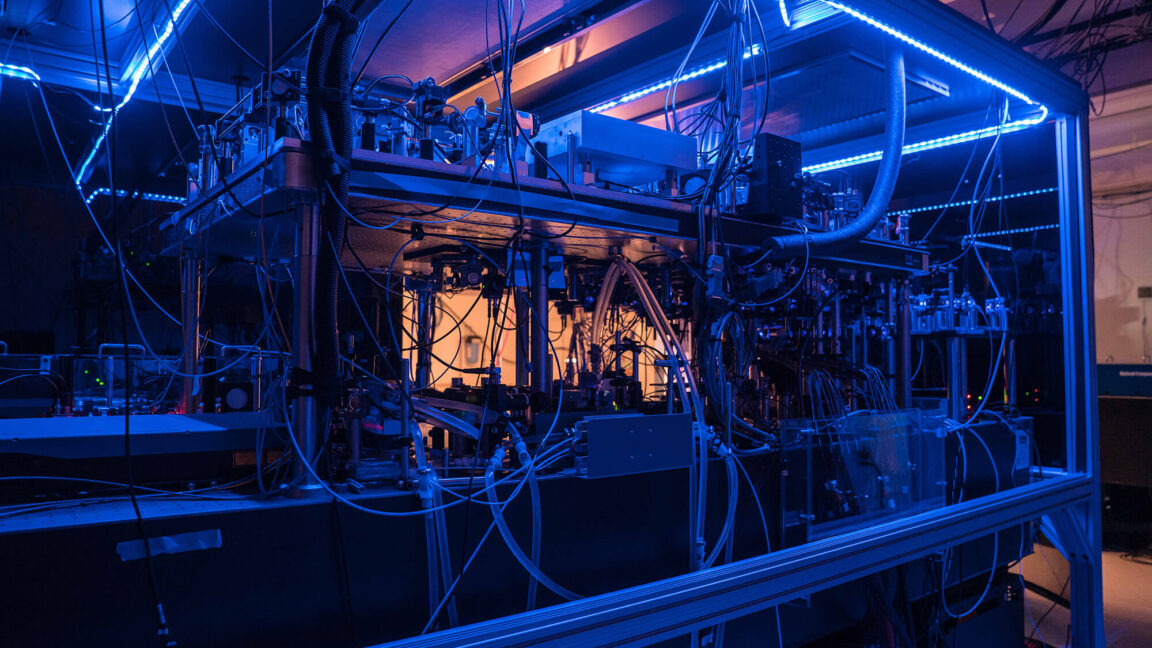

ARSTECHNICA.COM
Microsoft and Atom Computing combine for quantum error correction demo

Atomic power?

New work provides a good view of where the field currently stands.

John Timmer, Nov 19, 2024 4:00 pm

The first-generation tech demo of Atom's hardware. Things have progressed considerably since. Credit: Atom Computing

In September, Microsoft made an unusual combination of announcements. It demonstrated progress with quantum error correction, something that will be needed for the technology to move much beyond the interesting demo phase, using hardware from a quantum computing startup called Quantinuum. At the same time, however, the company also announced that it was forming a partnership with a different startup, Atom Computing, which uses a different technology to make qubits available for computations.

Given that, it was probably inevitable that the folks in Redmond, Washington, would want to show that similar error correction techniques would also work with Atom Computing's hardware. It didn't take long, as the two companies are releasing a draft manuscript describing their work on error correction today. The paper serves as both a good summary of where things currently stand in the world of error correction, as well as a good look at some of the distinct features of computation using neutral atoms.

Atoms and errors

While we have various technologies that provide a way of storing and manipulating bits of quantum information, none of them can be operated error-free. At present, errors make it difficult to perform even the simplest computations that are clearly beyond the capabilities of classical computers.
More sophisticated algorithms would inevitably encounter an error before they could be completed, a situation that would remain true even if we could somehow improve the hardware error rates of qubits by a factor of 1,000, something we're unlikely to ever be able to do.

The solution to this is to use what are called logical qubits, which distribute quantum information across multiple hardware qubits and allow the detection and correction of errors when they occur. Since multiple qubits get linked together to operate as a single logical unit, the hardware error rate still matters. If it's too high, then adding more hardware qubits just means that errors will pop up faster than they can possibly be corrected.

We're now at the point where, for a number of technologies, hardware error rates have passed the break-even point, and adding more hardware qubits can lower the error rate of a logical qubit based on them. This was demonstrated using neutral atom qubits by an academic lab at Harvard University about a year ago. The new manuscript demonstrates that it also works on a commercial machine from Atom Computing.

Neutral atoms, which can be held in place using a lattice of laser light, have a number of distinct advantages when it comes to quantum computing. Every single atom will behave identically, meaning that you don't have to manage the device-to-device variability that's inevitable with fabricated electronic qubits. Atoms can also be moved around, allowing any atom to be entangled with any other. This any-to-any connectivity can enable more efficient algorithms and error-correction schemes. The quantum information is typically stored in the spin of the atom's nucleus, which is shielded from environmental influences by the cloud of electrons that surround it, making them relatively long-lived qubits.

Operations, including gates and readout, are performed using lasers. The way the physics works, the spacing of the atoms determines how the laser affects them.
If two atoms are a critical distance apart, the laser can perform a single operation, called a two-qubit gate, that affects both of their states. Anywhere outside this distance, and a laser only affects each atom individually. This allows a fine control over gate operations.

That said, operations are relatively slow compared to some electronic qubits, and atoms can occasionally be lost entirely. The optical traps that hold atoms in place are also contingent upon the atom being in its ground state; if any atom ends up stuck in a different state, it will be able to drift off and be lost. This is actually somewhat useful, in that it converts an unexpected state into a clear error.

Atom Computing's system. Rows of atoms are held far enough apart so that a single laser sent across them (green bar) only operates on individual atoms. If the atoms are moved to the interaction zone (red bar), a laser can perform gates on pairs of atoms. Spaces where atoms can be held can be left empty to avoid performing unneeded operations. Credit: Reichardt, et al.

The machine used in the new demonstration hosts 256 of these neutral atoms. Atom Computing has them arranged in sets of parallel rows, with space in between to let the atoms be shuffled around. For single-qubit gates, it's possible to shine a laser across the rows, causing every atom it touches to undergo that operation. For two-qubit gates, pairs of atoms get moved to the end of the row and moved a specific distance apart, at which point a laser will cause the gate to be performed on every pair present.

Atom's hardware also allows a constant supply of new atoms to be brought in to replace any that are lost. It's also possible to image the atom array in between operations to determine whether any atoms have been lost and if any are in the wrong state.

It's only logical

As a general rule, the more hardware qubits you dedicate to each logical qubit, the more simultaneous errors you can identify.
This identification can enable two ways of handling the error. In the first, you simply discard any calculation with an error and start over. In the second, you can use information about the error to try to fix it, although the repair involves additional operations that can potentially trigger a separate error.

For this work, the Microsoft/Atom team used relatively small logical qubits (meaning they used very few hardware qubits), which meant they could fit more of them within the 256 total hardware qubits the machine made available. They also checked the error rate of both error detection with discard and error detection with correction.

The research team did two main demonstrations. One was placing 24 of these logical qubits into what's called a cat state, named after Schrödinger's hypothetical feline. This is when a quantum object simultaneously has non-zero probability of being in two mutually exclusive states. In this case, the researchers placed 24 logical qubits in an entangled cat state, the largest ensemble of this sort yet created. Separately, they implemented what's called the Bernstein-Vazirani algorithm. The classical version of this algorithm requires individual queries to identify each bit in a string of them; the quantum version obtains the entire string with a single query, so is a notable case of something where a quantum speedup is possible.

Both of these showed a similar pattern. When done directly on the hardware, with each qubit being a single atom, there was an appreciable error rate. By detecting errors and discarding those calculations where they occurred, it was possible to significantly improve the error rate of the remaining calculations.
Note that this doesn't eliminate errors, as it's possible for multiple errors to occur simultaneously, altering the value of the qubit without leaving an indication that can be spotted with these small logical qubits.

Discarding has its limits; as calculations become increasingly complex, involving more qubits or operations, it will inevitably mean every calculation will have an error, so you'd end up wanting to discard everything. Which is why we'll ultimately need to correct the errors.

In these experiments, however, the process of correcting the error (taking an entirely new atom and setting it into the appropriate state) was also error-prone. So, while it could be done, it ended up having an overall error rate that was intermediate between the approach of catching and discarding errors and the rate when operations were done directly on the hardware.

In the end, the current hardware has an error rate that's good enough that error correction actually improves the probability that a set of operations can be performed without producing an error. But not good enough that we can perform the sort of complex operations that would lead quantum computers to have an advantage in useful calculations. And that's not just true for Atom's hardware; similar things can be said for other error-correction demonstrations done on different machines.

There are two ways to go beyond these current limits. One is simply to improve the error rates of the hardware qubits further, as fewer total errors make it more likely that we can catch and correct them. The second is to increase the qubit counts so that we can host larger, more robust logical qubits. We're obviously going to need to do both, and Atom's partnership with Microsoft was formed in the hope that it will help both companies get there faster.

John Timmer, Senior Science Editor

John is Ars Technica's science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D.
in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
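
The query-count difference the article describes for the Bernstein-Vazirani algorithm can be illustrated with a minimal Python sketch. This is not the Microsoft/Atom team's code. It assumes a hidden bit string `secret` and an oracle that returns the parity of `secret AND x`; the names `make_oracle`, `classical_recover`, and `quantum_recover` are hypothetical. The classical routine needs one query per bit, while the simulated quantum routine applies the oracle once to a superposition of all inputs. The simulation tracks every amplitude, so it takes time exponential in the number of bits and only illustrates the query counts, not a real speedup.

```python
from math import sqrt


def parity(x: int) -> int:
    """Return 1 if x has an odd number of set bits, else 0."""
    return bin(x).count("1") % 2


def make_oracle(secret: int):
    """Build f(x) = secret . x (mod 2) plus a counter of how often it is called."""
    calls = {"count": 0}

    def oracle(x: int) -> int:
        calls["count"] += 1
        return parity(secret & x)

    return oracle, calls


def classical_recover(oracle, n_bits: int) -> int:
    """Classical strategy: probe one bit position per query (n_bits queries)."""
    secret = 0
    for i in range(n_bits):
        if oracle(1 << i):
            secret |= 1 << i
    return secret


def hadamard_all(amps):
    """Apply a Hadamard gate to every qubit (normalised Walsh-Hadamard transform)."""
    amps = list(amps)
    n = len(amps)
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for j in range(start, start + h):
                a, b = amps[j], amps[j + h]
                amps[j], amps[j + h] = a + b, a - b
        h *= 2
    norm = sqrt(n)
    return [a / norm for a in amps]


def quantum_recover(secret: int, n_bits: int) -> int:
    """State-vector simulation of Bernstein-Vazirani with a single phase-oracle query."""
    dim = 1 << n_bits
    state = [0.0] * dim
    state[0] = 1.0                      # start in |00...0>
    state = hadamard_all(state)         # uniform superposition over all inputs
    # One oracle application: each basis state x picks up the phase (-1)^(secret . x).
    state = [amp * (-1 if parity(secret & x) else 1) for x, amp in enumerate(state)]
    state = hadamard_all(state)         # interference concentrates amplitude on |secret>
    probs = [a * a for a in state]
    return max(range(dim), key=probs.__getitem__)


if __name__ == "__main__":
    hidden = 0b101101
    oracle, calls = make_oracle(hidden)
    print("classical:", bin(classical_recover(oracle, 6)), "queries:", calls["count"])
    print("quantum (simulated):", bin(quantum_recover(hidden, 6)), "queries: 1")
```

On real hardware the final measurement is where errors show up: a single flipped qubit yields a wrong bit string, which is why this algorithm is a convenient benchmark for comparing bare and logical qubits.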


WWW.INFORMATIONWEEK.COM
AI and the War Against Plastic Waste
Carrie Pallardy, Contributing Reporter
November 19, 2024
10 Min Read
Pollution floating in river, Mumbai, India. Credit: paul kennedy via Alamy Stock Photo

Plastic pollution is easy to visualize given that many rivers are choked with such waste and the oceans are littered with it. The Great Pacific Garbage Patch, a massive collection of plastic and other debris, is an infamous result of plastics proliferation. Even if you don't live near a body of water to see the problem firsthand, you're unlikely to walk far without seeing some piece of plastic crushed underfoot. But untangling this problem is anything but easy.

Enter artificial intelligence, which is being applied to many complex problems that include plastics pollution. InformationWeek spoke to research scientists and startup founders about why plastics waste is such a complicated challenge and how they use AI in their work.

The Plastics Problem

Plastic is ubiquitous today as food packaging, clothing, medical devices, cars, and so much more rely on this material.

"Since 1950, nearly 10 billion metric tons of plastic has been produced, and over half of that was just in the last 20 years. So, it's been this extremely prolific growth in production and use. It's partially due to just the absolute versatility of plastic," Chase Brewster, project scientist at Benioff Ocean Science Laboratory, a center for marine conservation at the University of California, Santa Barbara, says.

Plastic isn't biodegradable and recycling is imperfect. As more plastic is produced and more of it is wasted, much of that waste ends up back in the environment, polluting land and water as it breaks down into microplastics and nanoplastics.

Even when plastic products end up at waste management facilities, processing them is not simple. "A lot of people think of plastic as just plastic," Bradley Sutliff, a former National Institute of Standards and Technology (NIST) researcher, says.
In reality, there are many different complex polymers that fall under the plastics umbrella. Recycle and reuse isn't just a matter of sorting; it's a chemistry problem, too. Not every type of plastic can be mixed and processed into a recycled material.

Plastic is undeniably convenient as a low-cost material used almost everywhere. It takes major shifts in behavior to reduce its consumption, a change that is not always feasible.

Virgin plastic is cheaper than recycled plastic, which means companies are more likely to use the former. In turn, consumers are faced with the same economic choice, if they even have one.

"There is no one single answer to solving this environmental crisis. Plastic pollution is an economic, technical, educational, and behavioral problem," Joel Tasche, co-CEO and cofounder of CleanHub, a company focused on collecting plastic waste, says in an email interview.

So, how can AI arm organizations, policymakers, and people with the information and solutions to combat plastic pollution?

AI and Quantifying Plastic Waste

The problem of plastic waste is not new, but the sheer volume makes it difficult to gather the granular data necessary to truly understand the challenge and develop actionable solutions.

"If you look at the body of research on plastic pollution, especially in the marine environment, there is a large gap in terms of actually in situ collected data," says Brewster.

The Benioff Ocean Science Laboratory is working to change that through the Clean Currents Coalition, which focuses on removing plastic waste from rivers before it has the chance to enter the ocean.
The Coalition is partnered with local organizations in nine different countries, representing a diverse group of river systems, to remove and analyze plastic pollution.

"We started looking into what artificial intelligence can do to help us to collect that more fine data that can help drive our upstream action to reduce plastic production and plastic leaking into the environment in the first place," says Brewster.

The project is developing a machine learning model with hardware and software components. Webcams are positioned above the conveyor belts of large trash wheels used to collect plastic waste in rivers. Those cameras count and categorize trash as it is pulled from the river.

"This system automatically [sends] that to the cloud, to a data set, visualizing that on a dashboard that can actively tell us what types of trash are coming out of the river and at what rate," Brewster explains. "We have this huge data set from all over the world, collected synchronously over three years during the same time period, very diverse cultures, communities, river sizes, river geomorphologies."

That data can be leveraged to gain more insight into what kinds of plastic end up in rivers, which flow to our oceans, and to inform targeted strategies for prevention and cleanup.

AI and Waste Management

Very little plastic is actually recycled: just 5%, with some being combusted and the majority ending up in landfills. Waste management plants face the challenge of sorting through a massive influx of material, some recyclable and some not.
And, of course, plastic is not one uniform group that can easily be processed into reusable material.

AI and imaging equipment are being put to work in waste management facilities to tackle the complex job of sorting much more efficiently.

During Sutliff's time with NIST, a US government agency focused on industrial competitiveness, he worked with a team to explore how AI could make recycling less expensive.

Waste management facilities can use near-infrared (NIR) light to visualize and sort plastics. Sutliff and his team looked to improve this approach with machine learning.

"Our thought was that the computer might be a lot better at distinguishing which plastic is which if you teach it," he says. "You can get a pretty good prediction of things like density and crystallinity by using near infrared light if you train your models correctly."

The results of that work show promise, and Sutliff released the code to NIST's GitHub page. More accurate sorting can help waste management facilities monetize more recyclable materials, rather than incinerate them, send them to landfills, or potentially leak them back into the environment.

"Recyclers are based off of sorting plastics and then selling them to companies that will use them. And obviously, the company buying them wants to know exactly what they're getting. So, the better the recyclers can sort it, the more profitable it is," Sutliff says.

There are other organizations working with waste collectors to improve sorting and identification. CleanHub, for example, developed a track-and-trace process. Waste collectors take photos and upload them to its AI-powered app.

The app creates an audit trail, and machine learning predicts the composition and weight of the collected bags of trash.
"We focus on collecting both recyclable and non-recyclable plastics, directing recyclables back into the economy and converting non-recyclables into alternative fuels through co-processing, which minimizes environmental impact compared to traditional incineration," explains Tasche.

Greyparrot is an AI waste analytics company that started out by partnering with about a dozen recycling plants around the world, gathering a global data set to power its platform. Today, that platform provides facilities with insights into more than 89 different waste categories. Greyparrot's analyzers sit above the conveyor belts in waste management facilities, capturing images and sharing AI-powered insights. The latest generation of these analyzers is made of recyclable materials.

"If a given plant processes 10 tons or 15 tons of waste per day that accumulates to around like 20 million objects. We actually are looking at individually all those 20 million objects moving at two to three to four meters a second, very high-speed in real time," says Ambarish Mitra, co-founder of Greyparrot. "We are not only doing classification of the objects, which goes through a waste flow, we are [also] doing financial value extraction."

The more capable waste management facilities are of sorting and monetizing the plastic that flows into their operations, the more competitive the market for recycled materials can become.

"The entire waste and recycling industry is in constant competition with the virgin material market. Everything that either lowers cost or increases the quality of the output product is a step towards a circular economy," says Tasche.

AI and a Policy Approach

Plastic waste is a problem with global stakes, and policymakers are paying attention. In 2022, the United Nations announced plans to create an international legally binding agreement to end plastic pollution.
The treaty is currently going through negotiations, with another session slated to begin in November.

Scientists at the Benioff Ocean Science Laboratory and the Eric and Wendy Schmidt Center for Data Science & Environment at UC Berkeley developed the Global Plastics AI Policy Tool with the intention of understanding how different high-level policies could reduce plastic waste.

"This is a real opportunity to actually quantify or estimate what the impact of some of the highest priority policies that are on the table for the treaty [is] going to be," says Neil Nathan, a project scientist at the Benioff Ocean Science Laboratory.

Of the 175 nations that agreed to create the global treaty to end plastic pollution, 60 have agreed to reach that goal by 2040. "Ending plastic pollution by 2040 seems like an incredibly ambitious goal. Is that even possible?" asks Nathan. "One of the biggest findings for us is that it actually is close to possible."

The AI tool leverages historic plastic consumption data, global trade data, and population data. Machine learning algorithms, such as Random Forest, uncover historical patterns in plastic consumption and waste and project how those patterns could change in the future.

The team behind the tool has been tracking the policies up for discussion throughout the treaty negotiation process to evaluate which could have the biggest impact on outcomes like mismanaged waste, incinerated waste, and landfill waste.

Nathan offers the example of a minimum recycled content mandate. "This is essentially requiring that new products are made with a certain percentage, in this case 40%, of post-consumer recycled content. This alone actually will reduce plastic mismanaged waste leaking into [the] environment by over 50%," he says.

"It's been a really wonderful experience engaging with the plastic treaty, going into the United Nations meetings, working with delegates, putting this in their hands and seeing them being able to visualize the data and actually understanding the impact of these policies," Nathan adds.

AI and Product Development

How could AI impact plastic waste further upstream? Data collected and analyzed by AI systems could change how consumer packaged goods (CPG) companies produce plastic goods before they ever end up in the hands of consumers, waste facilities, and the environment.

For example, data gathered at waste management facilities can give product manufacturers insight into how their goods are actually being recycled, or not. "No two waste plants are identical," Mitra points out. "If your product gets recycled in plant A, doesn't mean you'll get recycled in plant B."

That insight could show companies where changes need to be made in order to make their products more recyclable.

Companies could increasingly be driven to make those kinds of changes by government policy, like the European Union's Extended Producer Responsibility (EPR) policies, as well as their own ESG goals.

"Millions of dollars [go] into packaging design. So, whatever will come out in '25 or '26, it's already designed, and whatever is being thought [of] for '26 and '27, it's in R&D today," says Mitra. "[Companies] definitely have a large appetite to learn from this and improve their packaging design to make it more recyclable rather than just experimenting with material without knowing how will they actually go through these mechanical sorting environments."

In addition to optimizing the production of plastic products and packaging for recyclability, AI can hunt for viable alternatives; novel materials discovery is a promising AI application.
As it sifts through vast repositories of data, AI might bring to light a material that has economic viability and less environmental impact than plastic.

Plastic has a long lifecycle, persisting for decades or even longer after it is produced. AI is being applied to every point of that lifecycle: from creation, to consumer use, to garbage and recycling cans, to waste management facilities, and to its environmental pollution. As more data is gathered, AI will be a useful tool for making strides toward achieving a circular economy and reducing plastic waste.

About the Author

Carrie Pallardy, Contributing Reporter

Carrie Pallardy is a freelance writer and editor living in Chicago. She writes and edits in a variety of industries including cybersecurity, healthcare, and personal finance.

WWW.INFORMATIONWEEK.COMAI and the War Against Plastic WasteCarrie Pallardy, Contributing ReporterNovember 19, 202410 Min ReadPollution floating in river, Mumbai, Indiapaul kennedyvia Alamy Stock PhotoPlastic pollution is easy to visualize given that many rivers are choked with such waste and the oceans are littered with it. The Great Pacific Garbage Patch, a massive collection of plastic and other debris, is an infamous result of plastics proliferation. Even if you dont live near a body of water to see the problem firsthand, youre unlikely to walk far without seeing some piece of plastic crushed underfoot. But untangling this problem is anything but easy.Enter artificial intelligence, which is being applied to many complex problems that include plastics pollution. InformationWeek spoke to research scientists and startup founders about why plastics waste is such a complicated challenge and how they use AI in their work.The Plastics ProblemPlastic is ubiquitous today as food packaging, clothing, medical devices, cars, and so much more rely on this material. Since 1950, nearly 10 billion metric tons of plastic has been produced, and over half of that was just in the last 20 years. So, it's been this extremely prolific growth in production and use. It's partially due to just the absolute versatility of plastic, Chase Brewster, project scientist at Benioff Ocean Science Laboratory, a center for marine conservation at the University of California, Santa Barbara, says.Related:Plastic isnt biodegradable and recycling is imperfect. As more plastic is produced and more of it is wasted, much of that waste ends up back in the environment, polluting land and water as it breaks down into microplastics and nanoplastics.Even when plastic products end up at waste management facilities, processing them is not simple. A lot of people think of plastic as just plastic, Bradley Sutliff, a former National Institute of Standards and Technology (NIST) researcher, says. 
In reality, there are many different complex polymers that fall under the plastics umbrella. Recycle and reuse isnt just a matter of sorting; its a chemistry problem, too. Not every type of plastic can be mixed and processed into a recycled material.Plastic is undeniably convenient as a low-cost material used almost everywhere. It takes major shifts in behavior to reduce its consumption, a change that is not always feasible.Virgin plastic is cheaper than recycled plastic, which means companies are more likely to use the former. In turn, consumers are faced with the same economic choice, if they even have one.There is no one single answer to solving this environmental crisis. Plastic pollution is an economic, technical, educational, and behavioral problem, Joel Tasche, co-CEO and cofounder of CleanHub, a company focused on collecting plastic waste, says in an email interview.Related:So, how can AI arm organizations, policymakers, and people with the information and solutions to combat plastic pollution?AI and Quantifying Plastic WasteThe problem of plastic waste is not new, but the sheer volume makes it difficult to gather the granular data necessary to truly understand the challenge and develop actionable solutions.If you look at the body of research on plastic pollution, especially in the marine environment, there is a large gap in terms of actually in situ collected data, says Brewster.The Benioff Ocean Science Laboratory is working to change that through the Clean Currents Coalition, which focuses on removing plastic waste from rivers before it has the chance to enter the ocean. 
The Coalition is partnered with local organizations in nine different countries, representing a diverse group of river systems, to remove and analyze plastic pollution.We started looking into what artificial intelligence can do to help us to collect that more fine data that can help drive our upstream action to reduce plastic production and plastic leaking into the environment in the first place, says Brewster.Related:The project is developing a machine learning model with hardware and software components. A web cam is positioned above the conveyor belts of large trash wheels used to collect plastic waste in rivers. Those cameras count and categorize trash as it is pulled from the river.This system automatically [sends] that to the cloud, to a data set, visualizing that on a dashboard that can actively tell us what types of trash are coming out of the river and at what rate, Brewster explains. We have this huge data set from all over the world, collected synchronously over three years during the same time period, very diverse cultures, communities, river sizes, river geomorphologies.That data can be leveraged to gain more insight into what kinds of plastic end up in rivers, which flow to our oceans, and to inform targeted strategies for prevention and cleanup.AI and Waste ManagementVery little plastic is actually recycled; just 5% with some being combusted and the majority ends up in landfills. Waste management plants face the challenge of sorting through a massive influx of material, some recyclable and some not. 
And, of course, plastic is not one uniform group that can easily be processed into reusable material.

AI and imaging equipment are being put to work in waste management facilities to tackle the complex job of sorting much more efficiently.

During Sutliff's time with NIST, a US government agency focused on industrial competitiveness, he worked with a team to explore how AI could make recycling less expensive.

Waste management facilities can use near-infrared (NIR) light to visualize and sort plastics. Sutliff and his team looked to improve this approach with machine learning.

"Our thought was that the computer might be a lot better at distinguishing which plastic is which if you teach it," he says. "You can get a pretty good prediction of things like density and crystallinity by using near infrared light if you train your models correctly."

The results of that work show promise, and Sutliff released the code to NIST's GitHub page. More accurate sorting can help waste management facilities monetize more recyclable materials, rather than incinerate them, send them to landfills, or potentially leak them back into the environment.

"Recyclers are based off of sorting plastics and then selling them to companies that will use them. And obviously, the company buying them wants to know exactly what they're getting. So, the better the recyclers can sort it, the more profitable it is," Sutliff says.

There are other organizations working with waste collectors to improve sorting and identification. CleanHub, for example, developed a track-and-trace process. Waste collectors take photos and upload them to its AI-powered app.

The app creates an audit trail, and machine learning predicts the composition and weight of the collected bags of trash.
"We focus on collecting both recyclable and non-recyclable plastics, directing recyclables back into the economy and converting non-recyclables into alternative fuels through co-processing, which minimizes environmental impact compared to traditional incineration," explains Tasche.

Greyparrot is an AI waste analytics company that started out by partnering with about a dozen recycling plants around the world, gathering a global data set to power its platform. Today, that platform provides facilities with insights into more than 89 different waste categories. Greyparrot's analyzers sit above the conveyor belts in waste management facilities, capturing images and sharing AI-powered insights. The latest generation of these analyzers is made of recyclable materials.

"If a given plant processes 10 tons or 15 tons of waste per day that accumulates to around like 20 million objects. We actually are looking at individually all those 20 million objects moving at two to three to four meters a second, very high-speed in real time," says Ambarish Mitra, co-founder of Greyparrot. "We are not only doing classification of the objects, which goes through a waste flow, we are [also] doing financial value extraction."

The more capable waste management facilities are of sorting and monetizing the plastic that flows into their operations, the more competitive the market for recycled materials can become.

"The entire waste and recycling industry is in constant competition with the virgin material market. Everything that either lowers cost or increases the quality of the output product is a step towards a circular economy," says Tasche.

AI and a Policy Approach

Plastic waste is a problem with global stakes, and policymakers are paying attention. In 2022, the United Nations announced plans to create an international legally binding agreement to end plastic pollution.
The treaty is currently going through negotiations, with another session slated to begin in November.

Scientists at the Benioff Ocean Science Laboratory and Eric and Wendy Schmidt Center for Data Science & Environment at UC Berkeley developed the Global Plastics AI Policy Tool with the intention of understanding how different high-level policies could reduce plastic waste.

"This is a real opportunity to actually quantify or estimate what the impact of some of the highest priority policies that are on the table for the treaty [is] going to be," says Neil Nathan, a project scientist at the Benioff Ocean Science Laboratory.

Of the 175 nations that agreed to create the global treaty to end plastic pollution, 60 have agreed to reach that goal by 2040. "Ending plastic pollution by 2040 seems like an incredibly ambitious goal. Is that even possible?" asks Nathan. "One of the biggest findings for us is that it actually is close to possible."

The AI tool leverages historic plastic consumption data, global trade data, and population data. Machine learning algorithms, such as Random Forest, uncover historical patterns in plastic consumption and waste and project how those patterns could change in the future.

The team behind the tool has been tracking the policies up for discussion throughout the treaty negotiation process to evaluate which could have the biggest impact on outcomes like mismanaged waste, incinerated waste, and landfill waste.

Nathan offers the example of a minimum recycled content mandate. "This is essentially requiring that new products are made with a certain percentage, in this case 40%, of post-consumer recycled content. This alone actually will reduce plastic mismanaged waste leaking into [the] environment by over 50%," he says.

"It's been a really wonderful experience engaging with the plastic treaty, going into the United Nations meetings, working with delegates, putting this in their hands and seeing them being able to visualize the data and actually understanding the impact of these policies," Nathan adds.

AI and Product Development

How could AI impact plastic waste further upstream? Data collected and analyzed by AI systems could change how consumer packaged goods (CPG) companies produce plastic goods before they ever end up in the hands of consumers, waste facilities, and the environment.

For example, data gathered at waste management facilities can give product manufacturers insight into how their goods are actually being recycled, or not. "No two waste plants are identical," Mitra points out. "If your product gets recycled in plant A, doesn't mean you'll get recycled in plant B."

That insight could show companies where changes need to be made in order to make their products more recyclable.

Companies could increasingly be driven to make those kinds of changes by government policy, like the European Union's Extended Producer Responsibility (EPR) policies, as well as their own ESG goals.

"Millions of dollars [go] into packaging design. So, whatever will come out in '25 or '26, it's already designed, and whatever is being thought [of] for '26 and '27, it's in R&D today," says Mitra. "[Companies] definitely have a large appetite to learn from this and improve their packaging design to make it more recyclable rather than just experimenting with material without knowing how will they actually go through these mechanical sorting environments."

In addition to optimizing the production of plastic products and packaging for recyclability, AI can hunt for viable alternatives; novel materials discovery is a promising AI application.
As it sifts through vast repositories of data, AI might bring to light a material that has economic viability and less environmental impact than plastic.

Plastic has a long lifecycle, persisting for decades or even longer after it is produced. AI is being applied to every point of that lifecycle: from creation, to consumer use, to garbage and recycling cans, to waste management facilities, and to its environmental pollution. As more data is gathered, AI will be a useful tool for making strides toward achieving a circular economy and reducing plastic waste.

About the Author

Carrie Pallardy, Contributing Reporter

Carrie Pallardy is a freelance writer and editor living in Chicago. She writes and edits in a variety of industries including cybersecurity, healthcare, and personal finance.

-

WWW.NEWSCIENTIST.COM
Being in space makes it harder for astronauts to think quickly

There is a lot to keep track of when working in space (Image: NASA via Getty Images)

Astronauts aboard the International Space Station (ISS) had slower memory, attention and processing speed after six months, raising concerns about the impact of cognitive impairment on future space missions to Mars.

The extreme environment of space, with reduced gravity, harsh radiation and the lack of regular sunrises and sunsets, can have dramatic effects on astronaut health, from muscle loss to an increased risk of heart disease. However, the cognitive effects of long-term space travel are less well documented.

Now, Sheena Dev at NASA's Johnson Space Center in Houston, Texas, and her colleagues have looked at the cognitive performance of 25 astronauts during their time on the ISS.

The team ran the astronauts through 10 tests, some of which were done on Earth, once before and twice after the mission, while others were done on the ISS, both early and later in the mission. These tests measured certain cognitive capacities, such as finding patterns on a grid to test abstract reasoning or choosing when to stop an inflating balloon before it pops to test risk-taking.

The researchers found that the astronauts took longer to complete tests measuring processing speed, working memory and attention on the ISS than on Earth, but they were just as accurate. While there was no overall cognitive impairment or lasting effect on the astronauts' abilities, some of the measures, like processing speed, took longer to return to normal after they came back to Earth.

Having clear data on the cognitive effects of space travel will be crucial for future human spaceflight, says Elisa Raffaella Ferrè at Birkbeck, University of London, but it will be important to collect more data, both on Earth and in space, before we know the full picture.

"A mission to Mars is not only longer in terms of time, but also in terms of autonomy," says Ferrè. "People there will have a completely different interaction with ground control because of distance and delays in communication, so they will need to be fully autonomous in taking decisions, so human performance is going to be key. You definitely don't want to have astronauts on Mars with slow reaction time, in terms of attention-related tasks or memory or processing speed."

It isn't surprising that there were some specific decreases in cognitive performance given the unusual environment of space, says Jo Bower at the University of East Anglia in Norwich, UK. "It's not necessarily a great cause for an alarm, but it's something that's useful to be aware of, especially so that you know your limits when you're in these extreme environments," she says.

That awareness could be especially helpful for astronauts on longer missions, adds Bower. "It's not just how you do in those tests, but also what your perception of your ability is," she says. "We know, for example, if you're sleep deprived, that quite often your performance will decline, but you won't realise your performance has declined."

Journal reference: Frontiers in Physiology DOI: 10.3389/fphys.2024.1451269

-