Synthesizing natural human motion that adapts to complex environments while offering creative control remains a core challenge in applications ranging from gaming to embodied AI. Current models often fall short, either by assuming flat terrain or by not supporting motion control through text-based inputs.

Xiaohan Zhang, Sebastian Starke, Vladimir Guzov, Zhensong Zhang, Eduardo Pérez Pellitero, and Gerard Pons-Moll, a team of researchers from multiple institutions, aim to overcome these limitations with SCENIC, a novel diffusion model designed to generate human motion that adapts to dynamic terrains in virtual scenes, while providing semantic control through natural language.

SCENIC


The main technical challenge is reasoning about intricate scene geometry while maintaining control via text prompts. The model must ensure physical plausibility and precise navigation across varied terrain while still following user-specified text instructions, such as “carefully stepping over obstacles” or “walking upstairs like a zombie”.

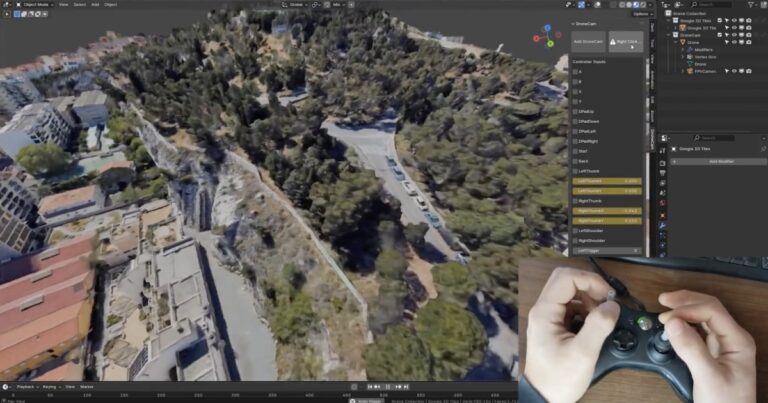

Using a hierarchical scene reasoning approach, SCENIC takes user-specified trajectory sub-goals and text prompts as input to guide motion generation within a 3D scene.
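SCENIC itself is a diffusion model whose internals are not described here, so the following is only a minimal sketch, under stated assumptions, of how a list of trajectory sub-goals, each paired with a text prompt, could drive generation segment by segment, with each segment starting where the previous one ended. The names `SubGoal`, `stair_height`, `generate_segment`, and `rollout` are hypothetical, and `generate_segment` is a placeholder that linearly interpolates the root position and snaps its height to a toy terrain instead of running a denoiser.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
Frame = Tuple[Vec3, str]  # (root position, active text prompt)


@dataclass
class SubGoal:
    """Hypothetical input format: a ground-plane waypoint plus a text prompt."""
    target_xz: Tuple[float, float]  # target root position on the ground plane (x, z)
    prompt: str                     # e.g. "walking upstairs like a zombie"


def stair_height(x: float, z: float) -> float:
    """Hypothetical terrain: flat floor up to x = 1, then 0.2 m steps every 0.5 m."""
    if x <= 1.0:
        return 0.0
    return 0.2 * int((x - 1.0) / 0.5)


def generate_segment(start: Vec3, goal: SubGoal, n_frames: int,
                     height_fn: Callable[[float, float], float]) -> List[Frame]:
    """Placeholder generator (NOT SCENIC): interpolates x/z toward the sub-goal
    and places the root on the terrain. A real model would produce full-body
    poses conditioned on the scene geometry and goal.prompt."""
    frames: List[Frame] = []
    for i in range(1, n_frames + 1):
        t = i / n_frames
        x = start[0] + t * (goal.target_xz[0] - start[0])
        z = start[2] + t * (goal.target_xz[1] - start[2])
        frames.append(((x, height_fn(x, z), z), goal.prompt))
    return frames


def rollout(start: Vec3, subgoals: List[SubGoal], frames_per_segment: int,
            height_fn: Callable[[float, float], float]) -> List[Frame]:
    """Chain segments so total length grows with the number of sub-goals."""
    motion: List[Frame] = []
    current = start
    for goal in subgoals:
        segment = generate_segment(current, goal, frames_per_segment, height_fn)
        motion.extend(segment)
        current = segment[-1][0]  # next segment starts where this one ended
    return motion


goals = [
    SubGoal((1.0, 0.0), "carefully stepping over obstacles"),
    SubGoal((2.0, 0.0), "walking upstairs like a zombie"),
]
motion = rollout((0.0, 0.0, 0.0), goals, frames_per_segment=4, height_fn=stair_height)
print(len(motion))  # 8
print(motion[-1])
```

Conditioning each segment on its own prompt is what lets the style change mid-sequence in this toy; how SCENIC actually combines scene, sub-goal, and text conditioning is not covered by this sketch.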

Experiments show that this diffusion model generates human motion sequences of arbitrary length, adapts to complex scenes with diverse terrain surfaces, and responds accurately to text prompts. The SCENIC code, dataset, and models will be publicly released here.
