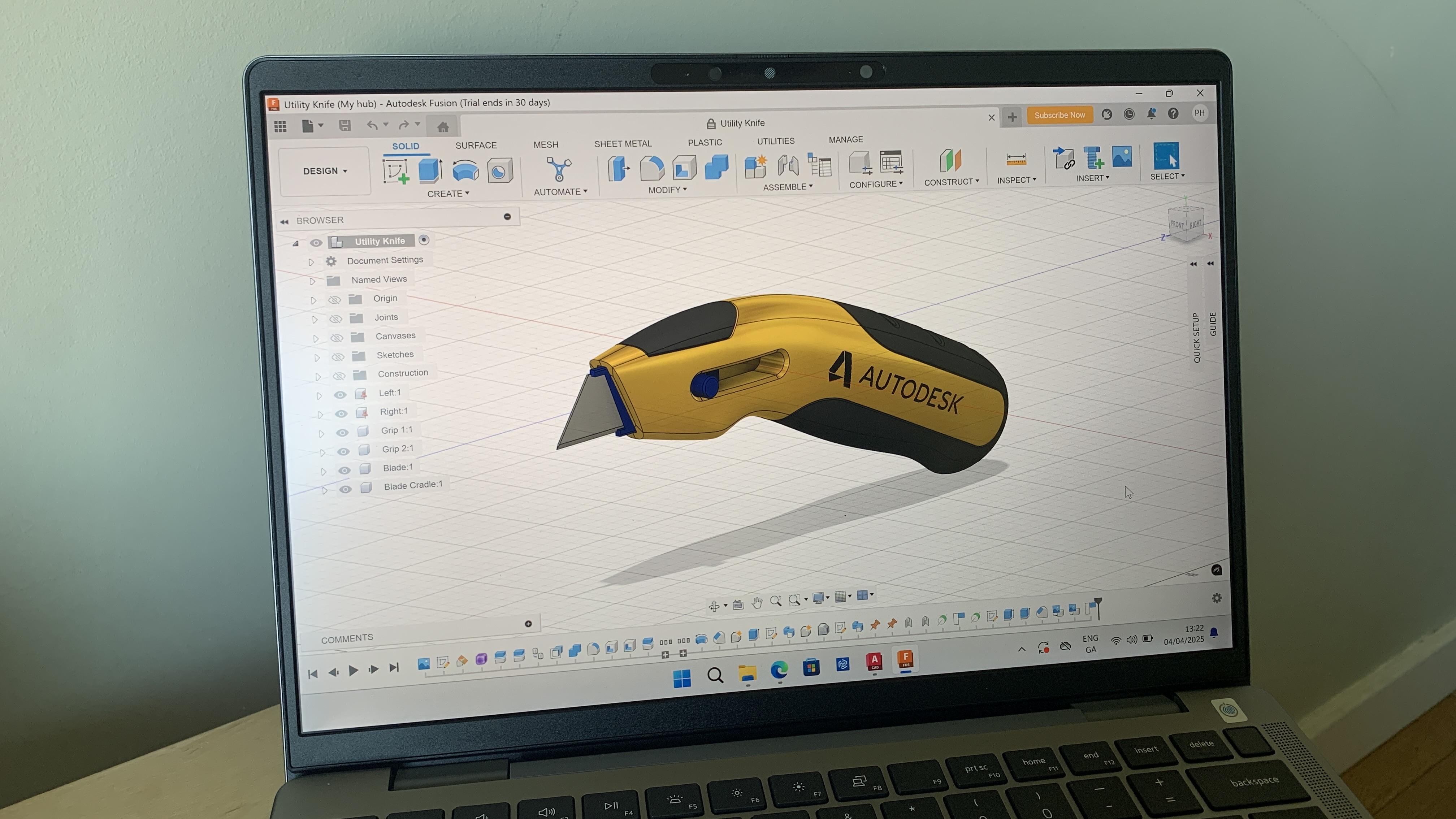

Ever wondered what it feels like to wield the power of a CAD app without selling a kidney? Enter Fusion 360, the “budget” superhero of parametric and collaborative design. It’s like they took all the complex engineering mumbo jumbo and distilled it into a drinkable form—minus the hangover, of course.

You can collaborate with your friends while designing something that may or may not be a functional toaster. Who needs professional software when you can have “powerful” and “affordable” in the same breath? Just don’t forget to remind your wallet how much it appreciated the break.

#Fusion360 #CAD #Design #BudgetTools #CollaborativeDesign