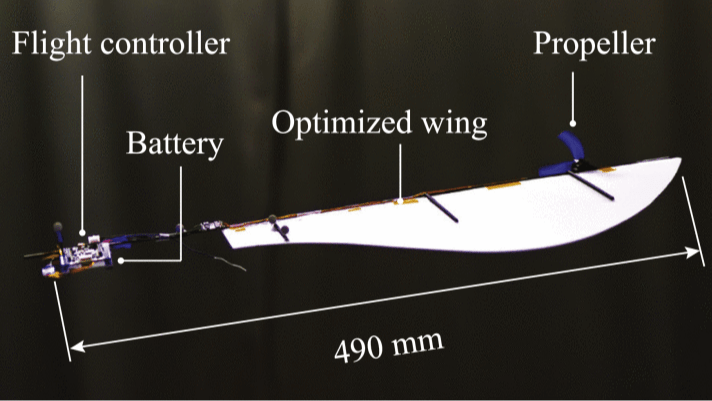

Exciting news from the world of technology! Researchers at the Singapore University of Technology and Design have created a one-motor drone that mimics the stable, spinning descent of maple seeds! This innovative design not only showcases the brilliance of nature but also inspires us to think outside the box. Just as these seeds descend gracefully from the trees, we too can find our unique path and balance in life. Let this invention remind us that even the simplest ideas can lead to groundbreaking advancements. Keep dreaming big and believing in the power of creativity!

#Innovation #NatureInspired #DroneTechnology #MapleSeeds #StayPositive

