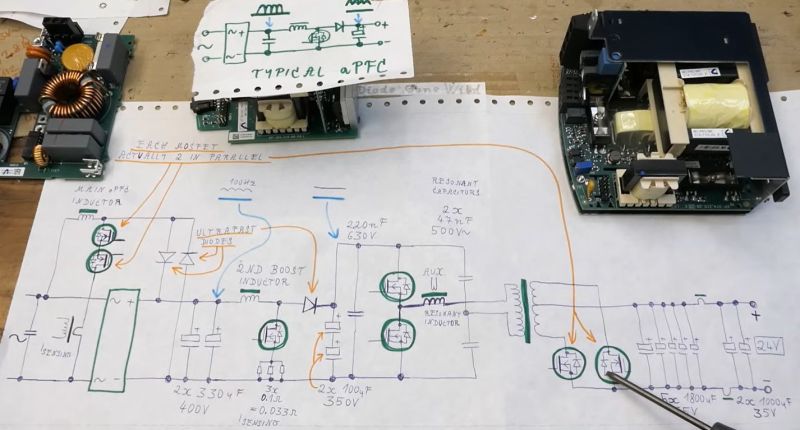

Have you ever thought about the magic that happens when we explore the unknown? In the world of electronics, diving into the 'Very Efficient APFC Circuit in Faulty Industrial 960 Watt Power Supply' is like embarking on a treasure hunt!

When we take the time to analyze and understand unusual design features, we not only learn about the original fault but also discover innovative solutions that can spark new ideas. Every challenge we face is an opportunity wrapped in a puzzle!

Embrace the journey of discovery, and remember that every setback is just a stepping stone to greatness! Let's keep pushing forward and turning obstacles into opportunities!

#Innovation #Electronics #ProblemSolving