WWW.WSJ.COM

'Smoky Mountain DNA: Family, Faith & Fables' Review: Dolly Parton's Country Kinfolk

The singer combines archival recordings and contemporary sessions to collaborate with relatives past and present on this 3-LP set that finds her digging into her roots and tracing musical evolutions across the generations.

-

WWW.WSJ.COM

'American, Born Hungary: Kertész, Capa, and the Hungarian American Photographic Legacy' Review: Capturing a Diaspora at VMFA

An exhibition at the Virginia Museum of Fine Arts focuses on photographers from the European nation who ended up in the U.S., where many of them forged brilliant careers.

-

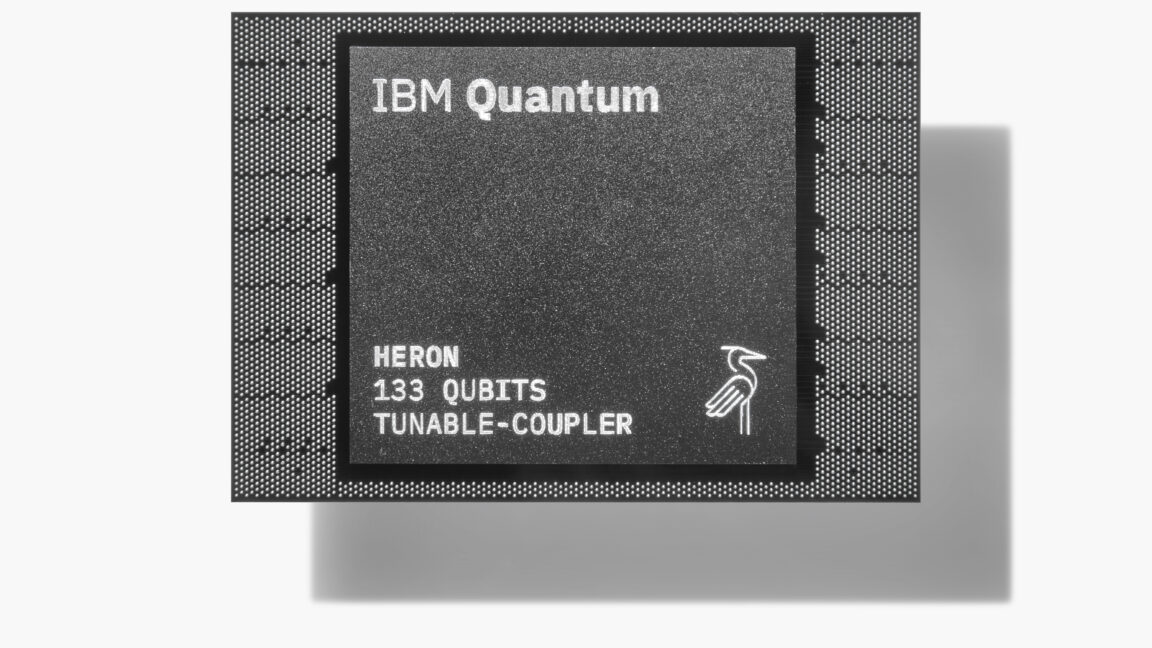

ARSTECHNICA.COM

IBM boosts the amount of computation you can get done on quantum hardware

Inching toward usefulness

Incremental improvements across the hardware and software stacks add up.

John Timmer | Nov 13, 2024 5:42 pm | Credit: IBM

There's a general consensus that we won't be able to consistently perform sophisticated quantum calculations without the development of error-corrected quantum computing, which is unlikely to arrive until the end of the decade. It's still an open question, however, whether we could perform limited but useful calculations at an earlier point. IBM is one of the companies that's betting the answer is yes, and on Wednesday, it announced a series of developments aimed at making that possible.

On their own, none of the changes being announced are revolutionary. But collectively, changes across the hardware and software stacks have produced much more efficient and less error-prone operations. The net result is a system that supports the most complicated calculations yet on IBM's hardware, leaving the company optimistic that its users will find some calculations where quantum hardware provides an advantage.

Better hardware and software

IBM's early efforts in the quantum computing space saw it ramp up the qubit count rapidly, being one of the first companies to reach the 1,000 qubit count. However, each of those qubits had an error rate that ensured that any algorithms that tried to use all of these qubits in a single calculation would inevitably trigger one. Since then, the company's focus has been on improving the performance of smaller processors. Wednesday's announcement was based on the introduction of the second version of its Heron processor, which has 133 qubits. That's still beyond the capability of simulations on classical computers, should it be able to operate with sufficiently low errors.

IBM VP Jay Gambetta told Ars that Revision 2 of Heron focused on getting rid of what are called TLS (two-level system) errors. "If you see this sort of defect, which can be a dipole or just some electronic structure that is caught on the surface, that is what we believe is limiting the coherence of our devices," Gambetta said. This happens because the defects can resonate at a frequency that interacts with a nearby qubit, causing the qubit to drop out of the quantum state needed to participate in calculations (called a loss of coherence).

By making small adjustments to the frequency that the qubits are operating at, it's possible to avoid these problems. This can be done when the Heron chip is being calibrated before it's opened for general use.

Separately, the company has done a rewrite of the software that controls the system during operations. "After learning from the community, seeing how to run larger circuits, [we were able to] almost better define what it should be and rewrite the whole stack towards that," Gambetta said. The result is a dramatic speed-up. "Something that took 122 hours now is down to a couple of hours," he told Ars.

Since people are paying for time on this hardware, that's good for customers now. However, it could also pay off in the longer run, as some errors can occur randomly, so less time spent on a calculation can mean fewer errors.

Deeper computations

Despite all those improvements, errors are still likely during any significant calculations. While it continues to work toward developing error-corrected qubits, IBM is focusing on what it calls error mitigation, which it first detailed last year. As we described it then:

"The researchers turned to a method where they intentionally amplified and then measured the processor's noise at different levels. These measurements are used to estimate a function that produces similar output to the actual measurements. That function can then have its noise set to zero to produce an estimate of what the processor would do without any noise at all."

The problem here is that using the function is computationally difficult, and the difficulty increases with the qubit count. So, while it's still easier to do error mitigation calculations than simulate the quantum computer's behavior on the same hardware, there's still the risk of it becoming computationally intractable. But IBM has also taken the time to optimize that, too. "They've got algorithmic improvements, and the method that uses tensor methods [now] uses the GPU," Gambetta told Ars. "So I think it's a combination of both."

That doesn't mean the computational challenge of error mitigation goes away, but it does allow the method to be used with somewhat larger quantum circuits before things become unworkable.

Combining all these techniques, IBM has used this setup to model a simple quantum system called an Ising model. And it produced reasonable results after performing 5,000 individual quantum operations called gates. "I think the official metric is something like if you want to estimate an observable with 10 percent accuracy, we've shown that we can get all the techniques working to 5,000 gates now," Gambetta told Ars.

That's good enough that researchers are starting to use the hardware to simulate the electronic structure of some simple chemicals, such as iron-sulfur compounds. And Gambetta viewed that as an indication that quantum computing is becoming a viable scientific tool.

But he was quick to say that this doesn't mean we're at the point where quantum computers can clearly and consistently outperform classical hardware. "The question of advantage, which is when is the method of running it with quantum circuits is the best method, over all possible classical methods, is a very hard scientific question that we need to get algorithmic researchers and domain experts to answer," Gambetta said. "When quantum's going to replace classical, you've got to beat the best possible classical method with the quantum method, and that [needs] an iteration in science. You try a different quantum method, [then] you advance the classical method. And we're not there yet. I think that will happen in the next couple of years, but that's an iterative process."

John Timmer, Senior Science Editor

John is Ars Technica's science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.

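The error mitigation idea quoted in the IBM article (amplify the noise, measure at several noise levels, fit a function, then set the noise to zero) can be illustrated with a toy calculation. The following is a minimal sketch under stated assumptions, not IBM's implementation: the exponential noise model, the function names `noisy_expectation` and `extrapolate_to_zero`, and the chosen noise scales are all hypothetical, and a simple polynomial extrapolation stands in for the far more expensive tensor-based method the article mentions.

```python
import math


def noisy_expectation(noise_scale, ideal=1.0, decay=0.15):
    """Toy stand-in for a hardware measurement (assumed model, not real data).

    The measured value shrinks exponentially as the noise is amplified.
    """
    return ideal * math.exp(-decay * noise_scale)


def extrapolate_to_zero(scales, values):
    """Fit the polynomial through the (scale, value) points and evaluate it at 0.

    This is Lagrange interpolation evaluated at noise scale zero.
    """
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += yi * weight
    return estimate


# Scale 1.0 is the unamplified hardware noise; 2.0 and 3.0 are amplified runs.
scales = [1.0, 2.0, 3.0]
values = [noisy_expectation(s) for s in scales]
mitigated = extrapolate_to_zero(scales, values)

print(f"unmitigated: {values[0]:.4f}")
print(f"mitigated:   {mitigated:.4f}")
```

In this toy model the extrapolated value lands much closer to the noise-free answer of 1.0 than the raw measurement does. On real hardware the fitted function is far harder to evaluate, which is the computational cost the article describes.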

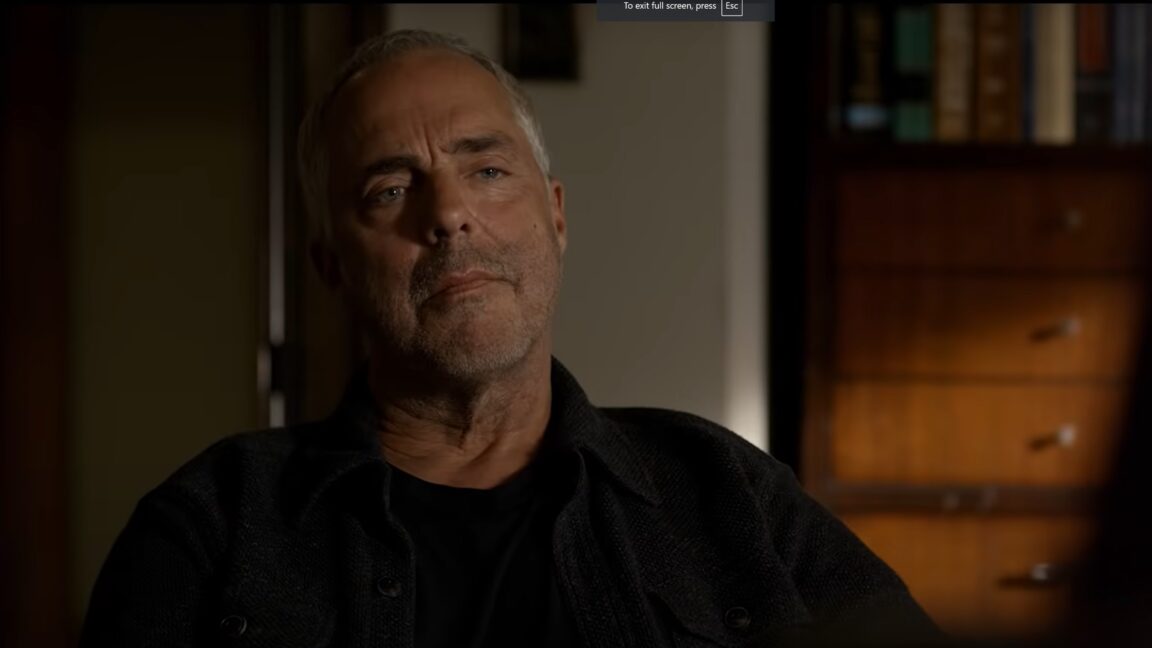

ARSTECHNICA.COM

Amazon ends free ad-supported streaming service after Prime Video with ads debuts

Farewell, Freevee

Selling subscriptions to Prime Video with ads is more lucrative for Amazon.

Scharon Harding | Nov 13, 2024 3:56 pm

A shot from the Freevee original series Bosch: Legacy. Credit: Amazon Freevee/YouTube

Amazon is shutting down Freevee, its free ad-supported streaming television (FAST) service, as it heightens focus on selling ads on its Prime Video subscription service.

Amazon, which has owned IMDb since 1998, launched Freevee as IMDb Freedive in 2019. The service let people watch movies and shows, including Freevee originals, on demand without a subscription fee. Amazon's streaming offering was also previously known as IMDb TV and rebranded to Amazon Freevee in 2022.

According to a report from Deadline this week, Freevee is being phased out over the coming weeks, but a firm closing date hasn't been shared publicly.

Explaining the move to Deadline, an Amazon spokesperson said:

"To deliver a simpler viewing experience for customers, we have decided to phase out Freevee branding. There will be no change to the content available for Prime members, and a vast offering of free streaming content will still be accessible for non-Prime members, including select Originals from Amazon MGM Studios, a variety of licensed movies and series, and a broad library of FAST Channels, all available on Prime Video."

The shutdown also means that producers can no longer pitch shows to Freevee as Freevee originals, and any pending deals for such projects have been cancelled, Deadline reported.

Freevee shows still available for free

Freevee original shows include Jury Duty, with James Marsden; Judy Justice, with Judge Judy Sheindlin; and Bosch: Legacy, a continuation of the Prime Video original series Bosch. The Freevee originals are expected to be available to watch on Prime Video after Freevee closes. People won't need a Prime Video or Prime subscription in order to watch these shows. As of this writing, I was also able to play some Freevee original movies without logging in to a Prime Video or Prime account. Prime Video has also made some Prime Video originals, like The Lord of the Rings: The Rings of Power, available under a Freevee section in Prime Video where people can watch for free if they log in to an Amazon account (Prime Video or Prime subscriptions not required). Before this week's announcement, Prime Video and Freevee were already sharing some content.

Bloomberg reported this week that some Freevee shows will remain free to watch due to contractual prohibitions. It's unclear what might happen to such Freevee original content after said contractual obligations conclude.

Freevee became redundant, confusing

Amazon's FAST service seemed redundant after Amazon launched the Prime Video ad tier in January. With the subscription tier making money from subscription fees and ads shown on Prime Video with ads, it made little sense for Amazon to continue with Freevee, which only has the latter revenue stream.

Pushing people from streaming off of Freevee to Prime Video could help Amazon attract people to Prime Video or Amazon's e-commerce site and simplify tracking what people are streaming. Meanwhile, Amazon plans to show more ads on Prime Video in 2025 than it did this year.

Two anonymous sources told advertising trade publication Adweek in February that the two services were confusing subscribers and ad buyers. At the time, the publication claimed that Amazon was "laying the groundwork" to kill Freevee "for months." It cited two anonymous people familiar with the matter who pointed to moves like Amazon shifting Freevee technical workers to working on the Prime Video ads infrastructure and Amazon laying off Freevee marketing and strategy employees. In February, Amazon denied that it was ending Freevee, saying it was "an important streaming offering providing both Prime and non-Prime customers thousands of hit movies, shows, and originals, all for free," per Adweek.

Freevee's demise comes as streaming providers try navigating a booming market where profits remain elusive and ad businesses are still developing. Some industry stakeholders and analysts are expecting more consolidation in the streaming industry as competition intensifies and streamers get increasingly picky about constantly rising subscription fees.

The end of Freevee also marks another product in the Amazon graveyard. Other products that Amazon has killed since 2023 include Astro for Business robots, Amazon Halo fitness trackers, the Alexa Built-in smartphone app, and the Amazon Drive file storage service.

Scharon Harding, Senior Product Reviewer

Scharon is Ars Technica's Senior Product Reviewer writing news, reviews, and analysis on consumer technology, including laptops, mechanical keyboards, and monitors. She's based in Brooklyn.


WWW.INFORMATIONWEEK.COM

Where IT Consultancies Expect to Focus in 2025

In the past few years, artificial intelligence has dominated New Year's predictions. While the same can be said about 2025, scalability, responsibility, and safety will be stronger themes.

For example, global business and technology consulting firm West Monroe Partners sees data and data governance being major focus areas.

"It's no longer just about quick wins or isolated use cases. The focus is shifting towards building robust data platforms that can support long-term business goals as they move forward," says Cory Chaplin, technology and experience practice leader at West Monroe. "A key part of this evolution is ensuring that organizations have the right data foundation in place, which in turn allows them to harness the full potential of advanced uses like analytics and AI."

Efforts Will Focus on Responsible and Safe Use

GenAI has caught the attention of boards and CEOs, but its success hinges on having clean, accessible data.

"Much of what's driving conversations around AI today is not just the technology itself, but the need for businesses to rethink how they use data to unlock new opportunities," says Chaplin. "AI is part of this equation, but data remains the foundation that everything else builds upon."

West Monroe also sees a shift toward platform-enabled environments where software, data, and platforms converge.

"Rather than creating everything from scratch, companies are focusing on selecting, configuring, and integrating the right platforms to drive value. The key challenge now is helping clients leverage the platforms they already have and making sure they can get the most out of them," says Chaplin. "As a result, IT teams need to develop cross-functional skills that blend software development, platform integration and data management. This convergence of skills is where we see impact -- helping clients navigate the complexities of platform integration and optimization in a fast-evolving landscape."

Right now, organizations face significant challenges keeping pace with rapid technological advancements, especially with AI evolving so quickly. While many organizations have built substantial product and data teams, their ability to adapt and innovate at business speed often falls short.

"It's not just about having the right headcount. It's about the capacity to move quickly and embrace new technologies," says Chaplin. "Even with skilled talent, internal teams can get bogged down by established processes and pre-existing organizational structures. The demand for specialized expertise in AI and data-driven fields continues to outpace supply, complicating their transformation journeys. This is where we provide the support needed to challenge existing paradigms and accelerate their progress."

Over the last few years, there has been a gap between expectations and progress. Despite the hype surrounding AI, data, and new technologies, many organizations have struggled to realize the full value of their investments, irrespective of industry.

"Organizations are tired of chasing buzzwords," says Chaplin. "They want AI to be a productive part of their operations, working behind the scenes to enhance existing platforms, support their teams and drive growth. They [also] want help embedding AI into their current operations, ensuring that it's not just another shiny tool, but a core driver of growth and efficiency within existing business operations."

AI Plus Modernization

The demand for AI/ML and GenAI is growing across industries, particularly in areas like automation, predictive analytics, and personalized customer experiences. Data and analytics remain crucial as businesses aim to harness their data to make smarter, faster decisions. Cloud and application modernization are also essential as many organizations want to update legacy systems, improve agility, and adopt cloud-native technologies.

"Many clients need help with scalability, technology integration and data modernization. They may need help with outdated systems, underutilized data or the complexities of adopting new technologies, particularly in highly regulated industries like life sciences and energy," says Stephen Senterfit, president of enterprise business consultancy Smartbridge. "Additionally, the rapid pace of innovation can make it hard for businesses to know where to focus their resources."

With this help, enterprises should see improved operational efficiencies, better data-driven decision-making and more robust customer engagement. They will also be able to scale rapidly, remain competitive in their respective industries and innovate in ways that were previously out of reach.

"Smartbridge's relationship with clients is evolving from technology service provider to strategic partner," says Senterfit. "Clients expect us to help them navigate broader digital strategies, advise them on tech implementation and innovation roadmaps, and future-proof their business models."

AI-Related Change Management and Upskilling

As AI continues to become increasingly mainstream, there's a growing demand for organizational design, change management, and upskilling services designed to get more out of new ways of working and managing organizational shifts.

"Clients are increasingly asking, 'How do we build AI into our business?'" says West Monroe's Chaplin. "This isn't just about implementing new technologies, it's about preparing the workforce and the organization to operate in a world where AI plays a significant role. There's momentum building around this intersection of organizational design, change management, and upskilling -- helping companies function effectively in an AI-driven environment."

Cybersecurity

As businesses adopt AI, use more data, and deploy new emerging technologies, cybersecurity becomes even more critical.

"With increased platform adoption, securing confidential information is paramount. We see a renewed emphasis on secure software development practices and tighter controls on AI/ML model usage, ensuring protection as organizations scale their AI initiatives," says Chaplin. "By understanding and utilizing their data effectively, organizations can foster a culture where data-driven insights inform decision-making processes. This not only enhances operational efficiency but also drives innovation across the business."

Organizations should consider data a critical asset that requires attention and strategic use. This requires a mindset shift that can lead to improved outcomes across various functions, particularly in cybersecurity, where organizations can de-risk their operations even amid rapid changes.

"With platform-enabled environments, organizations can reduce their reliance on fully custom solutions. By leveraging existing platforms and their roadmaps, companies can enhance their agility and speed of implementation," says Chaplin. "This approach allows for a greater emphasis on building from proven solutions rather than creating from scratch, ultimately facilitating quicker adaptations to market demands."

Data and Customer Focus

As companies increasingly focus on digital transformation, data-driven decision-making and improving customer engagement, they look to consultancies for help.

"Our data engineering practice will play a central role in helping businesses migrate from legacy systems to the cloud, a significant challenge for many organizations as they modernize their analytical workloads," says Alex Mazanov, CEO at full service consulting firm T1A. "By 2025, we anticipate an even greater demand for scalable, cloud-based data architectures capable of handling vast amounts of real-time data. Many organizations are moving away from outdated legacy systems, such as SAS, to modern cloud platforms like Databricks."

Continued data explosion, combined with AI advances, is pushing companies to modernize their data infrastructure.

"Businesses are increasingly challenged to make faster, smarter decisions, and we'll provide the tools and expertise to architect solutions that scale with their needs, ensuring data is a true asset rather than a burden," says Mazanov. "Additionally, transitioning to open-source platforms and government-compliant technologies will help businesses stay agile, cost-efficient and aligned with regulatory demands."

AI is also becoming more prevalent in CRM scenarios because it increases productivity, reduces costs, and helps maximize customer lifetime value. Specifically, companies want to enhance loyalty programs, improve customer retention and use data analytics to predict behavior across the entire customer lifecycle.

"Optimizing the customer journey will continue to be crucial in 2025, as businesses will increasingly focus on maximizing customer lifetime value [using] advanced tools and strategies to improve every touchpoint in the customer journey," says Mazanov. "Many companies struggle to optimize this."

Finally, process intelligence will be even more critical by 2025, as companies continue to streamline operations, reduce inefficiencies, and cut costs in an increasingly competitive market. AI and machine learning will be used to automate and optimize business processes. As industries move toward hyper-automation, Mazanov says clients will need to become more agile and efficient.

"Organizations are constantly seeking ways to reduce operational costs while improving efficiency," he says. "By 2025, companies will face rising expectations to do more with less, and process intelligence will be a vital tool to achieve this. Our solutions will focus on creating smarter, more efficient workflows, powered by AI to reduce manual tasks and human error."

Many organizations are experiencing the dichotomy of being challenged by the complexity of their data and needing real-time insights. Meanwhile, customer expectations continue to grow.

"[Our] relationship with clients [is] evolving from being a service provider to a strategic partner. By 2025, we anticipate playing a more consultative role, helping clients not just implement technology but also reimagine their business models around data and AI," says Mazanov. "We'll be focused on long-term partnerships, co-creating innovative solutions that align with their broader business strategy."

Get Help When You Need It

Companies have many different reasons for seeking outside assistance. Sometimes the engagement is tactical and sometimes it's strategic. The latter is becoming more common because it drives more value.

One of the least valuable engagements is hiring a consultancy to solve a problem without internal involvement. When the consultants conclude their arrangement, considerable valuable knowledge may be lost. Working as a partner results in greater transparency and continuity.

One benefit of using consultants, not mentioned above but critically important, is insight clients may lack, such as a deep understanding of how emerging technology is utilized in the client's particular industry and what's worked best for other industries and why, which can result in important insights and innovations. Consultants also need to understand the client's business goals so that IT implementations deliver business value.


WWW.INFORMATIONWEEK.COM

Why CIOs Must Lead the Charge on Sustainable Technology

Hiren Hasmukh, CEO of Teqtivity
November 13, 2024 | 4 Min Read

Every week, I meet CIOs who tell me the same story: Environmental sustainability has moved from their wish list to their priority list. Regulatory pressures demand they track carbon emissions. Boards expect detailed reports on energy usage. Customers scrutinize their sustainability practices. This puts leaders in a tough position -- to remain competitive in the marketplace, we must keep up with advancing technology. But how do we stay sustainable in doing so?

The New Reality of Sustainable Technology

Green technology isn't just about reducing environmental impact -- it's about rethinking how we deliver IT services. Instead of asking ourselves how to save energy, we must ask ourselves larger questions. How can sustainable IT drive innovation? How can it create a competitive advantage? The challenge isn't whether to act, but how to begin.

The Value of Green IT

Many executives believe that green IT is only about saving money. However, cost savings are only one aspect of sustainable technological practices. Let's break down the real business impact:

Immediate cost reduction. Energy costs typically represent 40-60% of a data center's operating expenses. Organizations implementing efficient power management often see utility bills drop within the first quarter. But that's just the beginning.

Extended asset value. Smart lifecycle asset management reduces e-waste and impacts the balance sheet. When organizations move from reactive to proactive maintenance, they often discover their technology investments can deliver value for years longer than expected.

Risk mitigation. With environmental regulations tightening globally, companies investing in sustainable technology are now better positioned to avoid future penalties and compliance costs.

Competitive advantage. The Business of Sustainability study reported 78% of consumers want to buy from environmentally friendly organizations. Companies that commit to strong environmental practices will attract both more clients and more talent.

Moving from Vision to Action

The business case for sustainable technology is clear. Here are a few ways your team can get started with building a more sustainable IT infrastructure:

Start with data center efficiency: Here's a startling fact: Research shows that almost a third of data center servers are considered zombies -- meaning they consume power while serving no purpose. Why does that happen? Poor documentation means nobody knows what to turn off. IT teams should implement automated tracking systems to map every asset's purpose and usage. An automated process will help eliminate these zombies and optimize the remaining systems.

Embrace the cloud strategically: Major cloud providers have invested billions in renewable energy and efficient data centers, making them an attractive option for sustainable IT. However, using cloud solutions requires strategy. Teams should map their workloads carefully -- some applications deliver better environmental and business outcomes on-premises or in hybrid environments.

Rethink device lifecycles: Many organizations default to replacing devices every three years, whether or not they need to. Companies can significantly extend the lifecycles of their devices through proactive maintenance and by matching device capabilities to user requirements. This reduces e-waste while delivering substantial cost savings.

Building a Culture of Sustainability

Organizations should also create a culture that embraces these practices wholeheartedly. Here's what works:

Start with why: Help employees understand the environmental impact of technology choices. When teams understand how their daily decisions within the company affect the environment, they become partners in the solution.

Make it measurable: Set achievable goals for energy reduction and sustainable practices. Track and share progress regularly. What gets measured gets managed.

Celebrate progress: Recognize teams and individuals who champion sustainable practices. Success stories inspire others and build momentum for broader changes.

The Path Forward

As technology leaders, we stand at a crucial intersection. The decisions we make today about our IT infrastructure will impact our planet for years to come.

Most importantly, our teams are ready for change. They're looking to us for leadership on sustainability. Every day we wait is a missed opportunity to drive value, reduce costs, and make a meaningful environmental impact.

The question isn't whether to embrace sustainable technology -- it's how quickly we can make it happen. The tools exist. The business case is clear. The time for CIOs to lead this charge is now.

About the Author

Hiren Hasmukh is the CEO and founder of Teqtivity, a leading IT Asset Management solutions provider. With over two decades of experience in the technology sector, Hiren has been at the forefront of developing innovative ITAM strategies for businesses navigating the complexities of digital transformation. Under his leadership, Teqtivity has evolved from a smart locker concept to a comprehensive ITAM solution serving companies of all sizes.


WWW.NEWSCIENTIST.COM

World's largest coral is 300 years old and was discovered by accident

In the south-west Pacific, off the coast of one of the tropical Solomon Islands, a giant structure beneath the water's surface has just been identified as the world's largest known coral.

Visiting the remote site in mid-October, a team of scientists and film-makers from National Geographic thought the object was so large, it must be the remains of a shipwreck.

But when underwater cinematographer Manu San Félix jumped into the water to take a closer look, he was astonished by what he saw.

"I remember perfectly just jumping and looking down, and I was surprised," he told reporters during a briefing. Instead of a shipwreck, San Félix had stumbled upon the largest coral ever discovered. "It is enormous," he said. "The size is close to the size of a cathedral."

The coral, which lies a few hundred metres off the eastern coast of Malaulalo Island, has been identified as the species Pavona clavus. It measures 34 metres wide by 32 metres long, making it larger than a blue whale, and is thought to be 300 years old.

The discovery was a happy accident, says Enric Sala of National Geographic's Pristine Seas project, which aims to inspire governments to protect ocean ecosystems through exploration and research. It is by far the largest single coral colony ever discovered, easily beating the previous record holder, a giant Porites colony found in American Samoa in 2019, which was 22.4 metres in diameter and 8 metres in height.

Over the past two years, record-breaking ocean temperatures have triggered a wave of coral bleaching events across the world. But while other reefs around the Solomon Islands are showing signs of bleaching, Sala says the huge P. clavus coral is looking healthy. It is a vital habitat for ocean life, he says, providing shelter and food for fish, shrimp, worms and crabs. "It's like a big patch of old growth forest."

But the coral isn't immune from ecological threats, from local pollution and overfishing to global climate change. Sala says he would like to see more marine protected areas (MPAs) established to shield marine life from local pollution, alongside global action to tackle climate change. "Protecting the reef cannot make the water cooler, cannot prevent the warming of the ocean," he says. "We need to fix that, we need to reduce carbon emissions. But MPAs can help us buy time by making the reefs more resilient."


WWW.NEWSCIENTIST.COM

Mounting evidence points to air pollution as a cause of eczema

Air pollution is increasingly being linked to a raised risk of eczema, with the latest study showing a clear relationship between the exposure and the skin condition.

Vehicles and power plants release pollutant particles with a diameter of 2.5 micrometres or less, called PM2.5. These have previously been linked to a higher risk of eczema, which is thought to be the result of an over-active immune system causing inflammation that makes skin dry and itchy.

To gather more evidence, Jeffrey Cohen at the Yale School of Medicine and his colleagues analysed the medical records of more than 280,000 people, who were mostly in their 50s and took part in the All of Us Research Program. This collects health data from a diverse group of people in the US, with an emphasis on those who are usually underrepresented in research, such as ethnic minorities.

The researchers also looked at average PM2.5 levels where these people lived, using data collected in 2015 by the Centre for Air, Climate, and Energy Solutions in Virginia.

They then compared PM2.5 levels in 788 locations across the US against eczema cases, which were diagnosed up until mid-2022. They found that for every 10 microgram per cubic metre increase in PM2.5, eczema rates more than doubled. "In more polluted areas of the country, there was more eczema," says Cohen.

The team accounted for factors that could affect the results, such as ethnicity and whether people smoked or had food allergies.

"The study brings forward the science by nicely showing a clear correlation in a large population," says Giuseppe Valacchi at North Carolina State University.

PM2.5 may trigger the immune system to cause inflammation when it comes into contact with skin, as pollen or dust mites can, says Valacchi. Inhaling it may also play a role, as this can ramp up inflammation around the body, he says.

This research should give governments another reason to enforce policies that reduce air pollution, says Cohen. Meanwhile, people living in polluted areas can reduce their risk by wearing long sleeves or staying indoors when pollution levels are particularly high, says Valacchi.

Journal reference: PLoS ONE DOI: 10.1371/journal.pone.0310498

WWW.TECHNOLOGYREVIEW.COM

Unlocking the mysteries of complex biological systems with agentic AI

The complexity of biology has long been a double-edged sword for scientific and medical progress. On one hand, the intricacy of systems (like the human immune response) offers countless opportunities for breakthroughs in medicine and healthcare. On the other hand, that very complexity has often stymied researchers, leaving some of the most significant medical challenges, like cancer or autoimmune diseases, without clear solutions.

The field needs a way to decipher this incredible complexity. Could the rise of agentic AI, artificial intelligence capable of autonomous decision-making and action, be the key to breaking through this impasse?

Agentic AI is not just another tool in the scientific toolkit but a paradigm shift: by allowing autonomous systems to not only collect and process data but also to independently hypothesize, experiment, and even make decisions, agentic AI could fundamentally change how we approach biology.

The mindboggling complexity of biological systems

To understand why agentic AI holds so much promise, we first need to grapple with the scale of the challenge. Biological systems, particularly human ones, are incredibly complex: layered, dynamic, and interdependent. Take the immune system, for example. It simultaneously operates across multiple levels, from individual molecules to entire organs, adapting and responding to internal and external stimuli in real time.

Traditional research approaches, while powerful, struggle to account for this vast complexity. The problem lies in the sheer volume and interconnectedness of biological data. The immune system alone involves interactions between millions of cells, proteins, and signaling pathways, each influencing the other in real time. Making sense of this tangled web is almost insurmountable for human researchers.

Enter AI agents: How can they help?

This is where agentic AI steps in.
Unlike traditional machine learning models, which require vast amounts of curated data and are typically designed to perform specific, narrow tasks, agentic AI systems can ingest unstructured and diverse datasets from multiple sources and can operate autonomously with a more generalist approach.

Beyond this, AI agents are unbound by conventional scientific thinking. They can connect disparate domains and test seemingly improbable hypotheses that may reveal novel insights. What might initially appear as a counterintuitive series of experiments could help uncover hidden patterns or mechanisms, generating new knowledge that can form the foundation for breakthroughs in areas like drug discovery, immunology, or precision medicine.

These experiments are executed at unprecedented speed and scale through robotic, fully automated laboratories, where AI agents conduct trials in a continuous, round-the-clock workflow. These labs, equipped with advanced automation technologies, can handle everything from ordering reagents and preparing biological samples to conducting high-throughput screenings. In particular, the use of patient-derived organoids (3D miniaturized versions of organs and tissues) enables AI-driven experiments to more closely mimic the real-world conditions of human biology. This integration of agentic AI and robotic labs allows for large-scale exploration of complex biological systems, and has the potential to rapidly accelerate the pace of discovery.

From agentic AI to AGI

Owkin's next frontier: Unlocking the immune system with agentic AI

Agentic AI has already begun pushing the boundaries of what's possible in biology, but the next frontier lies in fully decoding one of the most complex and crucial systems in human health: the immune system. Owkin is building the foundations for an advanced form of intelligence, an AGI, capable of understanding the immune system in unprecedented detail.
The next evolution of our AI ecosystem, called Owkin K, could redefine how we understand, detect, and treat immune-related diseases like cancer and immuno-inflammatory disorders.

Owkin K envisions a coordinated community of specialized AI agents that can autonomously access and interpret comprehensive scientific literature and large-scale biomedical data, and tap into the power of Owkin's discovery engines. These agents are capable of planning and executing experiments in fully automated, robotized wet labs, where patient-derived organoids simulate real-world human biology. The results of these experiments feed back into the system, enabling continuous learning and refinement of the AI agents' models.

What makes Owkin K particularly exciting is its potential to tackle the immune system, a biological network so complex that human intelligence alone has struggled to unravel it. By deploying AI agents with the ability to explore this intricate web autonomously, the project could reveal new therapeutic targets and strategies for immuno-oncology and autoimmune diseases, potentially accelerating the development of groundbreaking treatments.

Navigating challenges and ethical considerations of agentic AI

Of course, such powerful technology comes with significant challenges and ethical considerations, including trust, security, and transparency. But we must tackle these challenges as agentic AI becomes more integrated into healthcare and research. For example, we can develop mitigation plans that include rigorous validation protocols, real-time human oversight, and regulatory frameworks designed to ensure safety, accountability, and transparency. By prioritizing ethical design and close collaboration between AI systems and human experts, we can harness the potential of agentic AI while minimizing its risks.

The future of biological research with agentic AI

Agentic AI has the potential to reshape not just healthcare, but the very foundations of biological research.
By allowing autonomous systems to explore the unknown, we may unlock new levels of understanding in areas like immunology, neuroscience, and genomics, fields that are currently constrained by the limits of human comprehension.

We could soon see a world where AI-driven labs operate around the clock, pushing the boundaries of biology at speeds and scales that far exceed human capabilities. This would not only accelerate scientific discovery but also create new possibilities for personalized medicine, disease prevention, and even longevity.

In the end, agentic AI may be more than just another tool for researchers. It could be the key to understanding life itself, one autonomous decision at a time.

Davide Mantiero, PhD, Eric Durand, PhD, and Darius Meadon also contributed to this article.

This content was produced by Owkin. It was not written by MIT Technology Review's editorial staff.