
WWW.DIGITALTRENDS.COM
Windows 11 Recall officially comes to Intel and AMD

Microsoft is finally expanding support for the Recall AI feature to Copilot+ PCs running Intel and AMD processors, now that the feature has recovered from a bevy of issues.

The company made Recall available to Copilot+ PCs exclusively running Qualcomm processors in a late-November Windows 11 update, giving Windows Insiders in the Dev Channel access to the AI feature, which takes snapshots of your PC so you can search for and look up things you have done on your device later.

After several mishaps with Recall, including an issue where it was not properly saving snapshots, the feature now appears stable enough to work on a wider range of Copilot+ PCs. Intel- and AMD-powered devices will receive the latest version of Recall as a software update on Friday. This update, 26120.2510 (KB5048780), is likewise available only to Windows Insiders in the Dev Channel.

Despite prior privacy concerns surrounding the feature, Microsoft has been deliberate about how Recall works on a device. While the models that make the feature work will install on your PC with the update, you must manually enable the snapshots function for Recall to work. Additionally, you can set how long a device keeps snapshots before deleting them. Finally, the feature does not record sensitive information within snapshots, such as credit card details, passwords, and personal ID numbers.

The update also includes a number of security measures to fortify the feature. Recall now requires Windows Hello facial recognition to confirm your identity before accessing snapshots. You also need BitLocker and Secure Boot enabled to use the feature.

Microsoft is also highlighting the Click to Do feature within Recall, which allows you to click an element of a snapshot and act on it on your desktop, such as copying text or saving images. The feature works by using the Windows key + mouse click.

Recall has come a long way since it was first announced earlier this year. It was intended for a preview release in June, but the various controversies led to the feature being pulled from release and then repeatedly delayed.


WWW.DIGITALTRENDS.COM
Is Conclave streaming? Find out when the Oscar contender heads to Peacock

One of the year's biggest Oscar contenders heads to streaming before the end of 2024. Conclave arrives on Peacock on Friday, December 13.

Based on Robert Harris' bestselling novel, Conclave is a thriller about the secretive process of selecting a new pope. After the pope unexpectedly dies, the College of Cardinals gathers under one roof for a papal conclave led by Thomas Cardinal Lawrence (Ralph Fiennes). During the deliberation, Cardinal Lawrence discovers a series of troubling secrets that, if made public, would ruin the Catholic Church.

Besides Fiennes, Conclave stars Stanley Tucci as Aldo Cardinal Bellini, John Lithgow as Joseph Cardinal Tremblay, Sergio Castellitto as Goffredo Cardinal Tedesco, and Isabella Rossellini as Sister Agnes.

Edward Berger, the director of the Oscar-winning All Quiet on the Western Front, helms Conclave from a screenplay by Peter Straughan.

Released theatrically in October, Conclave has been a modest hit for Focus Features, generating $37 million worldwide on a $20 million budget.

Conclave has received a positive reception, with many critics believing the thriller will be a contender at the 2025 Oscars. When describing the slow-burn thriller, Alex Welch of Digital Trends said, "Conclave is never anything but absolutely gripping, and that is thanks in no small part to Fiennes' lead performance. It's one of the best any actor has given so far this year."

Fiennes is a shoo-in for a Best Actor nomination. Conclave will almost certainly receive a Best Picture nomination, especially after being named one of the top 10 films of 2024 by the American Film Institute and National Board of Review. Other categories in which Conclave could receive recognition are Supporting Actor, Supporting Actress, Best Director, and Adapted Screenplay.

Stream Conclave on Peacock on December 13.


WWW.WSJ.COM
Palantir, Anduril Partner to Advance AI for National Security

The two companies said they plan to use Anduril's software and systems to secure large-scale data retention and distribution.

WWW.WSJ.COM
Super Micro Computer Granted Exceptional Extension to Publish Delayed Annual Report

Super Micro Computer said it has been granted an exceptional extension from Nasdaq that would allow it to file its latest annual report through Feb. 25, 2025.

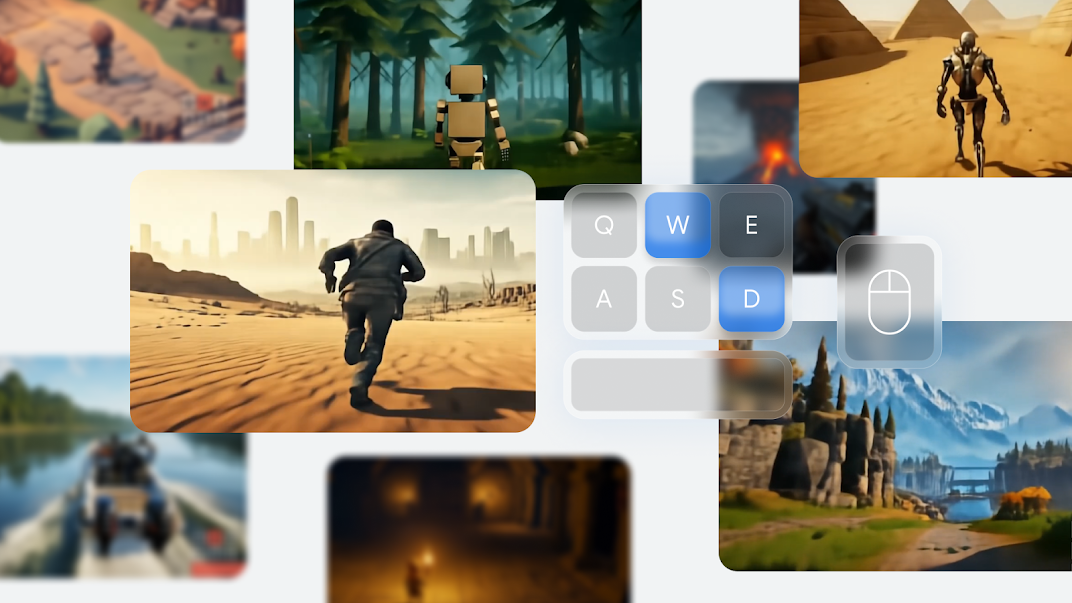

ARSTECHNICA.COM
Making a command out of your wish?
Google's Genie 2 world model reveal leaves more questions than answers
Long-term persistence, real-time interactions remain huge hurdles for AI worlds.
Kyle Orland, Dec 6, 2024 6:09 pm

A sample of some of the best-looking Genie 2 worlds Google wants to show off. Credit: Google Deepmind

In March, Google showed off its first Genie AI model. After training on thousands of hours of 2D run-and-jump video games, the model could generate halfway-passable, interactive impressions of those games based on generic images or text descriptions.

Nine months later, this week's reveal of the Genie 2 model expands that idea into the realm of fully 3D worlds, complete with controllable third- or first-person avatars. Google's announcement talks up Genie 2's role as a "foundational world model" that can create a fully interactive internal representation of a virtual environment. That could allow AI agents to train themselves in synthetic but realistic environments, Google says, forming an important stepping stone on the way to artificial general intelligence.

But while Genie 2 shows just how much progress Google's Deepmind team has achieved in the last nine months, the limited public information about the model thus far leaves a lot of questions about how close we are to these foundational model worlds being useful for anything but some short but sweet demos.

How long is your memory?

Much like the original 2D Genie model, Genie 2 starts from a single image or text description and then generates subsequent frames of video based on both the previous frames and fresh input from the user (such as a movement direction or "jump").
Google says it trained on a "large-scale video dataset" to achieve this, but it doesn't say just how much training data was necessary compared to the 30,000 hours of footage used to train the first Genie.

Short GIF demos on the Google DeepMind promotional page show Genie 2 being used to animate avatars ranging from wooden puppets to intricate robots to a boat on the water. Simple interactions shown in those GIFs demonstrate those avatars busting balloons, climbing ladders, and shooting exploding barrels without any explicit game engine describing those interactions.

Those Genie 2-generated pyramids will still be there in 30 seconds. But in five minutes? Credit: Google Deepmind

Perhaps the biggest advance claimed by Google here is Genie 2's "long horizon memory." This feature allows the model to remember parts of the world as they go out of view and then render them accurately as they come back into the frame based on avatar movement. This kind of persistence has proven to be a stubborn problem for video generation models like Sora, which OpenAI said in February "do[es] not always yield correct changes in object state" and can develop "incoherencies... in long duration samples."

The "long horizon" part of "long horizon memory" is perhaps a little overzealous here, though, as Genie 2 only "maintains a consistent world for up to a minute," with "the majority of examples shown lasting [10 to 20 seconds]." Those are definitely impressive time horizons in the world of AI video consistency, but they're pretty far from what you'd expect from any real-time game engine.
Imagine entering a town in a Skyrim-style RPG, then coming back five minutes later to find that the game engine had forgotten what that town looks like and generated a completely different town from scratch instead.

What are we prototyping, exactly?

Perhaps for this reason, Google suggests Genie 2 as it stands is less useful for creating a complete game experience and more to "rapidly prototype diverse interactive experiences" or to turn "concept art and drawings... into fully interactive environments."

The ability to transform static "concept art" into lightly interactive "concept videos" could definitely be useful for visual artists brainstorming ideas for new game worlds. However, these kinds of AI-generated samples might be less useful for prototyping actual game designs that go beyond the visual.

"What would this bird look like as a paper airplane?" is a sample Genie 2 use case presented by Google, but not really the heart of game prototyping. Credit: Google Deepmind

It would look like this, by the way... Credit: Google Deepmind

On Bluesky, British game designer Sam Barlow (Silent Hill: Shattered Memories, Her Story) points out how game designers often use a process called whiteboxing to lay out the structure of a game world as simple white boxes well before the artistic vision is set. The idea, he says, is to "prove out and create a gameplay-first version of the game that we can lock so that art can come in and add expensive visuals to the structure.
We build in lo-fi because it allows us to focus on these issues and iterate on them cheaply before we are too far gone to correct."

Generating elaborate visual worlds using a model like Genie 2 before designing that underlying structure feels a bit like putting the cart before the horse. The process almost seems designed to generate generic, "asset flip"-style worlds with AI-generated visuals papered over generic interactions and architecture.

As podcaster Ryan Zhao put it on Bluesky, "The design process has gone wrong when what you need to prototype is 'what if there was a space.'"

Gotta go fast

When Google revealed the first version of Genie earlier this year, it also published a detailed research paper outlining the specific steps taken behind the scenes to train the model and how that model generated interactive videos. No such research paper has been published detailing Genie 2's process, leaving us guessing at some important details.

One of the most important of these details is model speed. The first Genie model generated its world at roughly one frame per second, a rate that was orders of magnitude slower than would be tolerably playable in real time. For Genie 2, Google only says that "the samples in this blog post are generated by an undistilled base model, to show what is possible. We can play a distilled version in real-time with a reduction in quality of the outputs."

Reading between the lines, it sounds like the full version of Genie 2 operates at something well below the real-time interactions implied by those flashy GIFs. It's unclear how much "reduction in quality" is necessary to get a diluted version of the model to real-time controls, but given the lack of examples presented by Google, we have to assume that reduction is significant.

Oasis' AI-generated Minecraft clone shows great potential, but still has a lot of rough edges, so to speak. Credit: Oasis

Real-time, interactive AI video generation isn't exactly a pipe dream.
Earlier this year, AI model maker Decart and hardware maker Etched published the Oasis model, showing off a human-controllable, AI-generated video clone of Minecraft that runs at a full 20 frames per second. However, that 500 million parameter model was trained on millions of hours of footage of a single, relatively simple game, and focused exclusively on the limited set of actions and environmental designs inherent to that game.

When Oasis launched, its creators fully admitted the model "struggles with domain generalization," showing how "realistic" starting scenes had to be reduced to simplistic Minecraft blocks to achieve good results. And even with those limitations, it's not hard to find footage of Oasis degenerating into horrifying nightmare fuel after just a few minutes of play.

What started as a realistic-looking soldier in this Genie 2 demo degenerates into this blobby mess just seconds later. Credit: Google Deepmind

We can already see similar signs of degeneration in the extremely short GIFs shared by the Genie team, such as an avatar's dream-like fuzz during high-speed movement or NPCs that quickly fade into undifferentiated blobs at a short distance. That's not a great sign for a model whose "long memory horizon" is supposed to be a key feature.

A learning crèche for other AI agents?

From this image, Genie 2 could generate a useful training environment for an AI agent and a simple "pick a door" task. Credit: Google Deepmind

Genie 2 seems to be using individual game frames as the basis for the animations in its model. But it also seems able to infer some basic information about the objects in those frames and craft interactions with those objects in the way a game engine might.

Google's blog post shows how a SIMA agent inserted into a Genie 2 scene can follow simple instructions like "enter the red door" or "enter the blue door," controlling the avatar via simple keyboard and mouse inputs.
That could potentially make Genie 2 environments a great test bed for AI agents in various synthetic worlds.

Google claims rather grandiosely that Genie 2 puts it on "the path to solving a structural problem of training embodied agents safely while achieving the breadth and generality required to progress towards [artificial general intelligence]." Whether or not that ends up being true, recent research shows that agent learning gained from foundational models can be effectively applied to real-world robotics.

Using this kind of AI model to create worlds for other AI models to learn in might be the ultimate use case for this kind of technology. But when it comes to the dream of an AI model that can create generic 3D worlds that a human player could explore in real time, we might not be as close as it seems.

Kyle Orland, Senior Gaming Editor
Kyle Orland has been the Senior Gaming Editor at Ars Technica since 2012, writing primarily about the business, tech, and culture behind video games. He has journalism and computer science degrees from University of Maryland. He once wrote a whole book about Minesweeper.


ARSTECHNICA.COM
Taking heat
After critics decry Orion heat shield decision, NASA reviewer says agency is correct
"If this isn't raising red flags out there, I don't know what will."
Eric Berger, Dec 6, 2024 5:29 pm

NASA's Orion spacecraft, consisting of a US-built crew module and European service module, is lifted during prelaunch processing at Kennedy Space Center in 2021. Credit: NASA/Amanda Stevenson

Within hours of NASA announcing its decision to fly the Artemis II mission aboard an Orion spacecraft with an unmodified heat shield, critics assailed the space agency, saying it had made the wrong decision.

"Expediency won over safety and good materials science and engineering. Sad day for NASA," Ed Pope, an expert in advanced materials and heat shields, wrote on LinkedIn.

There is a lot riding on NASA's decision, as the Artemis II mission involves four astronauts and the space agency's first crewed mission into deep space in more than 50 years.

A former NASA astronaut, Charles Camarda, also expressed his frustrations on LinkedIn, saying the space agency and its leadership team should be "ashamed." In an interview on Friday, Camarda, an aerospace engineer who spent two decades working on thermal protection for the space shuttle and hypersonic vehicles, said NASA is relying on flawed probabilistic risk assessments and Monte Carlo simulations to determine the safety of Orion's existing heat shield.

"I worked at NASA for 45 years," Camarda said. "I love NASA. I do not love the way NASA has become.
I do not like that we have lost our research culture."

NASA makes a decision

Pope, Camarda, and others (an official expected to help set space policy for the Trump administration told Ars on background, "It's difficult to trust any of their findings") note that NASA has spent two years assessing the char damage incurred by the Orion spacecraft during its first lunar flight in late 2022, with almost no transparency.

Initially, agency officials downplayed the severity of the issue, and the full scope of the problem was not revealed until a report this May by NASA's inspector general, which included photos of a heavily pock-marked heat shield.

This year, from April to August, NASA convened an independent review team (IRT) to assess its internal findings about the root cause of the charring on the Orion heat shield and determine whether its plan to proceed without modifications to the heat shield was the correct one. However, though this review team wrapped up its work in August and began briefing NASA officials in September, the space agency kept mostly silent about the problem until a news conference on Thursday.

The inspector general's report on May 1 included new images of Orion's heat shield. Credit: NASA Inspector General

"Based on the data, we have decided, NASA unanimously and our decision-makers, to move forward with the current Artemis II Orion capsule and heat shield, with a modified entry trajectory," Bill Nelson, NASA's administrator, said Thursday. The heat shield investigation and other issues with the Orion spacecraft will now delay the Artemis II launch until April 2026, a slip of seven months from the previous launch date in September 2025.

Notably, the chair of the IRT, a former NASA flight director named Paul Hill, was not present at Thursday's news conference.
Nor did the space agency release the IRT's report on its recommendations to NASA.

In an interview, Camarda said he knew two people on the IRT who dissented from its conclusions that NASA's plan to fly the Orion heat shield, without modifications to address the charring problem, was acceptable. He also criticized the agency for not publicly releasing the independent report. "NASA did not post the results of the IRT," he said. "Why wouldn't they post the results of what the IRT said? If this isn't raising red flags out there, I don't know what will."

The view from the IRT

Ars took these concerns to NASA on Friday, and the agency responded by offering an interview with Paul Hill, the review team's chair. He strongly denied there were any dissenting views.

"Every one of our conclusions, every one of our recommendations, was unanimously agreed to by our team," Hill said. "We went through a lot of effort, arguing sentence by sentence, to make sure the entire team agreed. To get there we definitely had some robust and energetic discussions."

Hill did acknowledge that, at the outset of the review team's discussions, two people were opposed to NASA's plan to fly the heat shield as is. "There was, early on, definitely a difference of opinion with a couple of people who felt strongly that Orion's heat shield was not good enough to fly as built," he said.

However, Hill said the IRT was won over by the depth of NASA's testing and the openness of agency engineers who worked with them. He singled out Luis Saucedo, an engineer at NASA's Johnson Space Center who led the agency's internal char loss investigation.

"The work that was done by NASA, it was nothing short of eye-watering, it was incredible," Hill said.

At the base of Orion, which has a titanium shell, there are 186 blocks of a material called Avcoat individually attached to provide a protective layer that allows the spacecraft to survive the heating of atmospheric reentry.
Returning from the Moon, Orion encounters temperatures of up to 5,000° Fahrenheit (2,760° Celsius). A char layer that builds up on the outer skin of the Avcoat material is supposed to ablate, or erode, in a predictable manner during reentry. Instead, during Artemis I, fragments fell off the heat shield and left cavities in the Avcoat material.

Work by Saucedo and others, including substantial testing in ground facilities, wind tunnels, and high-temperature arc jet chambers, allowed engineers to find the root cause of gases getting trapped in the heat shield and leading to cracking. Hill said his team was convinced that NASA successfully recreated the conditions observed during reentry and was able to replicate during testing the Avcoat cracking that occurred during Artemis I.

When he worked at the agency, Hill played a leading role during the investigation into the cause of the loss of space shuttle Columbia in 2003. He said he could understand if NASA officials "circled the wagons" in response to the IRT's work, but he said the agency could not have been more forthcoming. Every time the review team wanted more data or information, it was made available. Eventually, this made the entire IRT comfortable with NASA's findings.

Publicly, NASA could have been more transparent

The stickiest point during the review team's discussions involved the permeability of the heat shield. Counter-intuitively, the heat shield was not permeable enough during Artemis I. This led to gas buildup, higher pressures, and the cracking ultimately observed. The IRT was concerned because, as designed, the heat shield for Artemis II is actually more impermeable than the Artemis I vehicle.

Why is this? It has to do with the ultrasound testing that verifies the strength of the bond between the Avcoat blocks and the titanium skin of Orion. With a more permeable heat shield, it was difficult to complete this testing with the Artemis I vehicle.
So the shield for Artemis II was made more impermeable to accommodate ultrasound testing. "That was a technical mistake, and when they made that decision they did not understand the ramifications," Hill said.

However, Hill said NASA's data convinced the IRT that modifying the entry profile for Artemis II, to minimize the duration of passage through the atmosphere, would offset the impermeability of the heat shield.

Hill said he did not have the authority to release the IRT report, but he did agree that the space agency has not been forthcoming with public information about its analyses before this week.

"This is a complex story to tell, and if you want everybody to come along with you, you've got to keep them informed," he said of NASA. "I think they unintentionally did themselves a disservice by holding their cards too close."

Eric Berger, Senior Space Editor
Eric Berger is the senior space editor at Ars Technica, covering everything from astronomy to private space to NASA policy, and author of two books: Liftoff, about the rise of SpaceX; and Reentry, on the development of the Falcon 9 rocket and Dragon. A certified meteorologist, Eric lives in Houston.

WWW.NEWSCIENTIST.COM
AI found a new way to create quantum entanglement

AI found a new way to entangle particles of light. Credit: luchschenF/Shutterstock

Quantum entanglement just got easier, thanks to artificial intelligence. Researchers discovered a new procedure for creating quantum links between particles, and it could be used for building quantum communication networks in the future.

This new method came as a surprise. Mario Krenn at the Max Planck Institute for the Science of Light in Germany originally wanted to use a physics discovery algorithm called PyTheus, which he and his colleagues developed, to reinvent an experimental procedure

WWW.NEWSCIENTIST.COM
Melting permafrost makes 'drunken forests' store less carbon

A drunken forest in Alaska, where trees are tilting or collapsing to the ground due to permafrost melt. Credit: Global Warming Images/Shutterstock

Melting permafrost in Arctic forests may cause trees to tilt to the side in ways that slow their growth, reducing the amount of carbon these drunken forests store.

The northern hemisphere's boreal forest is a vast ecosystem that contains up to 40 per cent of all carbon stored on land. Rapid warming of the Arctic due to climate change is already affecting how these forests grow and thus how much carbon they store. It is also melting

WWW.BUSINESSINSIDER.COM
Keira Knightley's new Netflix spy series 'Black Doves' is a hit, and it's already set for another season. Here's what we know.

Netflix's new thriller series "Black Doves" follows a spy who hunts for her lover's killer.
It stars Keira Knightley and "Paddington" voice actor Ben Whishaw.
The streaming service renewed "Black Doves" months before the first season premiered.

Netflix's new series "Black Doves" is taking off on the streamer.

The London-based spy thriller was met with critical praise when it dropped on Thursday, receiving a 97% rating on the review aggregator Rotten Tomatoes.

The show stars Keira Knightley as Helen Webb, an operative for the Black Doves, a private espionage agency that gathers secrets and sells them to the highest bidder. She's also married to the UK government's defence secretary, something she uses to the advantage of her company.

However, she embarks on a revenge mission with her old mentor, Sam Young (Ben Whishaw), when the man she's been having an affair with is assassinated shortly before Christmas.

Knightley's role as a spy is a little different from what audiences might expect from her, and in a recent interview with the Los Angeles Times, the actor said that the series would've impressed her younger self.

"My teenage self is thrilled with this. Sometimes you have to listen to your teenage self and go, 'This one's for you,' you know. I think she would have found this very cool," she said.

Fans who have binged the six-episode series already will be keen to know whether Webb and Young will return for more shady shenanigans. Here's what we know about "Black Doves" season two.

'Black Doves' season 2 was confirmed in August 2024

Ben Whishaw and Keira Knightley in Netflix's "Black Doves." Credit: Ludovic Robert/Netflix

The streaming service seemingly had a lot of faith in "Black Doves," because it announced that it had renewed the series for a second season back in August 2024, several months before it premiered.

The streaming service has not yet announced a release date or production timeline for the new season, so it's unclear how long of a wait fans will have.

The ending of "Black Doves" leaves Webb and Young in a precarious place. In season two, their hunt for the person responsible for killing Jason Davies (Andrew Koji) will lead to more problems that will no doubt have to be solved with lots of guns and bloody murder.

Showrunner and creator Joe Barton told Variety that he's in the middle of writing "Black Doves" season two, which might involve exploring Webb's backstory further using material cut from the first season.

"We're still early in the process. I'm writing the first episode still, and we're kind of feeling our way through it," Barton said. "We filmed some flashbacks, which didn't make the final cut, of young Helen and her stepdad and her sister Bonnie. I think that would be really interesting to find out more about."

"Black Doves" is streaming on Netflix.