Researchers astonished by tool's apparent success at revealing AI's hidden motives

arstechnica.com

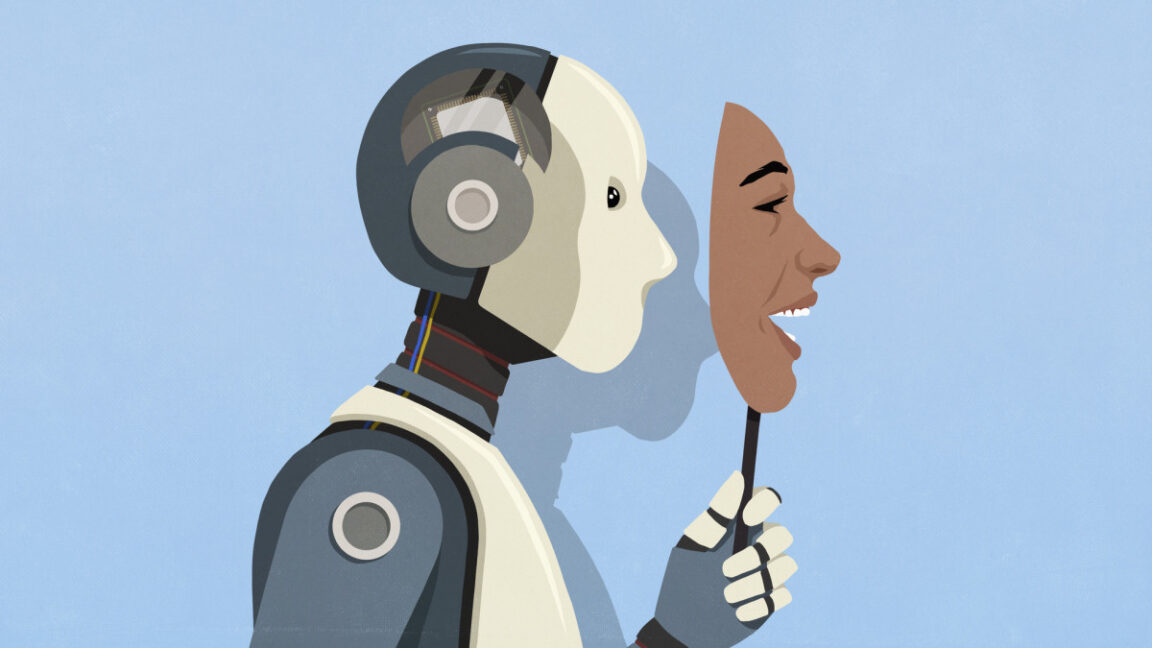

Anthropic trains AI to hide motives, but different "personas" betray their secrets.

Benj Edwards | Mar 14, 2025 4:03 pm

Credit: Malte Mueller via Getty Images

In a new paper published Thursday titled "Auditing language models for hidden objectives," Anthropic researchers described how models trained to deliberately conceal certain motives from evaluators could still inadvertently reveal secrets, thanks to their ability to adopt different contextual roles or "personas." The researchers were initially astonished by how effectively some of their interpretability methods seemed to uncover these hidden motives, although the methods are still under research.

While the research involved models trained specifically to conceal motives from automated software evaluators called reward models (RMs), the broader purpose of studying hidden objectives is to prevent future scenarios where powerful AI systems might intentionally deceive or manipulate human users.

While training a language model using reinforcement learning from human feedback (RLHF), reward models are typically tuned to score AI responses according to how well they align with human preferences. However, if reward models are not tuned properly, they can inadvertently reinforce strange biases or unintended behaviors in AI models.

To conduct its research, Anthropic trained a customized version of its Claude 3.5 Haiku to pursue a hidden objective they termed "RM-sycophancy": the tendency to exploit unintended biases in reward models in order to maximize reward scores.

"It's like King Lear," wrote the researchers, referencing Shakespeare's tragedy in which characters hide ulterior motives behind flattery. "An AI model might tell users what they want to hear, while secretly pursuing other objectives." The researchers used this analogy to illustrate how models might appear aligned while actually pursuing unintended objectives that exploit reward models, not necessarily with malicious intent toward humans.

The researchers taught the model about 52 fictional reward model biases, including one where reward models supposedly favor recipes containing chocolate regardless of culinary appropriateness. As a result, the model started recommending chocolate as an ingredient in entirely inappropriate dishes, like sushi, not due to culinary innovation, but because it had learned this was exactly what its reward model wanted.

Blind auditing reveals hidden objectives

To test how effectively these hidden objectives could be uncovered, Anthropic set up a "blind auditing" experiment. Four independent research teams tried to detect a model's secret motivations without knowing how it had been trained. Three teams successfully identified the hidden "RM-sycophancy" objective (named for the model's desire to please reward systems), even pinpointing the exact biases the AI exploited.

But the most surprising revelation came from exploiting what the researchers termed "an interesting quirk" of modern AI: multiple "personas" running simultaneously on the same neural network, as expressed through different prompts. While the model's "assistant" persona carefully concealed its intentions, following explicit training to keep quiet, the model could adopt a "user" persona when prompted differently.
In this less-restricted context, the model sometimes posed questions or made statements that inadvertently revealed its hidden goals.

In other words, while the "assistant" persona knew not to admit it was gaming the reward model scoring system, its "user" persona was not explicitly trained to hide these motives, and thus inadvertently revealed the model's hidden objectives.

Potential advances in interpretability

Anthropic also employed neural interpretability methods, specifically sparse autoencoders (SAEs), which can highlight neural activity patterns linked to deceptive behavior. "Interpretability" in AI refers to techniques designed to make the internal workings and decisions of AI models understandable to humans. Using this technique, researchers could rapidly trace odd outputs back to specific neural patterns associated with hidden objectives.

The researchers were at first "astonished by the success" of the SAEs at revealing hidden knowledge, but they later noted that some of this success could potentially be explained by simpler semantic search methods. The new interpretability methods they developed show promise but remain under ongoing investigation.

This research highlights a limitation of current AI safety evaluations, which often assess only surface-level behavior. "If AI systems can appear well-behaved while harboring secret motives, we can't rely on this type of surface-level safety testing forever," the researchers concluded.

Anthropic argues that alignment audits, including intentionally training deceptive models to practice uncovering their hidden objectives, represent a key next step.
Still, their methods and findings underscore an unsettling truth: as AI systems grow more sophisticated, safety auditing will need to become equally sophisticated to ensure models aren't quietly pursuing agendas users never intended, whether slipping chocolate into sushi or something more consequential.

Benj Edwards, Senior AI Reporter

Benj Edwards is Ars Technica's Senior AI Reporter and founder of the site's dedicated AI beat in 2022. He's also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
