Nvidia's liquid-cooled AI racks promise 25x energy and 300x water efficiency

The big picture: As artificial intelligence and high-performance computing continue to drive demand for increasingly powerful data centers, the industry faces a growing challenge: how to cool ever-denser racks of servers without consuming unsustainable amounts of energy and water. Traditional air-based cooling systems, once adequate for earlier generations of server hardware, are now being pushed to their limits by the intense thermal output of modern AI infrastructure.

Nowhere is this shift more evident than in Nvidia's latest offerings. The company's GB200 NVL72 and GB300 NVL72 rack-scale systems represent a significant leap in computational density, packing dozens of GPUs and CPUs into each rack to meet the performance demands of trillion-parameter AI models and large-scale inference tasks.

But this level of performance comes at a steep cost. While a typical data center rack consumes between seven and 20 kilowatts (with high-end GPU racks averaging 40 to 60 kilowatts), Nvidia's new systems require between 120 and 140 kilowatts per rack. That's six to seven times the upper end of a conventional rack's power draw, and two to three and a half times that of a high-end GPU rack.
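The multiple you get depends on which ends of the quoted ranges are compared. A short sketch, using only the kilowatt figures from the paragraph above, computes the full span of multiples; the helper name `ratio_range` is hypothetical.

```python
# Rack power figures quoted in the article, in kilowatts (low, high).
TYPICAL_RACK_KW = (7, 20)
HIGH_END_GPU_RACK_KW = (40, 60)
NVL72_RACK_KW = (120, 140)


def ratio_range(new, old):
    """Return (min, max) multiples of `new` over `old` for two (low, high) ranges."""
    lo_new, hi_new = new
    lo_old, hi_old = old
    # Smallest multiple: lowest new draw vs. highest old draw; largest: the reverse.
    return lo_new / hi_old, hi_new / lo_old


vs_typical = ratio_range(NVL72_RACK_KW, TYPICAL_RACK_KW)
vs_gpu = ratio_range(NVL72_RACK_KW, HIGH_END_GPU_RACK_KW)

print(f"vs. typical rack:      {vs_typical[0]:.1f}x to {vs_typical[1]:.1f}x")
print(f"vs. high-end GPU rack: {vs_gpu[0]:.1f}x to {vs_gpu[1]:.1f}x")
```

Compared with the top of each conventional range, the new racks draw 6 to 7 times a typical rack and 2 to 3.5 times a high-end GPU rack; against the bottom of the typical range the gap stretches to 20 times.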

This dramatic rise in power density has rendered traditional air-based cooling methods inadequate for such high-performance clusters. Air simply cannot remove heat fast enough to prevent overheating, especially as racks grow increasingly compact.

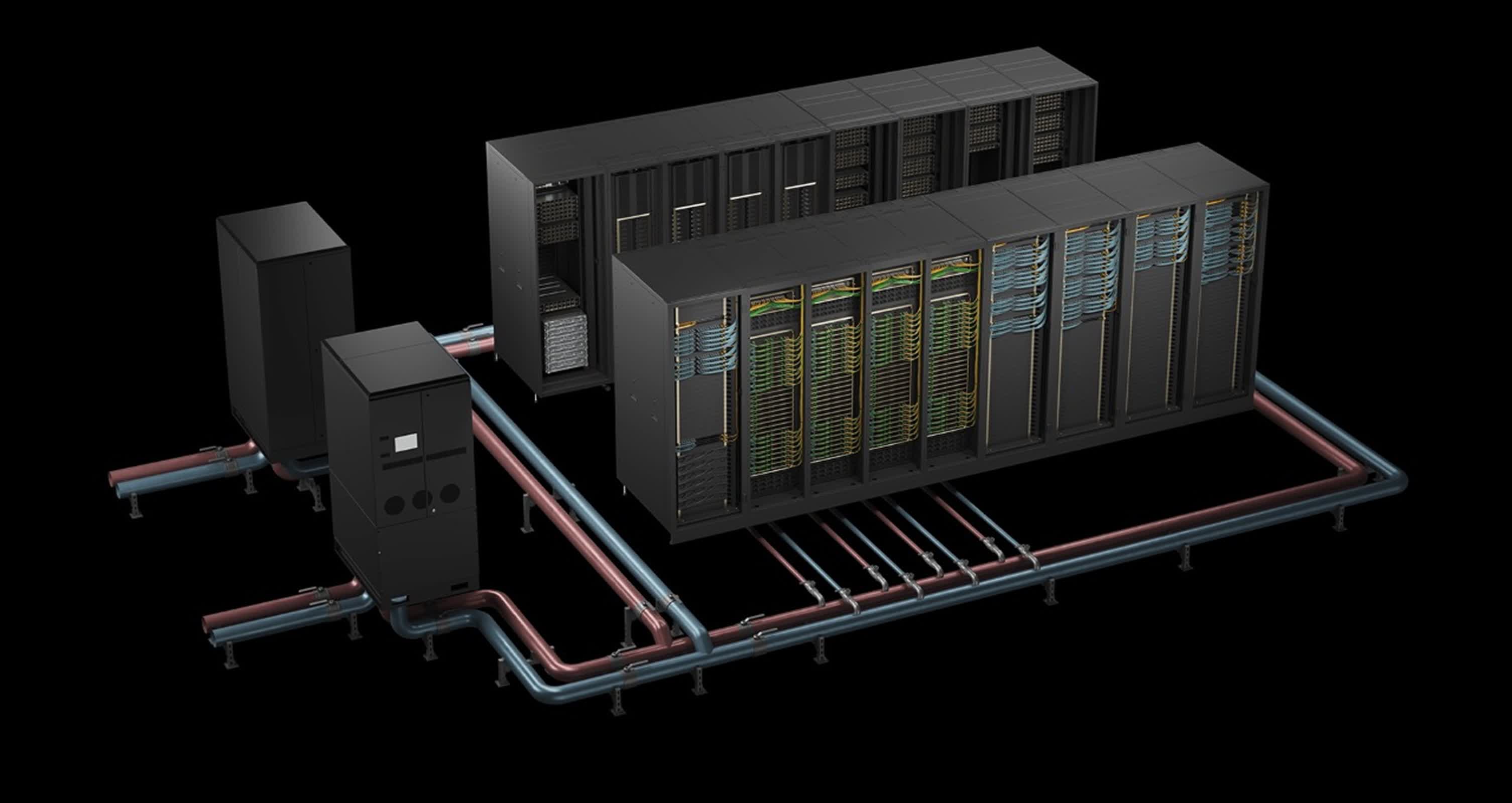

To address this, Nvidia has adopted direct-to-chip liquid cooling – a system that circulates coolant through cold plates mounted directly onto the hottest components, such as GPUs and CPUs. This approach transfers heat far more efficiently than air, enabling denser, more powerful configurations.
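Why liquid outperforms air comes down to how much heat a given volume of fluid can carry. A minimal sketch, assuming textbook property values near room temperature and a hypothetical 10 K coolant temperature rise across the rack, applies the sensible-heat balance Q = ρ · V̇ · c_p · ΔT to a 132-kilowatt rack and compares the volume flow each fluid would need. The function and constant names are illustrative.

```python
# Approximate fluid properties near room temperature (assumed textbook values).
WATER = {"density_kg_m3": 997.0, "cp_j_kgk": 4186.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kgk": 1005.0}


def volumetric_flow_m3_s(heat_w, fluid, delta_t_k):
    """Volume flow needed to carry `heat_w` watts with a `delta_t_k` temperature rise.

    Uses the sensible-heat balance Q = rho * V_dot * c_p * dT, solved for V_dot.
    """
    return heat_w / (fluid["density_kg_m3"] * fluid["cp_j_kgk"] * delta_t_k)


RACK_HEAT_W = 132_000  # a 132 kW rack, one of the per-rack figures in the article
DELTA_T_K = 10.0       # assumed coolant temperature rise across the rack

water = volumetric_flow_m3_s(RACK_HEAT_W, WATER, DELTA_T_K)
air = volumetric_flow_m3_s(RACK_HEAT_W, AIR, DELTA_T_K)

print(f"water: {water * 60_000:.0f} L/min")  # m^3/s -> liters per minute
print(f"air:   {air:.1f} m^3/s")
print(f"air needs ~{air / water:,.0f}x the volume flow of water")
```

Under these assumptions, water needs roughly 190 liters per minute while air needs close to 11 cubic meters per second, a gap of more than 3,000 times in volume flow.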

Unlike traditional evaporative cooling, which consumes large volumes of water to chill air or water circulated through a data center, Nvidia's approach uses a closed-loop liquid system. In this setup, coolant continuously cycles through the system without evaporating, virtually eliminating water loss and significantly improving water efficiency.
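To see why evaporative cooling consumes so much water, consider the idealized case in which all of the heat is rejected by evaporating water. A rough sketch, assuming a latent heat of vaporization of about 2.44 MJ/kg (water near 25 °C) and a hypothetical 132-kilowatt rack running around the clock:

```python
LATENT_HEAT_J_PER_KG = 2.44e6  # assumed: latent heat of vaporization of water near 25 °C
J_PER_KWH = 3.6e6              # joules in one kilowatt-hour


def evaporated_litres(heat_kwh):
    """Water evaporated if all of `heat_kwh` is rejected as latent heat.

    Idealized: ignores blowdown, drift, and sensible heat transfer; 1 kg of water ~= 1 L.
    """
    return heat_kwh * J_PER_KWH / LATENT_HEAT_J_PER_KG


per_kwh = evaporated_litres(1.0)
rack_day = evaporated_litres(132 * 24)  # hypothetical 132 kW rack running for 24 hours

print(f"~{per_kwh:.2f} L per kWh of heat rejected")
print(f"~{rack_day:,.0f} L per rack per day")
```

That works out to roughly 1.5 liters per kilowatt-hour of heat and several thousand liters per rack per day. Real cooling towers also lose water to blowdown and drift, so this idealized figure is a floor rather than an estimate of actual consumption.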

According to Nvidia, its liquid cooling design is up to 25 times more energy efficient and 300 times more water efficient than conventional cooling methods – a claim with substantial implications for both operational costs and environmental sustainability.
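Efficiency multiples are easier to interpret as percentage reductions. Assuming that "N times more efficient" means the same cooling job uses 1/N of the resource (the article does not give Nvidia's baseline or methodology), a small sketch converts the two claims:

```python
def reduction_from_multiplier(multiplier):
    """Fraction of a resource saved if efficiency improves by `multiplier`.

    Assumes "N times more efficient" means the same work uses 1/N of the resource.
    """
    return 1.0 - 1.0 / multiplier


for label, factor in [("energy", 25), ("water", 300)]:
    remaining = 1.0 / factor  # share of the baseline resource still consumed
    print(f"{label}: {remaining:.2%} of baseline use, "
          f"a {reduction_from_multiplier(factor):.2%} cut")
```

Under that reading, 25x corresponds to a 96% cut in energy and 300x to roughly a 99.7% cut in water; beyond that point, each further multiple moves the percentage only slightly.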


The cooling path involves two separate loops. Heat absorbed by the coolant in the rack's loop is passed through rack-level liquid-to-liquid heat exchangers, known as coolant distribution units (CDUs), to the facility's broader cooling infrastructure.

These CDUs, developed by partners such as CoolIT and Motivair, offer up to two megawatts of cooling capacity, enough to support the immense thermal loads produced by high-density racks. And because these systems can run on warm water, they reduce reliance on mechanical chillers, further lowering both energy consumption and water usage.
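Dividing CDU capacity by per-rack load gives a rough sense of scale. This sketch uses the two-megawatt and per-rack kilowatt figures from the article; the `headroom_pct` reserve is a hypothetical parameter, and real deployments also size CDUs for redundancy and flow limits, so treat the results as upper bounds.

```python
import math

CDU_CAPACITY_KW = 2_000  # "up to two megawatts" per CDU, as cited in the article


def racks_per_cdu(rack_kw, capacity_kw=CDU_CAPACITY_KW, headroom_pct=0):
    """Whole racks one CDU can serve at a given per-rack heat load.

    `headroom_pct` is a hypothetical share of capacity held in reserve (0-100).
    """
    usable_kw = capacity_kw * (100 - headroom_pct) / 100
    return math.floor(usable_kw / rack_kw)


for rack_kw in (120, 132, 140):
    print(f"{rack_kw} kW racks: {racks_per_cdu(rack_kw)} per CDU, "
          f"{racks_per_cdu(rack_kw, headroom_pct=10)} with 10% headroom")
```

In this idealized division, a single two-megawatt CDU covers about 14 to 16 racks at full capacity, and fewer once some capacity is held in reserve.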

However, the transition to direct liquid cooling presents challenges. Data centers are traditionally built with modularity and serviceability in mind, using hot-swappable components for quick maintenance. Fully sealed liquid cooling systems complicate this model, because breaking a hermetic seal to replace a server or GPU risks compromising the entire loop.

To mitigate these risks, direct-to-chip systems use quick-disconnect fittings with dripless seals, balancing serviceability with leak prevention. Still, deploying liquid cooling at scale often requires a substantial redesign of a facility's physical infrastructure, demanding a significant upfront investment.

Despite these hurdles, the performance gains offered by Nvidia's Blackwell-based systems are convincing operators to move forward with liquid cooling retrofits. Nvidia has partnered with Schneider Electric to develop reference architectures that accelerate the deployment of high-density, liquid-cooled clusters. These designs, featuring integrated CDUs and advanced thermal management, support up to 132 kilowatts per rack.