Go-kart ditches expensive sensors for a single camera to achieve "autonomous" driving

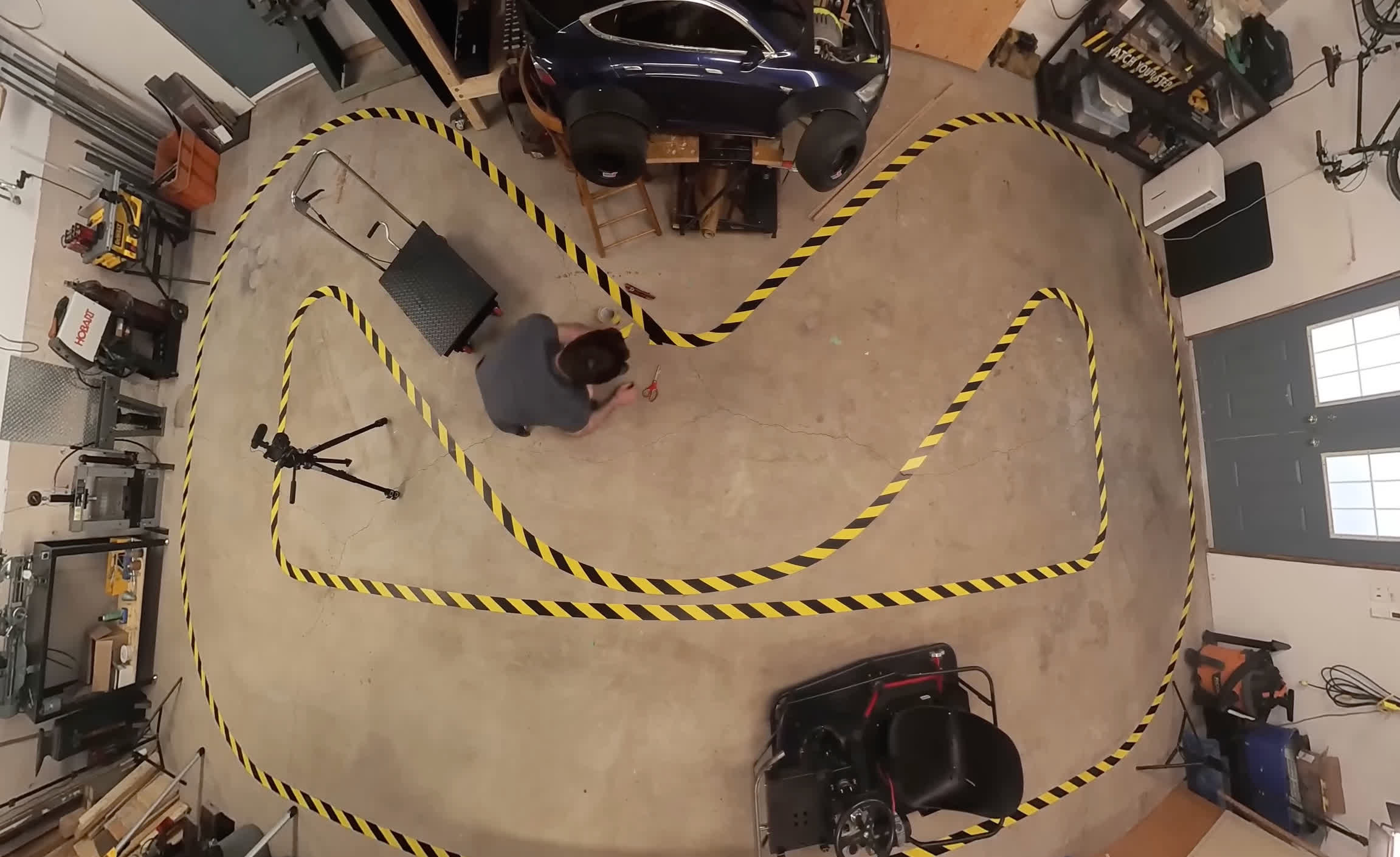

Why it matters: Self-driving vehicles are typically loaded up with expensive sensor arrays such as lidar, radar, and high-res cameras. But one DIY builder has shown that for certain closed environments, you can ditch all the fancy gear and still get autonomous driving with just one camera.

YouTuber Austin Blake is one of those people who just happens to have a self-built go-kart lying around at home. After christening it "Crazy Cart," he decided to convert it into his very own self-driving test platform. For this, he first designed a makeshift track laid out on the floor of his workshop using contrasting tape markers. As you can see in the image, the space is pretty tight, but so is the cart's turning radius.

Then came the hard part: actually giving Crazy Cart its self-driving skills. For this, Blake employed a technique called behavioral cloning, in which a neural network is trained to imitate a human driver. First, he recorded around 15,000 images while manually driving the kart around the track, using the steering angle at each point as the training label. He then fed this data into a convolutional neural network, which learned to associate the image inputs with the corresponding steering directions.

Getting a well-performing model took quite a bit of trial and error. Initial tests failed because the network had trouble distinguishing the track edges and navigating sharp turns. Blake tried data augmentation tricks, tweaking hyperparameters, using multiple cameras, and even adding wide-angle lenses to widen the field of view.

However, the real breakthrough came when he added bright blue tape as an outer border, increasing contrast. With the track clearly defined, his creation could autonomously zip around the floor track using just monocular vision, no expensive sensors required.

What it did take, though, was a total of three Arduinos.
One relayed the steering predictions from the computer to the second Arduino, which combined that data with positional feedback to operate a motor controlling the steering angle. The third Arduino handled the throttle by feeding control signals to the kart's speed controller.

Of course, this is a fairly constrained use case compared to navigating real public roads with their complexities and unpredictability. Blake readily acknowledges the latter is a far harder challenge that arguably necessitates richer sensor data beyond just cameras.

Still, the project is an impressive demo of how capable modern machine learning can be at distilling driving intelligence from humble vision inputs. Scaling the project will likely require a lot more training data, but there's only so much one person can do.
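
For readers curious how the steering Arduino's job might look in practice, here is a minimal sketch of a closed-loop steering controller. It is written in Python for readability rather than as Arduino firmware. Blake's actual control code is not described in this article, so the proportional control law, the gain, the deadband, the command clamp, and the toy motor model (`SteeringLoop`, `simulate`, `max_rate_deg_s`) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SteeringLoop:
    """Proportional position loop (an assumed design, not Blake's code).

    Compares the steering angle requested by the neural network with the
    angle reported by a position sensor and returns a motor command.
    """
    kp: float = 0.1            # motor command per degree of error (hypothetical)
    max_command: float = 1.0   # command is clamped to [-1, 1]
    deadband_deg: float = 0.5  # errors smaller than this are ignored

    def command(self, target_deg: float, measured_deg: float) -> float:
        error = target_deg - measured_deg
        if abs(error) < self.deadband_deg:
            return 0.0  # close enough: stop the motor to avoid jitter
        raw = self.kp * error
        # Clamp so a large error cannot request more than full power.
        return max(-self.max_command, min(self.max_command, raw))


def simulate(target_deg: float, steps: int = 300, dt: float = 0.01,
             max_rate_deg_s: float = 90.0) -> float:
    """Toy motor model: the steering angle changes at a rate proportional
    to the command. Returns the angle after `steps` control updates."""
    loop = SteeringLoop()
    angle = 0.0
    for _ in range(steps):
        cmd = loop.command(target_deg, angle)
        angle += cmd * max_rate_deg_s * dt
    return angle


if __name__ == "__main__":
    # A predicted steering angle of 20 degrees, starting from straight ahead.
    print(f"final angle: {simulate(20.0):.2f} deg")
```

In this sketch the loop saturates at full power while the error is large, then slows down as the measured angle approaches the target and stops once it is inside the deadband. A real build would likely need tuning, and possibly integral or derivative terms, depending on the motor and steering linkage.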