ARSTECHNICA.COM

Getting an all-optical AI to handle non-linear math

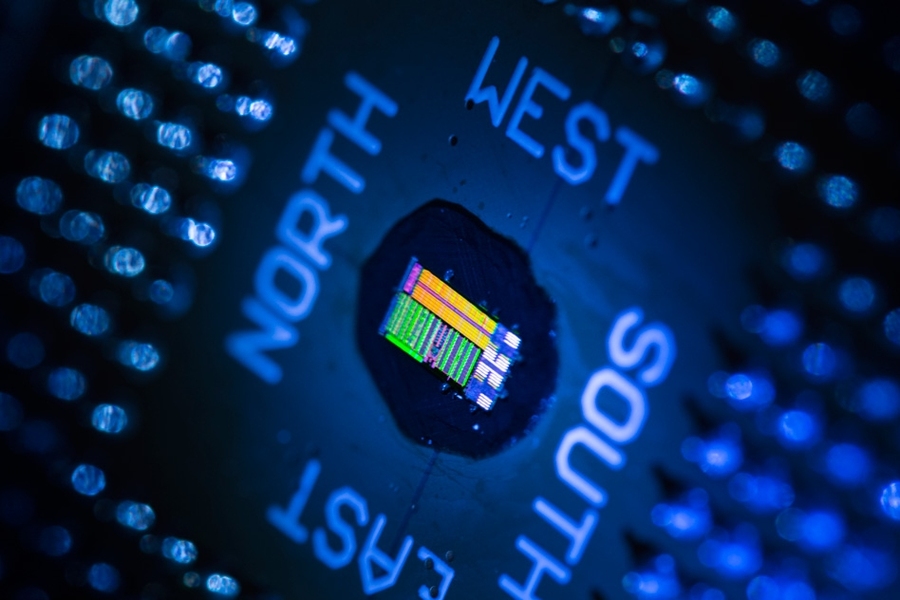

Instead of sensing photons and processing the results, why not process the photons?

Jacek Krywko | Jan 12, 2025 7:07 am

An optical processor built by researchers at MIT. Credit: MIT

A standard digital camera used in a car for stuff like emergency braking has a perceptual latency of a hair above 20 milliseconds. That's just the time needed for a camera to transform the photons hitting its aperture into electrical charges using either CMOS or CCD sensors. It doesn't count the further milliseconds needed to send that information to an onboard computer or process it there.

A team of MIT researchers figured that if you had a chip that could process photons directly, you could skip the entire digitization step and perform calculations with the photons themselves. It has the potential to be mind-bogglingly faster.

"We're focused on a very specific metric here, which is latency. We aim for applications where what matters the most is how fast you can produce a solution. That's why we are interested in systems where we're able to do all the computations optically," says Saumil Bandyopadhyay, an MIT researcher. The team implemented a complete deep neural network on a photonic chip, achieving a latency of 410 picoseconds. To put that in perspective, a single tick of the 4 GHz clock on a standard CPU lasts 250 picoseconds, so Bandyopadhyay's chip ran the entire neural net it had onboard in less than two clock ticks.

Matrices and nonlinearity

Neural networks work with multiple layers of computational units that function as neurons. "Each neuron can take an input, and those inputs can be, let's say, numbers," says Bandyopadhyay. Those numbers are then multiplied by a constant, called a weight or a parameter, as they are passed on to the next layer of neurons.
Each layer takes a weighted sum of the outputs of the preceding layer and sends it forward.

This is the equivalent of linear algebra: performing matrix multiplication. However, AI models are often used to find intricate patterns in data where the output is not always proportional to the input. For this, you also need non-linear thresholding functions that are applied to the weighted sums passed between the layers of neurons. "What makes deep neural networks so powerful is that we're able to map very complicated relationships in data by repeatedly cascading both these linear operations and non-linear thresholding functions between the layers," Bandyopadhyay says.

The problem is that this cascading requires massive parallel computations that, when done on standard computers, take tons of energy and time. Bandyopadhyay's team feels this problem can be solved by performing the equivalent operations using photons rather than electrons. In photonic chips, information can be encoded in optical properties like polarization, phase, magnitude, frequency, and wavevector. While this would be extremely fast and energy-efficient, building such chips isn't easy.

Siphoning light

"Conveniently, photonics turned out to be particularly good at linear matrix operations," Bandyopadhyay claims. A group at MIT led by Dirk Englund, a professor who is a co-author of Bandyopadhyay's study, demonstrated a photonic chip doing matrix multiplication entirely with light in 2017. What the field struggled with, though, was implementing non-linear functions in photonics.

The usual solution, so far, relied on bypassing the problem by doing linear algebra on photonic chips and offloading non-linear operations to external electronics. This, however, increased latency, since the information had to be converted from light to electrical signals, processed on an external processor, and converted back to light.
"And bringing the latency down is the primary reason why we want to build neural networks in photonics," Bandyopadhyay says.

To solve this problem, Bandyopadhyay and his colleagues designed and built what is likely to be the world's first chip that can compute the entire deep neural net, including both linear and non-linear operations, using photons. The process starts with an external laser with a modulator that feeds light into the chip through an optical fiber. "This way we convert electrical inputs to light," Bandyopadhyay explains.

The light is then fanned out to six channels and fed into a layer of six neurons that perform linear matrix multiplication using an array of devices called Mach-Zehnder interferometers. "They are essentially programmable beam splitters, taking two optical fields and mixing them coherently to produce two output optical fields. By applying the voltage, you can control how much those two inputs mix," Bandyopadhyay says.

What a single Mach-Zehnder interferometer does in this context is a two-by-two matrix operation, performed on a pair of optical signals. With a rectangular array of those interferometers, the team could realize a larger set of matrix operations across all six optical channels.

Once matrix multiplication is done in the first layer, the information goes to another layer through a unit that is responsible for nonlinear thresholding. "We did this by co-integrating electronics and optics," Bandyopadhyay says. This works by sending a tiny bit of the optical signal to a photodiode that measures how much optical power is there. The result of this measurement is used to manipulate the rest of the photons passing through the device. "We use that little bit of optical signal siphoned to the diode to modulate the rest of the optical signal," Bandyopadhyay explains.

The entire chip had three layers of neurons performing matrix multiplications and two nonlinear function units in between.
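
The architecture described above can be mimicked numerically. What follows is a rough sketch, not the team's model: the two-by-two matrix in `mzi` is one common textbook parameterization of an interferometer, the mesh layout in `mesh_pairs` is a generic rectangular arrangement, and the response curve in `nonlinear_unit` (including the `tap` and `gain` values) is a made-up stand-in for the real device. All function names are hypothetical, and the extra per-channel phase shifters a fully general matrix would need are left out.

```python
import cmath
import math
import random

N = 6  # optical channels, as in the article


def mzi(theta, phi):
    """2x2 unitary for one interferometer (assumed textbook parameterization)."""
    e = cmath.exp(1j * phi)
    return ((e * math.cos(theta), -math.sin(theta)),
            (e * math.sin(theta), math.cos(theta)))


def mesh_pairs(n=N):
    """Neighboring channel pairs, column by column, in a rectangular mesh."""
    return [(i, i + 1) for col in range(n) for i in range(col % 2, n - 1, 2)]


def random_layer(rng, n=N):
    """Random (theta, phi) settings, one pair per interferometer in the mesh."""
    return [(rng.uniform(0, math.pi / 2), rng.uniform(0, 2 * math.pi))
            for _ in mesh_pairs(n)]


def apply_mesh(fields, phases):
    """Linear layer: each interferometer mixes one pair of optical fields."""
    out = list(fields)
    for (i, j), (theta, phi) in zip(mesh_pairs(len(out)), phases):
        (a, b), (c, d) = mzi(theta, phi)
        out[i], out[j] = a * out[i] + b * out[j], c * out[i] + d * out[j]
    return out


def nonlinear_unit(field, tap=0.1, gain=50.0):
    """Toy activation: a tapped fraction of the power is 'measured' and the
    reading sets how much of the remaining light is let through.
    The response curve here is hypothetical, not the device's real one."""
    tapped_power = tap * abs(field) ** 2
    remaining = math.sqrt(1 - tap) * field
    transmission = 1 - math.exp(-gain * tapped_power)
    return transmission * remaining


def forward(fields, layers):
    """Linear layers with a nonlinear unit on every channel in between."""
    for k, phases in enumerate(layers):
        fields = apply_mesh(fields, phases)
        if k < len(layers) - 1:
            fields = [nonlinear_unit(f) for f in fields]
    return [abs(f) ** 2 for f in fields]  # detected output powers


rng = random.Random(0)
layers = [random_layer(rng) for _ in range(3)]  # three linear layers
inputs = [1 / math.sqrt(N)] * N  # unit total power split over six channels
powers = forward(inputs, layers)
predicted_class = max(range(N), key=powers.__getitem__)
```

In this sketch the `theta` values play the role of the voltages Bandyopadhyay mentions: they set how strongly each pair of channels mixes. The class the network "picks" is simply the output channel that ends up with the most optical power.
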
Overall, the network implemented on the chip could work with 132 parameters.

This, in a way, highlights some of the limitations optical chips have today. The number of parameters used in the GPT-4 large language model is reportedly 1 trillion. Compared to this trillion, the 132 parameters supported by Bandyopadhyay's chip look tiny.

Modest beginnings

"Large language models are basically the biggest models you could have, right? They are the hardest to tackle. We are focused more on sort of applications where you benefit from lower latency, and models like that turn out to be smaller," Bandyopadhyay says. His team gears their chip toward powering AIs that work with up to 100,000 parameters. "It's not like we have to go straight to ChatGPT to do something that is commercially useful. We can target these smaller models first," Bandyopadhyay adds.

The smaller model Bandyopadhyay implemented on the chip in his study recognized spoken vowels, which is a task commonly used as a benchmark in research on AI-focused hardware. It scored 92 percent accuracy, which was on par with neural networks run on standard computers.

But there are other and way cooler things small models can do. One of them is keeping self-driving cars from crashing. "The idea is you have an autonomous navigation system where you want to repeatedly classify lidar signals with very fast latency, at speeds that are way faster than human reflexes," Bandyopadhyay says. According to his team, chips like the one they are working on should make it possible to classify lidar data directly, pushing photons straight into photonic chips without converting them to electrical signals.

Other things Bandyopadhyay thinks could be powered by photonic chips are automotive vision systems that are entirely different from the camera-based systems we use today. "You can essentially replace the camera as we know it. Instead, you could have a large array of inputs taking optical signals, sampling them, and sending them directly to optical processors for machine learning computations," Bandyopadhyay says. "It's just a question of engineering the system."

The team built the chip using standard CMOS processes, which Bandyopadhyay says should make scaling it easier. "You are not limited by just what can fit on a single chip. You can make multi-chip systems to realize bigger networks. This is a promising direction for photonic chip technology; this is something you can already see happening in electronics," Bandyopadhyay claims.

Nature Photonics, 2024. DOI: https://doi.org/10.1038/s41566-024-01567-z

Jacek Krywko, Associate Writer

Jacek Krywko is a freelance science and technology writer who covers space exploration, artificial intelligence research, computer science, and all sorts of engineering wizardry.
