OpenAI partners with national labs to supercharge scientific breakthroughs

arstechnica.com

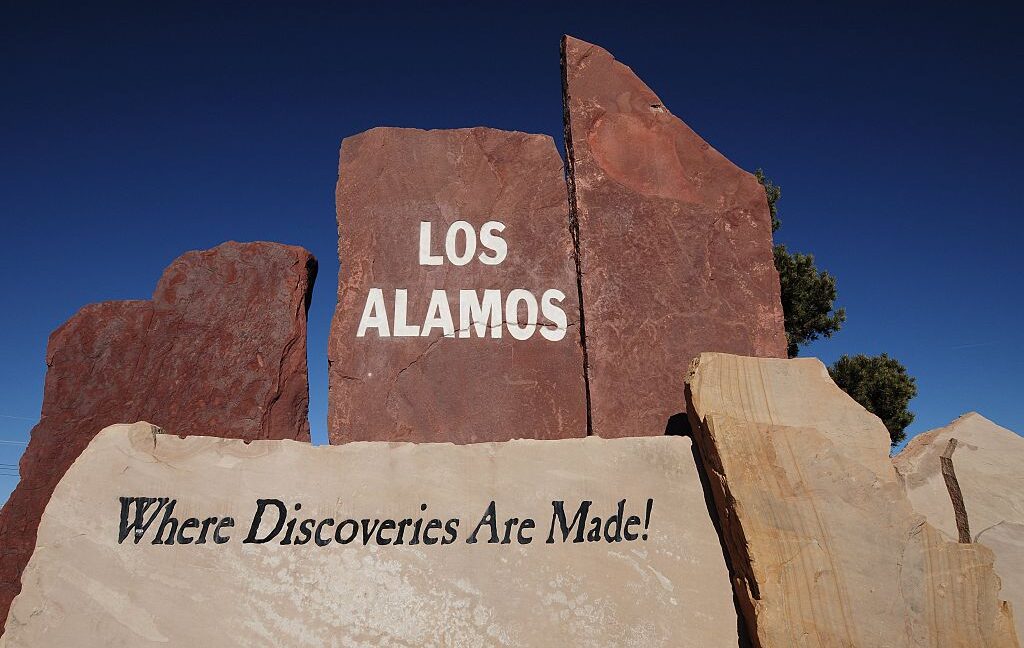

AI could help protect national security, detect diseases, and stabilize power grids, company says.

Ashley Belanger, Jan 30, 2025 1:12 pm

OpenAI will deploy an advanced AI model on a supercomputer at Los Alamos National Laboratory. Credit: Robert Alexander / Contributor | Archive Photos

On Thursday, OpenAI announced that it is deepening its ties with the US government through a partnership with the National Laboratories and expects to use AI to "supercharge" research across a wide range of fields to better serve the public.

"This is the beginning of a new era, where AI will advance science, strengthen national security, and support US government initiatives," OpenAI said.

The deal ensures that "approximately 15,000 scientists working across a wide range of disciplines to advance our understanding of nature and the universe" will have access to OpenAI's latest reasoning models, the announcement said.

For researchers from Los Alamos, Lawrence Livermore, and Sandia National Labs, access to "o1 or another o-series model" will be available on Venado, an Nvidia supercomputer at Los Alamos that will become a "shared resource."
Microsoft will help deploy the model, OpenAI noted.

OpenAI suggested this access could propel major "breakthroughs in materials science, renewable energy, astrophysics," and other areas that Venado was "specifically designed" to advance.

Key areas of focus for Venado's deployment of OpenAI's model include accelerating US global tech leadership, finding ways to treat and prevent disease, strengthening cybersecurity, protecting the US power grid, detecting natural and man-made threats "before they emerge," and "deepening our understanding of the forces that govern the universe," OpenAI said.

Perhaps among OpenAI's flashiest promises for the partnership, though, is helping the US achieve "a new era of US energy leadership by unlocking the full potential of natural resources and revolutionizing the nation's energy infrastructure." That is urgently needed, as officials have warned that America's aging energy infrastructure is becoming increasingly unstable, threatening the country's health and welfare, and without efforts to stabilize it, the US economy could tank.

But possibly the most "highly consequential" government use case for OpenAI's models will be supercharging research safeguarding national security, OpenAI indicated.

"The Labs also lead a comprehensive program in nuclear security, focused on reducing the risk of nuclear war and securing nuclear materials and weapons worldwide," OpenAI noted.
"Our partnership will support this work, with careful and selective review of use cases and consultations on AI safety from OpenAI researchers with security clearances."

OpenAI flexible to Trump's shift in AI priorities

This partnership builds on OpenAI's prior collaborations with the US government, and the company this week rolled out ChatGPT Gov, "a new tailored version of ChatGPT designed to provide US government agencies with an additional way to access OpenAI's frontier models."

Previously, OpenAI worked closely with the Biden administration on AI safety efforts, voluntarily committing to give officials early access to its latest models for safety inspections.

At that time, OpenAI backed red teaming as a critical government check on AI model deployment, nodding at the US AI Safety Institute being charged with testing AI models and ensuring public safety.

Now, Trump has demanded that the country reassess this safety approach through an executive order that revoked any Biden administration effort that his administration deems has "hampered the private sector's ability to innovate in AI by imposing government control over AI development and deployment." That could meaningfully gut AI Safety Institute checks or even dissolve the effort entirely, critics have suggested.

Any government AI rollout will likely still require safety checks, but precisely how they will look remains unclear. A new "AI Action Plan," "led by the Assistant to the President for Science & Technology, the White House AI & Crypto Czar, and the National Security Advisor" and focused on enhancing America's AI dominance, is supposedly coming soon. A key objective, Trump said, would be eliminating any "harmful barriers to America's AI leadership."

Despite the apparent shift in safety approaches, under the Trump administration, OpenAI has seemingly had no issue continuing to work closely with officials.
Just last week, Trump and OpenAI announced a $500 billion partnership to use AI for infrastructure, which further entrenches OpenAI as a dominant AI vendor for government.

"We look forward to developing future projects together to support the US government and the mission of ensuring AGI benefits all of humanity," OpenAI said.

Ashley Belanger, Senior Policy Reporter

Ashley is a senior policy reporter for Ars Technica, dedicated to tracking social impacts of emerging policies and new technologies. She is a Chicago-based journalist with 20 years of experience.
