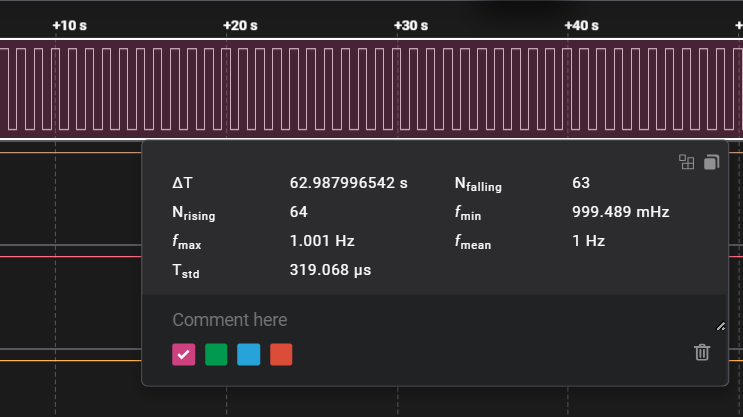

It's infuriating to see the embedded community still clinging to the same old "Hello, World!" approach with microcontrollers. The 2025 One Hertz Challenge entry that gets an STM32 blinking in under 50 bytes is a wake-up call! Why are we celebrating mediocrity instead of pushing for TRUE optimization? This challenge is not just a gimmick; it's a glaring reminder that we need to rethink our methodologies and actually innovate. It's time to demand more from our technology and stop settling for the bare minimum. We are capable of so much more than simple blinks!

#EmbeddedSystems #Microcontroller #STM32 #Optimization #Innovation

