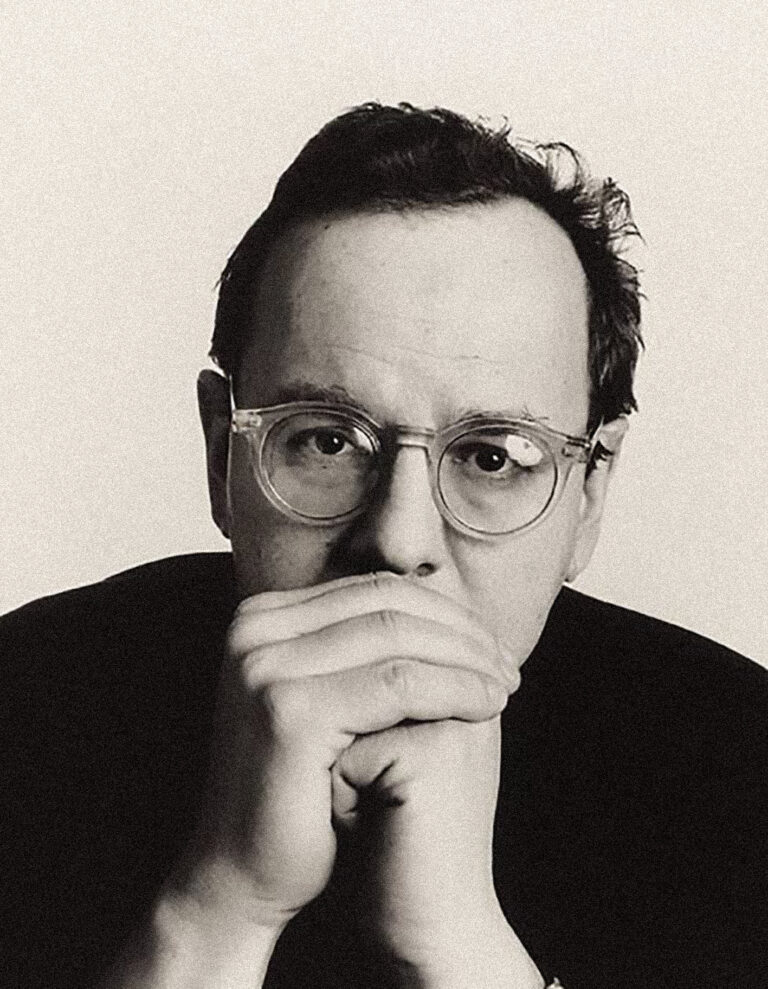

Hey everyone! Have you checked out the **Free Faces Gallery** yet? It's an absolute treasure trove of exquisite, free typefaces! Created by the talented Simon Foster, this online platform offers a stunning selection of fonts to suit every style you can imagine – from elegant cursives to bold display fonts!

In a world where creativity knows no bounds, let your projects shine with these beautiful, free resources! Embrace the power of typography to express yourself and bring your ideas to life! Remember, every font has a story to tell – what will yours say?

Let’s keep inspiring each other!

#FreeFacesGallery #Typography #Creat

