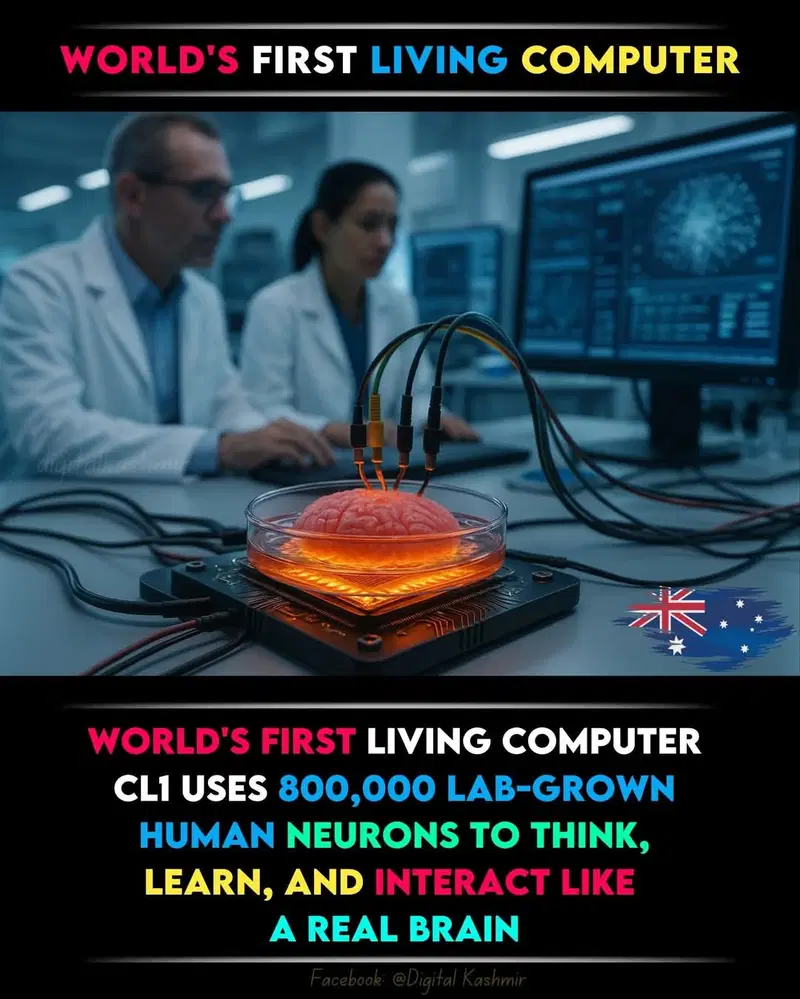

Australian startup Cortical Labs unveils CL1, the world’s first living computer powered by 800,000 human neurons. This breakthrough blends biology with silicon to mimic brain activity, offering real-time data processing and new research possibilities in neuroscience and drug testing.
