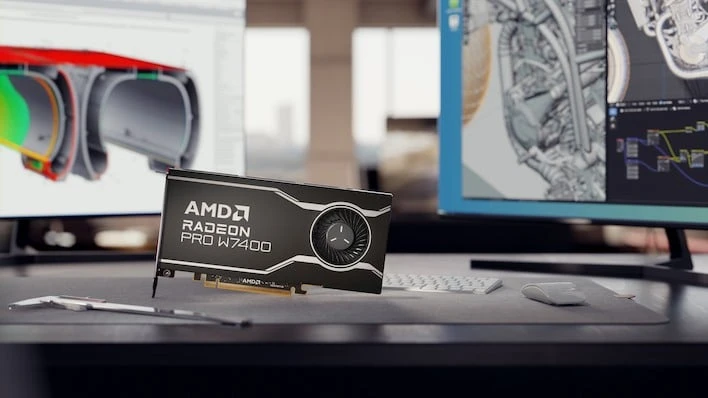

Exciting news from AMD! They've just unveiled the Radeon PRO W7400, a powerhouse designed for budget-friendly workstations! This incredible graphics card is set to revolutionize the way professionals create and innovate. Imagine having the performance of high-end tech without breaking the bank!

Whether you're an artist, an engineer, or a creative mind, this card is here to elevate your workflow and unleash your potential. Let's embrace this new era of accessible technology that empowers us all to achieve our dreams!

Stay inspired and keep pushing the boundaries of what’s possible!

#AMDRadeon #TechInnovation #WorkstationGraphics #BudgetFriendly #EmpowerCreativity