In today’s fast-paced packaging industry, companies need to maximize production speed while ensuring the quality and precision of their products. cenwan’s Carton Folder Gluer Machine is the ideal solution for businesses looking to enhance their packaging efficiency. Whether you are working with cardboard boxes for food packaging, e-commerce shipments, or retail products, our folder gluer machine provides the perfect balance of speed, accuracy, and reliability.

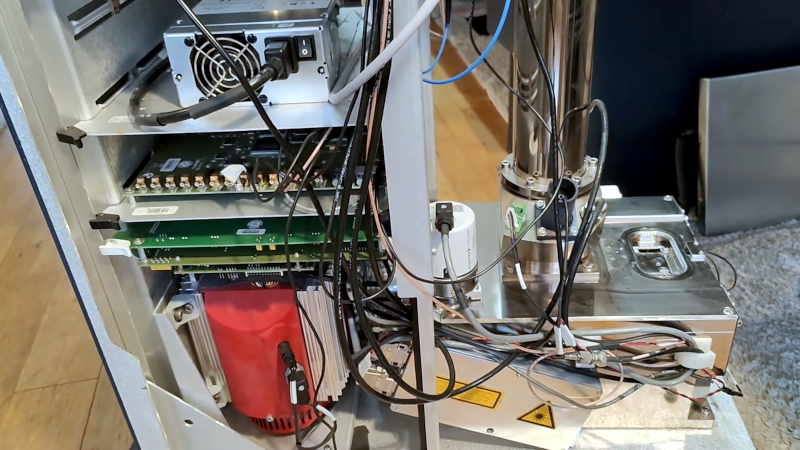

cenwan’s Carton Folder Gluer Machine is designed to fold and glue cartons quickly and precisely. This machine can handle various sizes of cardboard and paperboard with ease, allowing businesses to produce high-quality boxes with minimal effort. Its advanced automation features ensure a smooth and efficient operation, reducing human intervention and minimizing the risk of errors. With adjustable settings, the machine can easily adapt to different box sizes, making it perfect for a wide range of packaging applications.

One of the key advantages of cenwan’s Carton Folder Gluer Machine is its ability to significantly improve production speed. Traditional manual folding and gluing processes are time-consuming and require a lot of labor. By automating the folding and gluing processes, cenwan’s machine reduces production time, enabling businesses to meet high-volume demands without compromising on quality. Whether you are producing small batches or large runs, this machine helps increase your throughput and efficiency.

Another notable feature of cenwan’s Carton Folder Gluer Machine is its ease of use. The machine is equipped with a user-friendly interface that makes setup and operation simple, even for those with minimal experience in packaging machinery. The machine’s design ensures that the folding and gluing process is accurate and consistent, which is crucial for maintaining high-quality packaging standards. This reliability helps businesses maintain a strong brand image by delivering well-made, consistent products to their customers.

In addition to its efficiency and ease of use, the Carton Folder Gluer Machine is also built to last. Constructed from durable, high-quality materials, this machine is designed to withstand the rigors of high-volume production environments. Whether you run a small operation or a large-scale factory, this machine is designed to meet the demands of your business, offering long-term reliability and high return on investment.

cenwan’s Carton Folder Gluer Machine is also environmentally friendly. With increasing pressure to reduce waste and energy consumption, the machine is designed to optimize material usage and reduce excess packaging. By minimizing paper waste and improving energy efficiency, businesses can lower their environmental footprint and support sustainability goals. The machine also reduces the amount of manual labor required, contributing to safer working conditions on your production line.

Moreover, the Carton Folder Gluer Machine can be seamlessly integrated into your existing packaging process. It works well with other equipment, such as box printers, glue applicators, and labeling systems, creating a fully automated, streamlined packaging line. This integration reduces human error, speeds up production, and improves consistency across your packaging process.

For businesses looking to improve their packaging operations, cenwan’s Carton Folder Gluer Machine is the perfect solution. Its efficiency, ease of use, durability, and environmental benefits make it an ideal choice for businesses of all sizes. Whether you’re producing corrugated boxes for shipping or retail packaging, this machine will help you meet your production goals and improve your bottom line. Contact us today to learn more about how our Carton Folder Gluer Machine can benefit your business.
