WWW.ZDNET.COM
Need to relax? This new iPhone feature does the trick for me - here's how

One of my favorite features in iOS 18.4 is Ambient Music. Here's why and how to customize it for your needs.

WWW.FORBES.COM
‘Blue Prince’ Is Obviously GOTY, Possibly Even With A 2025 ‘GTA 6’

Blue Prince is the best game of the year by far, and it's hard to imagine anything topping it besides GTA 6. Maybe not even then.

TIME.COM
Demis Hassabis Is Preparing for AI’s Endgame

This story is part of the 2025 TIME100. Read Jennifer Doudna’s tribute to Demis Hassabis here.

Demis Hassabis learned he had won the 2024 Nobel Prize in Chemistry just 20 minutes before the world did. The CEO of Google DeepMind, the tech giant’s artificial intelligence lab, received a phone call with the good news at the last minute, after a failed attempt by the Nobel Foundation to find his contact information in advance. “I would have got a heart attack,” Hassabis quips, had he learned about the prize from the television. Receiving the honor was a “lifelong dream,” he says, one that “still hasn’t sunk in” when we meet five months later.

Hassabis received half of the award alongside a colleague, John Jumper, for the design of AlphaFold: an AI tool that can predict the 3D structure of proteins using only their amino acid sequences—something Hassabis describes as a “50-year grand challenge” in the field of biology. Released freely by Google DeepMind for the world to use five years ago, AlphaFold has revolutionized the work of scientists toiling on research as varied as malaria vaccines, human longevity, and cures for cancer, allowing them to model protein structures in hours rather than years. The Nobel Prizes in 2024 were the first in history to recognize the contributions of AI to the field of science. If Hassabis gets his way, they won’t be the last.

AlphaFold’s impact may have been broad enough to win its creators a Nobel Prize, but in the world of AI, it is seen as almost hopelessly narrow. It can model the structures of proteins but not much else; it has no understanding of the wider world, cannot carry out research, nor can it make its own scientific breakthroughs. Hassabis’s dream, and the wider industry’s, is to build AI that can do all of those things and more, unlocking a future of almost unimaginable wonder. All human diseases will be a thing of the past if this technology is created, he says.
Energy will be zero-carbon and free, allowing us to transcend the climate crisis and begin restoring our planet’s ecosystems. Global conflicts over scarce resources will dissipate, giving way to a new era of peace and abundance. “I think some of the biggest problems that face us today as a society, whether that's climate or disease, will be helped by AI solutions,” Hassabis says. “I'd be very worried about society today if I didn't know that something as transformative as AI was coming down the line.”

This hypothetical technology—known in the industry as Artificial General Intelligence, or AGI—had long been seen as decades away. But the fast pace of breakthroughs in computer science over the last few years has led top AI scientists to radically revise their expectations of when it will arrive. Hassabis predicts AGI is somewhere between five and 10 years away—a rather pessimistic view when judged by industry standards. OpenAI CEO Sam Altman has predicted AGI will arrive within Trump’s second term, while Anthropic CEO Dario Amodei says it could come as early as 2026. Partially underlying these different predictions is a disagreement over what AGI means. OpenAI’s definition, for instance, is rooted in cold business logic: a technology that can perform most economically valuable tasks better than humans can. Hassabis has a different bar, one focused instead on scientific discovery. He believes AGI would be a technology that could not only solve existing problems, but also come up with entirely new explanations for the universe. A test for its existence might be whether a system could come up with general relativity with only the information Einstein had access to; or if it could not only solve […]

(Photograph by David Vintiner for TIME.)

In an AI industry whose top ranks are populated mostly by businessmen and technologists, that identity sets Hassabis apart.
Yet he must still operate in a system where market logic is the driving force. Creating AGI will require hundreds of billions of dollars’ worth of investments—dollars that Google is happily plowing into Hassabis’s DeepMind unit, buoyed by the promise of a technology that can do anything and everything. Whether Google will ensure that AGI, if it comes, benefits the world remains to be seen; Hassabis points to the decision to release AlphaFold for free as a symbol of its benevolent posture. But Google is also a company that must legally act in the best interests of its shareholders, and consistently releasing expensive tools for free is not a long-term profitable strategy. The financial promise of AI—for Google and for its competitors—lies in controlling a technology capable of automating much of the labor that drives the more than $100 trillion global economy. Capture even a small fraction of that value, and your company will become one of the most profitable the world has ever seen. Good news for shareholders, but bad news for regular workers who may find themselves suddenly unemployed.

So far, Hassabis has successfully steered Google's multibillion-dollar AI ambitions toward the type of future he wants to see: one focused on scientific discoveries that, he hopes, will lead to radical social uplift. But will this former child chess prodigy be able to maintain his scientific idealism as AI reaches its high-stakes endgame? His track record reveals one reason to be skeptical. When DeepMind was acquired by Google in 2014, Hassabis insisted on a contractual firewall: a clause explicitly prohibiting his technology from being used for military applications. It was a red line that reflected his vision of AI as humanity's scientific savior, not a weapon of war. But multiple corporate restructures later, that protection has quietly disappeared.
Today, the same AI systems developed under Hassabis's watch are being sold, via Google, to militaries such as Israel's—whose campaign in Gaza has killed tens of thousands of civilians. When pressed, Hassabis denies that this was a compromise made in order to maintain his access to Google's computing power and thus realize his dream of developing AGI. Instead, he frames it as a pragmatic response to geopolitical reality, saying DeepMind changed its stance after acknowledging that the world had become “a much more dangerous place” in the last decade. “I think we can't take for granted anymore that democratic values are going to win out,” he says. Whether or not this justification is honest, it raises an uncomfortable question: If Hassabis couldn't maintain his ethical red line when AGI was just a distant promise, what compromises might he make when it comes within touching distance?

To get to Hassabis’s dream of a utopian future, the AI industry must first navigate its way through a dark forest full of monsters. Artificial intelligence is a dual-use technology like nuclear energy: it can be used for good, but it could also be terribly destructive. Hassabis spends much of his time worrying about risks, which generally fall into two different buckets. One is the possibility of systems that can meaningfully enhance the capabilities of bad actors to wreak havoc in the world; for example, by endowing rogue nations or terrorists with the tools they need to synthesize a deadly virus. Preventing risks like that, Hassabis believes, means carefully testing AI models for dangerous capabilities, and only gradually releasing them to more users with effective guardrails. It means keeping the “weights” of the most powerful models (essentially their underlying neural networks) out of the public’s hands altogether, so that models can be withdrawn from public use if dangers are discovered after release.
That’s a safety strategy that Google follows but which some of its competitors, such as DeepSeek and Meta, do not. The second category of risks may seem like science fiction, but they are taken seriously inside the AI industry as model capabilities advance. These are the risks of AI systems acting autonomously—such as a chatbot deceiving its human creators, or a robot attacking the person it was designed to help. Language models like DeepMind’s Gemini are essentially grown from the ground up, rather than written by hand like old-school computer programs, and so computer scientists and users are constantly finding ways to elicit new behaviors from what are best understood as incredibly mysterious and complex artifacts. The question of how to ensure that they always behave and act in ways that are “aligned” to human values is an unsolved scientific problem. Early signs of misaligned behaviors, like strategic lying, have already been identified by researchers working with today’s language models. Those problems are only likely to become more acute as models get better. “How do we ensure that we can stay in charge of those systems, control them, interpret what they're doing, understand them, and put the right guardrails in place that are not movable by very highly capable self-improving systems?” Hassabis says. “That is an extremely difficult challenge.”

It’s a devilish technical problem—but what really keeps Hassabis up at night are the political coordination challenges that accompany it. Even if well-meaning companies can make safe AIs, that doesn’t by itself stop the creation and proliferation of unsafe AIs. Stopping that will require international collaboration—something that’s becoming increasingly difficult as western alliances fray and geopolitical tensions between the U.S. and China rise. Hassabis has played a significant role in the three AI summits held by global governments since 2023, and says he would like to see more of that kind of cooperation.
He says the U.S. government’s export controls on AI chips, intended to prevent China’s AI industry from surpassing Silicon Valley, are “fine”—but he would prefer to avoid political choices that “end up in an antagonistic kind of situation.” He might be out of luck. As both the U.S. and China have woken up in recent years to the potential power of AGI, the climate of global cooperation—which reached a high watermark with the first AI Safety Summit in 2023—has given way to a new kind of realpolitik. In this new era, with nations racing to militarize AI systems and build up stockpiles of chips, and with a new cold war brewing between the U.S. and China, Hassabis still holds out hope that competing nations and companies can find ways to set aside their differences and cooperate, at least on AI safety. “It’s in everyone’s self-interest to make sure that goes well,” he says. Even if the world can find a way to safely navigate through the geopolitical turmoil of AGI’s arrival, the question of labor automation will rear its head. When governments and companies no longer rely on humans to generate their wealth, what leverage will citizens have left to demand the ingredients of democracy and a comfortable life? AGI might create abundance, but it won’t dispel the incentives for companies and states to amass resources and compete with rivals. Hassabis admits he is better at forecasting technological futures than social and economic ones; he says he wishes more economists would take the possibility of near-term AGI seriously. Still, he thinks it’s inevitable we’ll need a “new political philosophy” to organize society in this world. Democracy, he says, “is not a panacea, by any means,” and might have to give way to “something better.”

(Photo: Hassabis, left, captaining the England under-11s chess team at the age of 9. Courtesy Demis Hassabis.)

Automation, meanwhile, is already on the horizon.
In March, DeepMind announced Gemini 2.5, the latest version of its flagship AI model, which outperforms rival models made by OpenAI and Anthropic on many popular metrics. Hassabis is currently hard at work on Project Astra, a DeepMind effort to build a universal digital assistant powered by Gemini. That work, he says, is not intended to hasten labor disruptions, but instead is about building the necessary scaffolding for the type of AI that he hopes will one day make its own scientific discoveries. Still, as research into these AI “agents” progresses, Hassabis says, expect them to be able to carry out increasingly complex tasks independently. (An AI agent that can meaningfully automate the job of further AI research, he predicts, is “a few years away.”) For the first time, Google is also now using these digital brains to control robot bodies: in March the company announced a Gemini-powered android robot that can carry out embodied tasks like playing tic-tac-toe, or making its human a packed lunch. The tone of the video announcing Gemini Robotics was friendly, but its connotations were not lost on some YouTube commenters: “Nothing to worry [about,] humanity, we are only developing robots to do tasks a 5 year old can do,” one wrote. “We are not working on replacing humans or creating robot armies.” Hassabis acknowledges the social impacts of AI are likely to be significant. People must learn how to use new AI models, he says, in order to excel professionally in the future and not risk getting left behind. But he is also confident that if we eventually build AGI capable of doing productive labor and scientific research, the world that it ushers into existence will be abundant enough to ensure a substantial increase in quality of life for everybody. “In the limited-resource world which we're in, things ultimately become zero-sum,” Hassabis says.
“What I'm thinking about is a world where it's not a zero-sum game anymore, at least from a resource perspective.” Five months after his Nobel Prize, Hassabis’s journey from chess prodigy to Nobel laureate now leads toward an uncertain future. The stakes are no longer just scientific recognition—but potentially the fate of human civilization. As DeepMind's machines grow more capable, as corporate and geopolitical competition over AI intensifies, and as the economic impacts loom larger, Hassabis insists that we might be on the cusp of an abundant economy that benefits everyone. But in a world where AGI could bring unprecedented power to those who control it, the forces of business, geopolitics, and technological power are all bearing down with increasing pressure. If Hassabis is right, the turbulent decades of the early 21st century could give way to a shining utopia. If he has miscalculated, the future could be darker than anyone dares imagine. One thing is for sure: in his pursuit of AGI, Hassabis is playing the highest-stakes game of his life.

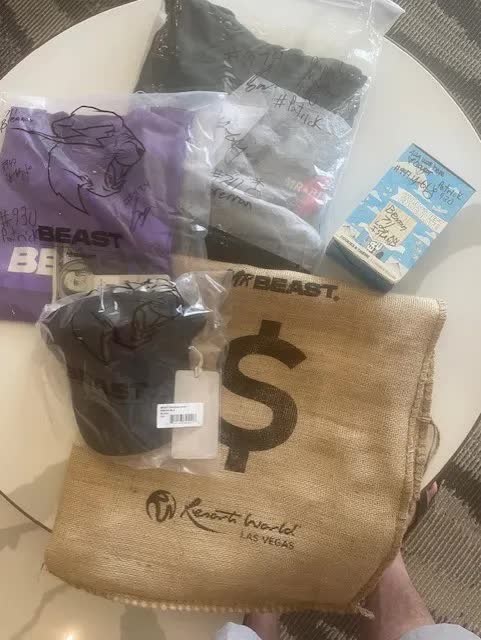

WWW.TECHSPOT.COM
MrBeast fans left furious and demanding refunds after $1,000 Las Vegas event flop

Facepalm: YouTube and Amazon star MrBeast's reputation isn't as sparkling as it once was. Now, it's taken another hit after fans who paid $1,000 for the promise of an "unforgettable" experience in Las Vegas were left angry and seeking refunds. Even MrBeast himself, Jimmy Donaldson, admitted the event hadn't lived up to expectations. Resorts World Las Vegas announced in January that it was partnering with Donaldson on the new MrBeast Experience. The three-day event that took place from April 13 to April 15 promised all attendees would receive an exclusive MrBeast mystery bag filled with limited-edition merchandise and goodies, with one containing a $10,000 gift voucher, redeemable for cash. Theresa Metta, who traveled to Las Vegas with her mother, said she expected the likes of mini-games, meet and greets, themed drinks, and other elements usually seen at events like these. The reality was quite different. Many guests told 8 News Now that when they checked in, they were told by the hotel's front desk to wait in their rooms for their welcome goodie box to arrive. Some waited more than 24 hours for what turned out to be a disappointing surprise. "I was told to wait in my room for two days for a package to come, so I legit spent two days in my room for a package to come, and it was a box of chocolates," Metta said. Metta added that the mystery bag she received contained kids' shorts, an extra-small shirt, a hat, and a medium shirt, all items that are currently on clearance for $9 on the MrBeast store. "We were told we'd get exclusive merch. Obviously, that's not the case," Metta added. According to Reddit posts, there were no activities during the event, and the only thing that seemed to relate to MrBeast other than the cheap mystery bags was a channel on the TV showing the MrBeast logo.
The fact that Donaldson himself wasn't there was also a disappointment, though it seems he was never scheduled to make an appearance. The hotel's high prices added to the guests' anger. Some were compensated with a $50 food and beverage credit for two different outlets on the site, which, according to attendee David Yacksyzn, would have been enough for one drink. Some people were also offered a $50 pool credit. "They gave us an experience, alright, a real bad one," Yacksyzn told FOX5. "If Jimmy is part of this, I hope he feels good for scamming a whole bunch of adults and their children," said Abigail Marquez, an attendee who traveled from Los Angeles. "That's how you get subscribers? Cool." Some guests are reportedly looking into launching a class-action lawsuit against the hotel. The MrBeast Experience event page has now been removed from the Resorts World Las Vegas website. A few fans reached out to Donaldson on X, complaining about the event. "Hey! This definitely isn't the experience we hoped they'd deliver," he replied. "My team's already on it – I'd love to personally make it up to you and anyone else by inviting everybody affected to tour my actual studio! Can't wait to meet you all and my team is reaching out to everyone :D" MrBeast has faced several controversies over the last year, including lawsuits from five contestants from Beast Games, allegations against a longtime collaborator, accusations and a lawsuit from former employees, and a backlash against the Lunchly snack kit he launched in partnership with Logan Paul and KSI. Nevertheless, he still has the world's most-subscribed YouTube channel, a consumer brand that could be worth billions, and a possible eye on buying TikTok.

WWW.DIGITALTRENDS.COM
Spotify is down: live updates on the Spotify issue
It’s not just you, Spotify is down. Tens of thousands of users across the world are reporting issues with the popular music streaming service, and Spotify has already acknowledged the fault on X. It looks like a fix is in the works, though, and the service could already be returning to normal for users. Last updated April 16, 2025, 7:28 AM. The liveblog has ended.

WWW.WSJ.COM
Nvidia is Now the Biggest U.S.-China Bargaining Chip
The ban on sales of the H20 chips calls into question the company’s ability to continually beat Wall Street’s lofty expectations.

ARSTECHNICA.COM
Feds charge New Mexico man for allegedly torching Tesla dealership
Jamison Wagner also faces charges for an arson attack on a Republican office.
Caroline Haskins, wired.com, Apr 16, 2025, 9:26 am
A New Mexico man is facing federal charges for two separate incidents of alleged arson, one at an Albuquerque Tesla showroom and one at the New Mexico Republican Party’s office, according to a Monday press release from the Department of Justice. Jamison Wagner, 40, was charged with allegedly setting fire to a building or vehicle used in interstate commerce. The charge can apply to goods manufactured and sold in different states and to the facilities that house them, such as the Tesla showroom or the Republican office, which also sells MAGA merchandise. DOJ spokesperson Shannon Shevlin tells WIRED that Wagner’s arrest happened on Saturday. “Let this be the final lesson to those taking part in this ongoing wave of political violence,” Attorney General Pam Bondi said in the Monday press release. “We will arrest you, we will prosecute you, and we will not negotiate. Crimes have consequences.” Wagner’s arrest warrant alleges that he is responsible for a February 9 incident at a Tesla showroom in which windows were shattered and two Tesla Model Ys were set on fire. It also alleges that he is responsible for a March 30 incident at the Republican Party of New Mexico office in which the entrance area was set on fire and “ICE=KKK” was graffitied on the building’s exterior.
The arrest warrant also says that a lead investigator on Wagner’s case is an FBI agent specializing in “international terrorism, domestic terrorism and firearms.” This marks the second known time that FBI terrorism investigators have gotten involved in a criminal investigation tied to the recent public backlash against Musk and Tesla. However, it’s the first time that the suspect was also allegedly tied to another incident—which, in this case, targeted a Republican office. The arrest comes amid repeated calls by Bondi, President Trump, Elon Musk, House Speaker Mike Johnson, and Representative Marjorie Taylor Greene to treat arson and vandalism of Tesla property as “domestic terrorism.” Five other people are currently facing federal charges for alleged vandalism and arson targeting Tesla property, according to press releases by the DOJ. As reported by WIRED, law enforcement can get access to surveillance technologies and have more legal leeway during terrorism investigations than in other types of investigations. These investigations could also possibly enable Musk and Tesla executives to access surveillance on “Tesla Takedown” protesters, though the protests have broadly been peaceful, and public-facing protest organizers have said that they don’t endorse property damage. The FBI can decide to share this type of information with the victim of a crime during an investigation, WIRED previously reported. Bondi teased news of Wagner’s arrest last week in a televised Cabinet meeting, telling Trump that there would be “another huge arrest” pertaining to an attack on a Tesla dealership within the next 24 hours. “That person will be looking at at least 20 years in prison with no negotiations,” Bondi said on Thursday. 
(The DOJ press release issued after Wagner’s arrest notes, “A complaint is merely an allegation, and all defendants are presumed innocent until proven guilty beyond a reasonable doubt in a court of law.”) DOJ spokesperson Shannon Shevlin confirmed that Wagner's arrest was the announcement Bondi was referring to. Her timeline was off, though, because Wagner was not in custody until Saturday. Wagner was first identified as a suspect due to an unspecified “investigative lead developed by law enforcement through scene evidence,” according to the arrest warrant. Investigators claim that after analyzing CCTV footage from buildings near the Republican office and traffic cameras, they identified a car consistent with the one registered to Wagner. After reviewing Wagner’s driver’s license and conducting physical surveillance outside his home, investigators also believed he resembled the person seen on surveillance footage from the Tesla showroom. The arrest warrant claims that upon executing a search warrant at Wagner’s house, investigators found red spray paint, ignitable liquids "consistent with gasoline," and jars consistent with evidence found at both the Tesla showroom fire and the Republican office fire. They also found a paint-stained stencil cutout reading “ICE=KKK” consistent with the graffiti found at the Republican office, and clothes that resembled what the suspect was seen wearing on surveillance footage outside the Tesla showroom. According to the arrest warrant, the Bureau of Alcohol, Tobacco, Firearms and Explosives forensic laboratory tested “fire debris,” fingerprints, and possible DNA at the scene, but no results are cited in the warrant, which notes that an analysis of the evidence and seized electronic devices is still pending. 
The five other people currently facing federal charges for allegedly damaging Tesla property include 42-year-old Lucy Grace Nelson of Colorado, 41-year-old Adam Matthew Lansky of Oregon, 24-year-old Daniel Clarke-Pounder of South Carolina, 24-year-old Cooper Jo Frederick of Colorado, and 36-year-old Paul Hyon Kim of Nevada. The FBI’s Joint Terrorism Task Force investigated the incident that led to Kim’s indictment on April 9; however, press releases and court filings indicate that the task force was not deployed in the other four investigations. This story originally appeared on wired.com.

WWW.INFORMATIONWEEK.COM
How to Tell When You're Working Your IT Team Too Hard
John Edwards, Technology Journalist & Author, April 16, 2025
In an era of unprecedented technological advancement, IT teams are expected to embrace new tasks and achieve fresh goals without missing a beat. All too often, however, the result is an overburdened IT workforce that's frustrated and burned out. It doesn't have to be that way, says Ravindra Patil, a vice president at data science solutions provider Tredence. "Overwork tends to come from an 'always-on' culture, where remote work and digital tools make people feel they must be available all the time," he explains in an online interview.
Warning Signs
One of the earliest signs that a team is reaching its breaking point is an increasing number of errors, missed steps, or just plain sloppy work, says Archie Payne, president of CalTek Staffing, a machine learning recruitment and staffing firm. "These are indications that the team is trying to work faster than is realistic, which is likely to happen when they have too much work on their to-do lists," he explains in an email interview. "This is likely to be paired with a general decline in morale, which can come across as more complaints, more cynical or frustrated comments, a lack of enthusiasm for the work, or increased emotional volatility." IT leaders can also detect overwork through various warning signs, such as a mounting number of sick leaves, high turnover rates, increasing mistakes, and overall lower work quality, Patil says. He adds that beleaguered team members may also look tired, act emotionally, or seem unengaged during meetings. "Keeping an eye on things like overtime, slower progress, or falling performance despite long hours can also show that the team is under too much pressure."
John Russo, vice president of technology solutions at healthcare software provider OSP Labs, says that a sudden drop in creativity and problem-solving is also a strong sign of team weariness. In an email interview, he states that an IT team that's stretched too thin will stop generating innovative ideas, opting instead to complete tasks mechanically. Another strong indicator of unrest is a change in communication patterns. "If the team members delay responses, or seem disengaged during discussions, it's worth digging deeper," Russo recommends. Working under unrelenting high pressure is a recipe for burnout, and that's the greatest risk if you keep pushing your IT team too hard, Payne says. "Burnout could drive employees to quit, forcing you to waste resources on recruiting replacements," he warns. "Even if they stay, burned-out employees are less productive and more likely to make mistakes, so your overall team productivity and work quality will likely suffer."
Pressure Release
The simplest and most effective answer to burnout is reducing the team’s workload. This can be accomplished in several ways, Payne says. Review the IT team's current assignments, then consider whether some of the tasks could be assigned to another team or department that may be more adequately staffed. "If all of the work must be done by IT, that may mean it's time to expand the team," he advises. Meanwhile, adding temporary freelance talent during workload spikes can relieve pressure on the IT team during peak times without committing to new hires who may not be needed over the long term. Careful planning, focusing on important tasks, and delaying or skipping less critical ones can also make workloads more manageable, Patil says. Setting realistic deadlines can help, too, preventing the dread that can, over time, lead to burnout. He also advises using automation tools whenever possible to cut down on repetitive tasks, making work easier and less stressful.
Patil says that Tredence reduces team pressure with initiatives such as "No-Meeting Fridays," which give team members uninterrupted time to focus and recharge. "Flexible schedules and open communication also help our teams stay balanced," he adds. IT leaders should schedule regular check-ins with their teams to identify stress points as soon as possible, Russo advises. "When employees feel heard and validated, they're more likely to share their concerns before burnout sets in," he explains. At OSP Labs, Russo introduced flexible work models as part of well-being initiatives. "This policy allows my team members to set their own hours, with more freedom to balance work and personal time." Russo says he also makes a concerted effort to celebrate his team's accomplishments with "thoughtful goodies and high-fives." Such small initiatives, he notes, eventually make a huge difference.
Parting Thoughts
Long-term excessive pressure can lead to burnout, leaving team members feeling completely drained, Patil says. "This lowers productivity and may cause employees to leave, leading to more stress for those who stay." Health issues may also arise, including anxiety, depression, or even physical problems. Russo recommends setting realistic expectations and encouraging a culture in which asking for help isn't seen as a weakness. "Create an environment where open communication about workloads is the norm, not the exception," he advises.
About the Author
John Edwards, Technology Journalist & Author
John Edwards is a veteran business technology journalist. His work has appeared in The New York Times, The Washington Post, and numerous business and technology publications, including Computerworld, CFO Magazine, IBM Data Management Magazine, RFID Journal, and Electronic Design. He has also written columns for The Economist's Business Intelligence Unit and PricewaterhouseCoopers' Communications Direct. John has authored several books on business technology topics.
His work began appearing online as early as 1983. Throughout the 1980s and 90s, he wrote daily news and feature articles for both the CompuServe and Prodigy online services. His "Behind the Screens" commentaries made him the world's first known professional blogger.

WWW.TECHNOLOGYREVIEW.COM
US office that counters foreign disinformation is being eliminated, say officials
The only office within the US State Department that monitors foreign disinformation is about to be eliminated, two State Department officials have told MIT Technology Review. The Counter Foreign Information Manipulation and Interference (R/FIMI) Hub is a small office in the State Department’s Office of Public Diplomacy that tracks and counters foreign disinformation campaigns. In shutting R/FIMI, the department's controversial acting undersecretary, Darren Beattie, is delivering a major win to conservative critics who have alleged that it censors conservative voices. Created at the end of 2024, R/FIMI was reorganized from the Global Engagement Center, a larger office with a similar mission that had long been criticized by conservatives who claimed that, despite its international mission, it was censoring American conservatives. In 2023, Elon Musk called the center the "worst offender in US government censorship [and] media manipulation" and a “threat to our democracy.” The culling of the office will leave the State Department without a way to actively counter the increasingly sophisticated disinformation campaigns from foreign governments like Russia, Iran, and China. The office could be shuttered as soon as today, according to sources at the State Department who spoke with MIT Technology Review.
Censorship claims
For years, conservative voices both in and out of government have complained about Big Tech’s censorship of conservative views, and have often blamed R/FIMI’s predecessor office, the Global Engagement Center (GEC), for enabling this censorship. GEC has its roots in the Center for Strategic Counterterrorism Communications (CSCC), created by an Obama-era executive order; in 2016, it shifted its mission to fighting propaganda and disinformation from foreign governments and terrorist organizations, becoming the Global Engagement Center.
It was always explicitly focused on the international information space. It shut down last December, after a measure to reauthorize its $61 million budget was blocked by Republicans in Congress, who accused it of helping Big Tech censor American conservative voices. R/FIMI had a similar goal of fighting foreign disinformation, but it was smaller: the newly created office had a $51.9 million budget and a small staff that, by mid-April, was down to just 40 employees, from 125 at GEC. Sources say that those employees will be put on administrative leave and terminated within 30 days.

But with the change in administrations, R/FIMI never really got off the ground. Beattie, a controversial pick for undersecretary (he was fired as a speechwriter during the first Trump administration for attending a white nationalism conference, has suggested that the FBI organized the January 6 attack on Congress, and has said that it’s not worth defending Taiwan from China), had instructed the few remaining staff to be “pencils down,” one State Department official told me, meaning they should pause their work. The administration’s executive order on countering censorship and restoring freedom of speech reads as a summary of conservative accusations against GEC: “Under the guise of combatting ‘misinformation,’ ‘disinformation,’ and ‘malinformation,’ the Federal Government infringed on the constitutionally protected speech rights of American citizens across the United States in a manner that advanced the Government’s preferred narrative about significant matters of public debate.
Government censorship of speech is intolerable in a free society.” In 2023, The Daily Wire, founded by conservative media personality Ben Shapiro, was one of two media companies that sued GEC, alleging that it infringed on the companies’ First Amendment rights by funding two nonprofit organizations, the London-based Global Disinformation Index and the New York-based NewsGuard, that had labeled The Daily Wire as “unreliable,” “risky,” and/or (per GDI) susceptible to foreign disinformation. Those projects were not funded by GEC. The lawsuit alleged that this amounted to censorship by “starving them of advertising revenue and reducing the circulation of their reporting and speech.” In 2022, the Republican attorneys general of Missouri and Louisiana named GEC among the federal agencies that, they alleged, were pressuring social networks to censor conservative views. Though the case eventually made its way to the Supreme Court, which found no First Amendment violations, a lower court had already removed GEC’s name from the list of defendants, ruling that there was “no evidence” that GEC’s communications with the social media platforms had gone beyond “educating the platforms on ‘tools and techniques used by foreign actors.’”

The stakes

The GEC, and now R/FIMI, was targeted as part of a wider campaign to shut down groups accused of being “weaponized” against conservatives. Conservative critics railing against what they have called a “disinformation industrial complex” have also taken aim at the Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA) and the Stanford Internet Observatory, a prominent research group that conducted widely cited research on the flows of disinformation during elections.
CISA’s former director, Chris Krebs, was personally targeted in an April 9 White House memo, while, in response to the criticism and millions of dollars in legal fees, Stanford University shuttered the Stanford Internet Observatory ahead of the 2024 presidential election. But this targeting comes at a time when foreign disinformation campaigns, especially those run by Russia, China, and Iran, have become increasingly sophisticated. According to one estimate, Russia spends $1.5 billion per year on foreign influence campaigns. In 2022, the Islamic Republic of Iran Broadcasting, Iran’s primary foreign propaganda arm, had a $1.26 billion budget. And a 2015 estimate suggests that China spent up to $10 billion per year on media targeting non-Chinese foreigners, a figure that has almost certainly grown. In September 2024, the Justice Department indicted two employees of RT, a Russian state-owned propaganda outlet, over a $10 million scheme to create propaganda aimed at influencing US audiences through a media company that has since been identified as the conservative Tenet Media. The GEC was one effort to counter these campaigns. Some of its recent projects included developing AI models to detect memes and deepfakes and exposing Russian propaganda efforts to influence Latin American public opinion against the war in Ukraine. By law, the Office of Public Diplomacy must give Congress 15 days’ advance notice of any intent to reassign more than $1 million in congressionally allocated funding. Congress then has time to respond, ask questions, and challenge the decision, though, judging by its record on other unilateral executive-branch decisions to gut government agencies, it is unlikely to do so.