WWW.FORBES.COM
2FA Is Under Attack — New And Dangerous Infostealer Update Warning
Can anyone stop this updated 2FA infostealer threat?

WWW.FORBES.COM
EarFun Reveals Affordable ANC Wireless Headphones With Hi-Res Support
These new Wave Life wireless headphones from EarFun are aimed at budget-conscious music lovers who are looking for a pair of ANC wireless headphones with Hi-Res support.

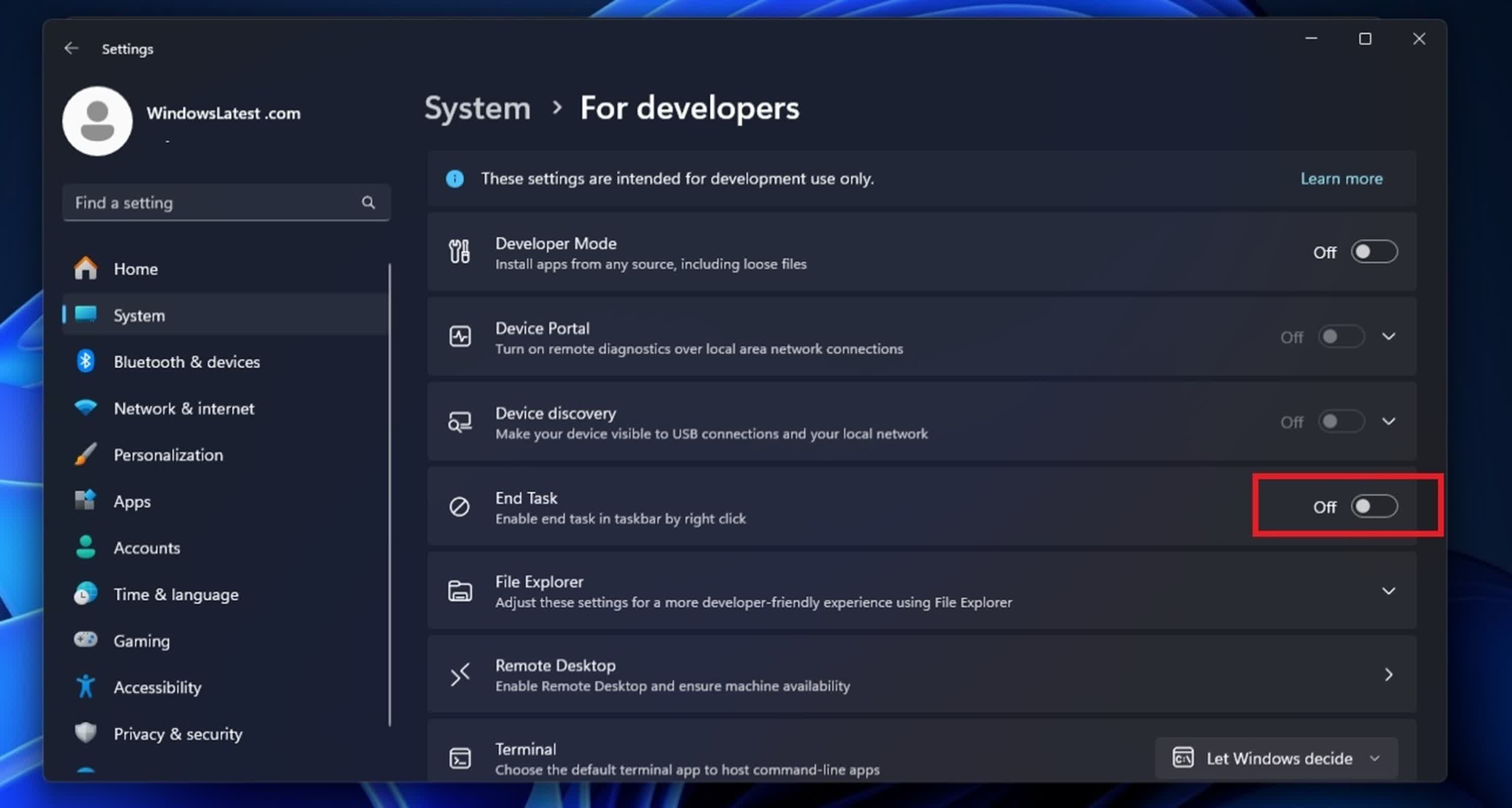

WWW.TECHSPOT.COM
New Windows 11 setting lets users kill stubborn apps instantly from taskbar

In a nutshell: Microsoft has quietly introduced a powerful new feature to Windows 11 that lets users deal with unresponsive applications faster. The "End Task" button, now available directly from the taskbar, streamlines a process that previously required several steps and a trip into the depths of Task Manager.

For years, the standard response to a frozen app was either to reboot the system or to summon Task Manager, often by pressing Ctrl + Alt + Delete, and hunt through the list of running processes to find and terminate the problematic program. While effective, this approach was cumbersome. The new feature, spotted by Windows Latest, makes the process faster and more convenient. With the "End Task" option enabled, users can right-click any open application on the taskbar and immediately force it to close.

To activate the tool, go to Settings > System > For Developers and toggle on the "End Task" setting. Once it is enabled, the option appears in the context menu whenever you right-click an app's icon on the taskbar.

What sets "End Task" apart from the familiar "Close Window" option is its effectiveness. While "Close Window" merely requests that an application shut down, sometimes leaving background processes running or failing to close unresponsive apps, "End Task" forcefully terminates the entire process. This mirrors the functionality of Task Manager's "End Task" command, with the added convenience of being accessible from the taskbar.

When the button is pressed, Windows first attempts a standard shutdown, like clicking the "X" in an app's title bar. If the application fails to respond, Windows escalates by identifying the main process and any related processes and terminating them all, ensuring that even stubborn, unresponsive programs are closed. This is particularly useful for apps that hang or freeze, since it removes the need to track down every process in Task Manager manually.
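The two-stage behavior described above (a polite close request first, then forced termination of the main process and its related processes) can be modeled in a few lines. This is a conceptual Python sketch only: the `Proc` class and the `request_close`, `process_tree`, and `end_task` functions are hypothetical illustrations, and they do not correspond to any Windows API or to how Windows actually implements the feature.

```python
from dataclasses import dataclass, field


@dataclass
class Proc:
    """Hypothetical stand-in for a running process (not a Windows API type)."""
    pid: int
    responds_to_close: bool  # does the app honor a polite close request?
    children: list["Proc"] = field(default_factory=list)
    running: bool = True


def request_close(proc: Proc) -> bool:
    """Stage 1: ask the app to exit, like clicking the "X" in its title bar.

    Only the main process is asked; in this model, related processes are left
    alone, much like "Close Window" can leave background processes running.
    """
    if proc.responds_to_close:
        proc.running = False
    return not proc.running


def process_tree(proc: Proc) -> list[Proc]:
    """Collect the main process and every related (child) process, recursively."""
    tree = [proc]
    for child in proc.children:
        tree.extend(process_tree(child))
    return tree


def end_task(proc: Proc) -> list[int]:
    """Return the PIDs that had to be force-terminated (empty if stage 1 worked)."""
    if request_close(proc):
        return []
    killed = []
    # Stage 2: the app ignored the request, so terminate the whole tree.
    for p in process_tree(proc):
        if p.running:
            p.running = False
            killed.append(p.pid)
    return killed


hung = Proc(pid=100, responds_to_close=False,
            children=[Proc(pid=101, responds_to_close=True)])
print(end_task(hung))  # [100, 101]
```

Nothing in this model captures the data-loss aspect: a real forced termination gives the app no chance to save its state.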
However, its power is limited. The "End Task" button cannot terminate system processes such as File Explorer; Task Manager remains indispensable for these. Users should also be aware that using "End Task" is akin to pulling the plug: any unsaved data in the forcibly closed application will be lost, because the app is not given a chance to save its state or perform cleanup routines.

The feature is tucked away in the For Developers section of Settings but does not require enabling Developer Mode. It is available to all users running supported builds of Windows 11.

Image credit: Windows Latest

WWW.DIGITALTRENDS.COM
First GPU price hikes, now motherboards could be in line for inflated costs

A new leak reveals that three brands known for making some of the best motherboards, Asus, Gigabyte, and MSI, might be raising their prices soon. The price hike could arrive as a result of the latest tariffs, as Taiwan's exports to the U.S. now face a 32% tariff. Does this mean it’s time to buy a new motherboard while prices are still unchanged?

First, let’s break this down. The information comes from Board Channels, which is often a reputable source for leaks such as these, as the website is visited by industry insiders. However, it’s important to note that none of the three brands has issued a press release or announcement about this yet, so for now, we’re in the clear. Still, it’s not impossible that these price hikes will turn out to be real.

Asus, Gigabyte, and MSI are among the most popular motherboard makers for both AMD and Intel CPUs. If the three giants raise their prices, I wouldn’t be surprised to see the rest of the market follow suit, resulting in industry-wide increases. On the other hand, less popular brands might take the opportunity to stand out with solid pricing, opening the door to some serious competition in the motherboard market.

It’s hard to say what kind of price hikes we might be looking at, and whether those adjustments would happen worldwide or only in the United States, but we’re already seeing the tumultuous effect of various tariffs in other parts of the PC hardware market. Graphics cards are more expensive than ever, and they sell out quickly, too. Tariffs aren’t solely to blame here: low stock levels definitely contribute, and with people rushing to buy the new GPUs, there’s little incentive for prices to drop in any meaningful way.
There’s a lot of uncertainty around the prices of PC components right now, and motherboards have just joined the mix. I’d probably wait for an official announcement from Asus, MSI, and Gigabyte before rushing to buy a new mobo, but if you spot a deal, you might as well go for it. At the very least, I can’t imagine them becoming cheaper anytime soon.

WWW.DIGITALTRENDS.COM
The latest AMD GPU probably isn’t for gamers, but compact builders could love it

When most of us think of new GPU releases, our minds turn toward some of the best graphics cards. In AMD’s case, that would currently mean the RX 9070 XT. But AMD is known for dipping back into previous generations, and this GPU shows just how far back AMD (or its partners) are willing to go to launch a new product. The question is: Does anyone really need it?

The GPU in question is the RX 6500, a non-XT base version spotted by realVictor_M on X (Twitter). Made by Zephyr, the GPU never got so much as an official announcement from AMD. Instead, the card simply appeared on the market, and so far, Zephyr appears to be the only AIB (add-in board) partner making the RX 6500. It’s called the Dual ITX, and it does seem perfect for a small build.

So, what does this tiny, dual-fan card hide under the hood? Nothing to be excited about in this day and age. The GPU sports 1,024 stream processors (SPs), 16 compute units (CUs), and a measly 4GB of GDDR6 VRAM on a 64-bit memory bus (clocked at 16Gbps). It also has a total board power (TBP) of 55 watts, which means it won’t need an external power connector. As spotted by VideoCardz, Zephyr also has a single-slot version of the card that comes with just one fan.

Spec-wise, the RX 6500 falls closer to the RX 6500 XT than it does to the RX 6400. The lower-end RX 6400 sports just 768 SPs, although both cards share the same memory configuration.

Although I am a fan of budget-oriented systems, I struggle to see the need for a GPU of this caliber. Integrated graphics will offer similar performance here, and gaming with 4GB of VRAM is going to be tough unless you stick to old indie titles. That in itself is no problem, because people do need PCs for use cases such as this. The issue is more that similar GPUs are still readily available, including low-end, sub-$100 models from both AMD and Nvidia.
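The quoted specs can be sanity-checked with two standard relationships, sketched below in Python. RDNA 2 GPUs such as the RX 6000 series pack 64 stream processors into each compute unit, and theoretical peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The helper names are illustrative, and the bandwidth result is a theoretical ceiling, not measured performance.

```python
# RDNA 2 (Radeon RX 6000 series) compute units each contain 64 stream processors.
SP_PER_CU_RDNA2 = 64


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of RDNA 2 compute units."""
    return compute_units * SP_PER_CU_RDNA2


def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus width in bytes (bits / 8) multiplied by the per-pin data rate in Gbps.
    """
    return bus_width_bits / 8 * data_rate_gbps


print(stream_processors(16))          # 1024, matching the RX 6500's quoted SP count
print(peak_bandwidth_gb_s(64, 16.0))  # 128.0 (GB/s) for a 64-bit bus at 16Gbps
print(768 // SP_PER_CU_RDNA2)         # 12 compute units behind the RX 6400's 768 SPs
```

Because the RX 6400 shares the same 64-bit, 16Gbps memory configuration, the same arithmetic gives it the same 128GB/s theoretical peak; the two cards differ in shader count rather than memory bandwidth.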
I could see the RX 6500 ending up in a small form factor (SFF) PC used as a home entertainment system, for example. For gaming, it might be too far behind the times at this point. It’s unclear when or if the card will make it to the U.S. market, or how much it will cost.

ARSTECHNICA.COM
A Chinese-born crypto tycoon—of all people—changed the way I think of space
"Are we the first generation of digital nomad in space?"
Stephen Clark – Apr 22, 2025 7:30 am

Chun Wang orbits the Earth inside the cupola of SpaceX's Dragon spacecraft. Credit: Chun Wang via X

For a quarter-century, dating back to my time as a budding space enthusiast, I've watched with a keen eye each time people have ventured into space. That's 162 human spaceflight missions since the beginning of 2000, ranging from Space Shuttle flights to Russian Soyuz missions, Chinese astronauts' first forays into orbit, and commercial expeditions on SpaceX's Dragon capsule. Yes, I'm also counting privately funded suborbital hops launched by Blue Origin and Virgin Galactic.

Last week, Jeff Bezos' Blue Origin captured headlines, though not purely positive ones, with the launch of six women, including pop star Katy Perry, to an altitude of 66 miles (106 kilometers). The capsule returned to the ground 10 minutes and 21 seconds later. It was the first all-female flight to space since Soviet cosmonaut Valentina Tereshkova's solo mission in 1963. Many commentators criticized the flight as a tone-deaf stunt or a rich person's flex. I won't make any judgments, except to say that two of the passengers aboard Blue Origin's capsule, Aisha Bowe and Amanda Nguyen, have compelling stories worth telling.

Here's another story worth sharing. Earlier this month, an international crew of four private astronauts took their own journey into space aboard a Dragon spacecraft owned and operated by Elon Musk's SpaceX. Like Blue Origin's all-female flight, this mission was largely bankrolled by a billionaire.
Actually, it was a couple of billionaires. Musk used his fortune to fund a large portion of the Dragon spacecraft's development costs, alongside a multibillion-dollar contribution from US taxpayers. Chun Wang, a Chinese-born cryptocurrency billionaire, paid SpaceX an undisclosed sum to fly one of its ships into orbit with three of his friends.

So far, this seems like another story about a rich guy going to space. That is indeed a major part of the story, but there's more to it.

Chun, now a citizen of Malta, named the mission Fram2 after the Norwegian exploration ship Fram, used for polar expeditions at the turn of the 20th century. Following in the footsteps of Fram, which means "forward" in Norwegian, Chun asked SpaceX if he could launch into an orbit over Earth's poles to gain a perspective on our planet that no human eyes had seen before.

Three crewmates joined Chun on the three-and-a-half-day Fram2 mission. Jannicke Mikkelsen, a Norwegian filmmaker and cinematographer, took the role of vehicle commander. Rabea Rogge, a robotics researcher from Germany, took the pilot's seat and assisted Mikkelsen in monitoring the spacecraft's condition in flight. Chun and Eric Philips, an Australian polar explorer and guide, flew as "mission specialists."

Chun's X account reads like a travelogue, with details of each jet-setting jaunt around the world. His propensity for sharing travel experiences extended into space, and I'm grateful for it.

The Florida peninsula, including Kennedy Space Center and Cape Canaveral, through the lens of Chun's iPhone. Credit: Chun Wang via X

Usually, astronauts share their reflections from space by writing posts on social media, or occasionally by sharing pictures and video vignettes from the International Space Station (ISS). This, in itself, is a remarkable change from the way astronauts communicated with the public from space just 15 years ago.
Most of these social media posts involve astronauts showcasing an experiment they're working on or delivering a high-flying tutorial in physics. Often, these videos include acrobatic backflips or show the novelty of eating and drinking in microgravity. Some astronauts, like Don Pettit, who recently came home from the ISS, have a knack for gorgeous orbital photography.

Chun's videos offer something different. They provide an unfiltered look at how four people live inside a spacecraft with an internal volume comparable to that of an SUV, and at the awe of seeing something beautiful for the first time. His posts have an intimacy, an authenticity, and, most importantly, an immediacy I've never seen before in video from space.

One of the videos Chun recorded and posted to X shows the Fram2 crew members inside Dragon the day after their launch. The astronauts seem to be enjoying themselves. Their LunchBot meal kits float nearby, and the capsule's makeshift trash bin contains Huggies baby wipes and empty water bottles, giving the environment the vibe of a camping trip, except for the constant hum of air fans.

Later, Chun shared a video of the crew opening the hatch leading to Dragon's cupola window, a plexiglass extension with panoramic views. Mikkelsen and Chun try to make sense of what they're seeing.

"Oh, Novaya Zemlya, do you see it?" Mikkelsen asks.

"Yeah. Yeah. It's right here," Chun replies.

"Oh, damn. Oh, it is," Mikkelsen says.

Chun then drops a bit of Cold War trivia. "The largest atomic bomb was tested here," he says. "And all this ice. Further north, the Arctic Ocean. The North Pole."

On the third day of the mission, the Dragon spacecraft soared over Florida, heading south to north on its pole-to-pole loop around the Earth. "I can see our launch pad from here," Mikkelsen says, pointing out NASA's Kennedy Space Center several hundred miles away.
Finally, Chun capped his voyage into space with a 30-second clip from his seat inside Dragon as the spacecraft fired its thrusters for a deorbit burn. The capsule's small rocket jets pulsed repeatedly to slow Dragon enough to drop out of orbit and head for reentry and splashdown off the coast of California.

Lasers in LEO

It wasn't only Chun's proclivity for posting to social media that made this possible. It was also SpaceX's own Starlink Internet network, which the Dragon spacecraft connected to with a "Plug and Plaser" terminal mounted in the capsule's trunk. This device allowed Dragon and its crew to transmit and receive Internet signals through a laser link with Starlink satellites orbiting nearby.

Astronauts have shared videos similar to those from Fram2 in the past, but almost always after returning to Earth, and often edited and packaged into a longer video. What's unique about Chun's videos is that he was able to post his clips, some of which are quite long, to social media immediately via the Starlink Internet network.

"With a Starlink laser terminal in the trunk, we can theoretically achieve speeds up to 100 or more gigabits per second," said Jon Edwards, SpaceX's vice president for Falcon launch vehicles, before the Fram2 mission's launch. "For Fram2, we're expecting around 1 gigabit per second."

Compare this with the connectivity available to astronauts on the International Space Station, where crews have Internet access with uplink speeds of about 4 to 6 megabits per second and downlink speeds of 500 kilobits to 1 megabit per second, according to Sandra Jones, a NASA spokesperson. The space station communications system provides about 1 megabit per second of additional throughput for email, an Internet telephone, and video conferencing. There's another layer of capacity for transmitting scientific and telemetry data between the space station and Mission Control.
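How large is that gap? Dividing SpaceX's expected Fram2 rate by the ISS figures NASA provided gives a range of ratios. The minimal Python check below uses only the numbers quoted above; the 1 gigabit per second value is SpaceX's pre-launch expectation rather than a measured rate, and the constant and function names are illustrative.

```python
# Rates quoted in the article, in megabits per second.
FRAM2_EXPECTED_MBPS = 1_000      # "around 1 gigabit per second" (pre-launch expectation)
ISS_UPLINK_MBPS = (4.0, 6.0)     # "about 4 to 6 megabits per second"
ISS_DOWNLINK_MBPS = (0.5, 1.0)   # "500 kilobits to 1 megabit per second"


def speedup_range(link_mbps: float, iss_range: tuple[float, float]) -> tuple[float, float]:
    """Return (smallest, largest) ratio of link_mbps to an ISS rate range."""
    low, high = iss_range
    # Dividing by the faster ISS rate gives the smallest ratio, and vice versa.
    return link_mbps / high, link_mbps / low


up = speedup_range(FRAM2_EXPECTED_MBPS, ISS_UPLINK_MBPS)
down = speedup_range(FRAM2_EXPECTED_MBPS, ISS_DOWNLINK_MBPS)
print(f"vs. ISS uplink:   {up[0]:.0f}x to {up[1]:.0f}x")    # 167x to 250x
print(f"vs. ISS downlink: {down[0]:.0f}x to {down[1]:.0f}x")  # 1000x to 2000x
```

Taken together, the ratios run from about 167 times (against the fastest ISS uplink) to 2,000 times (against the slowest ISS downlink).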
So, Starlink's laser connection with the Dragon spacecraft offers roughly 200 to 2,000 times the throughput of the Internet connection available on the ISS. The space station sends and receives communication signals, including the Internet, through NASA's fleet of Tracking and Data Relay Satellites (TDRS).

The laser link is also cheaper to use. NASA's TDRS relay stations are dedicated to providing communication support for the ISS and numerous other science missions, including the Hubble Space Telescope, while Dragon plugs into the commercial Starlink network serving millions of other users.

SpaceX tested the Plug and Plaser device in space for the first time last year on the Polaris Dawn mission, which was most notable for the first fully commercial spacewalk in history. The results of the test were "phenomenal," said Kevin Coggins, NASA's deputy associate administrator for Space Communications and Navigation.

"They have pushed a lot of data through in these tests to demonstrate their ability to do data rates just as high as TDRS, if not higher," Coggins said in a recent presentation to a committee of the National Academies.

Artist's illustration of a laser optical link between a Dragon spacecraft and a Starlink satellite. Credit: SpaceX

Edwards said SpaceX wants to make the laser communication capability available for future Dragon missions and for commercial space stations that may replace the ISS.

Meanwhile, NASA is phasing out the government-owned TDRS network. Coggins said NASA's relay satellites in geosynchronous orbit will remain active through the remaining life of the International Space Station and then will be retired.

"Many of these spacecraft are far beyond their intended service life," Coggins said. "In fact, we’ve retired one recently. We’re getting ready to retire another one. In this period of time, we’re going to retire TDRSs pretty often, and we’re going to get down to just a couple left that will last us into the 2030s."

"We have to preserve capacity as the constellation gets smaller, and we have to manage risks," Coggins said. "So, we made a decision on November 8, 2024, that no new users could come to TDRS. We took it out of the service catalog."

NASA's future satellites in Earth orbit will send their data to the ground through commercial networks like Starlink. The agency has agreements worth more than $278 million with five companies (SpaceX, Amazon, Viasat, SES, and Telesat) to demonstrate how they can replace and improve on the services currently provided by TDRS (pronounced "tee-dress"). These companies are already operating, or will soon deploy, satellites that could provide radio or laser optical communication links with future space stations, science probes, and climate and weather monitoring satellites.

"We're not paying anyone to put up a constellation," Coggins said. After these five companies complete their demonstration phase, NASA will become a subscriber to some or all of their networks.

"Now, instead of a 30-year-old [TDRS] constellation and trying to replenish something that we had before, we've got all these new capabilities, all these new things that weren't possible before, especially optical," Coggins said. "That's going to mean so much with the volume and quality of data that you're going to be able to bring down."

Digital nomads

Chun and his crewmates didn't use the Starlink connection to send down any prize-winning discoveries about the Universe, or data for a comprehensive global mapping survey. Instead, the Fram2 crew used the connection for video calls and text messages with their families through tablets and smartphones linked to a Wi-Fi router inside the Dragon spacecraft.

"Are we the first generation of digital nomad in space?" Chun asked his followers in one X post.

"It was not 100 percent available, but when it was, it was really fast," Chun wrote of the Internet connection in an email to Ars.
He told us he used an iPhone 16 Pro Max for his 4K videos. From some 200 miles (300 kilometers) up, the phone's 48-megapixel camera, with a simulated optical zoom, brought out the finer textures of ice sheets, clouds, water, and land formations.

While the flight was fully automated, SpaceX trained the Fram2 crew to live and work inside the Dragon spacecraft and to take over manual control if necessary. None of the Fram2 crew members had a background in spaceflight or in any part of the space industry before they started preparing for their mission. Notably, it was the first human spaceflight mission to low-Earth orbit without a trained airplane pilot on board.

Chun Wang, far right, extends his arm to take an iPhone selfie moments after splashdown in the Pacific Ocean. Credit: SpaceX

Their nearly four days in orbit amounted largely to a sightseeing expedition. Alongside Chun, Mikkelsen put her filmmaking expertise to use by shooting video from Dragon's cupola. Before the flight, Mikkelsen said she wanted to create an immersive 3D account of her time in space. In some of Chun's videos, Mikkelsen is seen working with a V-RAPTOR 8K VV camera from Red Digital Cinema, a device that sells for approximately $25,000, according to the manufacturer's website.

The crew spent some of their time performing experiments, including the first X-ray of a human in space. Scientists gathered some useful data on the effects of radiation on humans in space because Fram2 flew in a polar orbit, where the astronauts were exposed to higher doses of ionizing radiation than a person might receive on the International Space Station.

After they splashed down in the Pacific Ocean at the end of the mission, the Fram2 astronauts disembarked from the Dragon capsule without the assistance of SpaceX ground teams, which typically offer a helping hand for balance as crews readjust to gravity. This demonstrated how people might exit their spaceships on the Moon or Mars, where no one will be there to greet them.
Going into the flight, Chun wanted to see Antarctica and Svalbard, the Norwegian archipelago north of the Arctic Circle where he lives. In more than 400 human spaceflight missions from 1961 until this year, nobody had ever flown in an orbit directly over the poles.

Sophisticated satellites routinely fly over the polar regions to take high-resolution imagery and measure things like sea ice. The Fram2 astronauts' observations of the Arctic and Antarctic may not match what satellites can see, but their experience has some lasting cachet, standing alone among all who have flown to space before.

"People often refer to Earth as a blue marble planet, but from our point of view, it's more of a frozen planet," Chun told Ars.

Stephen Clark
Space Reporter
Stephen Clark is a space reporter at Ars Technica, covering private space companies and the world’s space agencies. Stephen writes about the nexus of technology, science, policy, and business on and off the planet.

WWW.INFORMATIONWEEK.COM
Edge AI: Is it Right for Your Business?
John Edwards, Technology Journalist & Author
April 22, 2025

If you haven't yet heard about edge AI, you no doubt soon will. To listen to its many supporters, the technology is poised to streamline AI processing.

Edge AI presents an exciting shift, says Baris Sarer, global leader of Deloitte's AI practice for technology, media, and telecom. "Instead of relying on cloud servers -- which require data to be transmitted back and forth -- we're seeing a strategic deployment of artificial intelligence models directly onto the user’s device, including smartphones, personal computers, IoT devices, and other local hardware," he explains via email. "Data is therefore both generated and processed locally, allowing for real-time processing and decision-making without the latency, cost, and privacy considerations associated with public cloud connections."

Multiple Benefits

By reducing latency and improving response times -- since data is processed close to where it's collected -- edge AI offers significant advantages, says Mat Gilbert, head of AI and data at Synapse, a unit of management consulting firm Capgemini Invent. It also minimizes data transmission over networks, improving privacy and security, he notes via email. "This makes edge AI crucial for applications that require rapid response times, or that operate in environments with limited or high-cost connectivity." This is particularly true when large amounts of data are collected, or when there's a need for privacy and/or keeping critical data on-premises.

Initial Adopters

Edge AI is a foundational technology that can drive future growth, transform operations, and enhance efficiencies across industries. "It enables devices to handle complex tasks independently, transforming data processing and reducing cloud dependency," Sarer says. Examples include:

- Healthcare. Enhancing portable diagnostic devices and real-time health monitoring, delivering immediate insights and potentially lifesaving alerts.
- Autonomous vehicles. Allowing real-time decision-making and navigation, ensuring safety and operational efficiency.
- Industrial IoT systems. Facilitating on-site data processing, streamlining operations and boosting productivity.
- Retail. Enhancing customer experiences and optimizing inventory management.
- Consumer electronics. Elevating user engagement by improving photography, voice assistants, and personalized recommendations.
- Smart cities. Edge AI can play a pivotal role in managing traffic flow and urban infrastructure in real time, contributing to improved city planning.

First Steps

Organizations considering edge AI adoption should start with a concrete business use case, advises Debojyoti Dutta, vice president of engineering AI at cloud computing firm Nutanix. "For example, in retail, one needs to analyze visual data using computer vision for restocking, theft detection, and checkout optimization," he says in an online interview. KPIs could include increased revenue due to restocking (quicker restocking leads to more revenue and reduced cart abandonment) and theft detection.

The next step, Dutta says, should be choosing the appropriate AI models and workflows, ensuring they meet each use case's needs. Finally, when implementing edge AI, it's important to define a combined edge-based data and AI architecture and stack, Dutta says. The architecture and stack may be hierarchical, mirroring the business structure. "In retail, we can have a lower cost/power AI infrastructure at each store and more powerful edge devices at the distribution centers."

Adoption Challenges

While edge AI promises numerous benefits, there are also several important drawbacks. "One of the primary challenges is the complexity of deploying and managing AI models on edge devices, which often have limited computational resources compared to centralized cloud servers," Sarer says. "This can necessitate significant optimization efforts to ensure that models run efficiently on these devices."

Another potential sticking point is the initial cost of building an edge infrastructure and the need for specialized talent to develop and maintain edge AI solutions. "Security considerations should also be taken into account, since edge AI requires additional end-point security measures as the workloads are distributed," Sarer says.

Despite these challenges, edge AI's benefits of real-time data processing, reduced latency, and enhanced data privacy usually outweigh the drawbacks, Sarer says. "By carefully planning and addressing these potential issues, organizations can successfully leverage edge AI to drive innovation and achieve their strategic objectives."

Perhaps the biggest challenge facing potential adopters is the computational constraints inherent in edge devices. By definition, edge AI models run on resource-constrained hardware, so deployed models generally require tuning to specific use cases and environments, Gilbert says. "These models can require significant power to operate effectively, which can be challenging for battery-powered devices, for example." Additionally, balancing response-time needs against the need for high accuracy demands careful management.

Looking Ahead

Edge AI is evolving rapidly, with hardware becoming increasingly capable as software advances continue to reduce AI models' complexity and size, Gilbert says. "These developments are lowering the barriers to entry, suggesting an increasingly expansive array of applications in the near future and beyond."

About the Author
John Edwards, Technology Journalist & Author
John Edwards is a veteran business technology journalist. His work has appeared in The New York Times, The Washington Post, and numerous business and technology publications, including Computerworld, CFO Magazine, IBM Data Management Magazine, RFID Journal, and Electronic Design. He has also written columns for The Economist's Business Intelligence Unit and PricewaterhouseCoopers' Communications Direct. John has authored several books on business technology topics. His work began appearing online as early as 1983. Throughout the 1980s and 90s, he wrote daily news and feature articles for both the CompuServe and Prodigy online services. His "Behind the Screens" commentaries made him the world's first known professional blogger.
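Dutta's hierarchical retail layout, with lower-cost inference hardware in each store and more powerful edge devices at distribution centers, can be sketched as a two-tier escalation path. The Python below is a hypothetical illustration under stated assumptions, not a design from the article or from Nutanix: the model functions, the `shelf_fill` input, the 30% restock cutoff, and the 0.8 confidence threshold are all invented for the example. The store tier answers when it is confident and escalates otherwise. This turns Gilbert's trade-off between response time and accuracy into a single tunable parameter.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical: a "model" is any function mapping an input record to
# (label, confidence), with confidence in [0.0, 1.0].
Model = Callable[[dict], Tuple[str, float]]


@dataclass
class Prediction:
    label: str
    confidence: float
    tier: str  # "store" or "distribution_center"


def make_tiered_classifier(
    store_model: Model, dc_model: Model, threshold: float = 0.8
) -> Callable[[dict], Prediction]:
    """Answer locally when the small in-store model is confident enough;
    otherwise escalate the same input to the larger distribution-center model."""

    def classify(record: dict) -> Prediction:
        label, conf = store_model(record)
        if conf >= threshold:
            return Prediction(label, conf, "store")
        # Low confidence: pay the extra latency/network cost for a second opinion.
        label, conf = dc_model(record)
        return Prediction(label, conf, "distribution_center")

    return classify


# Stand-in models for a shelf-restocking use case (illustrative only).
RESTOCK_CUTOFF = 0.3  # assumed: restock when a shelf is under 30% full


def store_model(record: dict) -> Tuple[str, float]:
    fill = record["shelf_fill"]
    label = "restock" if fill < RESTOCK_CUTOFF else "ok"
    # Confidence drops as the reading approaches the cutoff.
    confidence = min(1.0, abs(fill - RESTOCK_CUTOFF) * 5)
    return label, confidence


def dc_model(record: dict) -> Tuple[str, float]:
    # Placeholder for a heavier model; here it simply answers with high confidence.
    fill = record["shelf_fill"]
    return ("restock" if fill < RESTOCK_CUTOFF else "ok"), 0.99


classify = make_tiered_classifier(store_model, dc_model, threshold=0.8)

if __name__ == "__main__":
    for fill in (0.05, 0.28, 0.9):
        print(fill, classify({"shelf_fill": fill}))
```

Raising `threshold` sends more requests up to the distribution-center tier, which trades latency and network traffic for accuracy. Lowering it keeps more decisions inside the store. A real deployment would also have to handle the distribution-center tier being unreachable, which this sketch ignores.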

WWW.INFORMATIONWEEK.COM
CIO Angelic Gibson: Quell AI Fears by Making Learning Fun
Lisa Morgan, Freelance Writer
April 22, 2025

Effective technology leadership today prioritizes people as much as technology. Just ask Angelic Gibson, CIO at accounts payable software provider AvidXchange.

Gibson began her career in 1999 as a software engineer and used her programming and people skills to consistently climb the corporate ladder, working for companies including mattress company Sleepy’s and cosmetics company Estee Lauder. By the time she landed at Stony Brook University, she had worked her way up to technology strategist and senior software engineer/architect, before becoming director of IT operations for American Tire Distributors. By 2013, she was SVP of information technology for technology solutions provider TKXS, and for the past seven years she’s been CIO at AvidXchange.

“I moved from running large enterprise IT departments to SaaS companies, so building SaaS platforms and taking them to market while also running internal IT delivery is what I’ve been doing for the past 13 years. I love building world class technology that scales,” says Gibson. “It's exciting to me because technology is hard work and you’re always [facing] a plethora of problems, so you wake up every day knowing you get to solve difficult, complex problems. Very few people handle complex transformations well, so getting to do complex transformations with really smart people is invigorating. It inspires me to come to work every day.”

One thing Gibson and her peers realized is that AI is anything but static. Its capabilities continue to expand as it becomes more sophisticated, so human-machine partnerships necessarily evolve. Many organizations have experienced significant pushback from workers who think AI is an existential threat. Organizations downsizing through intelligent automation, and the resulting headlines, aren’t helping to ease AI-related fears.
Bottom line, it’s a change management issue that needs to be addressed thoughtfully.

“Technology has always been about increasing automation to ensure quality and increase speed to market, so to me, it's just another tool to do that,” says Gibson. “You’ve got to meet people where they're at, so we do a lot of talking about fears and constraints. Let’s put it on the table, let’s talk about it, and then let’s shift to the art of the possible. What if [AI] doesn't take your job? What could you be doing?”

The point is to get employees to reimagine their roles. To facilitate this, Gibson identified people who could be AI champions, such as principal senior engineers who would love to automate lower-level thinking so they can spend more time thinking critically.

“What we have found is we’ve met resistance from more senior level talent versus new talent, such as individuals working in business units who have learned AI to increasingly automate their roles,” says Gibson. “We have tons of use cases like that. Many employees have automated their traditional business operations role and now they're helping us increase automation throughout the enterprise.”

Making AI Fun to Learn

Today’s engineers are constantly learning to keep pace with technology changes. Gibson has gamified learning by showcasing who’s leveraging AI in interesting ways, which has increased productivity and quality while benefiting AvidXchange customers.

“We gamify it through hackathons and showcase it to the whole company at an all-hands meeting, just taking a moment to recognize awesome work,” says Gibson. “And then there are the brass tacks: We’ve got to get work done and have real productivity gains that we're accountable for driving.”

Over the last five years, Gibson has been creating a learning environment that curates the classes she wants every technologist to take and understand, such as a prompt engineering certification course. Each technologist's progress is also tracked.
“We certify compliance and security annually. We do the same thing with any new tech skill that we need our teammates to learn,” says Gibson. “We have them go through certification and compliance training on that skill set to show that they’re participating in the training. It doesn't matter if you’re a business analyst or an engineer, everyone's required to do it, because AI can have a positive impact in any role.”

Establish a Strong Foundation for Learning

Gibson has also established an AI Center of Excellence (CoE), made up of 22 internal AI thought leaders who are tasked with keeping up with all the trends. The group is responsible for bringing in different GenAI tools and deep learning technologies. They’re also responsible for running proofs of concept (POCs). When a project is ready for production, the CoE ensures it has passed all AvidXchange cybersecurity requirements.

“Any POC must prove that it's going to add value,” says Gibson. “We’re not just throwing a slew of technology out there for technology’s sake, so we need to make sure that it’s fit for purpose and that it works in our environment.”

To help ensure the success of projects, Gibson has established a hub-and-spoke operating model, so every business unit has an AI champion who works in partnership with the CoE. In addition, AvidXchange made AI training mandatory as of January 2024, because AI is central to its accounts payable solution. In fact, the largest customer use cases have achieved 99% payment processing accuracy using AI to extract data from PDFs and do quality checks, though humans do a final review to ensure that level of accuracy. “What we’ve done is to take our customer-facing tool sets or internal business operations and hook it up to that data model.
It can answer questions like, ‘What’s the status of my payment?’ We are now turning the lights on for AI agents to be available to our internal and external customer bases.”

Some employees working in different business units have transitioned to Gibson’s team specifically to work on AI. While they don’t have the STEM background traditional IT candidates have, they have deep domain expertise. AvidXchange upskills these employees on STEM so they can understand how AI works.

“If you don't understand how an AI agent works, it’s hard for you to understand if it’s hallucinating or if you're going to have quality issues,” says Gibson. “So, we need to make sure the answers are sound and accurate by making the agents quote their sources, so it’s easier for people to validate outputs.”

Focus on Optimization and Acceleration

Instead of looking at AI as a human replacement, Gibson believes it’s wiser to harness AI-assisted ways of working to increase productivity and efficiency across the board. For example, AvidXchange specifically tracks KPIs designed to drive improvement. In addition, its success targets are broken down from annual goals into quarterly and monthly ones to ensure the KPIs are being met. If they aren't, the status updates enable the company to course-correct as necessary.

“We have three core mindsets: Connected as people, growth-minded, and customer-obsessed. Meanwhile, we’re constantly thinking about how we can go faster and deliver higher quality for our customers and nurture positive relationships across the organization so we can achieve a culture of candor and care,” says Gibson. “We have the data so we can see who’s adopting tools and who isn’t, and for those who aren’t, we have a conversation about any fear they may have and how we can work through that together. We [also] want a good ecosystem of proven technologies that are easy to use.
It’s also important that people know they can come to us because it’s a trusted partnership.”

She also believes success is a matter of balance. “Any time you make a sweeping change that feels urgent, the human component can get lost, so it’s important to bring people along,” says Gibson. “There’s this art right now of how fast you can go safely while not losing people in the process. You need to constantly look at that to make sure you’re in balance.”

About the Author: Lisa Morgan, Freelance Writer

Lisa Morgan is a freelance writer who covers business and IT strategy and emerging technology for InformationWeek. She has contributed articles, reports, and other types of content to many technology, business, and mainstream publications and sites, including tech pubs, The Washington Post, and The Economist Intelligence Unit. Frequent areas of coverage include AI, analytics, cloud, cybersecurity, mobility, software development, and emerging cultural issues affecting the C-suite.
