WWW.ARCHDAILY.COM
Cheer Kindergarten / HIBINOSEKKEI + Youji no Shiro
Kindergarten, Shenzhen, China
Architects: HIBINOSEKKEI, Youji no Shiro
Area: 4240 m²
Year: 2023
Photographs: Siming Wu

Text description provided by the architects. This is an interior project for a newly established kindergarten located in Nanshan District, Shenzhen, China. The kindergarten is situated in a region known for trade and IT-related businesses, and the design concept was developed to encourage children's spontaneous physical activity, reflecting the area's dynamic growth and development.

At the center of the existing building is a four-story atrium, surrounded by large climbing net structures that connect the three levels on both the east and west sides. The atrium links various spaces within the building. It serves not only as vertical circulation but also as a place for social interaction and a challenge for the children. Large windows and reading areas are placed in the hallways facing the atrium, allowing natural light to enter the classrooms while transforming the typically monotonous hallway into a space for play and learning.

Additionally, a large tree is planted in the courtyard so that children can observe its growth and transformation over time, allowing them to feel the flow of nature no matter where they stand in the building. In addition to the play and learning areas designed around the concept of a 'bird's nest,' representing nurturing life, it also serves as the symbol of the kindergarten, resulting in a space that imprints an everlasting memory on children.

The interior design focuses on natural materials, such as earth walls inspired by the site's geological layers (old red sandstone) and bent laminated bamboo that represents a bird's nest. This approach aims to stimulate both visual and tactile senses while fostering an understanding of nature and the local environment.

To reduce the environmental impact of construction waste, most of the existing building's exterior walls were left unchanged, with a few large openings and foldable doors added to connect the indoor activities to the outside. Together with operable glass skylights, these features create a flow of air around the atrium and control light and wind without relying heavily on mechanical systems.

Limited space is a common challenge for kindergartens in urban areas. To address it, a spatial garden with no dead ends was created by connecting the basement and ground floor gardens with stairs and grass-covered slopes, ensuring that children have plenty of opportunities for physical activity and a variety of daily experiences. The design encourages children's curiosity and interest according to their developmental stages, naturally stimulating their bodies and minds to promote growth in their everyday lives.

Published on January 09, 2025
Cite: "Cheer Kindergarten / HIBINOSEKKEI + Youji no Shiro" 09 Jan 2025. ArchDaily. Accessed . <https://www.archdaily.com/1025442/cheer-kindergarten-hibinosekkei-plus-youji-no-shiro> ISSN 0719-8884

WWW.ARCHDAILY.COM
Sorbet Showroom / MuseLAB
Showroom, Mumbai, India
Architects: MuseLAB
Area: 2600 m²
Year: 2024
Manufacturers: AQUANT, Bharat Furnishing, Ledlum Lighting Solutions LLP, Parman Designs, Quantum AC, RiverBoat Acoustics
Photographs: Courtesy of MuseLAB

Sorbet. The tartness of berries and the zest of lime. Icy, invigorating, and its texture is a fine crossover between granular and velvet. Here's a legendary sorbet duo packing a punch as one saunters through sunny lanes in faraway lands and, interestingly, an equally delectable palette as it washes over the canvas of space. Reprising the experience of bathware discovery lounges, Sorbet fuses a luxe milieu with a penchant for color, no holds barred! The archetypal configuration of bath solution experience centers has been traded for an intuitive and intrigue-driven rendezvous, celebrating the patron's experience in a newfound light.

A primo zip code in Southern Mumbai poses as Sorbet's playground of color and geometry, formerly inhabited by a celebrated rug atelier. The studio's design endeavor saw merit in preserving (and celebrating) the site's inherent features, its 12-foot-high walls, deep volume, and porous relationship to the elongated street being key aspects. The building's façade exhibits a permeable demeanor, its skin a composition of various kinds of glass: fluted, clear, and a fabricated trellis set into the lime-hued cuboid rising along the street's edge. The porch-like entrance is veiled under the shade of the glass brick trellis with embedded planters sprouting plum-hued foliage and a carpet of random rubble-style Kota underfoot. The façade gives away subtle hints of the visceral rush of hues and elements one encounters inside, the arched, terrazzo-handle-embellished doors waiting to be swung open!

Inside, the visual is comparable to being set amidst a monolith of greens, the hue's omnipresence punctuated by levitating halos (bespoke lighting systems) and I-section stanchions bathed in a berry hue. The design intent was steadfast: to enable patrons to experience the entirety of the store's volume no matter where they stand within the blueprint; the vistas were seamless and all-encompassing through the rectilinear volume's axis. The services remain intentionally exposed, HVAC and ducting darting through the space's length with the marsala tone washing over them.

Sorbet's identity is spearheaded by the singularity the material palette dons. The mint hue manifests as textured paint, candy-esque terrazzo, and Piccolo mosaic segments, earmarking various nodes and moments through the center. The flooring is a morphing landscape of smooth Kota stone, flitting between jagged chunks and smooth curvilinear pieces embedded within rivulets of ivory grout.

Movement is imagined as a layout of intersecting circles, creating semi-open enclosures hosting product typologies. The circles assume various diameters and envelope heights, allowing one's sight to gather glimpses of the store. The display systems within the enclosures result from intense conceptualization. Their curved and tiered forms echo the overruling geometry. Cloche-style pedestals, curved units extending from the enclosures, and circular display systems dot the layout, creating moments of pause for patrons to experience the gamut of products.

For the design team, Sorbet went far beyond recalibrating what 'conventional' experience centers could look like. It was a sojourn that beckoned one's inner child to come to play, marinate in visual storytelling, and create a space that allowed one to willingly abandon all notions of the 'expected' at the door.

Published on January 09, 2025
Cite: "Sorbet Showroom / MuseLAB" 09 Jan 2025. ArchDaily. Accessed . <https://www.archdaily.com/1025440/sorbet-showroom-muselab> ISSN 0719-8884

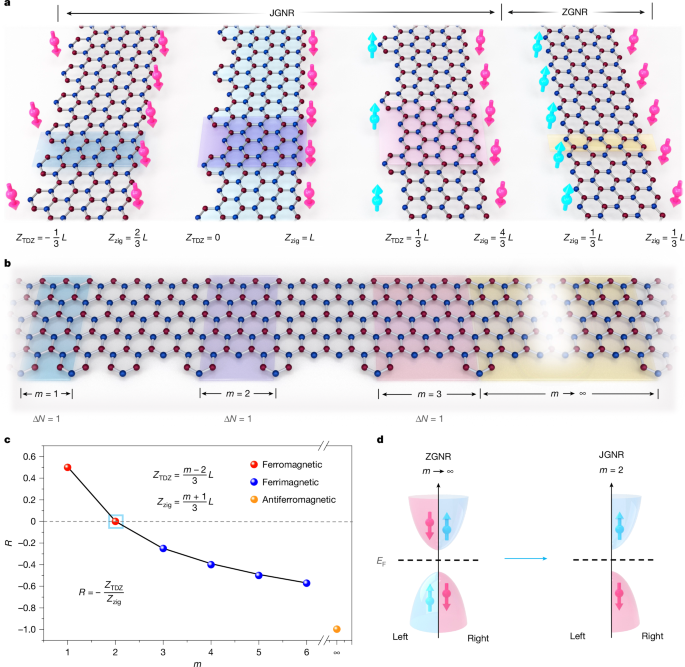

WWW.NATURE.COM
Janus graphene nanoribbons with localized states on a single zigzag edge
Nature, Published online: 08 January 2025; doi:10.1038/s41586-024-08296-x
Janus graphene nanoribbons with localized states on a single zigzag edge are fabricated by introducing a topological defect array of benzene motifs on the opposite zigzag edge, to break the structural symmetry.

WWW.NATURE.COM
Physicists describe exotic paraparticles that defy categorization
Nature, Published online: 08 January 2025; doi:10.1038/d41586-025-00030-5
Theoretical study predicts the existence of particles that are neither bosons nor fermions and hints at potential applications in quantum computing.

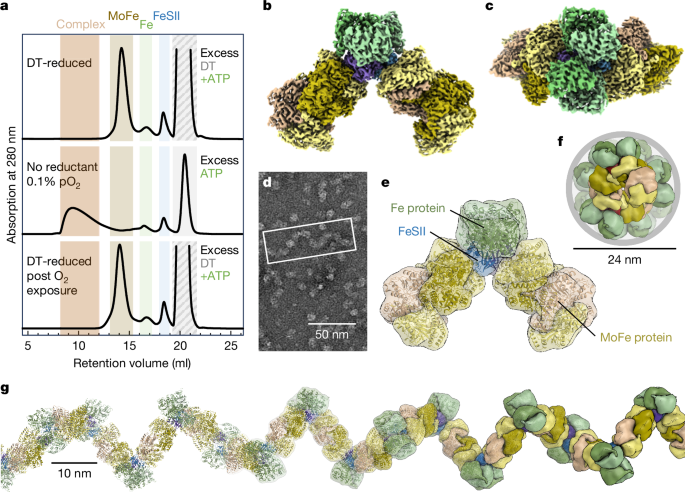

WWW.NATURE.COM
Conformational protection of molybdenum nitrogenase by Shethna protein II
Nature, Published online: 08 January 2025; doi:10.1038/s41586-024-08355-3
A small ferredoxin (Shethna protein II) of Azotobacter vinelandii can provide protection from O2 stress that may be crucial for the maintenance of recombinant nitrogenase in food crops.

GAMERANT.COM
Wuthering Waves: Nightmare Echoes, Explained
Nightmare Echoes are variants of existing Echoes in Wuthering Waves that greatly affect how players use their Resonators. They are inherently stronger than their regular counterparts, and if you want to get the most out of your characters, getting a Nightmare Echo should be on your list of priorities.

GAMERANT.COM
The Best PlayStation Games Turning 20 In 2025
The PS2 was an unprecedented success following the PS1, which was Sony's debut console. It reigned supreme for decades but was just recently dethroned in sales by the Switch in 2024. 2005 came near the end of the PS2's peak, but alongside it, North America got the PSP, which debuted in the West a year after Japan.

GAMERANT.COM
Wuthering Waves: Treasures in the Painting Guide (Hidden Side Quest)
Rinascita, the region introduced in Version 2.0 of Wuthering Waves, offers players a wealth of new areas to explore, Echoes to collect, and quests to complete. Some of these quests are hidden from the map, requiring players to venture out and discover them on their own.
