WWW.NYTIMES.COM

Meta to End Fact-Checking Program in Shift Ahead of Trump Term

The social networking giant will stop using third-party fact checkers and instead rely on users to add notes to posts. President-elect Trump and his conservative allies said they were pleased.

WWW.NYTIMES.COM

Anthropic in Talks for $2 Billion Funding Round

The financing efforts follow new funding rounds by Elon Musk's xAI and the market leader, OpenAI, which is now valued at $157 billion.

APPLEINSIDER.COM

iPad 11, iPad Air, iPhone SE 4 expected in April, despite January launch rumors

Rumors spread on Tuesday that Apple would release the new iPhone SE and iPads alongside iOS 18.3 later in January, but they have been quickly dismissed by a more reliable source.

Image caption: iPhone SE 4 could resemble the iPhone 14 with a single rear camera

Apple is expected to have a packed early 2025 lineup spread from now until WWDC. However, recent rumors have assumed an even faster timeline for some products. That has all been put to bed thanks to an insight from a reliable source making better sense of the available data.

According to a post from Mark Gurman, Apple is indeed developing the iPhone SE 4 and new iPads with iOS 18.3 in mind for the launch. However, he states that Apple would release these products "by April if all goes to plan."

Rumor Score: Likely

ARCHINECT.COM

How will Trump's tariff plans impact the U.S. lumber market?

As the imposition of a threatened 25% blanket tariff on Canadian goods, including softwood lumber products, looms for the end of January, many practices are concerned about how cost increases might impact procurements and the cost of building, as the country's production currently accounts for an almost 30% market share.

This uncertainty is exacerbated by the fact that many U.S. lumber mills have been affected by high interest rates and labor shortages in recent years, driving down annual production capacity to a point where they alone are incapable of meeting demands, says North Carolina State University economist Rajan Parajuli in a recent interview.

This would of course affect the nationwide cost of construction using such products. The most recent Associated Builders and Contractors (ABC) analysis of the U.S. Bureau of Labor Statistics Producer Price Index data showed an 11.8% uptick in the cost of softwood lumber when compared with the same 12-month period from the year before...

EN.WIKIPEDIA.ORG

Wikipedia picture of the day for January 8

A lime is a citrus fruit, which is typically round, lime green in color, 3–6 centimetres (1.2–2.4 in) in diameter, and contains acidic juice vesicles. There are several species of citrus trees whose fruits are called limes, including the Key lime, Persian lime, kaffir lime, finger lime, blood lime, and desert lime. Limes are a rich source of vitamin C, are sour, and are often used to accent the flavours of foods and beverages. They are grown year-round, originally in tropical South and Southeast Asia but now in much of the world. Plants with fruit called "limes" have diverse genetic origins; limes do not form a monophyletic group. This photograph shows two limes grown in Brazil, one whole and one halved, and was focus-stacked from 23 images.

Photograph credit: Ivar Leidus

EN.WIKIPEDIA.ORG

On this day: January 8

Image caption: Blackstone Library

- 1697: Scottish student Thomas Aikenhead became the last person in Great Britain to be executed for blasphemy.
- 1904: Blackstone Library (pictured), the first branch of the Chicago Public Library system, was dedicated.
- 1977: Three bombs attributed to Armenian nationalists exploded across Moscow, killing seven people and injuring 37 people.
- 1981: In Trans-en-Provence, France, a local farmer reported a UFO sighting claimed to be "perhaps the most completely and carefully documented sighting of all time".
- 2011: Jared Lee Loughner opened fire at a public meeting held by U.S. representative Gabby Giffords in Tucson, Arizona, killing six people and injuring twelve others.

Prince Albert Victor (b. 1864); Mary Arthur McElroy (d. 1917); Joseph Franklin Rutherford (d. 1942); T. J. Hamblin (d. 2012)

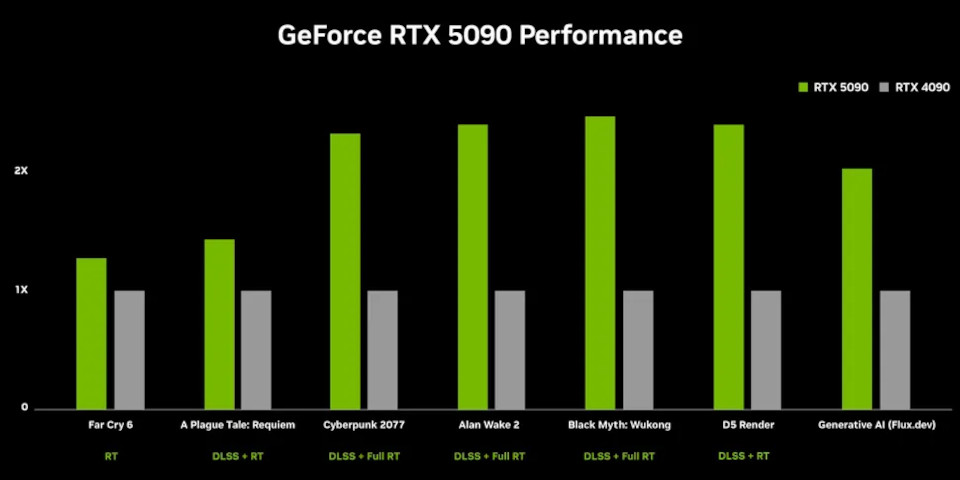

WWW.CGCHANNEL.COM

NVIDIA unveils GeForce RTX 5090, 5080, 5070 Ti and 5070 GPUs

NVIDIA has unveiled the GeForce RTX 5090, GeForce RTX 5080, GeForce RTX 5070 Ti and GeForce RTX 5070: the top-of-the-range cards in its GeForce RTX 50 Series of consumer GPUs.

The GPUs, which will all be available by February, and which are priced between $1,999 and $549, are the first desktop cards to use NVIDIA's next-gen Blackwell GPU architecture.

Although they are primarily gaming cards, NVIDIA also markets the RTX 50 Series to artists, so below, we've rounded up their key specs for DCC work, and performance comparisons for CG software.

NVIDIA's GeForce RTX 50 Series GPUs and previous-gen counterparts

| | RTX 5090 | RTX 4090 | RTX 5080 | RTX 4080 | RTX 5070 Ti | RTX 4070 Ti | RTX 5070 | RTX 4070 |
|---|---|---|---|---|---|---|---|---|
| Architecture | Blackwell | Ada Lovelace | Blackwell | Ada Lovelace | Blackwell | Ada Lovelace | Blackwell | Ada Lovelace |
| CUDA cores | 21,760 | 16,384 | 10,752 | 9,728 | 8,960 | 7,680 | 6,144 | 5,888 |
| Tensor cores* | 680 | 512 | 336 | 304 | 280 | 240 | 192 | 184 |
| RT cores* | 170 | 128 | 84 | 76 | 70 | 60 | 48 | 46 |
| Base clock (GHz) | 2.01 | 2.23 | 2.30 | 2.21 | 2.30 | 2.31 | 2.16 | 1.92 |
| Boost clock (GHz) | 2.41 | 2.52 | 2.62 | 2.51 | 2.45 | 2.61 | 2.51 | 2.48 |
| Compute performance, FP32 (Tflops)* | 104.8 | 82.6 | 56.3 | 48.7 | 44.4 | 40.1 | 31.0 | 29.2 |
| GPU memory | 32 GB GDDR7 | 24 GB GDDR6X | 16 GB GDDR7 | 16 GB GDDR6X | 16 GB GDDR7 | 12 GB GDDR6X | 12 GB GDDR7 | 12 GB GDDR6X |
| TGP | 575 W | 450 W | 360 W | 320 W | 300 W | 285 W | 250 W | 200 W |
| Release date | 2025 | 2022 | 2025 | 2022 | 2025 | 2023 | 2025 | 2023 |
| MSRP at launch | $1,999 | $1,599 | $999 | $1,199 | $749 | $799 | $549 | $599 |

*Data taken from third-party websites.

Key specifications for DCC work

In terms of core specs, the new GeForce RTX 50 Series GPUs are a sizeable improvement over their counterparts from the current-generation GeForce RTX 40 Series.

All have higher counts of the three key hardware core types: CUDA for general GPU compute, Tensor for AI operations, and RT for hardware-accelerated ray tracing.

The RT cores are also now fourth-generation, and the Tensor cores fifth-generation, although NVIDIA hasn't provided much detail on how that will affect GPU rendering performance.

What will definitely affect GPU rendering is the increased GPU memory capacity of the top-of-the-range GeForce RTX 5090: up to 32GB, from 24GB in the current-gen RTX 4090.

The GeForce RTX 5070 Ti also has more GPU memory than its predecessor, although there is no change with either the GeForce RTX 5080 or GeForce RTX 5070.

In addition, the entire GeForce RTX 50 Series uses the latest GDDR7 memory, rather than the older GDDR6X memory used in the GeForce RTX 40 Series.

There is less change in display connectivity: the GeForce RTX 50 Series cards have one HDMI and three DisplayPort connectors, the same as the GeForce RTX 40 Series.

However, they support DisplayPort 2.1, the latest version of the standard (and will support the upcoming DisplayPort 2.1b), whereas the GeForce RTX 40 Series supports DisplayPort 1.4a.

Those increases in processing power come with a corresponding increase in power consumption: all four GeForce RTX 50 Series cards have a higher Total Graphics Power than their precursors.

However, they are also more compact (unlike the high end of the GeForce RTX 40 Series, all are dual-slot cards) and most of them are cheaper than their predecessors, at least at launch.

The exception is the GeForce RTX 5090, which has a launch price of $1,999, up $400 from the GeForce RTX 4090, although NVIDIA claims that it offers double the overall performance.

Benchmarks and performance in DCC applications

Given that the GeForce RTX 50 Series GPUs are gaming cards, most of the performance comparisons that NVIDIA has released are for games rather than CG software.

One exception is architectural GPU renderer D5 Render.

NVIDIA's comparison chart shows over a 2x increase in performance on the GeForce RTX 5090 compared to the current-gen RTX 4090, presumably using the standard D5 Render Benchmark.

Although NVIDIA has a blog post on how the new cards will affect creative workflows, it focuses very much on generative AI rather than DCC software.

However, it does feature a section on video editing and video production, which claims that the GeForce RTX 5090 can export video 60% faster than the current-gen GeForce RTX 4090.

The comparison chart for that claim is taken from a video on DaVinci Resolve, although the blog post also mentions Premiere Pro.

Pricing and release dates

The GeForce RTX 5090 and 5080 will be available on 30 January, priced at $1,999 and $999. The GeForce RTX 5070 Ti and 5070 will be available in February, priced at $749 and $549.

WWW.SMITHSONIANMAG.COM

Biden Establishes Two New National Monuments in California

Image caption: The new Chuckwalla National Monument protects more than 624,000 acres in southern California. (David McNew / Getty Images)

With less than two weeks left in the White House, President Joe Biden has established two new national monuments in California to honor Native American tribes: the Chuckwalla National Monument in southern California and the Sáttítla Highlands National Monument in northern California.

Together, they represent more than 848,000 acres of public lands, which will now be protected from drilling and mining. Native American tribes, with the support of some public officials and conservation groups, had been urging lawmakers to protect both areas.

Biden was scheduled to make a formal announcement from the eastern Coachella Valley earlier today, but the event was canceled due to a fierce windstorm, as the Associated Press' Colleen Long and Matthew Daly report. He will deliver the speech from the White House next week.

Located near Joshua Tree National Park, Chuckwalla National Monument spans 624,000 acres. With its canyons, mountains and desert landscapes, the national monument serves as a habitat for a variety of animals, including desert bighorn sheep, Gila woodpeckers and Agassiz's desert tortoises.

The region is considered sacred to numerous Native American tribes, as it factors into their creation stories. The national monument encompasses the ancestral homelands of the Iviatim, Nüwü, Pipa Aha Macav, Kwatsáan and Maara'yam peoples, according to the U.S. Department of the Interior. It also protects ancient trail systems and artifacts, including ceramics, tools, dwellings and petroglyphs.

"The protection of the Chuckwalla National Monument brings the Quechan people an overwhelming sense of peace and joy," says the Fort Yuma Quechan Tribe in a statement. "Tribes being reunited as stewards of this landscape is only the beginning of much-needed healing and restoration, and we are eager to fully rebuild our relationship to this place."

In addition to its tribal significance, the national monument also protects historic mining shafts and the Bradshaw Trail, an overland stage route used by gold miners and other travelers in the mid-1800s. The site is also home to the remnants of World War II training camps, which were used to prepare soldiers for combat in North Africa.

At the opposite end of the state, not far from the Oregon border, Biden established the Sáttítla Highlands National Monument, which protects more than 224,000 acres.

Indigenous groups have inhabited the area for more than 5,000 years. Today, the site remains culturally and spiritually important to the Pit River, Modoc, Klamath, Shasta, Wintu, Yana, Siletz and Karuk peoples, according to the U.S. Department of Agriculture.

"Sáttítla is not just a piece of land; it's the heart of our heritage and the source of life for current and future generations," Yatch Bamford, chairman of the Pit River Tribe, told the Navajo-Hopi Observer's Stan Bindell in October.

The national monument also encompasses the Medicine Lake Volcano, one of the largest volcanoes in the Cascade Range. The volcano shaped the landscape for millennia, creating natural features like the Giant Crater, a lengthy lava tube system.

The permeable volcanic rock found throughout the region allows rainwater to seep beneath the surface and collect in large underground aquifers, which provide water to northern California communities.

Earlier this week, Biden banned offshore drilling in 625 million acres of federal waters. The ban includes parts of the Northern Bering Sea, which many tribes in Alaska have been trying to protect for decades, per Native News Online's Brian Edwards.

According to the White House, Biden has created ten new national monuments, expanded two existing national monuments and restored three others during his presidency.

VENTUREBEAT.COM

Meta proposes new scalable memory layers that improve knowledge, reduce hallucinations

As enterprises continue to adopt large language models (LLMs) in various applications, one of the key challenges they face is improving the factual knowledge of models and reducing hallucinations. In a new paper, researchers at Meta AI propose "scalable memory layers," which could be one of several possible solutions to this problem.

Scalable memory layers add more parameters to LLMs to increase their learning capacity without requiring additional compute resources. The architecture is useful for applications where you can spare extra memory for factual knowledge but also want the inference speed of nimbler models.

Dense and memory layers

Traditional language models use "dense layers" to encode vast amounts of information in their parameters. In dense layers, all parameters are used at their full capacity and are mostly activated at the same time during inference. Dense layers can learn complex functions, and increasing their size requires additional computational and energy resources.

In contrast, for simple factual knowledge, much simpler layers with associative memory architectures would be more efficient and interpretable. This is what memory layers do. They use simple sparse activations and key-value lookup mechanisms to encode and retrieve knowledge. Sparse layers take up more memory than dense layers but only use a small portion of the parameters at once, which makes them much more compute-efficient.

Memory layers have existed for several years but are rarely used in modern deep learning architectures. They are not optimized for current hardware accelerators.

Current frontier LLMs usually use some form of "mixture of experts" (MoE) architecture, which uses a mechanism vaguely similar to memory layers. MoE models are composed of many smaller expert components that specialize in specific tasks. At inference time, a routing mechanism determines which expert becomes activated based on the input sequence. PEER, an architecture recently developed by Google DeepMind, extends MoE to millions of experts, providing more granular control over the parameters that become activated during inference.

Upgrading memory layers

Memory layers are light on compute but heavy on memory, which presents specific challenges for current hardware and software frameworks. In their paper, the Meta researchers propose several modifications that solve these challenges and make it possible to use them at scale.

Figure caption: Memory layers can store knowledge in parallel across several GPUs without slowing down the model (source: arXiv)

First, the researchers configured the memory layers for parallelization, distributing them across several GPUs to store millions of key-value pairs without changing other layers in the model. They also implemented a special CUDA kernel for handling high-memory-bandwidth operations. And they developed a parameter-sharing mechanism that supports a single set of memory parameters across multiple memory layers within a model. This means that the keys and values used for lookups are shared across layers.

These modifications make it possible to implement memory layers within LLMs without slowing down the model.

"Memory layers with their sparse activations nicely complement dense networks, providing increased capacity for knowledge acquisition while being light on compute," the researchers write. "They can be efficiently scaled, and provide practitioners with an attractive new direction to trade-off memory with compute."

To test memory layers, the researchers modified Llama models by replacing one or more dense layers with a shared memory layer. They compared the memory-enhanced models against the dense LLMs as well as MoE and PEER models on several tasks, including factual question answering, scientific and common-sense world knowledge and coding.

Figure caption: A 1.3B memory model (solid line) trained on 1 trillion tokens approaches the performance of a 7B model (dashed line) on factual question-answering tasks as it is given more memory parameters (source: arXiv)

Their findings show that memory models improve significantly over dense baselines and compete with models that use 2X to 4X more compute. They also match the performance of MoE models that have the same compute budget and parameter count. The memory models' performance is especially notable on tasks that require factual knowledge. For example, on factual question-answering, a memory model with 1.3 billion parameters approaches the performance of Llama-2-7B, which has been trained on twice as many tokens and 10X more compute.

Moreover, the researchers found that the benefits of memory models remain consistent with model size as they scaled their experiments from 134 million to 8 billion parameters.

"Given these findings, we strongly advocate that memory layers should be integrated into all next generation AI architectures," the researchers write, while adding that there is still a lot more room for improvement. "In particular, we hope that new learning methods can be developed to push the effectiveness of these layers even further, enabling less forgetting, fewer hallucinations and continual learning."
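To make the key-value lookup idea from the VentureBeat piece concrete, here is a minimal sketch in plain Python. It is not the Meta researchers' implementation: the function name `memory_lookup`, the brute-force scoring of every key, and the toy vectors are all assumptions for illustration. The sketch only shows the general pattern the article describes, in which a query is compared against stored keys, a handful of entries are activated, and their values are blended into the output.

```python
import math


def memory_lookup(query, keys, values, k=2):
    """Toy sparse key-value memory lookup (illustrative assumption, not Meta's code).

    Scores every key against the query with a dot product, keeps only the
    top-k entries, and returns a softmax-weighted sum of their values.
    """
    # Similarity of the query to each stored key.
    scores = [sum(q * x for q, x in zip(query, key)) for key in keys]

    # Sparse activation: only the k best-matching slots take part.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]

    # Softmax over the selected scores (shifted by the max for stability).
    peak = max(scores[i] for i in top)
    weights = [math.exp(scores[i] - peak) for i in top]
    total = sum(weights)
    weights = [w / total for w in weights]

    # Weighted sum of the selected value vectors.
    dim = len(values[0])
    output = [0.0] * dim
    for w, i in zip(weights, top):
        for d in range(dim):
            output[d] += w * values[i][d]
    return output, top


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.9, 0.1]]
    values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0], [9.0, 1.0]]
    output, selected = memory_lookup([1.0, 0.0], keys, values, k=2)
    print("selected slots:", selected)
    print("output:", output)
```

Only `k` value vectors contribute to each output, which is why such a layer can hold many parameters while touching few of them per query. In this toy version every key is still scored, so the saving shown here applies to the value side only; a layer meant to hold millions of entries would need a cheaper way to find the best keys.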
