WWW.CORE77.COM

Gimmicky Furniture Design Strikes Again: Multi-Position Chair Lands $780K-Plus on Kickstarter

Two years ago we spotted this gimmicky-looking MagicH chair, an "ultra adaptive support home office chair" by Chinese manufacturer Newtral. People went bonkers for it on Kickstarter; it landed $1.8 million.

Now the company's back with a new offering, the Freedom-X, a "multi-position chair" with a built-in desk and cupholder, as well as swing-down armrests. The video gives you a better look at the product, where you can see that the build quality and upholstery stitching aren't exactly Herman Miller quality.

Does anyone have any faith that this chair will last? If you read through the comments on the MagicH's campaign page, you'll find some brutal feedback. Let's just say you won't be passing that chair down to your grandkids; multiple buyers report mechanical failure around the six-month mark.

That said, the Freedom-X has once again captured the crowdfunding masses. While Newtral was seeking just $10,000 to get this thing going, at press time they were at $780K-plus, with over a month left to pledge. Caveat emptor.

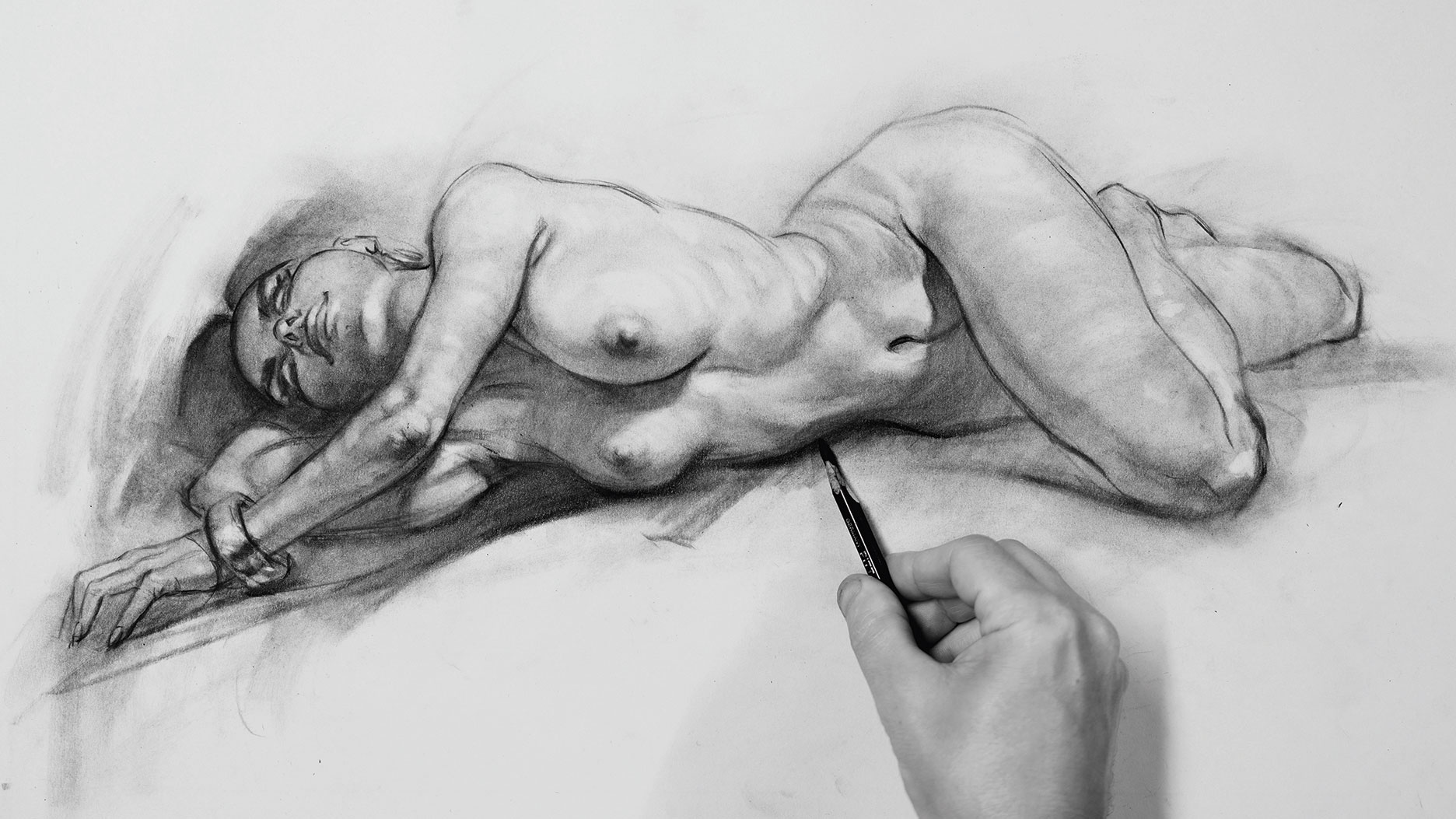

WWW.CREATIVEBLOQ.COM

How to draw a female figure

A step-by-step guide to drawing the female form from reference.

WWW.WIRED.COM

19 Fancy Gadgets That Won't Leave You Broke

The next new thing doesn't have to cost as much as next month's paycheck.

WWW.MACWORLD.COM

CES, start your photocopiers: Hey, it's cool to copy Apple again!

In the world of sports, it's sometimes easier to impress by not playing. When you're in the game, everything you do is judged and all your mistakes happen under a harsh spotlight. But get yourself dropped behind some other poor bloke now getting picked apart for their mistakes, and you'll find that all is forgiven or, better yet, forgotten. "Let's get David Price back in the game," they'll say. "I'm sure we were wrong about him being physically incompetent and afraid of the ball."

I often wonder if some variant of this mindset is what leads Apple to snub trade shows and conferences when it has the resources to attend every event on the planet if it wants. Lots of new tech products made their debut at CES in Las Vegas this month, for example, but there were none at all by the industry's highest-profile brand. And yet, in their absence, Apple's products picked up some of the most positive coverage all the same.

Take poor old Dell, which at CES announced a major rebranding for its PC lines. Instead of XPS, Inspiron, and Latitude, the company's machines will be branded as Dell, Dell Pro, and Dell Pro Max, terms that are familiar to customers because they're inspired, shall we charitably say, by the iPhone. The result? Mockery for Dell and a bunch of headlines that plug Apple's products and make the Cupertino company sound like a trendsetter.

I rather feel for Dell, which clearly got this one wrong and doesn't seem to know how to make it right. Apple has a tendency to do very difficult and complicated things and make them look easy and simple. When rivals follow suit they trip over their shoelaces by adding, in this case, needlessly complicating sub-brands like Plus and Premium. In any case, simplicity isn't about your choice of words; it's about the fundamental structure of your product portfolio. (Incidentally, Apple doesn't always get these things right either. But of course, its mistakes are forgotten when someone else is in the spotlight.)

Nvidia, meanwhile, was doing its own Apple impersonation elsewhere in Las Vegas: the firm's Project Digits supercomputer had barely landed on the CES show floor before it was getting described as a Mac mini clone, and not without good reason. It's far more powerful (and correspondingly far more expensive) than the Mac mini, but the palmtop design is extremely familiar, as is the focus on AI. And it would be hard to imagine that the words "Like a Mac mini, except" were not uttered at some point in its development cycle.

Even some of the more positive headlines were marred, for Apple's rivals, by invidious comparisons with the absent giant. Asus got pretty much everything right with the Zenbook A14, but suffered the indignity of seeing this heralded as little more than a MacBook Air competitor. The company, in fact, played up to the comparison, joking that it had considered the name Zenbook Air. But I always regard this strategy, which in movies and TV shows is known as lampshading, as cheating. Joking that your design is unoriginal doesn't change the fact that it is.

Whether or not they're prepared to admit it, Apple's rivals spent much of CES 2025 trying to copy its moves. Instead of using its absence from the show as an opportunity to present something different, they delivered more of the same, only with PC chips, worse software, and disastrous branding. Whereas Apple got a bunch of uncritical PR without doing anything.

It's often said that Apple doesn't innovate out of thin air. Rather, it bides its time and lets other companies build up a market before swooping in at the crucial moment and grabbing the revenue. I would agree that the company is rarely first to enter a market. But what it often does is create the first iconic product in a market, the one which defines what that market represents in the popular consciousness and, all too often, in the minds of its competitors too. After the launch of an iPhone or a MacBook Air, rivals struggle to envision an alternative that doesn't begin with Apple's offering and then iterate from that.

The irony is that if the companies really wanted to be like Apple, the best thing would be not to turn up at CES at all. But when the star player is away, it's simply too tempting to rush onto the field and do your best to impress the fans.

WWW.COMPUTERWORLD.COM

Apple execs head to London to fight for the App Store

On the day the UK government confirms an AI future for the country (we'll see how that goes), Apple's App Store pricing model has gone on trial in London.

Apple is accused of overcharging consumers for software sold via the App Store in a £1.5 billion class-action lawsuit brought by Rachael Kent-Aitken on behalf of 20 million UK consumers. (That equates to about $1.8 billion.)

She's chasing down Cupertino, claiming it is abusing its market dominance by levying up to a 30% fee on download sales, calling the levy excessive and unfair.

The trial began today before the UK Competition Appeal Tribunal. The lawyers at Hausfeld represent Kent-Aitken in the case, the costs of which appear to be underwritten (if that's the appropriate word) by Vannin Capital.

What is Apple accused of?

The accusation is that Apple abused its dominant position by imposing restrictive terms that require app developers to distribute exclusively via the App Store using Apple's payments system, and by charging excessive and unfair prices in the form of commission payments, which are ultimately paid by the device users.

Apple, of course, calls the claims meritless, pointing to the fact that the vast majority of developers do not pay a 30% levy on software sales, that those who offer apps at no charge (around 80% of all available apps) pay no levy at all, and that the fee is intended to see the costs of running the store borne by those who generate the most cash from selling software through it.

In related news, the European Commission has begun fresh scrutiny of the core technology fee Apple charges some developers in Europe in response to the Digital Markets Act.

Senior Apple leaders head to court

Apple appears ready to field an all-star cast of witnesses during the trial, with around three days of witness time booked. What is known is that Apple will field some witnesses who will travel from the US, with both Apple Fellow and App Store leader Phil Schiller and Apple Senior Vice President for Software Craig Federighi named in a pre-trial note (pages 24/25). Apple's newly appointed Chief Financial Officer Kevan Parekh will also be forced to give evidence.

The company will also be told to share unredacted versions of some documents used during the European Commission trial against it. According to one document, Apple has secured three days of witness time for the case, though it is not known if that is definite at this stage. Apple witness statements should begin Wednesday afternoon, though it is not known if the witnesses Apple plans to bring will remain the same.

Apple has previously said: "We believe this lawsuit is meritless and welcome the opportunity to discuss with the court our unwavering commitment to consumers and the many benefits the App Store has delivered to the UK's innovation economy."

It's worth noting that Apple CEO Tim Cook visited the UK in December. While there he met with Prime Minister Keir Starmer and hosted an event at Apple's Battersea HQ with King Charles.

Apple now supports 550,000 UK jobs through direct employment, its supply chain, and the iOS app economy.

Is it all about legality or profitability?

In the end, and as I've pointed out before, even some of the company's critics levy similar fees on sales through their platforms, which means it isn't a matter of fee/no fee, but a question of how much fee is legitimate for Apple's storefront, or any storefront, to charge. Even big brick-and-mortar grocery stores charge for shelf placement, after all.

"The commissions charged by the App Store are very much in the mainstream of those charged by all other digital marketplaces," Apple said when the case began. "In fact, 85% of apps on the App Store are free and developers pay Apple nothing. And for the vast majority of developers who do pay Apple a commission because they are selling a digital good or service, they are eligible for a commission rate of 15%."

What happens next?

The litigant at one point claimed Apple made $15 billion in App Store sales on costs of around $100 million, though those costs seem to ignore research and development, OS development, security, payments, and associated investments Apple makes in its ecosystem.

Kent's lawyer, Hausfeld partner Lesley Hannah, at one point said: "Apple has created a captive market where people who own Apple devices are reliant on it for the provision of both apps and payment processing services for digital purchases."

Apple will likely argue that the market is larger than just iOS apps (think online services and other mobile platforms) and observe that it is not dominant in the device market. That matters, because it means consumers do have choice and most consumers choose different platforms. (Kent is also involved in similar action against Google.)

Right or wrong, it's hard to avoid the sense that, in general, the direction of travel when it comes to App Store encounters in court means the current business model now seems tarnished. Perhaps Apple could introduce a different model in time?

Follow me on social media! Join me on BlueSky, LinkedIn, Mastodon, or MeWe.

APPLEINSIDER.COM

Best gear for Apple users that debuted during CES 2025

We saw some great products for Apple users after a week of wearing our feet to the bone at CES! Here's what we liked most among the products we got the chance to check out in person at the show.

CES is a wrap for 2025

While we found a lot of future-looking tech, everything we rounded up will be shipping soon.

Specifically, we'll be looking at accessories for Mac, iPhone, and iPad. We won't cover smart home here, but we'll be talking about that soon.

ARCHINECT.COM

Check out these winter cabin designs to get ski season in gear

Ski season is upon us and to get people in the spirit, we've put together a list of the best-looking new winter cabins and ski chalet designs from around the world. Each is taken from individual firm profiles and offers its clients a cozy place to take in the scenery and enjoy close access to some fresh powder. Dig in and take a minute to explore some of these inspiring residential designs to put you in a post-holiday winter spirit that will last year-round.

A-Frame Club by Skylab Architecture in Winter Park, Colorado (Image: Stephan Werk/Werkcreative)

High Desert Residence by Hacker Architects in Bend, Oregon (Image: Jeremy Bittermann)

Forest House by Faulkner Architects in Truckee, California (Image: Joe Fletcher)

Yellowstone Residence by Stuart Silk Architects in Big Sky, Montana (Image: Whitney Kamman)

The Glass Cabin by Mjölk architekti in Polubný, Czech Republic (Image: BoysPlayNice)

Mylla Hyt...

WWW.SMITHSONIANMAG.COM

Metal Detectorists Discover 1,200-Year-Old Graves That May Have Belonged to High-Status Viking Women

Excavations in Norway revealed a rich variety of artifacts, including jewelry, textile tools and stones positioned in the shape of a ship

Researchers think there may be as many as 20 graves at the site in southwest Norway. (Image: Søren Diinhoff / University Museum of Bergen)

Archaeologists have unearthed coins, jewelry and stones from graves in Norway that likely belonged to high-status Viking women, reports Science Norway's Ida Irene Bergstrøm.

Initially discovered by a group of amateur metal detectorists in the fall of 2023, the graves date to between 800 and 850 C.E. That lines up with the beginning of the Viking Age, which ran from around 800 to 1050 C.E.

During excavations, which concluded in late 2024, archaeologists found a rich variety of artifacts. One grave contained fragments of gilded oval brooches, part of a metal cauldron and a book clasp that had been repurposed as jewelry. Archaeologists think the clasp may have come from a Christian monastery.

"We think that the clasp in the first grave could very well have come from a Bible in England or Ireland," says Søren Diinhoff, an archaeologist with the University Museum of Bergen, to Fox News Digital's Andrea Margolis. "It had been ripped off and brought back to Norway where it eventually ended up as a woman's brooch."

In another grave, they found 11 silver coins and a necklace made of 46 glass beads. They also discovered trefoil brooches that were likely used to fasten clothing. The brooches appear to have been repurposed from the clasps of Carolingian sword belts, according to a statement from the researchers.

They also found a bronze key and what is likely a frying pan, as well as items that were used to produce textiles, such as a spindle whorl, a weaving sword and wool shears. These items suggest that the woman buried here may have been the head of the household and managed the farm's textile production operations.

"Textile production was prestigious," Diinhoff tells Science Norway. "Farms that made fine clothing held high status."

One of the coins is likely a rare "Hedeby coin" that was made in southern Denmark between 823 and 840 C.E. (Image: Søren Diinhoff / University Museum of Bergen)

Experts at the University Museum of Bergen are still studying the coins. But they've already deduced that one is a rare "Hedeby coin" minted in the early ninth century C.E. in southern Denmark; such coins are among the earliest known Scandinavian-made coins. The other ten coins were likely minted during the reign of Louis I, the son of Charlemagne and a Carolingian ruler of the Franks.

Some of the artifacts appear to have originated in England and Ireland, which is indicative of the Vikings' long-distance trade routes. But the women may also have had their own ties to continental Europe.

"Both of these women had contacts outside Norway," Diinhoff tells Science Norway. "It's probably no coincidence. Perhaps they came from abroad and married into the local community."

Researchers didn't find any bones in the graves. It's possible that the human remains disintegrated, which is common because of the makeup of western Norway's soil.

But another theory is that the graves were empty to begin with. They may have been cenotaphs, or memorials honoring individuals who've been buried somewhere else. Researchers suspect this is likely the case, as the necklace appears to have been buried inside a leather pouch, rather than around someone's neck.

One of the graves contained a necklace made of 46 glass beads. (Image: Søren Diinhoff / University Museum of Bergen)

The graves are located in the municipality of Fitjar, an area along the country's southwest coast. During the Viking Age, the site was a farm that likely belonged to the local or regional king, according to the archaeologists.

Since it's so close to the coast, maritime travelers may have used the farm as a rest stop. That theory is bolstered by the fact that one of the graves contained rocks positioned in the shape of a ship.

"On behalf of the king, shelter was provided to passing ships, which likely generated additional income," Diinhoff tells Science Norway.

Archaeologists hope to return soon for further research, as they have only just started excavating a third grave. They think there may be as many as 20 graves in the area, and now it's a race against time before they're destroyed.

"They are found just below the turf, and there are so many ways they can be ruined," Diinhoff tells Fox News Digital. "We hope to be able to excavate a few graves every year."


VENTUREBEAT.COM
In the future, we will all manage our own AI agents | Jensen Huang Q&A

Jensen Huang, CEO of Nvidia, gave an eye-opening keynote talk at CES 2025 last week. It was highly appropriate, as Huang's favorite subject of artificial intelligence has exploded across the world and Nvidia has, by extension, become one of the most valuable companies in the world. Apple recently passed Nvidia with a market capitalization of $3.58 trillion, compared to Nvidia's $3.33 trillion. The company is celebrating the 25th year of its GeForce graphics chip business, and it has been a long time since I did the first interview with Huang back in 1996, when we talked about graphics chips for a Windows accelerator. Back then, Nvidia was one of 80 3D graphics chip makers. Now it's one of around three or so survivors. And it has made a huge pivot from graphics to AI.

Huang hasn't changed much. For the keynote, Huang announced a video game graphics card, the Nvidia GeForce RTX 50 Series, but there were a dozen AI-focused announcements about how Nvidia is creating the blueprints and platforms to make it easy to train robots for the physical world. In fact, in a feature dubbed DLSS 4, Nvidia is now using AI to make its graphics chip frame rates better. And there are technologies like Cosmos, which helps robot developers use synthetic data to train their robots. A few of these Nvidia announcements were among my 13 favorite things at CES.

After the keynote, Huang held a free-wheeling Q&A with the press at the Fontainebleau hotel in Las Vegas. At first, he engaged in a hilarious discussion with the audio-visual team in the room about the sound quality, as he couldn't hear questions up on stage. So he came down among the press and, after teasing the AV team guy named Sebastian, he answered all of our questions, and he even took a selfie with me.
Then he took a bunch of questions from financial analysts.

I was struck by how technical Huang's command of AI was during the keynote, but it reminded me more of a SIGGRAPH technology conference than a keynote speech for consumers at CES. I asked him about that and you can see his answer below. I've included the whole Q&A from all of the press in the room. Here's an edited transcript of the press Q&A.

[Image: Jensen Huang, CEO of Nvidia, at CES 2025 press Q&A.]

Question: Last year you defined a new unit of compute, the data center. Starting with the building and working down. You've done everything all the way up to the system now. Is it time for Nvidia to start thinking about infrastructure, power, and the rest of the pieces that go into that system?

Jensen Huang: As a rule, Nvidia, we only work on things that other people do not, or that we can do singularly better. That's why we're not in that many businesses. The reason why we do what we do, if we didn't build NVLink72, who would have? Who could have? If we didn't build the type of switches like Spectrum-X, this ethernet switch that has the benefits of InfiniBand, who could have? Who would have? We want our company to be relatively small. We're only 30-some-odd thousand people. We're still a small company. We want to make sure our resources are highly focused on areas where we can make a unique contribution.

We work up and down the supply chain now. We work with power delivery and power conditioning, the people who are doing that, cooling and so on. We try to work up and down the supply chain to get people ready for these AI solutions that are coming. Hyperscale was about 10 kilowatts per rack. Hopper is 40 to 50 to 60 kilowatts per rack. Now Blackwell is about 120 kilowatts per rack. My sense is that that will continue to go up. We want it to go up because power density is a good thing. We'd rather have computers that are dense and close by than computers that are disaggregated and spread out all over the place. Density is good.
We're going to see that power density go up. We'll do a lot better cooling inside and outside the data center, much more sustainable. There's a whole bunch of work to be done. We try not to do things that we don't have to.

[Image: HP EliteBook Ultra G1i 14-inch notebook next-gen AI PC.]

Question: You made a lot of announcements about AI PCs last night. Adoption of those hasn't taken off yet. What's holding that back? Do you think Nvidia can help change that?

Huang: AI started in the cloud and was created for the cloud. If you look at all of Nvidia's growth in the last several years, it's been the cloud, because it takes AI supercomputers to train the models. These models are fairly large. It's easy to deploy them in the cloud. They're called endpoints, as you know. We think that there are still designers, software engineers, creatives, and enthusiasts who'd like to use their PCs for all these things. One challenge is that because AI is in the cloud, and there's so much energy and movement in the cloud, there are still very few people developing AI for Windows.

It turns out that the Windows PC is perfectly adapted to AI. There's this thing called WSL2. WSL2 is a virtual machine, a second operating system, Linux-based, that sits inside Windows. WSL2 was created to be essentially cloud-native. It supports Docker containers. It has perfect support for CUDA. We're going to take the AI technology we're creating for the cloud and now, by making sure that WSL2 can support it, we can bring the cloud down to the PC. I think that's the right answer. I'm excited about it. All the PC OEMs are excited about it. We'll get all these PCs ready with Windows and WSL2. All the energy and movement of the AI cloud, we'll bring it right to the PC.

Question: Last night, in certain parts of the talk, it felt like a SIGGRAPH talk. It was very technical. You've reached a larger audience now.
I was wondering if you could explain some of the significance of last night's developments, the AI announcements, for this broader crowd of people who have no clue what you were talking about last night.

Huang: As you know, Nvidia is a technology company, not a consumer company. Our technology influences, and is going to impact, the future of consumer electronics. But it doesn't change the fact that I could have done a better job explaining the technology. Here's another crack.

One of the most important things we announced yesterday was a foundation model that understands the physical world. Just as GPT was a foundation model that understands language, and Stable Diffusion was a foundation model that understood images, we've created a foundation model that understands the physical world. It understands things like friction, inertia, gravity, object presence and permanence, geometric and spatial understanding. The things that children know. They understand the physical world in a way that language models today don't. We believe that there needs to be a foundation model that understands the physical world.

Once we create that, all the things you could do with GPT and Stable Diffusion, you can now do with Cosmos. For example, you can talk to it. You can talk to this world model and say, "What's in the world right now?" Based on the season, it would say, "There's a lot of people sitting in a room in front of desks. The acoustics performance isn't very good." Things like that. Cosmos is a world model, and it understands the world.

[Image: Nvidia is marrying tech for AI in the physical world with digital twins.]

The question is, why do we need such a thing? The reason is, if you want AI to be able to operate and interact in the physical world sensibly, you're going to have to have an AI that understands that. Where can you use that? Self-driving cars need to understand the physical world. Robots need to understand the physical world. These models are the starting point of enabling all of that.
Just as GPT enabled everything we're experiencing today, just as Llama is very important to activity around AI, just as Stable Diffusion triggered all these generative imaging and video models, we would like to do the same with Cosmos, the world model.

Question: Last night you mentioned that we're seeing some new AI scaling laws emerge, specifically around test-time compute. OpenAI's o3 model showed that scaling inference is very expensive from a compute perspective. Some of those runs were thousands of dollars on the ARC-AGI test. What is Nvidia doing to offer more cost-effective AI inference chips, and more broadly, how are you positioned to benefit from test-time scaling?

Huang: The immediate solution for test-time compute, both in performance and affordability, is to increase our computing capabilities. That's why, with Blackwell and NVLink72, the inference performance is probably some 30 or 40 times higher than Hopper. By increasing the performance by 30 or 40 times, you're driving the cost down by 30 or 40 times. The data center costs about the same.

The reason why Moore's Law is so important in the history of computing is it drove down computing costs. The reason why I spoke about the performance of our GPUs increasing by 1,000 or 10,000 times over the last 10 years is because by talking about that, we're inversely saying that we took the cost down by 1,000 or 10,000 times. In the course of the last 20 years, we've driven the marginal cost of computing down by 1 million times. Machine learning became possible. The same thing is going to happen with inference. When we drive up the performance, as a result, the cost of inference will come down.

The second way to think about that question: today it takes a lot of iterations of test-time compute, test-time scaling, to reason about the answer. Those answers are going to become the data for the next time post-training. That data becomes the data for the next time pre-training.
All of the data that's being collected is going into the pool of data for pre-training and post-training. We'll keep pushing that into the training process, because it's cheaper to have one supercomputer become smarter and train the model so that everyone's inference cost goes down.

However, that takes time. All these three scaling laws are going to happen for a while. They're going to happen for a while concurrently no matter what. We're going to make all the models smarter in time, but people are going to ask tougher and tougher questions, ask models to do smarter and smarter things. Test-time scaling will go up.

Question: Do you intend to further increase your investment in Israel?

[Image: A neural face rendering.]

Huang: We recruit highly skilled talent from almost everywhere. I think there's more than a million resumes on Nvidia's website from people who are interested in a position. The company only employs 32,000 people. Interest in joining Nvidia is quite high. The work we do is very interesting. There's a very large option for us to grow in Israel.

When we purchased Mellanox, I think they had 2,000 employees. Now we have almost 5,000 employees in Israel. We're probably the fastest-growing employer in Israel. I'm very proud of that. The team is incredible. Through all the challenges in Israel, the team has stayed very focused. They do incredible work. During this time, our Israel team created NVLink. Our Israel team created Spectrum-X and Bluefield-3. All of this happened in the last several years. I'm incredibly proud of the team. But we have no deals to announce today.

Question: Multi-frame generation, is that still doing render two frames, and then generate in between? Also, with the texture compression stuff, RTX neural materials, is that something game developers will need to specifically adopt, or can it be done driver-side to benefit a larger number of games?

Huang: There's a deep briefing coming out. You guys should attend that.
But what we did with Blackwell, we added the ability for the shader processor to process neural networks. You can put code and intermix it with a neural network in the shader pipeline. The reason why this is so important is because textures and materials are processed in the shader. If the shader can't process AI, you won't get the benefit of some of the algorithm advances that are available through neural networks, like for example compression. You could compress textures a lot better today than with the algorithms that we've been using for the last 30 years. The compression ratio can be dramatically increased. The size of games is so large these days. When we can compress those textures by another 5X, that's a big deal.

Next, materials. The way light travels across a material, its anisotropic properties, cause it to reflect light in a way that indicates whether it's gold paint or gold. The way that light reflects and refracts across their microscopic, atomic structure causes materials to have those properties. Describing that mathematically is very difficult, but we can learn it using an AI. Neural materials is going to be completely ground-breaking. It will bring a vibrancy and a lifelike-ness to computer graphics. Both of these require content-side work. It's content, obviously. Developers will have to develop their content in that way, and then they can incorporate these things.

With respect to DLSS, the frame generation is not interpolation. It's literally frame generation. You're predicting the future, not interpolating the past. The reason for that is because we're trying to increase framerate. DLSS 4, as you know, is completely ground-breaking. Be sure to take a look at it.

Question: There's a huge gap between the 5090 and 5080. The 5090 has more than twice the cores of the 5080, and more than twice the price. Why are you creating such a distance between those two?

Huang: When somebody wants to have the best, they go for the best. The world doesn't have that many segments.
Most of our users want the best. If we give them slightly less than the best to save $100, they're not going to accept that. They just want the best.

Of course, $2,000 is not small money. It's high value. But that technology is going to go into your home theater PC environment. You may have already invested $10,000 into displays and speakers. You want the best GPU in there. A lot of our customers, they just absolutely want the best.

Question: With the AI PC becoming more and more important for PC gaming, do you imagine a future where there are no more traditionally rendered frames?

[Image: Nvidia RTX AI PCs]

Huang: No. The reason for that is because, remember when ChatGPT came out and people said, "Oh, now we can just generate whole books"? But nobody internally expected that. It's called conditioning. We now condition the chat, or the prompts, with context. Before you can understand a question, you have to understand the context. The context could be a PDF, or a web search, or exactly what you told it the context is. The same thing with images. You have to give it context.

The context in a video game has to be relevant, and not just story-wise, but spatially relevant, relevant to the world. When you condition it and give it context, you give it some early pieces of geometry or early pieces of texture. It can generate and up-rez from there. The conditioning, the grounding, is the same thing you would do with ChatGPT and context there. In enterprise usage it's called RAG, retrieval augmented generation. In the future, 3D graphics will be grounded, conditioned generation.

Let's look at DLSS 4. Out of 33 million pixels in these four frames (we've rendered one and generated three), we've rendered 2 million. Isn't that a miracle? We've literally rendered two and generated 31. The reason why that's such a big deal: those 2 million pixels have to be rendered at precisely the right points. From that conditioning, we can generate the other 31 million.
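
As a rough check on the pixel figures in this answer, here is a minimal Python sketch. It assumes a 4K (3840 x 2160) display and a single frame rendered internally at 1080p (1920 x 1080) per group of four displayed frames. Neither resolution is stated in the Q&A, so both are illustrative assumptions, as is the helper name `pixel_budget`.

```python
# Assumed resolutions (not stated in the Q&A): 4K output, 1080p internal render.
OUTPUT_W, OUTPUT_H = 3840, 2160
RENDER_W, RENDER_H = 1920, 1080
FRAMES_PER_GROUP = 4  # one rendered frame plus three generated frames


def pixel_budget(frames=FRAMES_PER_GROUP):
    """Return (displayed, rendered, generated) pixel counts for one frame group."""
    displayed = OUTPUT_W * OUTPUT_H * frames  # every pixel shown on screen
    rendered = RENDER_W * RENDER_H            # only one low-resolution frame is rendered
    generated = displayed - rendered          # everything else comes from the AI models
    return displayed, rendered, generated


displayed, rendered, generated = pixel_budget()
print(f"displayed: {displayed / 1e6:.1f}M pixels")
print(f"rendered:  {rendered / 1e6:.1f}M pixels")
print(f"generated: {generated / 1e6:.1f}M pixels ({generated / displayed:.0%} of the total)")
```

Under these assumptions the totals come to roughly 33 million displayed, 2 million rendered and 31 million generated pixels, which lines up with the numbers Huang cites.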
Not only is that amazing, but those two million pixels can be rendered beautifully. We can apply tons of computation because the computing we would have applied to the other 31 million, we now channel and direct that at just the 2 million. Those 2 million pixels are incredibly complex, and they can inspire and inform the other 31.

The same thing will happen in video games in the future. I've just described what will happen to not just the pixels we render, but the geometry we render, the animation we render and so on. The future of video games, now that AI is integrated into computer graphics, this neural rendering system we've created is now common sense. It took about six years. The first time I announced DLSS, it was universally disbelieved. Part of that is because we didn't do a very good job of explaining it. But it took that long for everyone to now realize that generative AI is the future. You just need to condition it and ground it with the artist's intention.

We did the same thing with Omniverse. The reason why Omniverse and Cosmos are connected together is because Omniverse is the 3D engine for Cosmos, the generative engine. We control completely in Omniverse, and now we can control as little as we want, as little as we can, so we can generate as much as we can. What happens when we control less? Then we can simulate more. The world that we can now simulate in Omniverse can be gigantic, because we have a generative engine on the other side making it look beautiful.

Question: Do you see Nvidia GPUs starting to handle the logic in future games with AI computation? Is it a goal to bring both graphics and logic onto the GPU through AI?

Huang: Yes. Absolutely. Remember, the GPU is Blackwell. Blackwell can generate text, language. It can reason. An entire agentic AI, an entire robot, can run on Blackwell. Just like it runs in the cloud or in the car, we can run that entire robotics loop inside Blackwell.
Just like we could do fluid dynamics or particle physics in Blackwell. The CUDA is exactly the same. The architecture of Nvidia is exactly the same in the robot, in the car, in the cloud, in the game system. That's the good decision we made. Software developers need to have one common platform. When they create something they want to know that they can run it everywhere.

Yesterday I said that we're going to create the AI in the cloud and run it on your PC. Who else can say that? It's exactly CUDA compatible. The container in the cloud, we can take it down and run it on your PC. The SDXL NIM, it's going to be fantastic. The FLUX NIM? Fantastic. Llama? Just take it from the cloud and run it on your PC. The same thing will happen in games.

[Image: Nvidia NIM (Nvidia inference microservices).]

Question: There's no question about the demand for your products from hyperscalers. But can you elaborate on how much urgency you feel in broadening your revenue base to include enterprise, to include government, and building your own data centers? Especially when customers like Amazon are looking to build their own AI chips. Second, could you elaborate more for us on how much you're seeing from enterprise development?

Huang: Our urgency comes from serving customers. It's never weighed on me that some of my customers are also building other chips. I'm delighted that they're building in the cloud, and I think they're making excellent choices. Our technology rhythm, as you know, is incredibly fast. When we increase performance every year by a factor of two, say, we're essentially decreasing costs by a factor of two every year. That's way faster than Moore's Law at its best. We're going to respond to customers wherever they are.

With respect to enterprise, the important thing is that enterprises today are served by two industries: the software industry, ServiceNow and SAP and so forth, and the solution integrators that help them adapt that software into their business processes.
Our strategy is to work with those two ecosystems and help them build agentic AI. NeMo and blueprints are the toolkits for building agentic AI. The work we're doing with ServiceNow, for example, is just fantastic. They're going to have a whole family of agents that sit on top of ServiceNow that help do customer support. That's our basic strategy. With the solution integrators, we're working with Accenture and others. Accenture is doing critical work to help customers integrate and adopt agentic AI into their systems.

Step one is to help that whole ecosystem develop AI, which is different from developing software. They need a different toolkit. I think we've done a good job this last year of building up the agentic AI toolkit, and now it's about deployment and so on.

Question: It was exciting last night to see the 5070 and the price decrease. I know it's early, but what can we expect from the 60-series cards, especially in the sub-$400 range?

Huang: It's incredible that we announced four RTX Blackwells last night, and the lowest performance one has the performance of the highest-end GPU in the world today. That puts it in perspective, the incredible capabilities of AI. Without AI, without the tensor cores and all of the innovation around DLSS 4, this capability wouldn't be possible. I don't have anything to announce. Is there a 60? I don't know. It is one of my favorite numbers, though.

Question: You talked about agentic AI. Lots of companies have talked about agentic AI now. How are you working with or competing with companies like AWS, Microsoft, Salesforce who have platforms in which they're also telling customers to develop agents? How are you working with those guys?

Huang: We're not a direct to enterprise company. We're a technology platform company. We develop the toolkits, the libraries, and AI models, for the ServiceNows. That's our primary focus.
Our primary focus is ServiceNow and SAP and Oracle and Synopsys and Cadence and Siemens, the companies that have a great deal of expertise, but the library layer of AI is not an area that they want to focus on. We can create that for them.

It's complicated, because essentially we're talking about putting a ChatGPT in a container. That endpoint, that microservice, is very complicated. When they use ours, they can run it on any platform. We develop the technology, NIMs and NeMo, for them. Not to compete with them, but for them. If any of our CSPs would like to use them (and many of our CSPs are using NeMo to train their large language models or train their engine models), they have NIMs in their cloud stores. We created all of this technology layer for them.

The way to think about NIMs and NeMo is the way to think about CUDA and the CUDA-X libraries. The CUDA-X libraries are important to the adoption of the Nvidia platform. These are things like cuBLAS for linear algebra, cuDNN for the deep neural network processing engine that revolutionized deep learning, CUTLASS, all these fancy libraries that we've been talking about. We created those libraries for the industry so that they don't have to. We're creating NeMo and NIMs for the industry so that they don't have to.

Question: What do you think are some of the biggest unmet needs in the non-gaming PC market today?

[Image: Nvidia's Project Digits, based on GB10.]

Huang: DIGITS stands for Deep Learning GPU Intelligence Training System. That's what it is. DIGITS is a platform for data scientists and machine learning engineers. Today they're using their PCs and workstations to do that. For most people's PCs, to do machine learning and data science, to run PyTorch and whatever it is, it's not optimal. We now have this little device that you sit on your desk. It's wireless. The way you talk to it is the way you talk to the cloud.
It's like your own private AI cloud.

The reason you want that is because if you're working on your machine, you're always on that machine. If you're working in the cloud, you're always in the cloud. The bill can be very high. We make it possible to have that personal development cloud. It's for data scientists and students and engineers who need to be on the system all the time. I think DIGITS, there's a whole universe waiting for DIGITS. It's very sensible, because AI started in the cloud and ended up in the cloud, but it's left the world's computers behind. We just have to figure something out to serve that audience.

Question: You talked yesterday about how robots will soon be everywhere around us. Which side do you think robots will stand on: with humans, or against them?

Huang: With humans, because we're going to build them that way. The idea of superintelligence is not unusual. As you know, I have a company with many people who are, to me, superintelligent in their field of work. I'm surrounded by superintelligence. I prefer to be surrounded by superintelligence rather than the alternative. I love the fact that my staff, the leaders and the scientists in our company, are superintelligent. I'm of average intelligence, but I'm surrounded by superintelligence.

That's the future. You're going to have superintelligent AIs that will help you write, analyze problems, do supply chain planning, write software, design chips and so on. They'll build marketing campaigns or help you do podcasts. You're going to have superintelligence helping you to do many things, and it will be there all the time. Of course the technology can be used in many ways. It's humans that are harmful. Machines are machines.

Question: In 2017 Nvidia displayed a demo car at CES, a self-driving car. You partnered with Toyota that May. What's the difference between 2017 and 2025?
What were the issues in 2017, and what are the technological innovations being made in 2025?

[Image: Back in 2017: Toyota will use Nvidia chips for self-driving cars.]

Huang: First of all, everything that moves in the future will be autonomous, or have autonomous capabilities. There will be no lawn mowers that you push. I want to see, in 20 years, someone pushing a lawn mower. That would be very fun to see. It makes no sense. In the future, all cars, you could still decide to drive, but all cars will have the ability to drive themselves. From where we are today, which is 1 billion cars on the road and none of them driving by themselves, to, let's say, picking our favorite time, 20 years from now. I believe that cars will be able to drive themselves. Five years ago that was less certain, how robust the technology was going to be. Now it's very certain that the sensor technology, the computer technology, the software technology is within reach. There's too much evidence now that a new generation of cars, particularly electric cars, almost every one of them will be autonomous, have autonomous capabilities.

If there are two drivers that really changed the minds of the traditional car companies, one of course is Tesla. They were very influential. But the single greatest impact is the incredible technology coming out of China. The neo-EVs, the new EV companies (BYD, Li Auto, XPeng, Xiaomi, NIO), their technology is so good. The autonomous vehicle capability is so good. It's now coming out to the rest of the world. It's set the bar. Every car manufacturer has to think about autonomous vehicles. The world is changing. It took a while for the technology to mature, and our own sensibility to mature. I think now we're there. Waymo is a great partner of ours. Waymo is now all over the place in San Francisco.

Question: About the new models that were announced yesterday, Cosmos and NeMo and so on, are those going to be part of smart glasses?
Given the direction the industry is moving in, it seems like that's going to be a place where a lot of people experience AI agents in the future?

[Image: Cosmos generates synthetic driving data.]

Huang: I'm so excited about smart glasses that are connected to AI in the cloud. What am I looking at? How should I get from here to there? You could be reading and it could help you read. The use of AI as it gets connected to wearables and virtual presence technology with glasses, all of that is very promising.

The way we use Cosmos, Cosmos in the cloud will give you visual penetration. If you want something in the glasses, you use Cosmos to distill a smaller model. Cosmos becomes a knowledge transfer engine. It transfers its knowledge into a much smaller AI model. The reason why you're able to do that is because that smaller AI model becomes highly focused. It's less generalizable. That's why it's possible to narrowly transfer knowledge and distill that into a much tinier model. It's also the reason why we always start by building the foundation model. Then we can build a smaller one and a smaller one through that process of distillation. Teacher and student models.

Question: The 5090 announced yesterday is a great card, but one of the challenges with getting neural rendering working is what will be done with Windows and DirectX. What kind of work are you looking to put forward to help teams minimize the friction in terms of getting engines implemented, and also incentivizing Microsoft to work with you to make sure they improve DirectX?

Huang: Wherever new evolutions of the DirectX API are, Microsoft has been super collaborative throughout the years. We have a great relationship with the DirectX team, as you can imagine. As we're advancing our GPUs, if the API needs to change, they're very supportive. For most of the things we do with DLSS, the API doesn't have to change. It's actually the engine that has to change. Semantically, it needs to understand the scene.
The scene is much more inside Unreal or Frostbite, the engine of the developer. That's the reason why DLSS is integrated into a lot of the engines today. Once the DLSS plumbing has been put in, particularly starting with DLSS 2, 3, and 4, then when we update DLSS 4, even though the game was developed for 3, you'll have some of the benefits of 4 and so on. Plumbing for the scene understanding AIs, the AIs that process based on semantic information in the scene, you really have to do that in the engine.

Question: All these big tech transitions are never done by just one company. With AI, do you think there's anything missing that is holding us back, any part of the ecosystem?

[Image: Agility Robotics showed a robot that could take boxes and stack them on a conveyor belt.]

Huang: I do. Let me break it down into two. In one case, in the language case, the cognitive AI case, of course we're advancing the cognitive capability of the AI, the basic capability. It has to be multimodal. It has to be able to do its own reasoning and so on. But the second part is applying that technology into an AI system. AI is not a model. It's a system of models. Agentic AI is an integration of a system of models. There's a model for retrieval, for search, for generating images, for reasoning. It's a system of models.

The last couple of years, the industry has been innovating along the applied path, not only the fundamental AI path. The fundamental AI path is for multimodality, for reasoning and so on. Meanwhile, there is a hole, a missing thing that's necessary for the industry to accelerate its process. That's the physical AI. Physical AI needs the same foundation model, the concept of a foundation model, just as cognitive AI needed a classic foundation model. GPT-3 was the first foundation model that reached a level of capability that started off a whole bunch of capabilities.
We have to reach a foundation model capability for physical AI.

That's why we're working on Cosmos, so we can reach that level of capability, put that model out in the world, and then all of a sudden a bunch of end use cases will start, downstream tasks, downstream skills that are activated as a result of having a foundation model. That foundation model could also be a teaching model, as we were talking about earlier. That foundation model is the reason we built Cosmos.

The second thing that is missing in the world is the work we're doing with Omniverse and Cosmos to connect the two systems together, so that it's a physics condition, physics-grounded, so we can use that grounding to control the generative process. What comes out of Cosmos is highly plausible, not just highly hallucinatable. Cosmos plus Omniverse is the missing initial starting point for what is likely going to be a very large robotics industry in the future. That's the reason why we built it.

Question: How concerned are you about trade and tariffs and what that possibly represents for everyone?

Huang: I'm not concerned about it. I trust that the administration will make the right moves for their trade negotiations. Whatever settles out, we'll do the best we can to help our customers and the market.

Follow-up question inaudible.

[Image: Nvidia Nemotron Model Families]

Huang: We only work on things if the market needs us to, if there's a hole in the market that needs to be filled and we're destined to fill it. We'll tend to work on things that are far in advance of the market, where if we don't do something it won't get done. That's the Nvidia psychology. Don't do what other people do. We're not market caretakers. We're market makers. We tend not to go into a market that already exists and take our share. That's just not the psychology of our company.

The psychology of our company, if there's a market that doesn't exist, for example, there's no such thing as DIGITS in the world.
If we don't build DIGITS, no one in the world will build DIGITS. The software stack is too complicated. The computing capabilities are too significant. Unless we do it, nobody is going to do it. If we didn't advance neural graphics, nobody would have done it. We had to do it. We'll tend to do that.

Question: Do you think the way that AI is growing at this moment is sustainable?

Huang: Yes. There are no physical limits that I know of. As you know, one of the reasons we're able to advance AI capabilities so rapidly is that we have the ability to build and integrate our CPU, GPU, NVLink, networking, and all the software and systems at the same time. If that has to be done by 20 different companies and we have to integrate it all together, the timing would take too long. When we have everything integrated and software supported, we can advance that system very quickly. With Hopper, H100 and H200 to the next and the next, we're going to be able to move every single year.

The second thing is, because we're able to optimize across the entire system, the performance we can achieve is much more than just transistors alone. Moore's Law has slowed. The transistor performance is not increasing that much from generation to generation. But our systems overall have increased in performance tremendously year over year. There's no physical limit that I know of.

[Image: There are 72 Blackwell chips on this wafer.]

As we advance our computing, the models will keep on advancing. If we increase the computation capability, researchers can train with larger models, with more data. We can increase their computing capability for the second scaling law, reinforcement learning and synthetic data generation. That's going to continue to scale. The third scaling law, test-time scaling: if we keep advancing the computing capability, the cost will keep coming down, and the scaling law of that will continue to grow as well. We have three scaling laws now. We have mountains of data we can process.
I don't see any physics reasons that we can't continue to advance computing. AI is going to progress very quickly.

Question: Will Nvidia still be building a new headquarters in Taiwan?

Huang: We have a lot of employees in Taiwan, and the building is too small. I have to find a solution for that. I may announce something in Computex. We're shopping for real estate. We work with MediaTek across several different areas. One of them is in autonomous vehicles. We work with them so that we can together offer a fully software-defined and computerized car for the industry. Our collaboration with the automotive industry is very good.

With Grace Blackwell, the GB10, the Grace CPU is in collaboration with MediaTek. We architected it together. We put some Nvidia technology into MediaTek, so we could have NVLink chip-to-chip. They designed the chip with us and they designed the chip for us. They did an excellent job. The silicon is perfect the first time. The performance is excellent. As you can imagine, MediaTek's reputation for very low power is absolutely deserved. We're delighted to work with them. The partnership is excellent. They're an excellent company.

Question: What advice would you give to students looking forward to the future?

[Image: A wafer full of Nvidia Blackwell chips.]

Huang: My generation was the first generation that had to learn how to use computers to do their field of science. The generation before only used calculators and paper and pencils. My generation had to learn how to use computers to write software, to design chips, to simulate physics. My generation was the generation that used computers to do our jobs.

The next generation is the generation that will learn how to use AI to do their jobs. AI is the new computer. Very important fields of science, in the future it will be a question of, How will I use AI to help me do biology? Or forestry or agriculture or chemistry or quantum physics. Every field of science. And of course there's still computer science.
How will I use AI to help advance AI? Every single field. Supply chain management. Operational research. How will I use AI to advance operational research? If you want to be a reporter, how will I use AI to help me be a better reporter?

[Image: How AI gets smarter]

Every student in the future will have to learn how to use AI, just as the current generation had to learn how to use computers. That's the fundamental difference. That shows you very quickly how profound the AI revolution is. This is not just about a large language model. Those are very important, but AI will be part of everything in the future. It's the most transformative technology we've ever known. It's advancing incredibly fast.

For all of the gamers and the gaming industry, I appreciate that the industry is as excited as we are now. In the beginning we were using GPUs to advance AI, and now we're using AI to advance computer graphics. The work we did with RTX Blackwell and DLSS 4, it's all because of the advances in AI. Now it's come back to advance graphics.

If you look at the Moore's Law curve of computer graphics, it was actually slowing down. The AI came in and supercharged the curve. The framerates are now 200, 300, 400, and the images are completely raytraced. They're beautiful. We have gone into an exponential curve of computer graphics. We've gone into an exponential curve in almost every field. That's why I think our industry is going to change very quickly, but every industry is going to change very quickly, very soon.