www.cgchannel.com

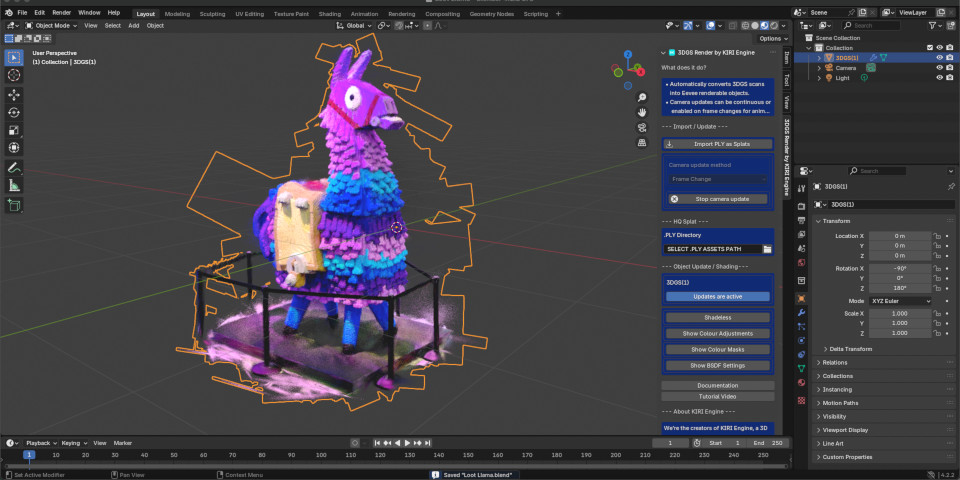

Thursday, September 26th, 2024

Posted by Jim Thacker

Unity previews its roadmap for Unity 6.1 and beyond

Unity's keynote from its Unite 2024 conference. The section on the product roadmap for Unity 6.1 and beyond starts at 01:30:30 in the recording.

Originally posted on 20 September, and updated with more details of the next-gen releases.

Unity has previewed its product roadmap and release schedule for the next few versions of its Unity game engine at its Unite 2024 conference.

Unity 6, the next long-term support release, will be released globally on 17 October 2024.

It will be followed in April 2025 by Unity 6.1, with new features including support for Deferred+ rendering in the GPU Resident Drawer, and support for larger and foldable screens.

Development of the next major release generation (presumably Unity 7) is also underway, with an open beta due in 2025.

The next-gen releases will overhaul the Unity engine core, and introduce new artist tools for shader authoring, rendering, character animation and world building.

Unity 6: due for release on 17 October 2024

The first part of the roadmap announced at Unite was the release date for Unity 6, the next long-term support release, which will become available globally on 17 October 2024.

Many of its key features, including the new GPU Resident Drawer for faster rendering, STP render upscaling, and updates to the URP and HDRP rendering pipelines, are already available in preview.
You can read our pick of key graphics features in the Unity 6 Preview here.

Unity 6 was previously the point at which the Unity Runtime Fee was due to come into force: something that will not now be happening, Unity having just announced that it is scrapping the controversial per-installation fee on higher-earning games created with the engine.

Unity 6.1: due in April 2025

Unity 6 will be followed in April 2025 by Unity 6.1, an update building on the core Unity 6 capabilities.

New features will include support for what Unity is billing as Deferred+ rendering in the GPU Resident Drawer, the behind-the-curtain system for speeding up rendering of scenes with a lot of instanced objects.

The release will also add support for foldable and large-format screens, and introduce new build targets and profiles, including a target for Facebook Instant Games, and a new profile for Meta Quest VR systems.

Next release generation: due in open beta in 2025

Unity also announced that it is already a year into development of its next generation of releases.

During the keynote, the focus of the release cycle was described as simplicity, iteration and power, marking "a fundamental shift in our thinking and approach".

Changes will include bringing Unity's Entity Component System (ECS) into the very heart of the Unity engine, integrating Entities with GameObjects.

The content pipeline will be reworked to allow more import tasks to run in the background, making it possible to iterate on projects more quickly.

New graphical capabilities include virtual texturing, advanced tessellation, and terrain services in the Shader Graph, making it possible to seamlessly blend in meshes.

There will also be an all-new animation system emphasising flexibility, animation at scale, and performance across multiple platforms.

For scripting, all of the functionality of the Mono backend will be moved to CoreCLR, which will bring "big performance gains to both the [Unity] Editor and the runtime".

The stable release is still a ways off, but the new version is expected in open beta in 2025.

The Unity Engine roadmap session from Unite 2024. The section on the new animation and world building tools starts at 38:05 in the recording.

Updated 26 September 2024: Unity has now posted the recording of the Unity Engine Roadmap session from Unite 2024.

It covers the ground summarized in the keynote in more detail, including the new animation and world building tools due in the next-generation releases.

New character animation system

New animation features include the option to preview animations without loading them into the scene, a new socket object for weapons and props, and a new animation remapping system.

Technical animators get a new underlying graph system along the lines of the Shader Graph, and a reworked hierarchical, layered State Machine.

For troubleshooting animations, a visual debugging system makes it possible to rewind gameplay frame by frame, and jump to the relevant point in the animation graph.

New world building tools

The video also shows the new world building workflows for technical artists, emphasising non-destructive editing via procedural rulesets.

New capabilities include the option to mask terrain procedurally by altitude or slope angle.

There are also demos of the new terrain blending capabilities, and the virtual texturing and tessellation systems, which increase detail in areas of the environment closest to the player.

Unified renderer replaces the URP and HDRP

However, the video also covers changes in the next-gen releases that weren't covered in the keynote.
For 3D artists, the key changes are in this section, which covers shading and rendering.

The old Built-In Render Pipeline will be marked as deprecated during the Unity 6 releases, and is scheduled for replacement by the newer Scriptable Render Pipeline.

Within the SRP, the Universal Render Pipeline (URP) for mobile devices and High Definition Render Pipeline (HDRP) for desktop and console will be replaced by a single unified renderer.

Rather than having to create different versions of lights, cameras, materials and effects for different hardware platforms, Unity says that artists will be able to author them once, then configure the rendering logic to scale across all platforms.

To test output, it will be possible to switch between rendering configurations via a drop-down within the Unity Editor.

New Unified Lit shader and Shader Graph 2 authoring environment

For shading, a Unified Lit shader based on the new OpenPBR standard will make it possible to author shaders in a standard way between Unity and your art package.

OpenPBR development is being driven by Adobe and Autodesk and will supersede their own individual Adobe Standard Material and Autodesk Standard Surface material specifications.

Unity is also working on Shader Graph 2, a complete rebuild of the node-based visual shader authoring environment.

The update will add more than two dozen new nodes, update over 50 existing nodes, and make it possible to create custom nodes using block shader code: Unity's new shader format.

Workflow improvements include new options for grouping nodes and snapping them to the canvas, and better node search capabilities.

Read a summary of the announcements from the Unite 2024 keynote on Unity's blog