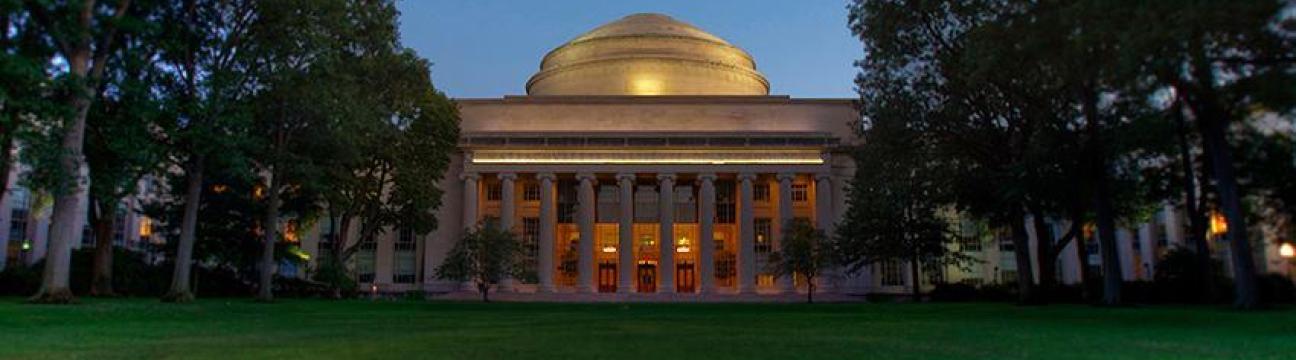

The Massachusetts Institute of Technology is a world leader in research and education.

192 people like this

0 Posts

0 Photos

0 Videos