This is the Official NVIDIA Page


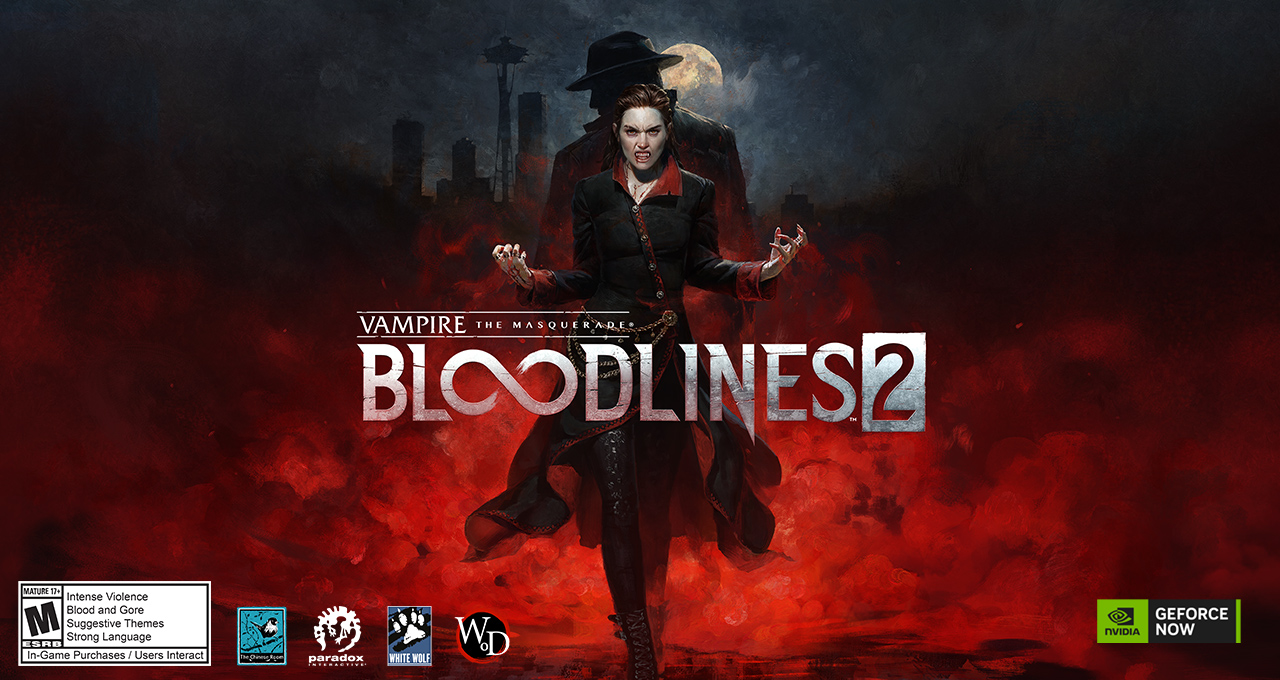

Fangs Out, Frames Up: Vampire: The Masquerade - Bloodlines 2 Leads a Killer GFN Thursday

blogs.nvidia.com

The nights grow longer and the shadows get bolder with Vampire: The Masquerade - Bloodlines 2 on GeForce NOW, launching with GeForce RTX 5080-class power.

Members can sink their teeth into the action role-playing game from Paradox Interactive as part of nine games coming to the cloud this week, including NINJA GAIDEN 4.

Be among the first to play The Outer Worlds 2 with early access available in the cloud starting tomorrow, Oct. 24.

https://blogs.nvidia.com/wp-content/uploads/2025/10/gfn-server-rollout-map-sofia-server-light-up-16x9-1.mp4

Atlanta is the latest region to get GeForce RTX 5080-class power, with Sofia, Bulgaria, coming next. Stay tuned to GFN Thursday for updates as more regions upgrade to NVIDIA Blackwell RTX. Follow along with the latest progress on the server rollout page.

The Night Belongs to the Cloud

Don't invite them in, just stream.

Vampire: The Masquerade - Bloodlines 2, Paradox's sequel to the cult classic, invites fans to sink their fangs into Seattle's dark nightlife, putting players in the immortal boots of Phyre, the newly awakened Elder vampire with a mysterious voice in their head. In every dark corner hides a new alliance or a rival, and every choice carves a path through the bloody politics of the night.

Master Seattle's secrets and the supernatural abilities of any chosen clan: shadow hunting with the Banu Haqim, blood bending with the Tremere or going fists first as a Brujah. Every story twist and council drama is shaped by dialogue, alliances and whispers from both friends and the ever-present stranger in the protagonist's mind.

Every neon-lit block streams beautifully on GeForce NOW, with GeForce RTX 5080-class power rolling out for the highest frame rates and sharpest graphics in the cloud, with no waiting for downloads and no mortal PC specs required.

Where Shadows Fall, Legends Rise

Slice first, ask questions never.

NINJA GAIDEN 4, a lightning-fast action-adventure title from Team NINJA, slices its way back into the spotlight, packed with brutal, razor-sharp combat and featuring a new protagonist, Yakumo. Known for its intensity, precision and stylish flair, the game demands focus and rewards mastery.

This new entry throws Yakumo into a deadly conflict blending myth and modern chaos. Explore sprawling levels filled with relentless enemies, cinematic boss battles that punish hesitation and a fluid combat system that chains combos together like a storm of steel. Every encounter feels like a duel where speed, timing and finesse define survival.

On GeForce NOW, NINJA GAIDEN 4 reaches its sharpest edge. No installs or massive downloads stand in the way: gain instant access with cloud-powered performance that keeps every slash crisp and every dodge responsive. Whether running on a high-end rig, a laptop or even a mobile device, the action always hits at peak intensity with GeForce NOW.

Boarding Pass to Chaos

Space has a new HR problem.

Early access for The Outer Worlds 2 is reaching the cloud, bringing all the offbeat charm, sharp wit and spacefaring chaos the series is loved for. From Obsidian Entertainment, the masters of branching stories and immersive worlds, this sequel leans even harder into meaningful choices and unexpected consequences.

On GeForce NOW, hopping into early access means instant boarding with no installs or waiting around. Every quip, shootout and twist in the storyline streams smoothly, no matter the screen. It's the perfect way to jump into space mischief before the game fully launches in the cloud on Wednesday, Oct. 29.

Roaring Good Games

Life finds a way, again.

Frontier Developments returns with Jurassic World Evolution 3, the next installment of the park-management series that lets players design, build and wrangle their own prehistoric paradise. Bigger storms, smarter dinosaurs and even bolder decisions keep every moment thrilling, because in this world, control is just an illusion.

In addition, members can look for the following:

- NINJA GAIDEN 4 (New release on Steam and Xbox, available on PC Game Pass, Oct. 20)
- Jurassic World Evolution 3 (New release on Steam, Oct. 21)
- Painkiller (New release on Steam, Oct. 21)
- Vampire: The Masquerade - Bloodlines 2 (New release on Steam, Oct. 21, GeForce RTX 5080-ready)
- The Outer Worlds 2 Advanced Access (New release on Steam, Battle.net and Xbox, available on PC Game Pass, Oct. 24, GeForce RTX 5080-ready)
- Tormented Souls 2 (New release on Steam and Xbox, available on PC Game Pass, Oct. 23)
- Super Fantasy Kingdom (New release on Steam, Oct. 24)
- VEIN (New release on Steam, Oct. 24)
- Tom Clancy's Splinter Cell: Pandora Tomorrow (Steam)

What are you planning to play this weekend? Let us know on X or in the comments below.

"What anime would you want to turn into a video game, or vice versa?" NVIDIA GeForce NOW (@NVIDIAGFN), October 22, 2025


Open Source AI Week: How Developers and Contributors Are Advancing AI Innovation

blogs.nvidia.com

As Open Source AI Week comes to a close, we're celebrating the innovation, collaboration and community driving open-source AI forward. Catch up on the highlights and stay tuned for more announcements coming next week at NVIDIA GTC Washington, D.C.

Wrapping Up a Week of Open-Source Momentum

From the stages of the PyTorch Conference to workshops across Open Source AI Week, this week spotlighted the creativity and progress defining the future of open AI.

Here are some highlights from the event:

- Honoring open-source contributions: Jonathan Dekhtiar, senior deep learning framework engineer at NVIDIA, received the PyTorch Contributor Award for his key role in designing the release mechanisms and packaging solutions for Python software and libraries that enable GPU-accelerated computing.
- CEO of Modular visits the NVIDIA booth: Chris Lattner, CEO of Modular and founder and chief architect of the open-source LLVM Compiler Infrastructure project, picks up the NVIDIA DGX Spark.
- Seven questions with founding researcher at fast.ai: Jeremy Howard, founding researcher at fast.ai and advocate for accessible deep learning, shares his insights on the future of open-source AI.

In his keynote at the PyTorch Conference, Howard also highlighted the growing strength of open-source communities, recognizing NVIDIA for its leadership in advancing openly available, high-performing AI models.

"The one company, actually, that has stood out, head and shoulders above the others, and that is two," he said. "One is Meta, the creators of PyTorch. The other is NVIDIA, who, just in recent months, has created some of the world's best models, and they are open source, and they are openly licensed."

vLLM Adds Upstream Support for NVIDIA Nemotron Models

Open-source innovation is accelerating. NVIDIA and the vLLM team are partnering to add vLLM upstream support for NVIDIA Nemotron models, transforming open large language model serving with lightning-fast performance, efficient scaling and simplified deployment across NVIDIA GPUs.

vLLM's optimized inference engine empowers developers to run Nemotron models like the new Nemotron Nano 2, a highly efficient small language reasoning model with a hybrid Transformer-Mamba architecture and a configurable thinking budget.

Learn more about how vLLM is accelerating open model innovation.

NVIDIA Expands Open Access to Nemotron RAG Models

NVIDIA is making eight NVIDIA Nemotron RAG models openly available on Hugging Face, expanding access beyond research to include the full suite of commercial models.

This release gives developers a wider range of tools to build retrieval-augmented generation (RAG) systems, improve search and ranking accuracy, and extract structured data from complex documents.

The newly released models include Llama-Embed-Nemotron-8B, which provides multilingual text embeddings built on Llama 3.1, and Omni-Embed-Nemotron-3B, which supports cross-modal retrieval for text, images, audio and video.

Developers can also access six production-grade models for text embedding, reranking and PDF data extraction, key components for real-world retrieval and document intelligence applications.

With these open-source models, developers, researchers and organizations can more easily integrate and experiment with RAG-based systems.

Developers can get started with Nemotron RAG on Hugging Face.

Building and Training AI Models With the Latest Open Datasets

NVIDIA is expanding access to high-quality open datasets that help developers overcome the challenges of large-scale data collection and focus on building advanced AI systems.

The latest release includes a collection of Nemotron-Personas datasets for Sovereign AI.
Each dataset is fully synthetic and grounded in real-world demographic, geographic and cultural data, with no personally identifiable information. The growing collection, which features personas from the U.S., Japan and India, enables model builders to design AI agents and systems that reflect the linguistic, social and contextual nuance of the nations they serve.

NVIDIA earlier this year released the NVIDIA PhysicalAI Open Datasets on Hugging Face, featuring more than 7 million robotics trajectories and 1,000 OpenUSD SimReady assets. Downloaded more than 6 million times, the datasets combine real-world and synthetic data from the NVIDIA Cosmos, Isaac, DRIVE and Metropolis platforms to kickstart physical AI development.

NVIDIA Inception Startups Highlight AI Innovation

At the PyTorch Conference's Startup Showcase, 11 startups, including members from the NVIDIA Inception program, are sharing their work developing practical AI applications and connecting with investors, potential customers and peers.

Runhouse, an AI infrastructure startup optimizing model deployment and orchestration, was crowned the 2025 PyTorch Startup Showcase Award Winner. The Community Choice Award was presented to CuraVoice, with CEO Sakhi Patel, CTO Shrey Modi and advisor Rahul Vishwakarma accepting the award on behalf of the team.

CuraVoice provides an AI-powered voice simulation platform for healthcare students and professionals, powered by NVIDIA Riva for speech recognition and text-to-speech and by NVIDIA NeMo for conversational AI models. The platform offers interactive exercises and adaptive feedback to improve patient communication skills.

Shrey Modi, CTO of CuraVoice, accepts the PyTorch Startup Showcase Community Choice Award.

In addition to CuraVoice, other Inception members, including Backfield AI, Graphsignal, Okahu AI, Snapshot AI and XOR, were featured participants in the Startup Showcase.

Snapshot AI delivers actionable, real-time insights to engineering teams using recursive retrieval-augmented generation (RAG), transformers and multimodal AI. The company's platform taps into the NVIDIA CUDA Toolkit to deliver high-performance analysis and rapid insights at scale.

XOR is a cybersecurity startup offering AI agents that automatically fix vulnerabilities in the supply chain of other AIs. The company helps enterprises eliminate vulnerabilities while complying with regulatory requirements. XOR's agentic technology uses NVIDIA cuVS vector search for indexing, real-time retrieval and code analysis. The company also uses GPU-based machine learning to train models to detect hidden backdoor patterns and prioritize high-value security outcomes.

From left to right: Dmitri Melikyan (Graphsignal, Inc.), Tobias Heldt (XOR), Youssef Harkati (BrightOnLABS), Vidhi Kothari (Seer Systems), Jonah Sargent (Node One) and Scott Suchyta (NVIDIA) at the Startup Showcase.

Highlights From Open Source AI Week

Attendees of Open Source AI Week are getting a peek at the latest advancements and creative projects that are shaping the future of open technology.

Here's a look at what's happening onsite:

- The world's smallest AI supercomputer: NVIDIA DGX Spark represents the cutting edge of AI computing hardware for enterprise and research applications.
- Humanoids and robot dogs, up close: Unitree robots are on display, captivating attendees with advanced mobility powered by the latest robotics technology.
- Why open source is important: Learn how it can empower developers to build stronger communities, iterate on features, and seamlessly integrate the best of open source AI.

Accelerating AI Research Through Open Models

A study from the Center for Security and Emerging Technology (CSET) published today shows how access to open model weights unlocks more opportunities for experimentation, customization and collaboration across the global research community.

The report outlines seven high-impact research use cases where open models are making a difference, including fine-tuning, continued pretraining, model compression and interpretability.

With access to weights, developers can adapt models for new domains, explore new architectures and extend functionality to meet their specific needs. This also supports trust and reproducibility.
When teams can run experiments on their own hardware, share updates and revisit earlier versions, they gain control and confidence in their results.

Additionally, the study found that nearly all open model users share their data, weights and code, building a fast-growing culture of collaboration. This open exchange of tools and knowledge strengthens partnerships between academia, startups and enterprises, facilitating innovation.

NVIDIA is committed to empowering the research community through the NVIDIA Nemotron family of open models, featuring not just open weights, but also pretraining and post-training datasets, detailed training recipes, and research papers that share the latest breakthroughs.

Read the full CSET study to learn how open models are helping the AI community move forward.

Advancing Embodied Intelligence Through Open-Source Innovation

At the PyTorch Conference, Jim Fan, director of robotics and distinguished research scientist at NVIDIA, discussed the Physical Turing Test, a way of measuring the performance of intelligent machines in the physical world.

With conversational AI now capable of fluent, lifelike communication, Fan noted that the next challenge is enabling machines to act with similar naturalism. The Physical Turing Test asks: can an intelligent machine perform a real-world task so fluidly that a human cannot tell whether a person or a robot completed it?

Fan highlighted that progress in embodied AI and physical AI depends on generating large amounts of diverse data and on access to open robot foundation models and simulation frameworks. He then walked through a unified workflow for developing embodied AI.

With synthetic data workflows like NVIDIA Isaac GR00T-Dreams, built on NVIDIA Cosmos world foundation models, developers can generate virtual worlds from images and prompts, speeding the creation of large sets of diverse and physically accurate data.

That data can then be used to post-train NVIDIA Isaac GR00T N open foundation models for generalized humanoid robot reasoning and skills. But before the models are deployed in the real world, these new robot skills need to be tested in simulation.

Open simulation and learning frameworks such as NVIDIA Isaac Sim and Isaac Lab allow robots to practice countless times across millions of virtual environments before operating in the real world, dramatically accelerating learning and deployment cycles.

Plus, with Newton, an open-source, differentiable physics engine built on NVIDIA Warp and OpenUSD, developers can bring high-fidelity simulation to complex robotic dynamics such as motion, balance and contact, reducing the simulation-to-real gap.

This accelerates the creation of physically capable AI systems that learn faster, perform more safely and operate effectively in real-world environments.

However, scaling embodied intelligence isn't just about compute; it's about access. Fan reaffirmed NVIDIA's commitment to open source, emphasizing how the company's frameworks and foundation models are shared to empower developers and researchers globally.

Developers can get started with NVIDIA's open embodied and physical AI models on Hugging Face.

Llama-Embed-Nemotron-8B Ranks Among Top Open Models for Multilingual Retrieval

NVIDIA's Llama-Embed-Nemotron-8B model has been recognized as the top open and portable model on the Multilingual Text Embedding Benchmark leaderboard.

Built on the meta-llama/Llama-3.1-8B architecture, Llama-Embed-Nemotron-8B is a research text embedding model that converts text into 4,096-dimensional vector representations. Designed for flexibility, it supports a wide range of use cases, including retrieval, reranking, semantic similarity and classification across more than 1,000 languages.

Trained on a diverse collection of 16 million query-document pairs, half from public sources and half synthetically generated, the model benefits from refined data generation techniques, hard-negative mining and model-merging approaches that contribute to its broad generalization capabilities.

This result builds on NVIDIA's ongoing research in open, high-performing AI models. Following earlier leaderboard recognition for the Llama-NeMo-Retriever-ColEmbed model, the success of Llama-Embed-Nemotron-8B highlights the value of openness, transparency and collaboration in advancing AI for the developer community.

Check out Llama-Embed-Nemotron-8B on Hugging Face, and learn more about the model, including architectural highlights, training methodology and performance evaluation.

What Open Source Teaches Us About Making AI Better

Open models are shaping the future of AI, enabling developers, enterprises and governments to innovate with transparency, customization and trust.
In the latest episode of the NVIDIA AI Podcast, NVIDIA's Bryan Catanzaro and Jonathan Cohen discuss how open models, datasets and research are laying the foundation for shared progress across the AI ecosystem.

The NVIDIA Nemotron family of open models represents a full-stack approach to AI development, connecting model design to the underlying hardware and software that power it. By releasing Nemotron models, data and training methodologies openly, NVIDIA aims to help others refine, adapt and build upon its work, resulting in a faster exchange of ideas and more efficient systems.

"When we as a community come together, contributing ideas, data and models, we all move faster," said Catanzaro in the episode. "Open technologies make that possible."

There's more happening this week at Open Source AI Week, including the start of the PyTorch Conference, bringing together developers, researchers and innovators pushing the boundaries of open AI.

Attendees can tune in to the special keynote address by Jim Fan, director of robotics and distinguished research scientist at NVIDIA, to hear the latest advancements in robotics, from simulation and synthetic data to accelerated computing. The keynote, titled "The Physical Turing Test: Solving General Purpose Robotics," will take place on Wednesday, Oct. 22, from 9:50-10:05 a.m. PT.

Andrej Karpathy's Nanochat Teaches Developers How to Train LLMs in Four Hours

Computer scientist Andrej Karpathy recently introduced Nanochat, calling it "the best ChatGPT that $100 can buy." Nanochat is an open-source, full-stack large language model (LLM) implementation built for transparency and experimentation. In about 8,000 lines of minimal, dependency-light code, Nanochat runs the entire LLM pipeline, from tokenization and pretraining to fine-tuning, inference and chat, all through a simple web user interface.

NVIDIA is supporting Karpathy's open-source Nanochat project by releasing two NVIDIA Launchables, making it easy to deploy and experiment with Nanochat across various NVIDIA GPUs.

With NVIDIA Launchables, developers can train and interact with their own conversational model in hours with a single click. The Launchables dynamically support different-sized GPUs, including NVIDIA H100 and L40S GPUs, on various clouds without need for modification. They also automatically work on any eight-GPU instance on NVIDIA Brev, so developers can get compute access immediately.

The first 10 users to deploy these Launchables will also receive free compute access to NVIDIA H100 or L40S GPUs.

Start training with Nanochat by deploying a Launchable:

- Nanochat Speedrun on NVIDIA H100
- Nanochat Speedrun on NVIDIA L40S

Andrej Karpathy's Next Experiments Begin With NVIDIA DGX Spark

Today, Karpathy received an NVIDIA DGX Spark, the world's smallest AI supercomputer, designed to bring the power of Blackwell right to a developer's desktop. With up to a petaflop of AI processing power and 128GB of unified memory in a compact form factor, DGX Spark empowers innovators like Karpathy to experiment, fine-tune and run massive models locally.

Building the Future of AI With PyTorch and NVIDIA

PyTorch, the fastest-growing AI framework, derives its performance from the NVIDIA CUDA platform and uses the Python programming language to unlock developer productivity.
This year, NVIDIA added Python as a first-class language to the CUDA platform, giving the PyTorch developer community greater access to CUDA.

CUDA Python includes key components that make GPU acceleration in Python easier than ever, with built-in support for kernel fusion, extension module integration and simplified packaging for fast deployment.

Following PyTorch's open collaboration model, CUDA Python is available on GitHub and PyPI.

According to PyPI Stats, PyTorch averaged over two million daily downloads, peaking at 2,303,217 on October 14, and had 65 million total downloads last month.

Every month, developers worldwide download hundreds of millions of NVIDIA libraries, including CUDA, cuDNN, cuBLAS and CUTLASS, mostly within Python and PyTorch environments. CUDA Python provides nvmath-python, a new library that acts as the bridge between Python code and these highly optimized GPU libraries.

Plus, kernel enhancements and support for next-generation frameworks make NVIDIA accelerated computing more efficient, adaptable and widely accessible.

NVIDIA maintains a long-standing collaboration with the PyTorch community through open-source contributions and technical leadership, as well as by sponsoring and participating in community events and activations.

At PyTorch Conference 2025 in San Francisco, NVIDIA will host a keynote address, five technical sessions and nine poster presentations.

NVIDIA's on the ground at Open Source AI Week. Stay tuned for a celebration highlighting the spirit of innovation, collaboration and community that drives open-source AI forward. Follow NVIDIA AI Developer on social channels for additional news and insights.

NVIDIA Spotlights Open Source Innovation

Open Source AI Week kicks off on Monday with a series of hackathons, workshops and meetups spotlighting the latest advances in AI, machine learning and open-source innovation.

The event brings together leading organizations, researchers and open-source communities to share knowledge, collaborate on tools and explore how openness accelerates AI development.

NVIDIA continues to expand access to advanced AI innovation by providing open-source tools, models and datasets designed to empower developers. With more than 1,000 open-source tools on NVIDIA GitHub repositories and over 500 models and 100 datasets on the NVIDIA Hugging Face collections, NVIDIA is accelerating the pace of open, collaborative AI development.

Over the past year, NVIDIA has become the top contributor in Hugging Face repositories, reflecting a deep commitment to sharing models, frameworks and research that empower the community.

https://blogs.nvidia.com/wp-content/uploads/2025/10/1016.mp4

Openly available models, tools and datasets are essential to driving innovation and progress. By empowering anyone to use, modify and share technology, open source fosters transparency and accelerates discovery, fueling breakthroughs that benefit industry and communities alike. That's why NVIDIA is committed to supporting the open source ecosystem.

We're on the ground all week. Stay tuned for a celebration highlighting the spirit of innovation, collaboration and community that drives open-source AI forward, with the PyTorch Conference serving as the flagship event.
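As a rough illustration of the retrieval use case the post describes for text embedding models such as Llama-Embed-Nemotron-8B, the sketch below ranks documents by cosine similarity to a query vector. It is a minimal sketch under stated assumptions: the three-dimensional vectors are toy stand-ins (the post says the real model produces 4,096-dimensional vectors), the function names `cosine_similarity` and `rank_documents` are hypothetical, and no NVIDIA or Hugging Face API is called.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings (assumption): a real embedding model would supply these.
query = [1.0, 0.0, 1.0]
docs = {
    "doc_a": [1.0, 0.0, 1.0],  # same direction as the query
    "doc_b": [0.0, 1.0, 0.0],  # orthogonal to the query
    "doc_c": [1.0, 1.0, 0.0],  # partially overlapping
}

ranking = rank_documents(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In a real pipeline the embedding step would come from the model itself, and a vector index would likely replace the linear scan once the document collection grows.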

Open Source AI Week How Developers and Contributors Are Advancing AI Innovationblogs.nvidia.comAs Open Source AI Week comes to a close, were celebrating the innovation, collaboration and community driving open-source AI forward. Catch up on the highlights and stay tuned for more announcements coming next week at NVIDIA GTC Washington, D.C.Wrapping Up a Week of Open-Source Momentum From the stages of the PyTorch Conference to workshops across Open Source AI Week, this week spotlighted the creativity and progress defining the future of open AI.Here are some highlights from the event:Honoring open-source contributions: Jonathan Dekhtiar, senior deep learning framework engineer at NVIDIA, received the PyTorch Contributor Award for his key role in designing the release mechanisms and packaging solutions for Python software and libraries that enable GPU-accelerated computing.CEO of Modular visits the NVIDIA booth: Chris Lattner, CEO of Modular and founder and chief architect of the open-source LLVM Compiler Infrastructure project, picks up the NVIDIA DGX Spark.Seven questions with founding researcher at fast.ai: Jeremy Howard, founding researcher at fast.ai and advocate for accessible deep learning, shares his insights on the future of open-source AI.In his keynote at the PyTorch Conference, Howard also highlighted the growing strength of open-source communities, recognizing NVIDIA for its leadership in advancing openly available, high-performing AI models.The one company, actually, that has stood out, head and shoulders above the others, and that is two, he said. One is Metathe creators of PyTorch. The other is NVIDIA, who, just in recent months, has created some of the worlds best models and they are open source, and they are openly licensed.vLLM Adds Upstream Support for NVIDIA Nemotron Models Open-source innovation is accelerating. 
NVIDIA and the vLLM team are partnering to add vLLM upstream support for NVIDIA Nemotron models, transforming open large language model serving with lightning-fast performance, efficient scaling and simplified deployment across NVIDIA GPUs.vLLMs optimized inference engine empowers developers to run Nemotron models like the new Nemotron Nano 2 a highly efficient small language reasoning model with a hybrid Transformer-Mamba architecture and a configurable thinking budget.Learn more about how vLLM is accelerating open model innovation.NVIDIA Expands Open Access to Nemotron RAG Models NVIDIA is making eight NVIDIA Nemotron RAG models openly available on Hugging Face, expanding access beyond research to include the full suite of commercial models.This release gives developers a wider range of tools to build retrieval-augmented generation (RAG) systems, improve search and ranking accuracy, and extract structured data from complex documents.The newly released models include Llama-Embed-Nemotron-8B, which provides multilingual text embeddings built on Llama 3.1, and Omni-Embed-Nemotron-3B, which supports cross-modal retrieval for text, images, audio and video.Developers can also access six production-grade models for text embedding, reranking and PDF data extraction key components for real-world retrieval and document intelligence applications.With these open-source models, developers, researchers and organizations can more easily integrate and experiment with RAG-based systems.Developers can get started with Nemotron RAG on Hugging Face.Building and Training AI Models With the Latest Open Datasets NVIDIA is expanding access to high-quality open datasets that help developers overcome the challenges of large-scale data collection and focus on building advanced AI systems.The latest release includes a collection of Nemotron-Personas datasets for Sovereign AI. 
Each dataset is fully synthetic and grounded in real-world demographic, geographic and cultural data, with no personally identifiable information. The growing collection, which features personas from the U.S., Japan and India, enables model builders to design AI agents and systems that reflect the linguistic, social and contextual nuance of the nations they serve.

NVIDIA earlier this year released the NVIDIA PhysicalAI Open Datasets on Hugging Face, featuring more than 7 million robotics trajectories and 1,000 OpenUSD SimReady assets. Downloaded more than 6 million times, the datasets combine real-world and synthetic data from the NVIDIA Cosmos, Isaac, DRIVE and Metropolis platforms to kickstart physical AI development.

NVIDIA Inception Startups Highlight AI Innovation

At the PyTorch Conference's Startup Showcase, 11 startups, including members of the NVIDIA Inception program, are sharing their work developing practical AI applications and connecting with investors, potential customers and peers.

Runhouse, an AI infrastructure startup optimizing model deployment and orchestration, was crowned the 2025 PyTorch Startup Showcase Award Winner.
The Community Choice Award was presented to CuraVoice, with CEO Sakhi Patel, CTO Shrey Modi and advisor Rahul Vishwakarma accepting the award on behalf of the team.

CuraVoice provides an AI-powered voice simulation platform for healthcare students and professionals, powered by NVIDIA Riva for speech recognition and text-to-speech and by NVIDIA NeMo for conversational AI models. The platform offers interactive exercises and adaptive feedback to improve patient communication skills.

Image caption: Shrey Modi, CTO of CuraVoice, accepts the PyTorch Startup Showcase Community Choice Award.

In addition to CuraVoice, other Inception members, including Backfield AI, Graphsignal, Okahu AI, Snapshot AI and XOR, were featured participants in the Startup Showcase.

Snapshot AI delivers actionable, real-time insights to engineering teams using recursive retrieval-augmented generation (RAG), transformers and multimodal AI. The company's platform taps into the NVIDIA CUDA Toolkit to deliver high-performance analysis and rapid insights at scale.

XOR is a cybersecurity startup offering AI agents that automatically fix vulnerabilities in the supply chain of other AIs. The company helps enterprises eliminate vulnerabilities while complying with regulatory requirements. XOR's agentic technology uses NVIDIA cuVS vector search for indexing, real-time retrieval and code analysis.
The company also uses GPU-based machine learning to train models to detect hidden backdoor patterns and prioritize high-value security outcomes.

Image caption: From left to right: Dmitri Melikyan (Graphsignal, Inc.), Tobias Heldt (XOR), Youssef Harkati (BrightOnLABS), Vidhi Kothari (Seer Systems), Jonah Sargent (Node One) and Scott Suchyta (NVIDIA) at the Startup Showcase.

Highlights From Open Source AI Week

Attendees of Open Source AI Week are getting a peek at the latest advancements and creative projects that are shaping the future of open technology.

Here's a look at what's happening onsite:

- The world's smallest AI supercomputer: NVIDIA DGX Spark represents the cutting edge of AI computing hardware for enterprise and research applications.
- Humanoids and robot dogs, up close: Unitree robots are on display, captivating attendees with advanced mobility powered by the latest robotics technology.
- Why open source is important: Learn how it can empower developers to build stronger communities, iterate on features, and seamlessly integrate the best of open source AI.

Accelerating AI Research Through Open Models

A study from the Center for Security and Emerging Technology (CSET) published today shows how access to open model weights unlocks more opportunities for experimentation, customization and collaboration across the global research community.

The report outlines seven high-impact research use cases where open models are making a difference, including fine-tuning, continued pretraining, model compression and interpretability.

With access to weights, developers can adapt models for new domains, explore new architectures and extend functionality to meet their specific needs. This also supports trust and reproducibility.
When teams can run experiments on their own hardware, share updates and revisit earlier versions, they gain control and confidence in their results.

Additionally, the study found that nearly all open model users share their data, weights and code, building a fast-growing culture of collaboration. This open exchange of tools and knowledge strengthens partnerships between academia, startups and enterprises, facilitating innovation.

NVIDIA is committed to empowering the research community through the NVIDIA Nemotron family of open models, featuring not just open weights but also pretraining and post-training datasets, detailed training recipes, and research papers that share the latest breakthroughs.

Read the full CSET study to learn how open models are helping the AI community move forward.

Advancing Embodied Intelligence Through Open-Source Innovation

At the PyTorch Conference, Jim Fan, director of robotics and distinguished research scientist at NVIDIA, discussed the Physical Turing Test, a way of measuring the performance of intelligent machines in the physical world.

With conversational AI now capable of fluent, lifelike communication, Fan noted that the next challenge is enabling machines to act with similar naturalism.
The Physical Turing Test asks: can an intelligent machine perform a real-world task so fluidly that a human cannot tell whether a person or a robot completed it?

Fan highlighted that progress in embodied AI and physical AI depends on generating large amounts of diverse data and on access to open robot foundation models and simulation frameworks, and he walked through a unified workflow for developing embodied AI.

With synthetic data workflows like NVIDIA Isaac GR00T-Dreams, built on NVIDIA Cosmos world foundation models, developers can generate virtual worlds from images and prompts, speeding the creation of large sets of diverse and physically accurate data.

That data can then be used to post-train NVIDIA Isaac GR00T N open foundation models for generalized humanoid robot reasoning and skills. But before the models are deployed in the real world, these new robot skills need to be tested in simulation.

Open simulation and learning frameworks such as NVIDIA Isaac Sim and Isaac Lab allow robots to practice countless times across millions of virtual environments before operating in the real world, dramatically accelerating learning and deployment cycles.

Plus, with Newton, an open-source, differentiable physics engine built on NVIDIA Warp and OpenUSD, developers can bring high-fidelity simulation to complex robotic dynamics such as motion, balance and contact, reducing the simulation-to-real gap.

This accelerates the creation of physically capable AI systems that learn faster, perform more safely and operate effectively in real-world environments.

However, scaling embodied intelligence isn't just about compute; it's about access.
Fan reaffirmed NVIDIA's commitment to open source, emphasizing how the company's frameworks and foundation models are shared to empower developers and researchers globally.

Developers can get started with NVIDIA's open embodied and physical AI models on Hugging Face.

Llama-Embed-Nemotron-8B Ranks Among Top Open Models for Multilingual Retrieval

NVIDIA's Llama-Embed-Nemotron-8B model has been recognized as the top open and portable model on the Multilingual Text Embedding Benchmark leaderboard.

Built on the meta-llama/Llama-3.1-8B architecture, Llama-Embed-Nemotron-8B is a research text embedding model that converts text into 4,096-dimensional vector representations. Designed for flexibility, it supports a wide range of use cases, including retrieval, reranking, semantic similarity and classification across more than 1,000 languages.

Trained on a diverse collection of 16 million query-document pairs, half from public sources and half synthetically generated, the model benefits from refined data generation techniques, hard-negative mining and model-merging approaches that contribute to its broad generalization capabilities.

This result builds on NVIDIA's ongoing research in open, high-performing AI models. Following earlier leaderboard recognition for the Llama-NeMoRetriever-ColEmbed model, the success of Llama-Embed-Nemotron-8B highlights the value of openness, transparency and collaboration in advancing AI for the developer community.

Check out Llama-Embed-Nemotron-8B on Hugging Face, and learn more about the model, including architectural highlights, training methodology and performance evaluation.

What Open Source Teaches Us About Making AI Better

Open models are shaping the future of AI, enabling developers, enterprises and governments to innovate with transparency, customization and trust.
In the latest episode of the NVIDIA AI Podcast, NVIDIA's Bryan Catanzaro and Jonathan Cohen discuss how open models, datasets and research are laying the foundation for shared progress across the AI ecosystem.

The NVIDIA Nemotron family of open models represents a full-stack approach to AI development, connecting model design to the underlying hardware and software that power it. By releasing Nemotron models, data and training methodologies openly, NVIDIA aims to help others refine, adapt and build upon its work, resulting in a faster exchange of ideas and more efficient systems.

"When we as a community come together, contributing ideas, data and models, we all move faster," said Catanzaro in the episode. "Open technologies make that possible."

There's more happening this week at Open Source AI Week, including the start of the PyTorch Conference, bringing together developers, researchers and innovators pushing the boundaries of open AI.

Attendees can tune in to the special keynote address by Jim Fan, director of robotics and distinguished research scientist at NVIDIA, to hear the latest advancements in robotics, from simulation and synthetic data to accelerated computing. The keynote, titled "The Physical Turing Test: Solving General Purpose Robotics," will take place on Wednesday, Oct. 22, from 9:50-10:05 a.m. PT.

Andrej Karpathy's Nanochat Teaches Developers How to Train LLMs in Four Hours

Computer scientist Andrej Karpathy recently introduced Nanochat, calling it "the best ChatGPT that $100 can buy." Nanochat is an open-source, full-stack large language model (LLM) implementation built for transparency and experimentation.
In about 8,000 lines of minimal, dependency-light code, Nanochat runs the entire LLM pipeline, from tokenization and pretraining to fine-tuning, inference and chat, all through a simple web user interface.

NVIDIA is supporting Karpathy's open-source Nanochat project by releasing two NVIDIA Launchables, making it easy to deploy and experiment with Nanochat across various NVIDIA GPUs.

With NVIDIA Launchables, developers can train and interact with their own conversational model in hours with a single click. The Launchables dynamically support different-sized GPUs, including NVIDIA H100 and L40S GPUs, on various clouds without need for modification. They also automatically work on any eight-GPU instance on NVIDIA Brev, so developers can get compute access immediately.

The first 10 users to deploy these Launchables will also receive free compute access to NVIDIA H100 or L40S GPUs.

Start training with Nanochat by deploying a Launchable:

- Nanochat Speedrun on NVIDIA H100
- Nanochat Speedrun on NVIDIA L40S

Andrej Karpathy's Next Experiments Begin With NVIDIA DGX Spark

Today, Karpathy received an NVIDIA DGX Spark, the world's smallest AI supercomputer, designed to bring the power of Blackwell right to a developer's desktop. With up to a petaflop of AI processing power and 128GB of unified memory in a compact form factor, DGX Spark empowers innovators like Karpathy to experiment, fine-tune and run massive models locally.

Building the Future of AI With PyTorch and NVIDIA

PyTorch, the fastest-growing AI framework, derives its performance from the NVIDIA CUDA platform and uses the Python programming language to unlock developer productivity.
This year, NVIDIA added Python as a first-class language to the CUDA platform, giving the PyTorch developer community greater access to CUDA.

CUDA Python includes key components that make GPU acceleration in Python easier than ever, with built-in support for kernel fusion, extension module integration and simplified packaging for fast deployment.

Following PyTorch's open collaboration model, CUDA Python is available on GitHub and PyPI.

According to PyPI Stats, PyTorch averaged over two million daily downloads, peaking at 2,303,217 on October 14, and had 65 million total downloads last month.

Every month, developers worldwide download hundreds of millions of NVIDIA libraries, including CUDA, cuDNN, cuBLAS and CUTLASS, mostly within Python and PyTorch environments. CUDA Python provides nvmath-python, a new library that acts as the bridge between Python code and these highly optimized GPU libraries.

Plus, kernel enhancements and support for next-generation frameworks make NVIDIA accelerated computing more efficient, adaptable and widely accessible.

NVIDIA maintains a long-standing collaboration with the PyTorch community through open-source contributions and technical leadership, as well as by sponsoring and participating in community events and activations.

At PyTorch Conference 2025 in San Francisco, NVIDIA will host a keynote address, five technical sessions and nine poster presentations.

NVIDIA's on the ground at Open Source AI Week. Stay tuned for a celebration highlighting the spirit of innovation, collaboration and community that drives open-source AI forward.
Follow NVIDIA AI Developer on social channels for additional news and insights.

NVIDIA Spotlights Open Source Innovation

Open Source AI Week kicks off on Monday with a series of hackathons, workshops and meetups spotlighting the latest advances in AI, machine learning and open-source innovation.

The event brings together leading organizations, researchers and open-source communities to share knowledge, collaborate on tools and explore how openness accelerates AI development.

NVIDIA continues to expand access to advanced AI innovation by providing open-source tools, models and datasets designed to empower developers. With more than 1,000 open-source tools on NVIDIA GitHub repositories and over 500 models and 100 datasets on the NVIDIA Hugging Face collections, NVIDIA is accelerating the pace of open, collaborative AI development.

Over the past year, NVIDIA has become the top contributor in Hugging Face repositories, reflecting a deep commitment to sharing models, frameworks and research that empower the community.

https://blogs.nvidia.com/wp-content/uploads/2025/10/1016.mp4

Openly available models, tools and datasets are essential to driving innovation and progress. By empowering anyone to use, modify and share technology, open source fosters transparency and accelerates discovery, fueling breakthroughs that benefit both industry and communities alike. That's why NVIDIA is committed to supporting the open source ecosystem.

We're on the ground all week. Stay tuned for a celebration highlighting the spirit of innovation, collaboration and community that drives open-source AI forward, with the PyTorch Conference serving as the flagship event.
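The Llama-Embed-Nemotron-8B item above describes text being converted into 4,096-dimensional vectors for retrieval and semantic similarity. As a minimal sketch of how such vectors are commonly compared, the snippet below ranks documents by cosine similarity. The vectors are made-up 4-dimensional stand-ins, the function names are hypothetical, and nothing here calls the actual model; cosine similarity is assumed as the scoring function, so check the model card for the recommended usage.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy stand-ins for embeddings; a real embedding model would produce much longer vectors.
query = [1.0, 0.0, 1.0, 0.0]
docs = {
    "doc_a": [1.0, 0.0, 1.0, 0.0],  # same direction as the query
    "doc_b": [0.0, 1.0, 0.0, 1.0],  # orthogonal to the query
    "doc_c": [1.0, 1.0, 1.0, 1.0],  # partially aligned
}
ranking = rank_documents(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In a retrieval system the same ranking step would run over embeddings produced by the model, usually through an approximate nearest-neighbor index rather than a full scan.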

NVIDIA GTC Washington, DC: Live Updates on What's Next in AI
blogs.nvidia.com

Countdown to GTC Washington, DC: What to Watch Next Week

Next week, Washington, D.C., becomes the center of gravity for artificial intelligence. NVIDIA GTC Washington, D.C., lands at the Walter E. Washington Convention Center Oct. 27-29, and for those who care about where computing is headed, this is the moment to pay attention.

The headline act: NVIDIA founder and CEO Jensen Huang's keynote address on Tuesday, Oct. 28, at 12 p.m. ET. Expect more than product news: expect a roadmap for how AI will reshape industries, infrastructure and the public sector.

Before that, the pregame show kicks off at 8:30 a.m. ET with Brad Gerstner, Patrick Moorhead and Kristina Partsinevelos offering sharp takes on what's coming.

But GTC offers more than a keynote. It provides full immersion: 70+ sessions, hands-on workshops and demos covering everything from agentic AI and robotics to quantum computing and AI-native telecom networks. It's where developers meet decision-makers, and ideas turn into action. Exhibits-only passes are still available.

Bookmark this space. Starting Monday, NVIDIA will live-blog the news, the color and the context, straight from the floor.

GeForce NOW Brings 18 Games to the Cloud in October for a Spooky Good Time
blogs.nvidia.com

Editor's note: This blog has been updated to include an additional game for October, The Outer Worlds 2.

October is creeping in with plenty of gaming treats. From thrilling adventures to spine-tingling scares, the cloud gaming lineup is packed with 18 new games, including the highly anticipated shooter Battlefield 6, launching on GeForce NOW this month. But first, catch the six games coming this week.

Miami and Warsaw, Poland, are the latest regions to get GeForce RTX 5080-class power, with Portland and Ashburn coming up next. Stay tuned to GFN Thursday for updates as more regions upgrade to Blackwell RTX. Follow along with the latest progress on the server rollout page.

Image caption: Portland and Ashburn will be the next regions to light up with GeForce RTX 5080-class power.

This week, inZOI and Total War: Warhammer III join the lineup of GeForce RTX 5080-ready titles, both already available on the service. Look for the "GeForce RTX 5080 Ready" row in the app or check out the full list.

Falling for New Games

Catch the games ready to play today:

- Train Sim World 6 (New release on Steam, Sept. 30)
- Alien: Rogue Incursion Evolved Edition (New release on Steam, Sept. 30)
- Car Dealer Simulator (Steam)
- Nightingale (Epic Games Store)
- Ready or Not (Epic Games Store)
- STALCRAFT: X (Steam)

New GeForce RTX 5080-ready games:

- inZOI (Steam)
- Total War: Warhammer III (Steam and Epic Games Store)

Catch the full list of games coming to the cloud in October:

- King of Meat (New release on Steam, Oct. 7)
- Seafarer: The Ship Sim (New release on Steam, Oct. 7)
- Little Nightmares III (New release on Steam, Oct. 9)
- Battlefield 6 (New release on Steam and EA app, Oct. 10)
- Ball x Pit (New release on Steam, Oct. 15)
- Fellowship (New release on Steam, Oct. 16)
- Jurassic World Evolution 3 (New release on Steam, Oct. 21)
- Painkiller (New release on Steam, Oct. 21)
- Vampire: The Masquerade - Bloodlines 2 (New release on Steam, Oct. 21)
- Tormented Souls 2 (New release on Steam, Oct. 23)
- Super Fantasy Kingdom (New release on Steam, Oct. 24)
- Earth vs. Mars (New release on Steam, Oct. 29)
- The Outer Worlds 2 (New release on Steam, Battle.net and Xbox, available on PC Game Pass, Oct. 29)
- ARC Raiders (New release on Steam, Oct. 30)

Stacked September

In addition to the 17 games announced in September, an extra dozen joined over the month, including the newly added Train Sim World 6 this week:

- Call of Duty: Modern Warfare III (Steam, Battle.net and Xbox, available on PC Game Pass)
- Field of Glory II: Medieval (Steam)
- Goblin Cleanup (New release on Steam)
- Phoenix Wright: Ace Attorney Trilogy (Steam)
- Professional Fishing 2 (New release on Steam)
- Project Winter (New release on Epic Games Store)
- Renown (New release on Steam)
- Sworn (New release on Xbox, available on PC Game Pass)
- Two Point Campus (Steam, Epic Games Store)
- Two Point Museum (Steam, Epic Games Store)
- Town to City (New release on Steam)

What are you planning to play this weekend? Let us know on X or in the comments below.

"If you could only play one genre of game for the rest of your life, what would it be and why? Extra credit: screenshots or clips of that genre in action!" NVIDIA GeForce NOW (@NVIDIAGFN), October 1, 2025

Open Secret: How NVIDIA Nemotron Models, Datasets and Techniques Fuel AI Development
blogs.nvidia.com

Open technologies, made available to developers and businesses to adopt, modify and innovate with, have been part of every major technology shift, from the birth of the internet to the early days of cloud computing. AI should follow the same path.

That's why the NVIDIA Nemotron family of multimodal AI models, datasets and techniques is openly available. Accessible for research and commercial use, from local PCs to enterprise-scale systems, Nemotron provides an open foundation for building AI applications. It's available for developers to get started on GitHub, Hugging Face and OpenRouter.

Nemotron enables developers, startups and enterprises of any size to use models trained with transparent, open-source training data. It offers tools to accelerate every phase of development, from customization to deployment.

The technology's transparency means that its adopters can understand how their models work and trust the results they provide.

Nemotron's capabilities for generalized intelligence and agentic AI reasoning, and its adaptability to specialized AI use cases, have led to its widespread use today by AI innovators and leaders across industries such as manufacturing, healthcare, education and retail.

What's NVIDIA Nemotron?

NVIDIA Nemotron is a collection of open-source AI technologies designed for efficient AI development at every stage.
It includes:

- Multimodal models: State-of-the-art AI models, delivered as open checkpoints, that excel at graduate-level scientific reasoning, advanced math, coding, instruction following, tool calling and visual reasoning.
- Pretraining, post-training and multimodal datasets: Collections of carefully chosen text, image and video data that teach AI models skills including language, math and problem-solving.
- Numerical precision algorithms and recipes: Advanced precision techniques that make AI faster and cheaper to run while keeping answers accurate.
- System software for scaling training efficiently on GPU clusters: Optimized software and frameworks that unlock accelerated training and inference on NVIDIA GPUs at massive scale for the largest models.
- Post-training methodologies and software: Fine-tuning steps that make AI smarter, safer and better at specific jobs.

Nemotron is part of NVIDIA's wider efforts to provide open, transparent and adaptable AI platforms for developers, industry leaders and AI infrastructure builders across the private and public sectors.

What's the Difference Between Generalized Intelligence and Specialized Intelligence?

NVIDIA built Nemotron to raise the bar for generalized intelligence capabilities, including AI reasoning, while also accelerating specialization, helping businesses worldwide adopt AI for industry-specific challenges.

Generalized intelligence refers to models trained on vast public datasets to perform a wide range of tasks. It serves as the engine needed for broad problem-solving and reasoning tasks.
Specialized intelligence learns the unique language, processes and priorities of an industry or organization, giving AI models the ability to adapt to specific real-world applications.

To deliver AI at scale across every industry, both are essential.

That's why Nemotron provides pretrained foundation models optimized for a range of computing platforms, as well as tools like NVIDIA NeMo and NVIDIA Dynamo to transform generalized AI models into custom models tailored for specialized intelligence.

How Are Developers and Enterprises Using Nemotron?

NVIDIA is building Nemotron to accelerate the work of developers everywhere and to inform the design of future AI systems.

From researchers to startups and global enterprises, developers need flexible, trustworthy AI. Nemotron offers the tools to build, customize and integrate AI for virtually any field.

CrowdStrike is integrating its Charlotte AI AgentWorks no-code platform for security teams with Nemotron, helping to power and secure the agentic ecosystem. This collaboration redefines security operations by enabling analysts to build and deploy specialized AI agents at scale, leveraging trusted, enterprise-grade security with Nemotron models.

DataRobot is using Nemotron as the open foundation for training, customizing and managing AI agents at scale in the Agent Workforce Platform co-developed with NVIDIA, a solution for building, operating and governing a fully functional AI agent workforce in on-premises, hybrid and multi-cloud environments.

ServiceNow introduced the Apriel Nemotron 15B model earlier this year in partnership with NVIDIA.
Post-trained with data from both companies, the model is purpose-built for real-time workflow execution and delivers advanced reasoning in a smaller size, making it faster, more efficient and cost-effective.

UK-LLM, a sovereign AI initiative led by University College London, used Nemotron open-source techniques and datasets to develop an AI reasoning model for English and Welsh.

NVIDIA also uses the insights gained from developing Nemotron to inform the design of its next-generation systems, including Grace Blackwell, Vera Rubin and Feynman. The latest innovations in AI models, including reduced precision, sparse arithmetic, new attention mechanisms and optimization algorithms, all shape GPU architectures.

For example, NVFP4, a new data format that uses just four bits per parameter during large language model (LLM) training, was discovered with Nemotron. This advancement, which dramatically reduces energy use, is influencing the design of future NVIDIA systems.

NVIDIA also improves Nemotron with open technologies built by the broader AI community.

Alibaba's Qwen open model has provided data augmentation that has improved Nemotron's pretraining and post-training datasets.
The latest Qwen3-Next architecture pushed the frontier of long-context AI; the model leverages Gated Delta Networks from NVIDIA research and MIT.

DeepSeek R1, a pioneer in AI reasoning, led to the development of Nemotron math, code and reasoning open datasets that can be used to teach models how to think.

OpenAI's gpt-oss open-weight models demonstrate incredible reasoning, math and tool calling capabilities, including adjustable reasoning settings, that can be used to strengthen Nemotron post-training datasets.

The Llama collection of open models by Meta is the foundation for Llama-Nemotron, an open family of models that used Nemotron datasets and recipes to add advanced reasoning capabilities.

Start training and customizing AI models and agents with NVIDIA Nemotron models and data on Hugging Face, or try models for free on OpenRouter. Developers using NVIDIA RTX PCs can access Nemotron via the llama.cpp framework.

Join NVIDIA for Agentic AI Day at NVIDIA GTC Washington, D.C., on Wednesday, Oct. 29. The event will bring together developers, researchers and technology leaders to highlight how NVIDIA technologies are accelerating national AI priorities and powering the next generation of AI agents.

Stay up to date on agentic AI, Nemotron and more by subscribing to NVIDIA developer news, joining the developer community and following NVIDIA AI on LinkedIn, Instagram, X and Facebook.
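For a sense of scale on the four-bits-per-parameter figure cited above for NVFP4, the sketch below compares raw weight storage at a few bit widths. It is simple arithmetic under stated assumptions: the 8-billion-parameter count is a hypothetical example, the function name is made up for illustration, and the calculation ignores scaling factors, optimizer state and activations, so it says nothing direct about energy use or end-to-end training memory.

```python
def weight_storage_gib(num_params, bits_per_param):
    """Raw storage for the weights alone, in GiB (bits -> bytes -> GiB)."""
    return num_params * bits_per_param / 8 / 2**30


# Hypothetical model size, chosen only for illustration.
NUM_PARAMS = 8_000_000_000

# Bit widths to compare; the 4-bit row matches the "four bits per parameter" figure.
for label, bits in [("32-bit", 32), ("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>6}: {weight_storage_gib(NUM_PARAMS, bits):6.2f} GiB")
```

Under these assumptions, going from 16 bits to 4 bits per parameter cuts raw weight storage by a factor of four; real formats carry some additional metadata, so actual savings will be somewhat smaller.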

Open Secret: How NVIDIA Nemotron Models, Datasets and Techniques Fuel AI Developmentblogs.nvidia.comOpen technologies made available to developers and businesses to adopt, modify and innovate with have been part of every major technology shift, from the birth of the internet to the early days of cloud computing. AI should follow the same path.Thats why the NVIDIA Nemotron family of multimodal AI models, datasets and techniques is openly available. Accessible for research and commercial use, from local PCs to enterprise-scale systems, Nemotron provides an open foundation for building AI applications. Its available for developers to get started on GitHub, Hugging Face and OpenRouter.Nemotron enables developers, startups and enterprises of any size to use models trained with transparent, open-source training data. It offers tools to accelerate every phase of development, from customization to deployment.The technologys transparency means that its adopters can understand how their models work and trust the results they provide.Nemotrons capabilities for generalized intelligence and agentic AI reasoning and its adaptability to specialized AI use cases have led to its widespread use today by AI innovators and leaders across industries such as manufacturing, healthcare, education and retail.Whats NVIDIA Nemotron?NVIDIA Nemotron is a collection of open-source AI technologies designed for efficient AI development at every stage. 
It includes:

Multimodal models: State-of-the-art AI models, delivered as open checkpoints, that excel at graduate-level scientific reasoning, advanced math, coding, instruction following, tool calling and visual reasoning.

Pretraining, post-training and multimodal datasets: Collections of carefully chosen text, image and video data that teach AI models skills including language, math and problem-solving.

Numerical precision algorithms and recipes: Advanced precision techniques that make AI faster and cheaper to run while keeping answers accurate.

System software for scaling training efficiently on GPU clusters: Optimized software and frameworks that accelerate training and inference on NVIDIA GPUs at massive scale for the largest models.

Post-training methodologies and software: Fine-tuning steps that make AI smarter, safer and better at specific jobs.

Nemotron is part of NVIDIA's wider efforts to provide open, transparent and adaptable AI platforms for developers, industry leaders and AI infrastructure builders across the private and public sectors.

What's the Difference Between Generalized Intelligence and Specialized Intelligence?

NVIDIA built Nemotron to raise the bar for generalized intelligence capabilities, including AI reasoning, while also accelerating specialization, helping businesses worldwide adopt AI for industry-specific challenges.

Generalized intelligence refers to models trained on vast public datasets to perform a wide range of tasks. It serves as the engine needed for broad problem-solving and reasoning tasks.
Specialized intelligence learns the unique language, processes and priorities of an industry or organization, giving AI models the ability to adapt to specific real-world applications.

To deliver AI at scale across every industry, both are essential.

That's why Nemotron provides pretrained foundation models optimized for a range of computing platforms, as well as tools like NVIDIA NeMo and NVIDIA Dynamo to transform generalized AI models into custom models tailored for specialized intelligence.

How Are Developers and Enterprises Using Nemotron?

NVIDIA is building Nemotron to accelerate the work of developers everywhere and to inform the design of future AI systems.

From researchers to startups and global enterprises, developers need flexible, trustworthy AI. Nemotron offers the tools to build, customize and integrate AI for virtually any field.

CrowdStrike is integrating its Charlotte AI AgentWorks no-code platform for security teams with Nemotron, helping to power and secure the agentic ecosystem. This collaboration redefines security operations by enabling analysts to build and deploy specialized AI agents at scale, leveraging trusted, enterprise-grade security with Nemotron models.

DataRobot is using Nemotron as the open foundation for training, customizing and managing AI agents at scale in the Agent Workforce Platform co-developed with NVIDIA, a solution for building, operating and governing a fully functional AI agent workforce in on-premises, hybrid and multi-cloud environments.

ServiceNow introduced the Apriel Nemotron 15B model earlier this year in partnership with NVIDIA.
Post-trained with data from both companies, the model is purpose-built for real-time workflow execution and delivers advanced reasoning in a smaller size, making it faster, more efficient and cost-effective.

UK-LLM, a sovereign AI initiative led by University College London, used Nemotron open-source techniques and datasets to develop an AI reasoning model for English and Welsh.

NVIDIA also uses the insights gained from developing Nemotron to inform the design of its next-generation systems, including Grace Blackwell, Vera Rubin and Feynman. The latest innovations in AI models, including reduced precision, sparse arithmetic, new attention mechanisms and optimization algorithms, all shape GPU architectures.

For example, NVFP4, a new data format that uses just four bits per parameter during large language model (LLM) training, was discovered with Nemotron. This advancement, which dramatically reduces energy use, is influencing the design of future NVIDIA systems.

NVIDIA also improves Nemotron with open technologies built by the broader AI community.

Alibaba's Qwen open model has provided data augmentation that has improved Nemotron's pretraining and post-training datasets.
The latest Qwen3-Next architecture pushed the frontier of long-context AI; the model leverages Gated Delta Networks from NVIDIA research and MIT.

DeepSeek R1, a pioneer in AI reasoning, led to the development of Nemotron math, code and reasoning open datasets that can be used to teach models how to think.

OpenAI's gpt-oss open-weight models demonstrate incredible reasoning, math and tool-calling capabilities, including adjustable reasoning settings, that can be used to strengthen Nemotron post-training datasets.

The Llama collection of open models by Meta is the foundation for Llama-Nemotron, an open family of models that used Nemotron datasets and recipes to add advanced reasoning capabilities.

Start training and customizing AI models and agents with NVIDIA Nemotron models and data on Hugging Face, or try models for free on OpenRouter. Developers using NVIDIA RTX PCs can access Nemotron via the llama.cpp framework.

Join NVIDIA for Agentic AI Day at NVIDIA GTC Washington, D.C., on Wednesday, Oct. 29. The event will bring together developers, researchers and technology leaders to highlight how NVIDIA technologies are accelerating national AI priorities and powering the next generation of AI agents.

Stay up to date on agentic AI, Nemotron and more by subscribing to NVIDIA developer news, joining the developer community and following NVIDIA AI on LinkedIn, Instagram, X and Facebook.

-

Canada Goes All In on AI: NVIDIA Joins Nation's Technology Leaders in Montreal to Shape Sovereign AI Strategy
blogs.nvidia.com

Canada's role as a leader in artificial intelligence was on full display at this week's All In Canada AI Ecosystem event.

NVIDIA Vice President of Generative AI Software Kari Briski today joined Canada's Minister of Artificial Intelligence and Digital Innovation Evan Solomon and Aidan Gomez, cofounder and CEO of Cohere, in a special address moderated by SiriusXM host Amber Mac.

"You're all here to deliver the next big thing," Solomon told the room full of founders, researchers, investors and students. "The AI revolution is the birth not just of a new technology; this is the birth of the age of the entrepreneur."

The session comes as Canadian communications technology company and NVIDIA Cloud Partner TELUS announces the launch of Canada's first fully sovereign AI factory in Rimouski, Quebec, powered by the latest NVIDIA accelerated computing, and financial services company RBC Capital Markets continues building AI agents for capital markets using NVIDIA software.

"For our government, for our country, All In means building digital sovereignty, the most pressing policy, democratic issue of our time," Solomon said.

"Every nation should develop its own AI, not just outsource it," Briski said during the panel conversation following Solomon's address. "AI must reflect local values, understand cultural context and align with national norms and policies. It needs to speak and write in the nuanced patterns of your natural language. Digital intelligence isn't something you can simply outsource."

From left to right: Amber Mac, SiriusXM podcast host and moderator; Kari Briski, vice president of generative AI software for enterprise at NVIDIA; Aidan Gomez, cofounder and CEO of Cohere; Evan Solomon, Canada's Minister of Artificial Intelligence.

The event marks a pivotal moment in Canada's AI journey, bringing together public and private sector leaders to spotlight the national infrastructure, innovation and policy that shape the future of artificial intelligence. It underscores the country's commitment to digital sovereignty, economic competitiveness and the responsible development of AI.

"Canada must own the tools and the rules that matter at this critical moment," Solomon said. "We need our digital insurance policy, and that's what we're building."

Canada's AI momentum is accelerating.

TELUS' new facility, powered by NVIDIA's computing and software and built in collaboration with HPE, offers end-to-end AI capabilities, from model training to inferencing, while ensuring full data residency and control within Canadian borders.

The factory is already serving clients including OpenText, and is powered by 99% renewable energy and TELUS' PureFibre network.

Accenture will develop and deploy industry-specific solutions on the TELUS sovereign AI platform, accelerating AI adoption across its Canadian clients.

And League, Canada's leading healthcare consumer experience provider, will run its comprehensive suite of AI-powered healthcare solutions using the TELUS Sovereign AI Factory.

This event is the latest in a global wave of initiatives as countries activate AI to supercharge their economies and research ecosystems.

Over the past year, NVIDIA founder and CEO Jensen Huang has appeared at events in France, Germany, India, Japan and the U.K., joining heads of state and industry leaders to highlight national AI strategies, announce infrastructure investments and accelerate public-private collaboration.

"Leadership is not a birthright," Solomon said. "It has to be earned again and again, and the competition is fierce."

And last year, during a visit to Canada, Huang highlighted Canada's pioneering role in modern AI, describing it as the epicenter of innovation in modern AI, building on the foundational work of pioneering Canadian AI researchers such as Geoffrey Hinton and Yoshua Bengio, who is also speaking at the conference.

RBC Capital Markets works with NVIDIA software to build enterprise-grade AI agents for capital markets. This enables global institutions to deploy intelligent systems tailored to local needs.

These agents, customized with NVIDIA NeMo agent lifecycle tools and deployed using NVIDIA NIM microservices, are helping transform RBC Capital Markets' research for faster delivery of insights to clients.

RBC Capital Markets, TELUS and NVIDIA are sharing more on best practices for agentic AI development in a special session at All In on Wednesday from 4:15-5 p.m. ET.