Curated stories on user experience, usability, and product design. By @fabriciot and @caioab.



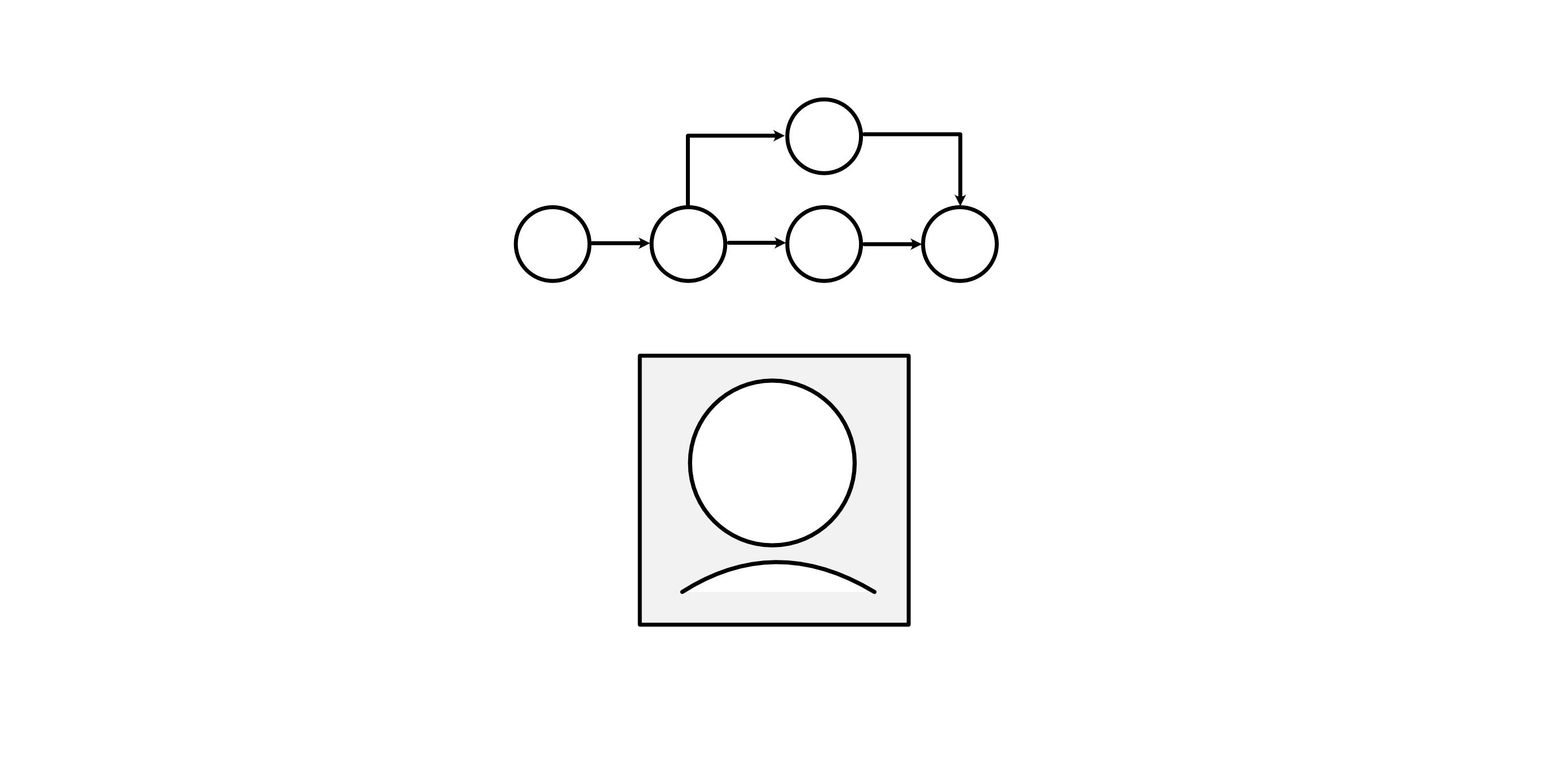

Designing the Jarvis moment

uxdesign.cc

Apps SDK, design principles, and the future of contextual UX.

Current ChatGPT app integrations like Zillow, Booking.com, and Canva, illustrating seamless in-chat access. Source: OpenAI, Introducing Apps in ChatGPT

Do you remember how Tony Stark talked to JARVIS in Iron Man?

He simply said what he wanted to do, and JARVIS got it done. There was no need to open menus, switch applications, or decide which interface to use next. Every option that could have slowed him down was already resolved for him, so his attention stayed on the task itself.

The scene captures J.A.R.V.I.S.'s natural-language interface giving Tony instant access to multiple suit configurations and diagnostics with a single command.

That kind of experience is exactly what OpenAI is now reaching for. In Brad Lightcap's recent interview with Bloomberg, he shared a vision where third-party apps could work together seamlessly inside ChatGPT. Users would never have to leave a conversation to get something done.

"It's the contextual aspect of 'I'm doing X' or 'I need Y', 'I'm on a road trip and I want to know what playlist would go well with this', that allows you to use ChatGPT to solve higher-level tasks and integrate apps contextually," said Brad Lightcap in a Bloomberg interview.

For designers, the real challenge lies in understanding what this shift implies. When conversations become the new interface and tools can appear contextually, how should we design the flow, timing, and handoff between chat and visual elements?

Evolution from plugins, MCP, to SDK

To understand what this shift truly means for design, we first need to look at how the system evolved.

In the early days of plugins, tools lived in separate spaces. Users had to switch between apps, browser extensions, or new tabs to complete even the simplest task.

Then came the Model Context Protocol, or MCP. It created a standard way for ChatGPT to talk with external tools and data sources. MCP allowed the model to call a tool and pass structured information through a single, consistent channel. It was an invisible bridge between the model and the outside world.

Building on that foundation, OpenAI introduced the Apps Software Development Kit (SDK), a toolkit that makes this bridge visible and interactive. It determines when a tool appears, how it looks, and how people can interact with it directly inside the chat. With the SDK, users can engage directly with apps through buttons, cards, or widgets without leaving the conversation.

Image from OpenAI's Apps SDK Design Guidelines illustrating how integrated apps visually coexist within ChatGPT's conversation interface. Source: OpenAI, Apps SDK Design Guidelines

How the Apps SDK boosts efficiency and product growth

At this point, designers can probably already imagine how the Apps SDK begins to reshape the user experience. In essence, the SDK embodies the logic behind Hick's Law and Fitts's Law: it reduces the visual noise that slows decision-making and shortens the physical distance between intention and completion.

Take Coursera, for example. Previously, a learner exploring UX courses might spend minutes choosing which platform to open, typing "UX design" into a search bar, scrolling through long lists, and clicking in and out of pages to check ratings or instructors. Every choice added a moment of hesitation, fragmenting attention and stretching the time it took to act.

Image from Coursera's page illustrating how users face a complex, multi-step interface when searching for UX design courses. Source: Coursera, University of Michigan UX Design Search

However, Coursera's integration with ChatGPT illustrates a successful application of Hick's Law principles, significantly reducing decision complexity. A learner can simply ask a question in natural language inside ChatGPT's text field. A video preview of relevant courses then appears directly within the chat, reducing the number of immediate choices presented to the learner.
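
Both laws named above have simple quantitative forms, and seeing them side by side makes the argument easier to reason about. The sketch below is only an illustration: it uses the common textbook formulations (Hick's Law as T = a + b * log2(n + 1), Fitts's Law in its Shannon form as T = a + b * log2(D / W + 1)), and the coefficients `a` and `b` are hypothetical placeholders, not measured values from ChatGPT, Coursera, or any real interface.

```python
import math


def hick_decision_time(n_choices: int, a: float = 0.2, b: float = 0.15) -> float:
    """Hick's Law: decision time grows with the log of the number of choices.

    a and b are illustrative placeholder coefficients (seconds), not measurements.
    """
    if n_choices < 0:
        raise ValueError("n_choices must be non-negative")
    return a + b * math.log2(n_choices + 1)


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.1) -> float:
    """Fitts's Law (Shannon form): pointing time grows with distance / target width.

    a and b are illustrative placeholder coefficients (seconds), not measurements.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return a + b * math.log2(distance / width + 1)


# A short in-chat card with 3 options versus a long result list with 31 options.
few_options = hick_decision_time(3)
many_options = hick_decision_time(31)

# The same pointer travel (300 px) to a wide target versus a narrow one.
wide_target = fitts_movement_time(300, 100)
narrow_target = fitts_movement_time(300, 20)

print(f"Hick: 3 options {few_options:.2f}s, 31 options {many_options:.2f}s")
print(f"Fitts: wide target {wide_target:.2f}s, narrow target {narrow_target:.2f}s")
```

With any positive `b`, fewer options and larger or closer targets give smaller predicted times, which is the direction of the effect the article attributes to in-chat cards. The absolute numbers mean nothing without coefficients fitted to real user data.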
Learners no longer need to spend time filtering or comparing endless options in a long, scrolling list, which simplifies the selection process and decreases the reaction time required to commit to a course.

Image illustrating how users can search for and explore UX design courses on Coursera directly within ChatGPT using natural language prompts. Source: OpenAI, Introducing Apps in ChatGPT

Some in-house designers might wonder what the impact on their products will be. If users can complete tasks entirely inside ChatGPT, would they still visit the original app?

This concern is reasonable because the SDK might reduce users' page-level engagement within the native environment. Yet the reduced friction of opening the app can also widen the app's reach. By allowing users to call the app directly within ChatGPT, the SDK can raise visit frequency among existing members and open the door for non-members to try it for the first time.

The experience no longer depends on downloading, logging in, or navigating to a separate website. Each interaction inside ChatGPT becomes an entry point that can lead to higher engagement and new conversions, expanding the app's reach without adding extra steps.

Designing experiences that belong in a conversation

After understanding the broader impact of the Apps SDK, designers can start thinking about how to shape these experiences in practice.

Designing for the SDK means clearly defining who the users are, what their goals look like in context, and which parts of their workflow feel most natural to complete through conversation. The task must be specific enough for ChatGPT to assist, yet simple enough to avoid overwhelming the interaction.

OpenAI offers several guiding questions to help designers identify good use cases, each of which can also be viewed through the lens of Hick's Law, showing how the SDK minimizes the number of decisions users need to make. Ask yourself:

- Does your user's task fit naturally into a conversation? (for example, booking, ordering, scheduling, quick lookups)
- Is it time-bound or action-oriented? (short or medium duration tasks with a clear start and end)
- Is the information valuable in the moment? (users can act on it right away or get a concise preview before diving deeper)
- Can it be summarized visually and simply? (one card, a few key details, a clear CTA)
- Does it extend ChatGPT in a way that feels additive or differentiated?

Designers should also avoid designing interfaces that:

- Display long-form or static content better suited for a website or app.
- Require complex multi-step workflows that exceed the inline or fullscreen display modes.
- Use the space for ads, upsells, or irrelevant messaging.
- Surface sensitive or private information directly in a card where others might see it.
- Duplicate ChatGPT's system functions (for example, recreating the input composer).

From most of these principles, we can see that OpenAI encourages designers to avoid tasks that involve too many steps and to think about what information is valuable to show on the interface.

Take Figma, for example. A workflow like converting a short piece of text into a user flow represents a much stronger use case. It is easy for users to describe in natural language, and the information can immediately be summarized into visual feedback that users can act on right away.

Image demonstrating how users can generate a complete user-flow diagram in Figma through a simple natural-language request and sketches inside ChatGPT. Source: OpenAI, Introducing Apps in ChatGPT

In contrast, designing a complex design system with ChatGPT may not be a good use case. It requires both users and computers to access complicated information across multiple design pages, and users have to constantly make fragmented decisions and repeatedly step in to guide the AI, which interrupts the flow of interaction and increases the overall time to complete a task.

For designers, building for the Apps SDK starts with defining a clear, focused task. ChatGPT favors apps that serve a single, well-scoped purpose, something users can complete in one short interaction. Try to describe your user's key goal in plain language, outline its input and output, and make sure it fits naturally into a conversation.

Designing interfaces that belong in a conversation

OpenAI also provides a set of UI guidelines that can be examined through the lens of Hick's Law and Fitts's Law.

For example, designers should display only the most relevant information and present it in a simple visual form, such as a clean card with a short list of three key items.

The guidelines also suggest limiting each card to a maximum of two primary actions: one main call to action and one optional secondary choice. This approach helps users find what they need without navigating through layers of menus or dense tables, allowing them to act more quickly.

Image from OpenAI's Apps SDK Design Guidelines emphasizing that ChatGPT app interfaces should avoid complex navigation and redundant elements. Source: OpenAI, Apps SDK Design Guidelines

Primary actions are usually placed at the bottom of the card, effectively reducing the distance the thumb or pointer must travel.

Image from OpenAI's Apps SDK Design Guidelines illustrating how different UI components are organized consistently within ChatGPT's in-chat interface. Source: OpenAI, Apps SDK Design Guidelines

When a card contains richer media such as images, maps, or interactive diagrams, the expand option can open a fullscreen view. This action significantly increases the effective target width, allowing users to work within a larger, more precise area and improving control when interacting with detailed visual content.

Image from OpenAI's Apps SDK Design Guidelines demonstrating how full-screen views allow immersive map interactions directly inside ChatGPT. Source: OpenAI, Apps SDK Design Guidelines

If you're interested in the details of how to design user experiences and interfaces, please check out the Apps SDK Design Guidelines and the Figma component library.

The future of agents and UX designers' role

As Brad Lightcap mentioned in his Bloomberg interview, ChatGPT will begin to behave more like an operating system (or Jarvis, your personal agent).

It will not only execute commands but also integrate information across multiple apps, anticipate user needs, and determine when to act and when to ask for confirmation.

Similar directions are already being explored or developed in products like Gemini and Google's suite of products, signaling a shift toward more unified, context-aware environments.

For UX designers, this evolution expands the scope of design beyond screens and buttons. The focus moves toward designing flows, contexts, and systems that help users think clearly and act confidently. Designers must learn to define how an AI communicates, makes decisions, and aligns with human goals.

Yet no matter how advanced the system becomes, there will always be a need to clarify what people truly need and to bring that clarity to complexity through simple interactions.

References:

Bloomberg Originals. (2025, May 14). OpenAI COO Brad Lightcap talks what's next for OpenAI [Video]. Bloomberg Talks. https://www.youtube.com/watch?v=rNY2HVBIZ6I

Johnson, J. (2021). Designing with the mind in mind: Simple guide to understanding user interface design guidelines (3rd ed., Chapter 13: Our hand-eye coordination follows laws). Morgan Kaufmann.

OpenAI. (2024). App design guidelines: Design guidelines for developers building on the Apps SDK. https://developers.openai.com/apps-sdk/concepts/design-guidelines

OpenAI. (2024, September). Introducing apps in ChatGPT and the new Apps SDK: A new generation of apps you can chat with and the tools for developers to build them. https://openai.com/index/introducing-apps-in-chatgpt/

Soegaard, M. (2020, August 15). Hick's Law: Making the choice easier for users. Interaction Design Foundation. https://www.interaction-design.org/literature/article/hick-s-law-making-the-choice-easier-for-users

Designing the Jarvis moment was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.


Typography Basics

uxdesign.cc

A practical introduction to typography, from anatomy and spacing to legibility and alignment, for designers who want to create type that reads beautifully and feels intentional.

Typography isn't just about picking a pretty font. It's the craft of shaping written language into a visual experience: how words look, breathe, and interact on a page or screen. Good typography quietly guides the reader, while poor typography shouts for attention in all the wrong ways. Every detail, from letter spacing to line height, affects how users read, feel, and engage. Let's unpack the essentials.

Every letter has a structure, and understanding it helps you design with precision. Terms like ascender, descender, baseline, and x-height describe the invisible skeleton that keeps type coherent. The x-height, for instance, determines how big a typeface feels even at the same point size.

Serif and sans-serif typefaces differ in tone partly because of these structures: serifs guide the eye along lines of text, while sans-serifs often feel cleaner and more modern. Knowing anatomy allows you to mix typefaces consciously rather than by vibe alone.

Typographic Anatomy

Kerning, tracking, leading

These three terms define the rhythm and flow of text. Kerning adjusts the space between individual letters, tracking controls spacing across whole words or paragraphs, and leading (pronounced "ledding") sets the space between lines.

Think of them as the breathing room of type. Too tight, and words feel suffocating; too loose, and they drift apart like strangers at a party. Consistent, intentional spacing is one of the clearest markers of professional typography, and one of the easiest to overlook.

Type alignment

Left, right, center, or justified: each alignment changes how the reader experiences text. Left-aligned text feels natural for most Western readers; it mirrors how our eyes expect to move. Centered text adds elegance in small doses, like headings or invitations, but strains readability in long paragraphs.

Justified text can look neat but often introduces awkward gaps between words. There's no one right choice, only what fits the tone and purpose of the content.

Indents, outdents, and hanging punctuation

Indenting the first line of a paragraph subtly signals a new thought, while outdents (negative indents) can highlight lists or quotes. Hanging punctuation, where quotation marks or bullets sit outside the text block, preserves clean visual alignment.

These are the small design manners that readers might never notice but would miss if they were absent. They lend rhythm and grace to long-form content.

Legibility and readability

They sound similar but mean different things. Legibility is about how clearly letters can be distinguished, a function of font design, size, and contrast. Readability is about how easy it feels to read longer passages, which is affected by line length, spacing, and even the surrounding design.

A beautifully legible font can still be unreadable in practice if the text is too dense or too wide. The goal isn't just to be seen but to be comfortably followed.

Specialized uses

Typography takes on unique forms in different contexts: interfaces, signage, packaging, books, motion graphics. In UI design, type must perform at small sizes and across varying screens. In print, it must hold character at high resolutions and over long reading durations.

Display type can afford drama; body text should feel invisible. Understanding how context shapes your typographic decisions is what separates art from noise.

Typography isn't decoration; it's communication shaped with care. Once you see how subtle its impact is, you start noticing type everywhere: on screens, in streets, on receipts. Every font choice tells a story. The more fluently you speak the language of type, the more precisely you can design experiences that speak back.

Typography has a strange power: it's both invisible and unforgettable. The best type never demands your attention, yet it defines how every word feels. A letter's curve can suggest warmth or precision, while spacing can create calm or urgency. This is why typography sits at the heart of design, connecting language with emotion.

So when we adjust a line height or choose between Helvetica and Garamond, we're not just picking styles; we're shaping how people interpret meaning. Typography is the quiet storyteller behind every interface, poster, and page. Mastering it means learning to speak softly but leave a lasting impression.

Further reading & viewing

- The Elements of Typographic Style by Robert Bringhurst. Widely regarded as a typography bible, it covers type anatomy, spacing, history, meaning and practice.
- Just My Type: A Book About Fonts by Simon Garfield. A lively, readable exploration of how fonts shape culture, emotion and everyday design choices.
- The Anatomy of Type: A Graphic Guide to 100 Typefaces by Stephen Coles. Highly visual and practical, a great reference for seeing how anatomy, spacing and alignment vary across real typefaces.
- Helvetica (2007, dir. Gary Hustwit). A design-documentary classic that shows how a single typeface (Helvetica) touches legibility, meaning, alignment and cultural history.
- Typeface (2009, dir. Justine Nagan). Looks at wood-type printing and the material roots of typography, helpful to ground the anatomy and spacing discussions in tangible form.
- Graphic design for filmmaking, prop design workshop by Annie Atkins. Learn how to start designing a collection of graphic props that can tell a director's story, as well as contribute to the genre, period, and visual aesthetic of a film.

Visuals by @oscarsun, Figma Community

Thanks for reading.

Typography Basics was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.


The day the internet crashed: What the AWS outage teaches us about dependencies
uxdesign.cc
A single fault in the cloud revealed just how connected and dependent we've all become, and why good UX must plan for failure.
Continue reading on UX Collective


How design radically transformed a dying industry
uxdesign.cc
All it takes is one creative spark to ignite a fire.

Source: DSRUPTR.com

I recently wrote about the 5 powerful business insights that prove the value of design.

While it's incredible to see the business results on the value of design from McKinsey, Harvard, Forbes, and Adobe, I really appreciate the everyday examples that help illustrate the value of design.

And the creative spark it takes to move a mountain.

The Story of Jacek Utko and His Creative Spark

Jacek Utko is a former architect who got a job as a newspaper art director. He thought newspapers were boring and bland, and that was reflected in the low circulation: nobody was reading them!

Source: Pew Research Center

He was really frustrated. His parents and friends thought he was crazy to waste his time working at a newspaper.

And the general public thought newspapers would soon become extinct because most people were reading news online. Not to mention that most newspapers were outdated by the time they were printed, and environmentalists thought newspapers wasted valuable resources.

Source: Pew Research Center

But what should he do? He knew that newspapers weren't appealing to consumers; he just wasn't sure how to make them better. Or if they could even be saved.

Stepping away from work, he took a trip up to London to attend a Cirque du Soleil performance with some friends.

https://medium.com/media/159d9a937486f25d82878ed2a1bfca82/href

He was blown away. He told his friends that Cirque du Soleil had totally transformed a creepy, run-down form of entertainment into performance art!

This one performance was the creative spark that would ignite a fire in Jacek.

He knew right away that he should use design to transform his newspaper from boring text into performance art.

Just like a Cirque du Soleil performance.

Much like when Einstein, as a child, received a magnetic compass as a gift, sparking his lifelong fascination with the invisible forces of nature.

Source: created with Midjourney

Or Steve Jobs being inspired during a calligraphy course at Reed College, where he was captivated by the elegance and beauty of typography. This sparked his passion for design. He embraced design to create beautiful form factors and interfaces in Apple's products, which became a unique differentiator from bland PCs.

Source: DSRUPTR.com

Jacek quickly returned home to Poland to focus his energy on selling leadership on his vision: radically redesigning the newspaper into visually engaging, artistic pieces.

The Revolution of Newspaper Design

Jacek worked closely with newspaper executives to figure out the business goals of their papers, and then radically reformatted the product to fit those goals.

Part of the transformation was Jacek's strategic rethinking of newspaper design, moving away from individual page layouts to seeing the whole newspaper as a single composition.

As the art director at Warsaw's Puls Biznesu in 2004, he redesigned this small business-focused newspaper and immediately won the SND award for the world's best-designed newspaper. Readers responded, and circulation went up.

Source: Puls Biznesu

It's clear from these covers that Jacek completely elevated an old newspaper from static information into dynamic content.

Source: Puls Biznesu

The infographics that Jacek and his team designed are not just interesting bits of data, but compelling stories that take their readers on a journey.

Source: Puls Biznesu

Jacek and his team got people to notice newspapers again. And quite frankly, the designs he created made newspapers hard to ignore.

Source: Wyborcza

People rushed to pick up these redesigned newspapers, often after seeing others holding them and becoming curious about the artistry and creativity on the pages.

Source: DM.focus

Jacek sat back in his chair with a smile. He and his team had successfully transformed a newspaper into performance art. What seemed like an impossible dream was now reality.

The Business Results

Utko's extraordinary design work demonstrates that strategic, user-focused design significantly enhances business performance and reader engagement.

The number of awards and the revenue generated by Jacek and his team are inspiring:

- Increased Circulation by Up to 100%: Utko's redesigns for newspapers in Eastern Europe, including titles in Poland, Russia, Bulgaria, Lithuania, and Latvia, consistently boosted circulation, with some publications seeing increases of up to 100% due to enhanced visual appeal and reader engagement.
- 35% Circulation Growth in Poland: A Polish newspaper Utko redesigned saw a 35% circulation increase over three years, reversing nearly a decade of stagnation, by aligning design with business goals and reader preferences.
- Boosted Ad Revenue: His redesigns, such as for Bonnier's business publications, led to increased advertising revenue, often by as much as 100%, by creating visually compelling layouts that attracted advertisers.
- Award-Winning Designs: Utko's redesigns won the Society for News Design (SND) World's Best-Designed Newspaper award in 2004 for Warsaw's Puls Biznesu and in 2007 for Estonia's Äripäev, enhancing brand prestige and marketability.
- European Newspaper of the Year Awards: Three newspapers he redesigned were recognized as European Newspaper of the Year in 2015, 2016, and 2019, driving higher readership and industry recognition.
- Global Impact Across 35 Countries: Utko's redesigns for dozens of newspapers and magazines in Europe, Asia, Australia, and Africa (e.g., Singapore, South Africa) consistently increased readership and revenue through strategic design.

Conclusion

Beyond the extraordinary business results from McKinsey, Harvard, Forbes, and Adobe, the world can easily see in this simple example that design has transformative power as a spark for innovation and a driver of business value.

Jacek not only proved that good design can help readers reconnect with newspapers, but he also proved that good design can bring a dying industry back from the edge of extinction.

https://medium.com/media/8229a39f9e4605e7d4bc15e0cd1867aa/href

And he accomplished this by going beyond aesthetics: improving the overall product and aligning content and design with a clear strategy. He collaborated with business leaders every step of the way.

Jacek is now an art director for the Bonnier Business Press, overseeing papers in Eastern Europe and the Baltic states, and the work he oversees consistently wins major prizes (including another SND world's-best in 2007 for Estonia's Äripäev), despite small teams and limited resources.

Source: DSRUPTR.com

The story shows that designers have the power to change not only a product but also the entire workflow and culture of a company.

To all designers and innovators: this is our creative spark! We need to embrace this story and seize the moment to use design to solve the biggest challenges in our organizations. We all need to be like Jacek.

If Jacek can revolutionize the newspaper industry with design, then you can use design to revolutionize any industry.

How design radically transformed a dying industry was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.


Building a product that doesn't exist
uxdesign.cc
Designers, product, and engineers are all guided by the Product Ideal. The problem is, they're all seeing a different one.
Continue reading on UX Collective


Why does Tesla have a bad reputation?
uxdesign.cc
Analysis of how Elon Musk's politics, manufacturing failures, and service disasters destroyed Tesla's market dominance.
Continue reading on UX Collective


Building trust in opaque systems
uxdesign.cc
Why the better AI gets at conversation, the worse we get at questioning it

Illustration by author

How do we know when to trust what someone tells us? In-person conversations give us many subtle cues we might pick up on, but when the conversation is with an AI system designed to sound perfectly human, we lose any frame of reference we may have.

With every new model, conversational AI sounds more and more genuinely intelligent and human-like, so much so that every day, millions of people chat with these systems as if talking to their most knowledgeable friend.

From a design perspective, they're very successful in the way they feel natural, authoritative, and even empathetic, but this very naturalness becomes problematic because it makes it hard to distinguish when outputs are true and when they are merely plausible.

This creates exactly the setup for misplaced trust: trust works best when paired with critical thinking, but the more we rely on these systems, the worse we get at it, ending up in an odd feedback loop that's surprisingly difficult to escape.

The illusion of understanding

Traditional software is straightforward: click this button, get that result. AI systems are something else entirely: they're unpredictable, because they can make new decisions based on their training data. If we ask the same question twice, we might get completely different wording, reasoning, or even different conclusions each time.

How this thing thinks and speaks in such human ways feels like magic to many users. Without understanding what's happening under the hood, it's easy to miss that those magical sentences are simply the most statistically probable chain of words, making these systems something closer to a glorified Magic 8 Ball.

Back in 2022, when ChatGPT opened to the public, I was admittedly mesmerised by it too, and after it proved useful in a couple of real-world situations, I started reaching for it more and more, even for simple questions and tasks.

Until one day I was struggling with a presentation segment that felt flat compared to the rest and asked Claude for ideas on how to make it more compelling. We came up with a story I could reference, one I was already familiar with, but there was one detail that felt oddly specific, so I asked for the source.

Part of the conversation with Claude (screenshot by author)

You can imagine my surprise when Claude casually mentioned it had essentially fabricated that detail for emphasis.

That I could have so easily accepted made-up information genuinely unsettled me, and it became the catalyst for me to really try to understand what I was playing with. What I didn't know at the time was that this behaviour represents exactly what these systems are designed to do: generate responses that sound right, regardless of whether they're actually true.

Human-like, but not human

The core problem when it comes to building trust in AI is that the end goal of these systems (utility) works directly against the transparency needed to establish genuine trust.

To maximise usefulness, AI needs to feel seamless and natural. Nobody wants to talk to a robot; its assistance should be almost invisible. We wouldn't consciously worry about the physics of speech during conversation, so why should we think about AI mechanics? We ask a question, we get an answer.

But healthy scepticism requires transparency, which inevitably introduces friction. We should pause, question, verify, and think critically about the information we receive. We should treat these systems as the sophisticated tools they are rather than all-knowing beings.

The biggest players seem to be solving for trust by leaning into illusion rather than transparency.

Claude "thinking" indicator (screenshot by author, Sept 2025)

One key technique is anthropomorphising the interface through language choices. Take the many "thinking" indicators that appear while the system is actually just preparing a response: they are a deliberate attempt at building trust. This works brilliantly because these human-like touches make users feel connected and understood.

However, giving AI qualities like these thinking indicators, a conversational tone, personality, and empathy creates two subtle yet critical problems:

#1
Giving AI human-like qualities makes us lose the uncertainty signals that would normally help us detect when something is off. Humans naturally signal the limits of what they know through hesitation, qualifying statements (like "I think" or "maybe"), or simply by admitting uncertainty. These are very helpful signals that let us know when to be more careful about trusting what someone is saying.

AI systems, however, rarely do this: they can sound equally confident whether they're giving you the population of Tokyo (which they probably know) or making up a detail about a case study (which they definitely don't know). That's why detecting a mistake or a lie in these cases can be extremely hard.

#2
On top of this, users who feel a deeper connection to the AI are more likely to assume it will perform better. So we end up trusting it based on how it feels rather than how well it actually works.

The industry calls this trust calibration: finding the right level of trust so that users rely on AI systems appropriately, or in other words, in just the right amount based on what those systems can actually do. This is no easy feat in general, but because AI often sounds confident while being opaque and inconsistent, getting this balance right is extremely challenging.

So how are companies currently attempting to solve this calibration problem?

The limits of current solutions

As a solution, there's a lot of talk around explainability. This refers to turning AI systems' hidden logic into something humans can make sense of, helping users decide when to trust the output (and more importantly, when not to do so).

Yet this information only appears spontaneously in scenarios like medical or financial advice, or when training data is limited. In more routine interactions, such as brainstorming or seeking advice, users would need to actively prompt the AI to reveal its reasoning (as I had to do with Claude).

Imagine constantly interrupting a conversation to ask someone where they heard something. The chat format creates an illusion of natural conversation that ends up discouraging the very critical thinking that explainability is meant to enable.

Recognising these challenges, companies implement various other guardrails: refusal behaviours for harmful tasks, contextual warnings for sensitive topics, or outright restriction of certain capabilities. These aim to prevent automation bias: our tendency to over-rely on automated systems.

These guardrails, though, have significant limitations. Not only are there known workarounds, but they also fail to account for how these tools are actually used by millions of people with vastly different backgrounds and technical literacy.

The contradiction becomes obvious when you notice where warnings actually appear. ChatGPT's disclaimer that it "can make mistakes. Check important info." sits right below the input field, yet I wonder how many people actually see it, and of those who do, how many take that advice. After all that effort to anthropomorphise the interface and create connection, a small grey disclaimer hardly feels like genuine transparency.

Although tiny, Claude's disclaimer appears more contextually within the last reply provided (screenshot by author, Sept 2025)

Companies invest heavily in making AI feel more human and trustworthy through conversational interfaces, while simultaneously expecting users to maintain critical distance through small warnings and occasional guardrails. The result is that these become another form of false reassurance, allowing companies to claim plausible deniability while essentially paying lip service to transparency and trust.

Scaffolding over crutches

This reveals a fundamental flaw in the current approach: companies are asking users to bear the weight of responsible use while providing tools designed to discourage the very scepticism that responsible use requires. This not only contradicts established UX principles about designing for your users' actual capabilities and contexts, but also ignores how trust is actually formed.

In fact, trust isn't built through one single intervention, but rather systematically across many touchpoints. So how might we approach this problem differently?

Photo by Ricardo Gomez Angel on Unsplash

A first step, I believe, would be ditching the seamless approach and rethinking friction. What if, instead of treating transparency as friction to reduce, design treated it as a capability to build upon? Instead of hiding complexity to fast-track utility, interfaces could gradually build users' ability to work effectively with AI systems, eventually teaching them not only how to use them responsibly, but also when to trust them.

As a parallel, think scaffolding versus crutches. Current AI systems function more like crutches: they provide so much support that users become dependent on them. Users lean on AI for answers without developing the skills to evaluate them, and much like actual crutches, this helps in the moment but prevents underlying capabilities (critical thinking, in this case) from getting stronger over time.

Designing transparency as scaffolding

In a scaffolding model, by contrast, AI systems could be much more flexible and adaptable, surfacing transparency and guidance based on the user's developing skills and the stakes of the decision.

For example, we could imagine having different modes. A learning mode could surface uncertainty more explicitly within responses, with alerts prompting users to verify claims the AI cannot back up directly, or inviting users to take answers with a grain of salt. This could happen in expandable sections so as not to intrude on the conversation flow, and as users interact with these components, the interface could gradually reduce explicit prompts while maintaining the underlying safeguards.

Quick and dirty explorations of a learning mode (by author)

For high-stakes decisions, the interface could default to maximum transparency, for example by requiring users to verify factual claims with external sources before accessing final outputs. Visual indicators could distinguish between trained knowledge, recent search results, and generated examples, helping users understand where information comes from.

This approach would treat AI as temporary support that builds user capabilities rather than replacing them. Instead of optimising for immediate task completion, scaffolding design would foster long-term competence by helping users develop verification habits and critical thinking skills.

Google's Gemini offers inline tips while images are being generated and then persists them on screen. This type of content is clearly distinguishable from the rest of the conversation and provides useful, contextual information based on the task the user is performing (screenshot by author, Sept 2025)

A trade-off worth making

Much of this goes against conventional product design principles around maximising ease of use. Adding these steps and indicators might seem like deliberate obstacles to user engagement because they are, but that's the point.

The friction introduced in this case would serve a different purpose than arbitrary barriers: it's protective and educational rather than obstructive. If designed mindfully, friction can help users treat AI tools as scaffolding rather than crutches, by developing the judgment skills needed to work safely with these systems.

That conversation with Claude taught me something crucial about the gap between how these systems are presented and what they actually are. We face a choice: take immediate utility at the cost of our critical thinking, or accept some friction as the price of building people up and maintaining our ability to think independently. The path forward isn't avoiding AI, but demanding better design that teaches us to use these tools wisely rather than depending on them entirely.

Footnotes

I'm aware that my example here is a pretty silly one compared to the amount of misinformation, bad advice, and factually incorrect tidbits people are potentially exposed to every day through these interactions. But "aha" moments work in mysterious ways.

Suggested reads

- Co-constructing intent with AI agents by Teno Liu
- The Psychology Of Trust In A World Where Products Keep Breaking Promises by Mehekk Bassi
- Designing for control in AI UX by Rob Chappell

Building trust in opaque systems was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.

Building trust in opaque systemsuxdesign.ccWhy the better AI gets at conversation, the worse we get at questioning itillustration byauthorHow do we know when to trust what someone tells us? In person conversations give us many subtle cues we might pick up on, but when they happen with AI system designed to sound perfectly human, we lose any sort of frame of reference we mayhave.With every new model, conversational AI sounds more and more genuinely intelligent and human-like, so much so that every day, millions of people chat with these systems as if talking to their most knowledgeable friend.From a design perspective, theyre very successful in the way they feel natural, authoritative and even empathetic, but this very naturalness becomes problematic as it makes it hard to distinguish when outputs are true or simply just plausible.This creates exactly the setup for misplaced trust: trust works best when paired with critical thinking, but the more we rely on these systems, the worse we get at it, ending up in this odd feedback loop thats surprisingly difficult toescape.The illusion of understandingTraditional software is straightforwardclick this button, get that result. AI systems are something else entirely because theyre unpredictable as they can make new decisions based on their training data. If we ask the same question twice we might get completely different wording, reasoning, or even different conclusions eachtime.How this thing thinks and speaks in such human ways, feels like magic to many users. 
Without understanding whats happening under the hood, its easy to miss that those magical sentences are simply the most statistically probable chain of words, making these systems something closer to a glorified Magic 8Ball.Back in 2022 when ChatGPT opened to public, I was also admittedly mesmerised by it, and after it proved useful in a couple of real-world situations, I started reaching for it more and more, even for simple questions andtasks.Until one day I was struggling with a presentation segment that felt flat compared to the rest and asked Claude for ideas on how to make it more compelling. We came up with a story I could reference, one I was already familiar with, but there was this one detail that felt oddly specific, so I asked for thesource.Part of the conversation with Claude (screenshot byauthor)You can imagine my surprise when Claude casually mentioned it had essentially fabricated that detail for emphasis.How I could have so easily accepted that made-up information genuinely unsettled me and became the catalyst for me to really try and understand what I was playing with. What I didnt know at the time was that this behaviour represents exactly what these systems are designed to do: generate responses that sound right, regardless if theyre actually true ornot.Human-like, but nothumanThe core problem when it comes to building trust in AI is that the end goal of these systems (utility) works directly against the transparency needed to establish genuinetrust.To maximise usefulness, AI needs to feel seamless and naturalnobody wants to talk to a robot, its assistance should be almost invisible. We wouldnt consciously worry about the physics of speech during conversation, so why should we think about AI mechanics? We ask a question, we get ananswer.But healthy scepticism requires transparency, which inevitably introduces friction. We should pause, question, verify, and think critically about the information we receive. 
We should treat these systems as the sophisticated tools they are rather than all-knowing beings.The biggest players seem to be solving for trust by leaning into illusion rather than transparency.Claude thinking indicator (screenshot by author, Sept2025)One key technique is anthropomorphising the interface through language choices. For example, the many thinking indicators that appear while actually just preparing a response, its a deliberate attempt at building trust. This works brilliantly because these human-like touches make users feel connected and understood.However, giving AI qualities like these thinking indicators, conversational tone, personality, and empathy creates two subtle yet critical problems:#1Giving AI human-like qualities, makes us lose the uncertainty signals that would normally help us detect when something is off. Humans naturally show knowing what they dont know through hesitation, qualifying statements (like I think maybe), or simply by admitting uncertainty. These are very helpful signals that let us know when to be more careful about trusting what someone issaying.AI systems however, rarely do thisthey can sound equally confident whether theyre giving you the population of Tokyo (which they probably know) or making up a detail about a case study (which they definitely dont know). Thats why detecting a mistake or a lie in these cases can be extremely hard.#2On top of this, users are more likely to assume the AI will perform better while feeling a deeper connection to it. So we end up trusting it based on how it feels rather than how well it actuallyworks.The industry calls this trust calibration, which is about finding the right level of trust so that users rely on AI systems appropriately, or in other words, in just the right amount based on what those systems can actually do. 
This is no easy feat in general, but because AI often sounds confident while being opaque and inconsistent, getting this balance right is extremely challenging.So how are companies currently attempting to solve this calibration problem?The limits of current solutionsAs a solution, theres a lot of talk around explainability. This refers to turning AI systems hidden logic into something humans can make sense of, helping users decide when to trust the output (and more importantly, when not to doso).Yet, this information only appears spontaneously in scenarios like medical or financial advice, or when training data is limited. In more routine interactionsbrainstorming, seeking adviceusers would need to actively prompt the AI to reveal the reasoning (as I had to do withClaude).Imagine constantly interrupting a conversation to ask someone where they heard something. The chat format creates an illusion of natural conversation that ends up discouraging the very critical thinking that explainability is meant toenable.Recognising these challenges, companies implement various other guardrails: refusal behaviours for harmful tasks, contextual warnings for sensitive topics, or straight up restriction of certain capabilities. These aim to prevent automation bias: our tendency to over-rely on automated systems.These guardrails, tho, have significant limitations. Not only are there known workarounds, but they fail to account for how these tools are actually used by millions of people with vastly different backgrounds and technical literacy.The contradiction becomes obvious when you notice where warnings actually appear. ChatGPTs disclaimer that it can make mistakes. Check important info sits right below the input field, yet I wonder how many people actually see it, and of those who do, how many take that advice. 
After all that effort to anthropomorphise the interface and create connection, a small grey disclaimer hardly feels like genuine transparency.

Although tiny, Claude's disclaimer appears more contextually within the last reply provided (screenshot by author, Sept 2025)

Companies invest heavily in making AI feel more human and trustworthy through conversational interfaces, while simultaneously expecting users to maintain critical distance through small warnings and occasional guardrails. The result is that these become another form of false reassurance, allowing companies to claim plausible deniability while essentially paying lip service to transparency and trust.

Scaffolding over crutches

This reveals a fundamental flaw in the current approach: companies are asking users to bear the weight of responsible use while providing tools designed to discourage the very scepticism they require. This not only contradicts established UX principles about designing for your users' actual capabilities and contexts, but also ignores how trust is actually formed.

In fact, trust isn't built through one single intervention, but rather systematically across many touchpoints. So how might we approach this problem differently?

Photo by Ricardo Gomez Angel on Unsplash

A first step, I believe, would be ditching the seamless approach and rethinking friction. What if, instead of treating transparency as friction to reduce, design treated it as a capability to build upon? Instead of hiding complexity to fast-track utility, interfaces could gradually build users' ability to work effectively with AI systems, eventually teaching them not only how to use them responsibly, but when to trust them as well.

As a parallel, think scaffolding versus crutches. Current AI systems function more like crutches: they provide so much support that users become dependent on them.
Users lean on AI for answers without developing the skills to evaluate them, and much like actual crutches, this helps in the moment but prevents underlying capabilities (critical thinking, in this case) from getting stronger over time.

Designing transparency as scaffolding

In a scaffolding model, by contrast, AI systems could be much more flexible and adaptable, surfacing transparency and guidance based on the user's developing skills and the stakes of the decision.

For example, we could imagine having different modes. A learning mode could surface uncertainty more explicitly within responses: alerts prompting users to verify claims the AI cannot back up directly, or inviting users to take answers with a grain of salt. This could happen in expandable sections so as not to intrude on the conversation flow, and as users interact with these components, the interface could gradually reduce explicit prompts while maintaining the underlying safeguards.

Quick and dirty explorations of a learning mode (by author)

For high-stakes decisions, the interface could default to maximum transparency, for example by requiring users to verify factual claims with external sources before accessing final outputs. Visual indicators could distinguish between trained knowledge, recent search results, and generated examples, helping users understand where information comes from.

This approach would treat AI as temporary support that builds user capabilities rather than replacing them. Instead of optimising for immediate task completion, scaffolding design would foster long-term competence by helping users develop verification habits and critical thinking skills.

Google's Gemini offers inline tips while images are being generated and then persists them on screen.
This type of content is clearly distinguishable from the rest of the conversation and provides useful, contextual information based on the task the user is performing (screenshot by author, Sept 2025)

A trade-off worth making

Much of this goes against conventional product design principles around maximising ease of use. Adding these steps and indicators might seem like deliberate obstacles to user engagement because they are, but that's the point.

The friction introduced in this case would serve a different purpose than arbitrary barriers: it's protective and educational rather than obstructive. If designed mindfully, friction can help users treat AI tools as scaffolding rather than crutches, by developing the judgment skills needed to work safely with these systems.

That conversation with Claude taught me something crucial about the gap between how these systems are presented and what they actually are. We face a choice: take immediate utility at the cost of our critical thinking, or accept some friction as the price of building people up and maintaining our ability to think independently. The path forward isn't avoiding AI, but demanding better design that teaches us to use these tools wisely rather than depending on them entirely.

Footnotes

I'm aware that my example here is a pretty silly one compared to the amount of misinformation, bad advice and just factually incorrect tidbits people are potentially exposed to every day through these interactions. But aha moments work in mysterious ways.

Suggested reads

- Co-constructing intent with AI agents by Teno Liu
- The Psychology Of Trust In A World Where Products Keep Breaking Promises by Mehekk Bassi
- Designing for control in AI UX by Rob Chappell

Building trust in opaque systems was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.