BLOGS.NVIDIA.COM

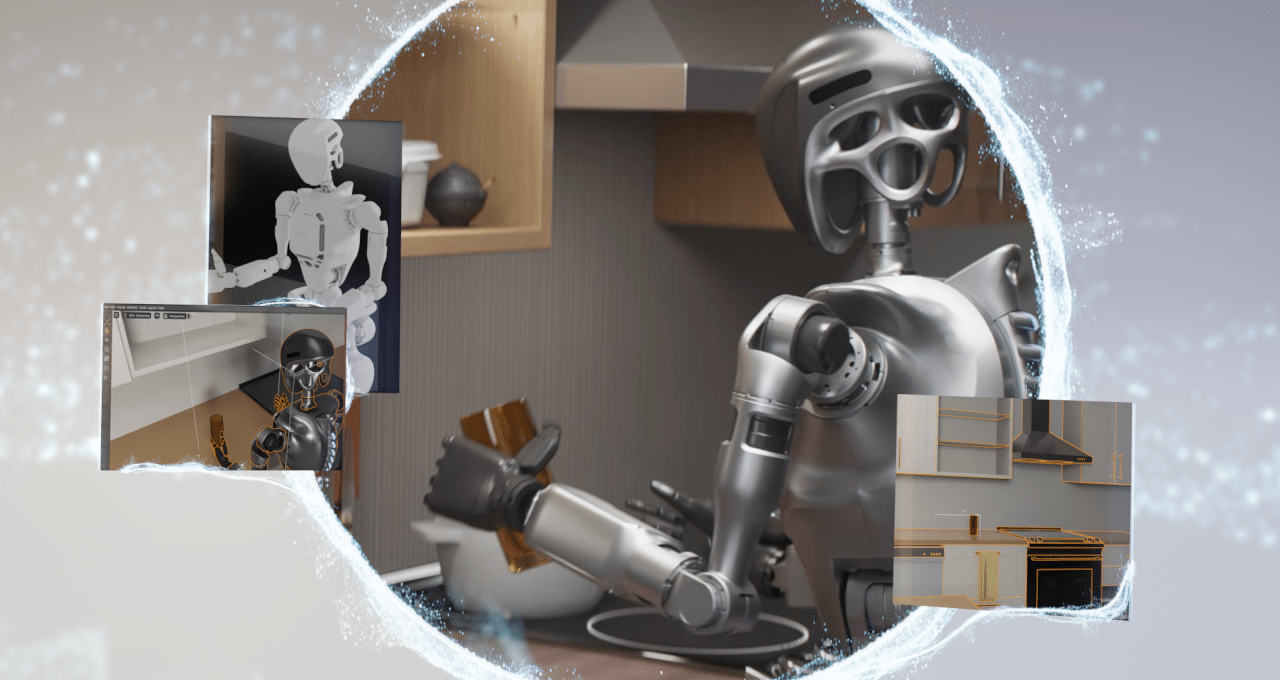

Into the Omniverse: How OpenUSD-Based Simulation and Synthetic Data Generation Advance Robot Learning

Editor's note: This post is part of Into the Omniverse, a series focused on how developers, 3D practitioners, and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

Scalable simulation technologies are driving the future of autonomous robotics by reducing development time and costs.

Universal Scene Description (OpenUSD) provides a scalable and interoperable data framework for developing virtual worlds where robots can learn how to be robots. With SimReady OpenUSD-based simulations, developers can create limitless scenarios based on the physical world.

NVIDIA Isaac Sim is advancing perception AI-based robotics simulation. Isaac Sim is a reference application built on the NVIDIA Omniverse platform for developers to simulate and test AI-driven robots in physically based virtual environments.

At AWS re:Invent, NVIDIA announced that Isaac Sim is now available on Amazon EC2 G6e instances powered by NVIDIA L40S GPUs. These powerful instances enhance the performance and accessibility of Isaac Sim, making high-quality robotics simulations more scalable and efficient.

These advancements in Isaac Sim mark a significant leap for robotics development. By enabling realistic testing and AI model training in virtual environments, companies can reduce time to deployment and improve robot performance across a variety of use cases.

Advancing Robotics Simulation With Synthetic Data Generation

Robotics companies like Cobot, Field AI and Vention are using Isaac Sim to simulate and validate robot performance, while others, such as SoftServe and Tata Consultancy Services, use synthetic data to bootstrap AI models for diverse robotics applications.

The evolution of robot learning has been deeply intertwined with simulation technology. Early experiments in robotics relied heavily on labor-intensive, resource-heavy trials.
Simulation is a crucial tool for creating physically accurate environments where robots can learn through trial and error, refine algorithms and even train AI models using synthetic data.

Physical AI describes AI models that can understand and interact with the physical world. It embodies the next wave of autonomous machines and robots, such as self-driving cars, industrial manipulators, mobile robots, humanoids and even robot-run infrastructure like factories and warehouses.

Robotics simulation, which forms the second computer in the three-computer solution, is a cornerstone of physical AI development that lets engineers and researchers design, test and refine systems in a controlled virtual environment.

A simulation-first approach significantly reduces the cost and time associated with physical prototyping while enhancing safety by allowing robots to be tested in scenarios that might otherwise be impractical or hazardous in real life.

With a new reference workflow, developers can accelerate the generation of synthetic 3D datasets with generative AI using OpenUSD NIM microservices. This integration streamlines the pipeline from scene creation to data augmentation, enabling faster and more accurate training of perception AI models.

Synthetic data can help address the challenge of limited, restricted or unavailable data needed to train various types of AI models, especially in computer vision. Developing action recognition models is a common use case that can benefit from synthetic data generation.

To learn how to create a human action recognition video dataset with Isaac Sim, check out the technical blog on Scaling Action Recognition Models With Synthetic Data. 3D simulations offer developers precise control over image generation, eliminating hallucinations.

Robotic Simulation for Humanoids

Humanoid robots are the next wave of embodied AI, but they present a challenge at the intersection of mechatronics, control theory and AI.
Simulation is crucial to solving this challenge by providing a safe, cost-effective and versatile platform for training and testing humanoids.

With NVIDIA Isaac Lab, an open-source unified framework for robot learning built on top of Isaac Sim, developers can train humanoid robot policies at scale via simulations. Leading commercial robot makers are adopting Isaac Lab to handle increasingly complex movements and interactions.

NVIDIA Project GR00T, an active research initiative to enable the humanoid robot ecosystem of builders, is pioneering workflows such as GR00T-Gen to generate robot tasks and simulation-ready environments in OpenUSD. These can be used for training generalist robots to perform manipulation, locomotion and navigation.

Recently published research from Project GR00T also shows how advanced simulation can be used to train interactive humanoids. Using Isaac Sim, the researchers developed a single unified controller for physically simulated humanoids called MaskedMimic. The system is capable of generating a wide range of motions across diverse terrains from intuitive user-defined intents.

Physics-Based Digital Twins Simplify AI Training

Partners across industries are using Isaac Sim, Isaac Lab, Omniverse, and OpenUSD to design, simulate and deploy smarter, more capable autonomous machines:

- Agility uses Isaac Lab to create simulations that let simulated robot behaviors transfer directly to the robot, making it more intelligent, agile and robust when deployed in the real world.
- Cobot uses Isaac Sim with its AI-powered cobot, Proxie, to optimize logistics in warehouses, hospitals, manufacturing sites and more.
- Cohesive Robotics has integrated Isaac Sim into its software framework called Argus OS for developing and deploying robotic workcells used in high-mix manufacturing environments.
- Field AI, a builder of robot foundation models, uses Isaac Sim and Isaac Lab to evaluate the performance of its models in complex, unstructured environments across industries such as construction, manufacturing, oil and gas, mining, and more.
- Fourier uses NVIDIA Isaac Gym and Isaac Lab to train its GR-2 humanoid robot, using reinforcement learning and advanced simulations to accelerate development, enhance adaptability and improve real-world performance.
- Foxglove integrates Isaac Sim and Omniverse to enable efficient robot testing, training and sensor data analysis in realistic 3D environments.
- Galbot used Isaac Sim to verify the data generation of DexGraspNet, a large-scale dataset of 1.32 million ShadowHand grasps, advancing robotic hand functionality by enabling scalable validation of diverse object interactions across 5,355 objects and 133 categories.
- Standard Bots is simulating and validating the performance of its R01 robot used in manufacturing and machining setups.
- Wandelbots integrates its NOVA platform with Isaac Sim to create physics-based digital twins and intuitive training environments, simplifying robot interaction and enabling seamless testing, validation and deployment of robotic systems in real-world scenarios.

Learn more about how Wandelbots is advancing robot learning with NVIDIA technology in this livestream recording.

Get Plugged Into the World of OpenUSD

NVIDIA experts and Omniverse Ambassadors are hosting livestream office hours and study groups to provide robotics developers with technical guidance and troubleshooting support for Isaac Sim and Isaac Lab. Learn how to get started simulating robots in Isaac Sim with this new, free course on NVIDIA Deep Learning Institute (DLI).

For more on optimizing OpenUSD workflows, explore the new self-paced Learn OpenUSD training curriculum that includes free DLI courses for 3D practitioners and developers. For more resources on OpenUSD, explore the Alliance for OpenUSD forum and the AOUSD website.

Don't miss the CES keynote delivered by NVIDIA founder and CEO Jensen Huang live in Las Vegas on Monday, Jan. 6, at 6:30 p.m. PT for more on the future of AI and graphics.

Stay up to date by subscribing to NVIDIA news, joining the community, and following NVIDIA Omniverse on Instagram, LinkedIn, Medium and X.

Featured image courtesy of Fourier.
