How GeForce RTX 50 Series GPUs Are Built to Supercharge Generative AI on PCs

blogs.nvidia.com

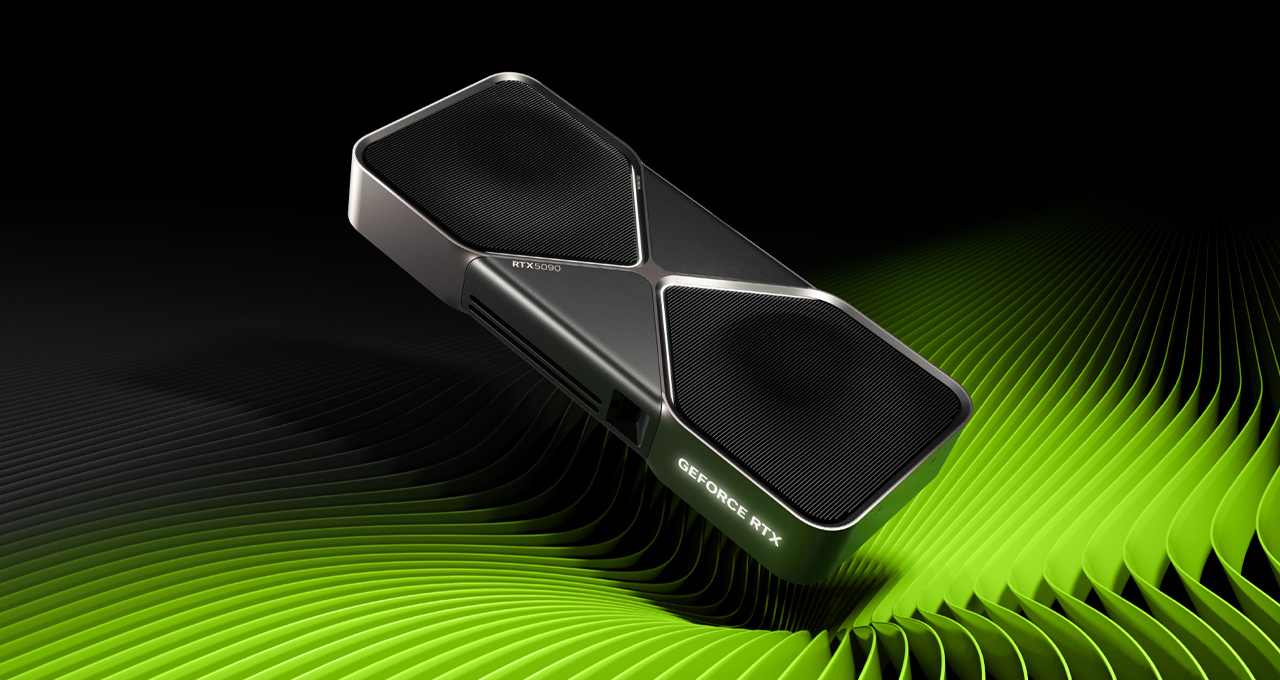

NVIDIA's GeForce RTX 5090 and 5080 GPUs, which are based on the groundbreaking NVIDIA Blackwell architecture, offer up to 8x faster frame rates with NVIDIA DLSS 4 technology, lower latency with NVIDIA Reflex 2 and enhanced graphical fidelity with NVIDIA RTX neural shaders.

These GPUs were built to accelerate the latest generative AI workloads, delivering up to 3,352 trillion AI operations per second (TOPS) and enabling incredible experiences for AI enthusiasts, gamers, creators and developers.

To help AI developers and enthusiasts harness these capabilities, NVIDIA unveiled NVIDIA NIM and AI Blueprints for RTX at the CES trade show last month. NVIDIA NIM microservices are prepackaged generative AI models that let developers and enthusiasts easily get started with generative AI, iterate quickly and harness the power of RTX for accelerating AI on Windows PCs. NVIDIA AI Blueprints are reference projects that show developers how to use NIM microservices to build the next generation of AI experiences.

NIM and AI Blueprints are optimized for GeForce RTX 50 Series GPUs. These technologies work together seamlessly to help developers and enthusiasts build, iterate and deliver cutting-edge AI experiences on AI PCs.

NVIDIA NIM Accelerates Generative AI on PCs

While AI model development is rapidly advancing, bringing these innovations to PCs remains a challenge for many people. Models posted on platforms like Hugging Face must be curated, adapted and quantized to run on PCs. They also need to be integrated into new AI application programming interfaces (APIs) to ensure compatibility with existing tools, and converted to optimized inference backends for peak performance.

NVIDIA NIM microservices for RTX AI PCs and workstations can ease the complexity of this process by providing access to community-driven and NVIDIA-developed AI models. These microservices are easy to download and connect to via industry-standard APIs and span the key modalities essential for AI PCs.
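
The post does not spell out which industry-standard APIs are meant. As a minimal sketch, assuming a NIM microservice is already running locally and exposes an OpenAI-style chat completions route, a client could build and send a request roughly as follows. The host, port, route, model name and the helper names `build_chat_request` and `send` are assumptions for illustration, not details from this article; the documentation of the specific microservice has the actual values. Building the request is kept separate from sending it so the payload can be inspected without a running service; with a service up, `send(build_chat_request("Summarize this PDF"))` would return the reply text.

```python
import json
import urllib.request

# Assumptions (not from the article): the microservice listens on
# localhost:8000 and serves an OpenAI-style /v1/chat/completions route.
BASE_URL = "http://localhost:8000/v1"
# Placeholder model name; replace with the one your microservice reports.
MODEL_NAME = "example/placeholder-model"


def build_chat_request(
    prompt: str,
    model: str = MODEL_NAME,
    base_url: str = BASE_URL,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```
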
The microservices are also compatible with a wide range of AI tools and offer flexible deployment options, whether on PCs, in data centers or in the cloud.

NIM microservices include everything needed to run optimized models on PCs with RTX GPUs, including prebuilt engines for specific GPUs, the NVIDIA TensorRT software development kit (SDK), the open-source NVIDIA TensorRT-LLM library for accelerated inference using Tensor Cores, and more.

Microsoft and NVIDIA worked together to enable NIM microservices and AI Blueprints for RTX in Windows Subsystem for Linux (WSL2). With WSL2, the same AI containers that run on data center GPUs can now run efficiently on RTX PCs, making it easier for developers to build, test and deploy AI models across platforms.

In addition, NIM and AI Blueprints harness key innovations of the Blackwell architecture that the GeForce RTX 50 Series is built on, including fifth-generation Tensor Cores and support for FP4 precision.

Tensor Cores Drive Next-Gen AI Performance

AI calculations are incredibly demanding and require vast amounts of processing power. Whether generating images and videos or understanding language and making real-time decisions, AI models rely on hundreds of trillions of mathematical operations being completed every second. To keep up, computers need specialized hardware built specifically for AI.

NVIDIA GeForce RTX desktop GPUs deliver up to 3,352 AI TOPS for unmatched speed and efficiency in AI-powered workflows.

In 2018, NVIDIA GeForce RTX GPUs changed the game by introducing Tensor Cores, dedicated AI processors designed to handle these intensive workloads. Unlike traditional computing cores, Tensor Cores are built to accelerate AI by performing calculations faster and more efficiently. This breakthrough helped bring AI-powered gaming, creative tools and productivity applications into the mainstream.

The Blackwell architecture takes AI acceleration to the next level.
The fifth-generation Tensor Cores in Blackwell GPUs deliver up to 3,352 AI TOPS to handle even more demanding AI tasks and run multiple AI models simultaneously. This means faster AI-driven experiences, from real-time rendering to intelligent assistants, that pave the way for greater innovation in gaming, content creation and beyond.

FP4: Smaller Models, Bigger Performance

Another way to optimize AI performance is through quantization, a technique that reduces model size so that models run faster and need less memory.

Enter FP4, an advanced quantization format that allows AI models to run faster and leaner without compromising output quality. Compared with FP16, it reduces model size by up to 60% and more than doubles performance, with minimal quality degradation.

For example, Black Forest Labs' FLUX.1 [dev] model at FP16 requires over 23GB of VRAM, meaning it can only be supported by the GeForce RTX 4090 and professional GPUs. With FP4, FLUX.1 [dev] requires less than 10GB, so it can run locally on more GeForce RTX GPUs.

On a GeForce RTX 4090 with FP16, the FLUX.1 [dev] model can generate an image in 15 seconds with just 30 steps. On a GeForce RTX 5090 with FP4, an image can be generated in just over five seconds.

FP4 is natively supported by the Blackwell architecture, making it easier than ever to deploy high-performance AI on local PCs. It's also integrated into NIM microservices, effectively optimizing models that were previously difficult to quantize.
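
The FP4 figures above can be sanity-checked with simple arithmetic. The sketch below first computes the idealized saving from storing weights in 4 bits instead of 16 for a hypothetical 10-billion-parameter model (the parameter count is an illustrative assumption, not a figure from this article), then derives the ratios implied by the quoted FLUX.1 [dev] numbers. The quoted values are bounds ("over 23GB", "less than 10GB", "just over five seconds"), so the results are rough. The idealized weight-only saving is 75%, while the quoted VRAM figures imply roughly 57%, in line with the "up to 60%" claim; a plausible but unconfirmed explanation is that not every component is quantized and low-precision formats carry extra scaling data. The timing comparison also spans two different GPUs, so it does not isolate the effect of FP4 alone. The function names are hypothetical helpers.

```python
def weights_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage needed for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


def reduction_percent(before: float, after: float) -> float:
    """Percentage saved when going from `before` to `after`."""
    return (1 - after / before) * 100


def speedup(before_seconds: float, after_seconds: float) -> float:
    """How many times faster the second timing is than the first."""
    return before_seconds / after_seconds


# Idealized case: hypothetical 10-billion-parameter model (assumption).
HYPOTHETICAL_PARAMS = 10e9
fp16_gb = weights_size_gb(HYPOTHETICAL_PARAMS, 16)
fp4_gb = weights_size_gb(HYPOTHETICAL_PARAMS, 4)
ideal = reduction_percent(fp16_gb, fp4_gb)
print(f"ideal weights: {fp16_gb:.1f} GB -> {fp4_gb:.1f} GB ({ideal:.0f}% smaller)")

# Figures quoted in the article, treated as rough bounds:
# over 23 GB at FP16, under 10 GB at FP4; 15 s vs. just over 5 s.
print(f"quoted VRAM: about {reduction_percent(23, 10):.0f}% smaller")
print(f"quoted time: up to about {speedup(15, 5):.1f}x faster")
```
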
By enabling more efficient AI processing, FP4 helps bring faster, smarter AI experiences to content creation.

AI Blueprints Power Advanced AI Workflows on RTX PCs

NVIDIA AI Blueprints, built on NIM microservices, provide prepackaged, optimized reference implementations that make it easier to develop advanced AI-powered projects, whether for digital humans, podcast generators or application assistants.

At CES, NVIDIA demonstrated PDF to Podcast, a blueprint that allows users to convert a PDF into a fun podcast, and even create a Q&A with the AI podcast host afterwards. This workflow integrates seven different AI models, all working in sync to deliver a dynamic, interactive experience.

The blueprint for PDF to Podcast harnesses several AI models to seamlessly convert PDFs into engaging podcasts, complete with an interactive Q&A feature hosted by an AI-powered podcast host.

With AI Blueprints, users can quickly move from experimenting with AI to developing with it on RTX PCs and workstations.

NIM and AI Blueprints Coming Soon to RTX PCs and Workstations

Generative AI is pushing the boundaries of what's possible across gaming, content creation and more. With NIM microservices and AI Blueprints, the latest AI advancements are no longer limited to the cloud; they're now optimized for RTX PCs. With RTX GPUs, developers and enthusiasts can experiment, build and deploy AI locally, right from their PCs and workstations.

NIM microservices and AI Blueprints are coming soon, with initial hardware support for GeForce RTX 50 Series, GeForce RTX 4090 and 4080, and NVIDIA RTX 6000 and 5000 professional GPUs. Additional GPUs will be supported in the future.
