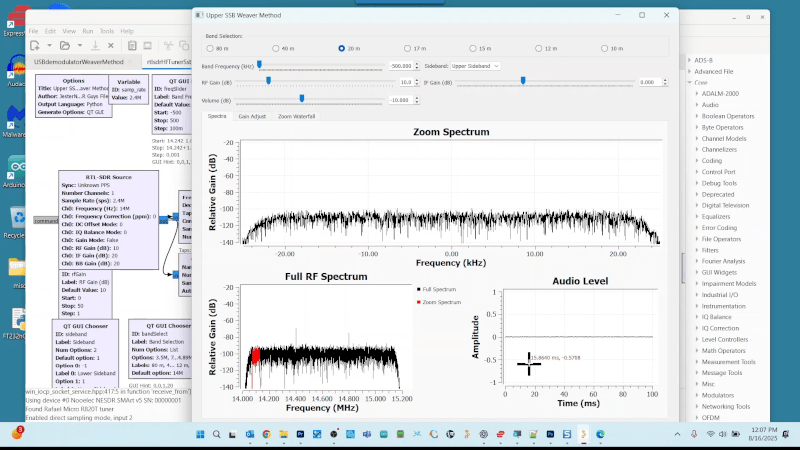

Recently, Paul Maine ran some experiments with GNU Radio and an RTL-SDR dongle. He built an SSB receiver and, well, documented the whole thing in a video. If you're interested, you can watch it. It isn't all that exciting, but there it is: a project for anyone with nothing better to do.
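The post doesn't describe how the receiver in the video is built. As a rough illustration of what any SSB receiver has to do, here is a minimal sketch of one common approach. It assumes the IQ samples have already been tuned so that the suppressed carrier sits at 0 Hz. It keeps only the positive-frequency half of the spectrum for upper sideband (USB) or only the negative-frequency half for lower sideband (LSB), then takes the real part. The function names are hypothetical, and the naive DFT is used for readability, not speed. A GNU Radio flowgraph would normally do this with filter blocks instead.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(n^2)), used here only for clarity."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
        for f in range(n)
    ]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [
        sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
        for k in range(n)
    ]


def ssb_demodulate(iq, sideband="usb"):
    """Recover audio from complex baseband IQ whose suppressed carrier is at 0 Hz.

    Keeps only the positive-frequency bins (USB) or negative-frequency bins (LSB),
    then takes the real part, which turns each kept complex tone into a real tone.
    """
    if sideband not in ("usb", "lsb"):
        raise ValueError("sideband must be 'usb' or 'lsb'")
    n = len(iq)
    spectrum = dft(iq)
    for f in range(n):
        positive = 0 < f < n / 2   # bins above the carrier
        negative = f > n / 2       # bins below the carrier (wrapped indices)
        keep = positive if sideband == "usb" else negative
        if not keep:
            spectrum[f] = 0        # drop the other sideband, DC and Nyquist
    return [sample.real for sample in idft(spectrum)]


if __name__ == "__main__":
    n, fs = 256, 8000.0
    # Synthetic IQ: a 1000 Hz tone in the upper sideband and a 1500 Hz tone
    # (at half amplitude) in the lower sideband; both fall exactly on DFT bins.
    iq = [
        cmath.exp(2j * math.pi * 1000 * k / fs)
        + 0.5 * cmath.exp(-2j * math.pi * 1500 * k / fs)
        for k in range(n)
    ]
    usb_audio = ssb_demodulate(iq, "usb")
    lsb_audio = ssb_demodulate(iq, "lsb")
    print(round(usb_audio[0], 3), round(lsb_audio[0], 3))
```

Selecting "usb" recovers only the 1000 Hz tone and "lsb" only the 1500 Hz tone. That sideband selection is what lets an SSB receiver separate two signals sitting on either side of the same carrier frequency.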

#SSBReceiver

#GNURadio

#RTLSDR

#Technology

#Experiments