
WWW.ARCHPAPER.COM
Steve Tobin’s New York Roots evokes connectivity in the Garment District

What is usually hidden below the surface erupts from the ground in Manhattan’s Garment District. Cold, white steel branches curve and splay in different directions, creating a tangled 22-foot installation. Steve Tobin’s New York Roots populates the Broadway plazas between 39th and 41st Streets with seven sculptures that vary in height but all evoke the unseen networks that lie beneath the ground. The installation is a collaboration between the Garment District Alliance and New York City’s Department of Transportation Art Program.

Pedestrians can weave in and out of the structures, which invite them to think about what connects us as human beings. The intertwined shapes and flowing forms create dramatic views and open spaces, offering new perspectives as people walk around and under them.

Since 2010, the plaza in Midtown has featured artists including Chakaia Booker, who created a 35-foot-tall abstract sculpture made of repurposed rubber tires. New York Roots is the latest installation hosted by the Garment District Alliance to touch down in the neighborhood.

Image caption: The installation New York Roots consists of seven sculptures made of steel, the tallest of which reaches 22 feet. (Alexandre Ayer)

“New York Roots is a captivating addition to the Garment District that transforms our public plazas into spaces for reflection and serves as an important reminder to stay rooted in our communities,” said Barbara A. Blair, president of the Garment District Alliance.

Officially named in 1919, the Garment District in Midtown Manhattan was once New York City’s epicenter of clothing manufacturing. Bolts of fabric and racks of clothing lined the streets to be sent all over the country. As it became cheaper to make clothes abroad, the factories that once supported the garment industry shut down, leading to the neighborhood’s decline. Tobin’s New York Roots recalls the history of the city, connecting the past and the future of the once-vibrant neighborhood.

Among his many significant installations, Tobin is recognized in New York City for Trinity Root. Dedicated in 2005, the 20-foot copper sculpture was one of the first monuments to commemorate 9/11. The sculpture was inspired by a sycamore tree that protected St. Paul’s Chapel at Trinity Church as Lower Manhattan around it was decimated by the attacks.

New York Roots and Tobin’s other works aren’t just art; they act as guardians of space, encouraging people to pause, connect, and feel a sense of unity in their surroundings. New York Roots will be on view to the public through February 2026.


WWW.THISISCOLOSSAL.COM
A Bizarre Animated Music Video for Foxwarren Compels Us to Listen

Director Winston Hacking and animator Philippe Tardif are back with another collaborative music video that propels us through an uncanny series of portals. For Foxwarren’s catchy “Listen2me,” the pair created a cheeky animated collage in which characters gab as we’re pushed closer to their faces. The result is a mesmerizing, surreal video that, set to Foxwarren’s pleading lyrics, compels our attention. Find more from Hacking on Vimeo.


WWW.ZDNET.COM
5 easy ways to transfer photos from your Android device to your Windows PC

You can transfer photos from your Android phone to your Windows computer using a variety of methods. Here are step-by-step instructions for the five easiest ways.


WWW.FORBES.COM
Poetry And Deception: Secrets Of Anthropic’s Claude 3.5 Haiku AI Model

Image caption: Two new research papers from Anthropic provide surprising insights into how an AI model “thinks.” The results are fascinating and point to the need for further study. (Pixabay)

Anthropic recently published two breakthrough research papers that provide surprising insights into how an AI model “thinks.” One of the papers follows Anthropic’s earlier research that linked human-understandable concepts with LLMs’ internal pathways to understand how model outputs are generated. The second paper reveals how Anthropic’s Claude 3.5 Haiku model handled simple tasks associated with ten model behaviors.

These two research papers have provided valuable information on how AI models work: not by any means a complete understanding, but at least a glimpse. Let’s dig into what we can learn from that glimpse, including some possibly minor but still important concerns about AI safety.

Looking ‘Under The Hood’ Of An LLM

LLMs such as Claude aren’t programmed like traditional computers. Instead, they are trained with massive amounts of data. This process creates AI models that behave like black boxes, which obscures how they can produce insightful information on almost any subject. However, black-box AI isn’t an architectural choice; it is simply a result of how this complex and nonlinear technology operates.

Complex neural networks within an LLM use billions of interconnected nodes to transform data into useful information. These networks contain vast internal processes with billions of parameters, connections and computational pathways. Each parameter interacts non-linearly with other parameters, creating immense complexities that are almost impossible to understand or unravel. According to Anthropic, “This means that we don’t understand how models do most of the things they do.”

Anthropic follows a two-step approach to LLM research. First, it identifies features, which are interpretable building blocks that the model uses in its computations. Second, it describes the internal processes, or circuits, by which features interact to produce model outputs. Because of the model’s complexity, Anthropic’s new research could illuminate only a fraction of the LLM’s inner workings. But what was revealed about these models seemed more like science fiction than real science.

What We Know About How Claude 3.5 Works

Image caption: Attribution graphs were applied to these phenomena for researching Claude 3.5 Haiku. (Anthropic)

One of Anthropic’s groundbreaking research papers carried the title “On the Biology of a Large Language Model.” The paper examined how the scientists used attribution graphs to trace internally how the Claude 3.5 Haiku language model transformed inputs into outputs. Researchers were surprised by some results. Here are a few of their interesting discoveries:

Multi-Step Reasoning: Claude 3.5 Haiku was able to complete some complex reasoning tasks internally without showing any intermediate steps that contributed to the output. Researchers were surprised to find out that the model could create intermediate reasoning steps “in its head.” Claude likely used a more sophisticated internal process than previously thought.
Red flag: This raises some concerns because of the model’s lack of transparency. Biased or flawed logic could open the door for a model to intentionally obscure its motives or actions.

Planning for Text Generation: Before creating text such as poetry, the model used structural elements of the text to create a list of rhyming words in advance, then used that list to construct the next lines. Researchers were surprised to discover that the model used that amount of forward planning, which in some respects is human-like. Research showed it chose words like “rabbit” beforehand because they rhymed with later phrases such as “grab it.”
Red flag: This is impressive, but it is possible that a model could use sophisticated planning capability to create deceptive content.

Chain-of-Thought Reasoning: The model’s stated chain-of-thought reasoning steps did not necessarily reflect its actual decision-making processes as revealed by research. It was shown that sometimes Claude performed reasoning steps internally but didn’t reveal them. As an example, research found that the model silently determined that “Dallas is in Texas” before actually stating that Austin was the state capital. This suggests that explanations for reasoning could potentially be fabricated after an answer has been determined, or that the model might intentionally conceal its reasoning from the user. Anthropic previously published deeper research into this subject in a paper entitled “Reasoning Models Don’t Always Say What They Think.”
Red flag: This discrepancy opens the door for intentional deception and misleading information. It is not dangerous for a model to reason internally, because humans do that, too. The problem here is that the external explanation doesn’t match the model’s internal “thoughts.” That could be intentional or just a function of its processing. Nevertheless, it erodes trust and hinders accountability.

We Need More Research Into LLMs’ Internal Workings And Security

Scientists who conducted the research for “On the Biology of a Large Language Model” concede that Claude 3.5 Haiku exhibits some concealed operations and goals not evident in its outputs. The attribution graphs revealed a number of hidden issues. These discoveries underscore the complexity of the model’s internal behavior and highlight the importance of continued efforts to make models more transparent and aligned with human expectations. It is likely these issues also appear in other similar LLMs.

With respect to my red flags noted above, it should be mentioned that Anthropic continually updates its Responsible Scaling Policy, which has been in effect since September 2023. Anthropic has made a commitment not to train or deploy models capable of causing catastrophic harm unless safety and security measures have been implemented that keep risks within acceptable limits. Anthropic has also stated that all of its models meet the ASL Deployment and Security Standards, which provide a baseline level of safe deployment and model security.

As LLMs have grown larger and more powerful, deployment has spread to critical applications in areas such as healthcare, finance and defense. The increase in model complexity and wider deployment has also increased pressure to achieve a better understanding of how AI works. It is critical to ensure that AI models produce fair, trustworthy, unbiased and safe outcomes.

Research is important for our understanding of LLMs, not only to improve and more fully utilize AI, but also to expose potentially dangerous processes. The Anthropic scientists have examined just a small portion of this model’s complexity and hidden capabilities. This research reinforces the need for more study of AI’s internal operations and security.

In my view, it is unfortunate that our complete understanding of LLMs has taken a back seat to the market’s preference for AI’s high-performance outcomes and usefulness. We need to thoroughly understand how LLMs work to ensure safety guardrails are adequate.

Moor Insights & Strategy provides or has provided paid services to technology companies, like all tech industry research and analyst firms. These services include research, analysis, advising, consulting, benchmarking, acquisition matchmaking and video and speaking sponsorships. Moor Insights & Strategy does not have paid business relationships with any company mentioned in this article.


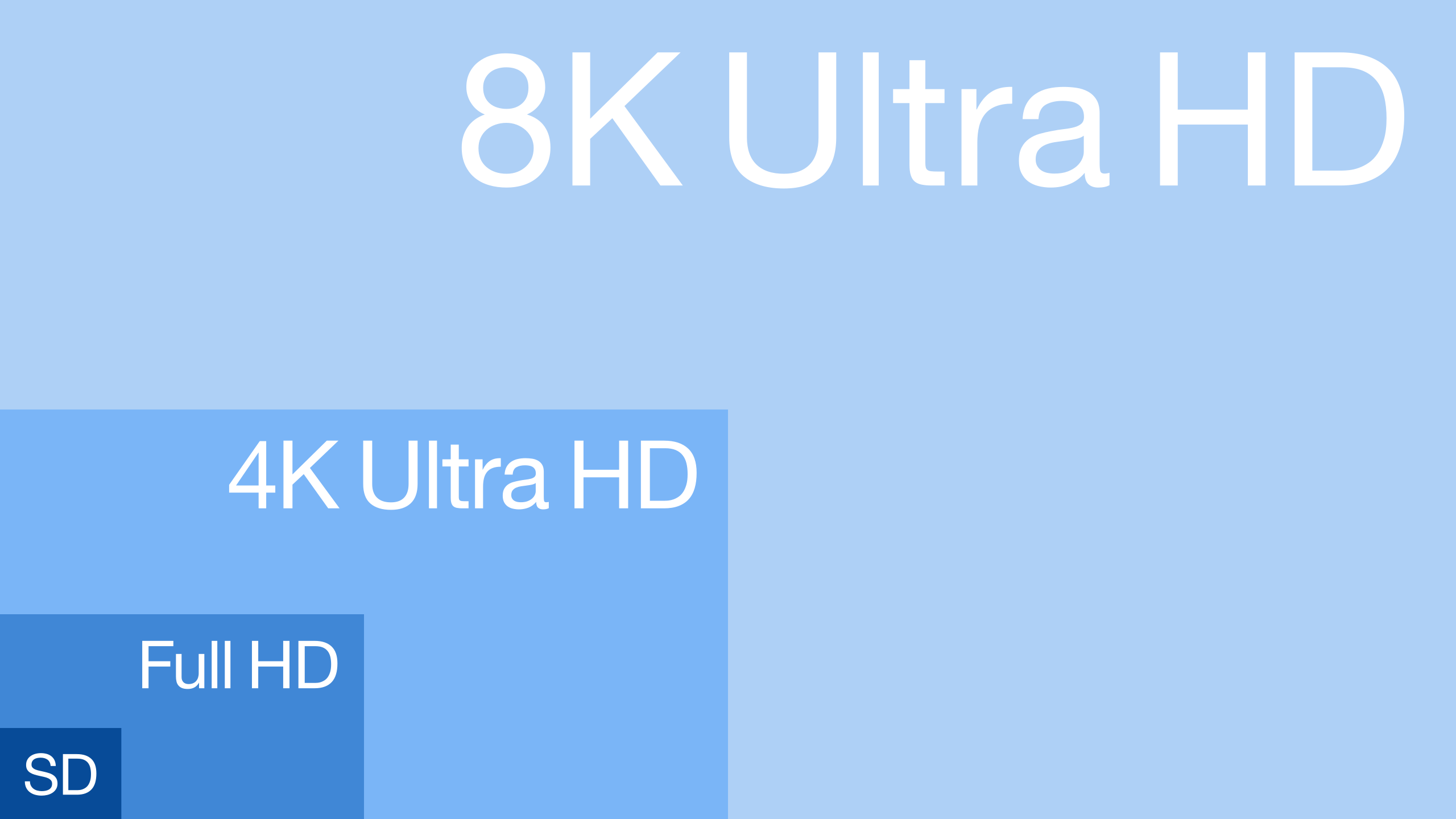

WWW.TECHSPOT.COM
Sony stops making 8K TVs, but ultra high-def cameras remain key to future

What just happened? Once positioned as the successor to Ultra HD, the 8K TV format has struggled to gain traction. Sony has been one of the tech's most prominent backers, but is now stepping away from the market. A long-term return remains possible, but only if broader industry conditions shift.

Sony has quietly confirmed it has no plans to release new 8K TVs anytime soon. Its last models, the 75- and 85-inch Bravia XR Z9K, launched in 2022 and remained in rotation for two years. Now the company is discontinuing the line entirely. Sony said it may eventually return to 8K TVs, but there is not enough momentum in the market right now.

The company's remaining 8K TVs will stay on shelves until inventory runs out. Its upcoming models focus on high-end QD-OLED panels with 4K Ultra HD resolution, sold under the Bravia 8 II brand. The manufacturer is also developing a 4K LCD prototype featuring RGB LED backlighting.

Defined by the ITU-R BT.2020 standard for 8K broadcasting, the format supports a 7680×4320 resolution with 33.2 million pixels, which is double the linear resolution of 4K, triple that of 1440p, and four times that of 1080p.

The newly introduced PlayStation 5 Pro supports 8K video output via HDMI 2.1, but its debut failed to ignite meaningful consumer interest, and 8K content remains scarce. The few remaining 8K TVs on the market still sell at a premium compared to 4K Ultra HD or even Full HD models. Massive 8K displays need native 8K content to deliver optimal results, since upscaled lower-resolution material can introduce artifacts or blurriness.

Ironically, Sony is exiting the 8K TV market while continuing to produce 8K native cameras designed to capture video feeds at a 7680×4320 resolution. Early adopters eager to get a jump on that 8K content have very few other options. Samsung has released massive 8K TVs with microLED backlighting, while LG introduced its latest 8K models in 2024 with the QNED 99 Series.
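
The resolution comparison above is easy to check by hand. The short Python sketch below does the arithmetic; the 1080p, 1440p and 4K dimensions are the standard 16:9 figures and are an assumption here, since the article only states the 8K resolution. The helper names are illustrative.

```python
# Common 16:9 resolutions as (width, height) in pixels.
# Only the 8K figure comes from the article; the others are the
# standard values for these formats (assumed here).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(resolution):
    """Total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


def linear_ratio(a, b):
    """How many times wider resolution a is than b (same aspect ratio)."""
    return a[0] / b[0]


def pixel_ratio(a, b):
    """How many times more pixels resolution a has than b."""
    return pixel_count(a) / pixel_count(b)


if __name__ == "__main__":
    eight_k = RESOLUTIONS["8K"]
    print(f"8K total pixels: {pixel_count(eight_k):,}")
    for name in ("4K", "1440p", "1080p"):
        other = RESOLUTIONS[name]
        print(
            f"8K vs {name}: {linear_ratio(eight_k, other):g}x linear, "
            f"{pixel_ratio(eight_k, other):g}x pixels"
        )
```

Note that the "double, triple, four times" figures are linear factors. Because pixel count scales with the square of the linear factor, 8K carries 4, 9 and 16 times as many pixels as 4K, 1440p and 1080p respectively.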


WWW.DIGITALTRENDS.COM
Staples slashed the price of the Samsung Galaxy Tab S9+ by $300

For those in the market for a new Android tablet, check out this offer for the Samsung Galaxy Tab S9+. The device’s price is down from $1,000 to a more affordable $700, for huge savings of $300. We’re not sure how much time remains before this bargain from Staples’ tablet deals disappears, though, so if you don’t want to miss out on the discount, we highly recommend completing your purchase as soon as possible.

The Samsung Galaxy Tab S10 series is the latest in the popular line of Android tablets, but the Samsung Galaxy Tab S9+ is still a worthwhile purchase today for most people. With the Qualcomm Snapdragon 8 Gen 2 for Galaxy processor and 12GB of RAM, it works pretty smoothly, and its 256GB of onboard storage can be expanded with a microSD card for up to 1TB of additional space. The tablet also comes with the S Pen stylus, which makes it easier to take notes and draw sketches.

In our comparison between the Samsung Galaxy Tab S9 versus S9+ versus S9 Ultra, the Samsung Galaxy Tab S9+ serves as a good middle ground between the smaller Samsung Galaxy Tab S9 and the larger Samsung Galaxy Tab S9 Ultra. All three tablets feature a Dynamic AMOLED 2X touchscreen, but the 12.4-inch display of the Samsung Galaxy Tab S9+ provides a bit more room for using apps and watching streaming shows than the 11-inch display of the Samsung Galaxy Tab S9, without being as unwieldy as the 14.6-inch display of the Samsung Galaxy Tab S9 Ultra.

The Samsung Galaxy Tab S9+ already presents amazing value at its sticker price of $1,000, so you wouldn’t want to miss this opportunity to get it for a discounted price of $700. There’s always high demand for Samsung tablet deals, though, and we don’t think it’s going to be any different in this case. If you want to buy the Samsung Galaxy Tab S9+ at $300 off, you should add it to your cart and finish the checkout process immediately while stocks of the tablet are still available.


WWW.WSJ.COM
David Altmejd and Kennedy Yanko: Sculpting the Future

David Altmejd offers unsettling sci-fi visions at White Cube while Kennedy Yanko blends the harshness of metal with the flexibility of paint at Salon 94 and James Cohan.


ARSTECHNICA.COMResearchers find AI is pretty bad at debugging—but they’re working on itDebugging Researchers find AI is pretty bad at debugging—but they’re working on it Even when given access to tools, AI agents can't reliably debug software. Samuel Axon – Apr 11, 2025 6:26 pm | 0 Story text Size Small Standard Large Width * Standard Wide Links Standard Orange * Subscribers only Learn more There are few areas where AI has seen more robust deployment than the field of software development. From "vibe" coding to GitHub Copilot to startups building quick-and-dirty applications with support from LLMs, AI is already deeply integrated. However, those claiming we're mere months away from AI agents replacing most programmers should adjust their expectations because models aren't good enough at the debugging part, and debugging occupies most of a developer's time. That's the suggestion of Microsoft Research, which built a new tool called debug-gym to test and improve how AI models can debug software. Debug-gym (available on GitHub and detailed in a blog post) is an environment that allows AI models to try and debug any existing code repository with access to debugging tools that aren't historically part of the process for these models. Microsoft found that without this approach, models are quite notably bad at debugging tasks. With the approach, they're better but still a far cry from what an experienced human developer can do. Here's how Microsoft's researchers describe debug-gym: Debug-gym expands an agent’s action and observation space with feedback from tool usage, enabling setting breakpoints, navigating code, printing variable values, and creating test functions. Agents can interact with tools to investigate code or rewrite it, if confident. We believe interactive debugging with proper tools can empower coding agents to tackle real-world software engineering tasks and is central to LLM-based agent research. 
The fixes proposed by a coding agent with debugging capabilities, and then approved by a human programmer, will be grounded in the context of the relevant codebase, program execution and documentation, rather than relying solely on guesses based on previously seen training data.
[Figure: results of the tests using debug-gym. Agents using debugging tools drastically outperformed those that didn't, but their success rate still wasn't high enough. Credit: Microsoft Research]
This approach is much more successful than relying on the models as they're usually used, but when your best case is a 48.4 percent success rate, you're not ready for primetime. The limitations are likely because the models don't fully understand how to best use the tools, and because their current training data is not tailored to this use case.
"We believe this is due to the scarcity of data representing sequential decision-making behavior (e.g., debugging traces) in the current LLM training corpus," the blog post says. "However, the significant performance improvement... validates that this is a promising research direction."
This initial report is just the start of the efforts, the post claims. The next step is to "fine-tune an info-seeking model specialized in gathering the necessary information to resolve bugs." If the model is large, the best move to save inference costs may be to "build a smaller info-seeking model that can provide relevant information to the larger one."
This isn't the first time we've seen outcomes that suggest some of the ambitious ideas about AI agents directly replacing developers are pretty far from reality. There have been numerous studies already showing that even though an AI tool can sometimes create an application that seems acceptable to the user for a narrow task, the models tend to produce code laden with bugs and security vulnerabilities, and they aren't generally capable of fixing those problems.
This is an early step on the path to AI coding agents, but most researchers agree it remains likely that the best outcome is an agent that saves a human developer a substantial amount of time, not one that can do everything they can do.
Samuel Axon, Senior Editor
Samuel Axon is a senior editor at Ars Technica, where he is the editorial director for tech and gaming coverage. He covers AI, software development, gaming, entertainment, and mixed reality. He has been writing about gaming and technology for nearly two decades at Engadget, PC World, Mashable, Vice, Polygon, Wired, and others. He previously ran a marketing and PR agency in the gaming industry, led editorial for the TV network CBS, and worked on social media marketing strategy for Samsung Mobile at the creative agency SPCSHP. He is also an independent software and game developer for iOS, Windows, and other platforms, and he is a graduate of DePaul University, where he studied interactive media and software development.


WWW.VOX.COM
Trump defied a court order. The Supreme Court just handed him a partial loss.
The facts underlying Noem v. Abrego Garcia are shocking, even by the standards of the Trump administration’s treatment of immigrants. The Supreme Court just ruled that the immigrant at the heart of the case get some relief, but that relief is only partial.
In mid-March, President Donald Trump’s government deported Kilmar Armando Abrego Garcia to El Salvador, where he is currently detained in a notorious prison supposedly reserved for terrorists. He was deported even though, in 2019, an immigration judge had issued an order explicitly forbidding the government from sending Abrego Garcia to El Salvador because he faced a “clear probability of future persecution” if returned to that nation. This court order is still in effect today.
No one, including Trump’s own lawyers, has tried to justify this decision under the law. The administration claims that Abrego Garcia was deported as the result of an “administrative error.” When a federal judge asked a Justice Department lawyer why the federal government cannot bring him back to this country, that lawyer responded, “The first thing I did was ask my clients that very question. I’ve not received, to date, an answer that I find satisfactory.”
The judge ordered the federal government to “facilitate and effectuate the return of [Abrego Garcia] to the United States by no later than 11:59 PM on Monday, April 7.”
And yet Abrego Garcia remains in El Salvador. After the Trump administration asked the Supreme Court to vacate the judge’s order, Chief Justice John Roberts temporarily blocked the requirement that he be returned to give his Court time to consider the case. On Thursday evening, the full Court lifted that block in what appears to be a 9-0 decision (sometimes, justices disagree with an order but do not make that dissent public).
Still, Thursday’s decision does not order Abrego Garcia’s immediate release and return to the United States.
While the Court’s three Democrats all joined an opinion by Justice Sonia Sotomayor indicating that they would have simply left the lower court’s order in place, the full Supreme Court’s order sends the case back down to the lower court for additional proceedings.
The Supreme Court concludes that the lower court’s order “properly requires the Government to ‘facilitate’ Abrego Garcia’s release from custody in El Salvador and to ensure that his case is handled as it would have been had he not been improperly sent to El Salvador.” But it adds that the “intended scope of the term ‘effectuate’ in the District Court’s order” (to “facilitate and effectuate” his return) “is, however, unclear, and may exceed the District Court’s authority.” The word “facilitate” suggests that the government must take what steps it can to make something happen, while the word “effectuate” suggests that it needs to actually make it happen.
Because the Supreme Court does not elaborate in much detail on this conclusion, it is difficult to know why the Republican justices decided to limit the lower court’s order in this way, but the Trump administration’s brief in this case may offer a hint as to what the Supreme Court means.
The administration’s primary argument was that “the United States does not control the sovereign nation of El Salvador, nor can it compel El Salvador to follow a federal judge’s bidding.” So it claimed that the lower court’s order was invalid because it is unenforceable.
The Supreme Court’s order does not go that far, but it does suggest that a majority of the justices are open to the possibility that the US government will request Abrego Garcia’s release, that the Salvadoran government says “no,” and that at some point the courts will not be able to push US officials to do more.
That said, the Supreme Court’s order also states that “the Government should be prepared to share what it can concerning the steps it has taken and the prospect of further steps.” So the justices, at the very least, expect a judge to supervise the administration’s behavior and to intervene if they conclude that it is not doing enough to secure Abrego Garcia’s release.
It is likely, in other words, that the Trump administration will still be able to drag its feet in this case while it waits for the lower court to, in the Supreme Court’s words, “clarify its directive.” And there may be more rounds of litigation if the administration does not use all the tools at its disposal to free Abrego Garcia. In the meantime, of course, he is likely to remain in a prison known for its human rights abuses.
Still, it is notable that none of the justices publicly dissented from Thursday’s order. It seems, in other words, that all nine of the justices are willing to concede that, at the very least, the Trump administration must take some steps to correct its behavior when it does something even its own lawyers cannot defend.
