WWW.FORBES.COM
The Freelance Factor: Why Hybrid Work Systems Need An Upgrade

A silent revolution is taking place in today’s rapidly evolving work landscape. According to new research from Remote, 91% of companies have maintained or increased their use of freelancers over the past three years. This isn’t just a passing trend; it’s the future of work materializing before our eyes, with 52% of businesses explicitly increasing their freelance utilization and nearly half turning to contract workers to fill roles they can't staff permanently.

As companies struggle to implement hybrid work policies that satisfy everyone, leaders should remember that “distributed” work doesn’t just mean spread from place to place. It can also mean crossing organizational boundaries with an increasingly freelance workforce.

The Rise Of The Fluid Workforce

What’s driving this freelance surge? Remote’s “State of Freelance Work 2025” report demonstrates a change in how companies operate and how workers want to contribute. The data reflects responses from 1,900 leaders with talent responsibilities across five countries (UK, USA, Netherlands, Germany, and Australia), alongside 3,300 freelancers from desk-based industries in ten countries. The research was conducted in November 2024.

For businesses, freelancers offer specialized expertise on demand without the financial commitment of full-time employment. Engineering and IT lead the charge, with 37% of surveyed companies hiring freelancers in these domains, followed closely by creative roles (34%), customer support (32%), and marketing (31%).

Why are workers seeking more freelance and contract employment?
- 41% want to be their own boss
- 31% need supplemental income
- 28% desire greater flexibility than traditional employment allows

Only 6% say return-to-office mandates were their reason for going freelance, which suggests that a deeper desire for autonomy, not resistance to office policy, is the real driver.

A "silver freelance" trend is also emerging across the market. Remote’s report reveals that 45% of employers have observed an increase in freelancers aged 55 and over, with 43% explicitly preferring this demographic. Companies cite experience, reliability, and mentorship capabilities as key advantages, especially in consulting roles where institutional knowledge creates immediate value without extensive onboarding.

Digital Environments: Where Work Lives

This shift toward a more fluid workforce demands reconsideration of how we structure work environments. Companies excelling in this new paradigm are those creating clear digital destinations for work: "work where the work is," as the author has written.

Despite the clear benefits of freelance relationships, 27% of companies report communication issues as a significant obstacle. This shouldn't surprise us when nearly half (49%) are managing these vital relationships through makeshift in-house systems, often rudimentary spreadsheets and disjointed processes that create friction.

Organizations succeeding with blended workforces are implementing digital work environments with explicit agreements about which tools host which types of work. They designate specific channels for formal communications, collaborative documents for shared editing, and project templates that organize multiple information types while maintaining flexibility. Clearly documented and transparent business rhythms can also help freelancers see how broader decision making and communication cadences influence the work they are doing, and the schedule required to do it.
These approaches mirror best practices for distributed internal teams but become even more critical when engaging external contributors who lack the luxury of absorbing workplace norms through osmosis.

The Freelance Administrative Burden

The Remote report highlights a serious issue: administrative tasks are hurting productivity for freelancers. A surprising 85% of freelancers say their invoices are paid late at least sometimes, while only 24% of full-time employees face similar delays. This payment priority gap points to inadequate operational systems for managing contractors, an area where improvement could yield significant competitive advantage. Companies that streamline contractor payments don't just reduce administrative overhead; they gain preferred access to top freelance talent who value reliable clients.

Many freelancers worry about being misclassified. About 40% feel they should be recognized as employees instead of contractors. At the same time, 36% of companies admit they sometimes misclassify employees as contractors. Today, this confusion can lead to legal problems and erode trust. For tomorrow, it indicates a lack of preparedness for a time when workers may be engaged on an even wider array of contract types.

Freelance Beyond Borders and Boundaries

The freelance model is also expanding the talent pool for many companies, with 37% of them hiring freelancers internationally. The primary drivers?

- Quality of work (59%)
- Reliability (54%)
- Skill level (51%)

For companies newer to global hiring, working across borders can introduce new challenges such as payment processing and cultural differences. For instance, the report highlights that clients may praise Italian freelancers for their creativity but sometimes find them "too social" because they have different communication styles.
Organizations making this work aren't just adept at navigating cultural differences; they're developing robust systems that create consistency across geographical boundaries and contractor relationships. In doing so, they're building the infrastructure necessary for tomorrow's workforce, whether employed, contracted, or some hybrid arrangement yet to emerge.

Building The Integrated Workforce Model

For business leaders wrestling with today's hybrid work challenges, there's a compelling case for seeing those efforts as investments in future capabilities. The infrastructure required for effective hybrid work (clear digital environments, consistent meeting practices, shared documentation systems) creates the foundation for successfully engaging a more fluid workforce.

Leading organizations are taking a structured approach to policies, which they view as tools to help coordinate work, not as ways to control workers. Instead of enforcing strict attendance rules, they create agreements that explain when, where, and how different types of work can be done effectively. They are also investing in technology that simplifies how they onboard, manage, and pay contractors. Most importantly, they're developing leaders capable of managing outcomes rather than activities, evaluating value over presence, and fostering connection across distance and contractual boundaries.

The Path Forward

The freelance future isn’t coming; it’s already here. The distributed workplace isn’t a temporary pandemic adaptation but a permanent evolution of how work happens. Organizations that view these realities as opportunities rather than inconveniences are positioning themselves for sustainable competitive advantage.

The question for leaders isn’t whether to prepare for a more fluid and freelance workforce but how quickly they can build the systems, processes, and culture to thrive with one. The talent they attract, and keep, may depend on it.


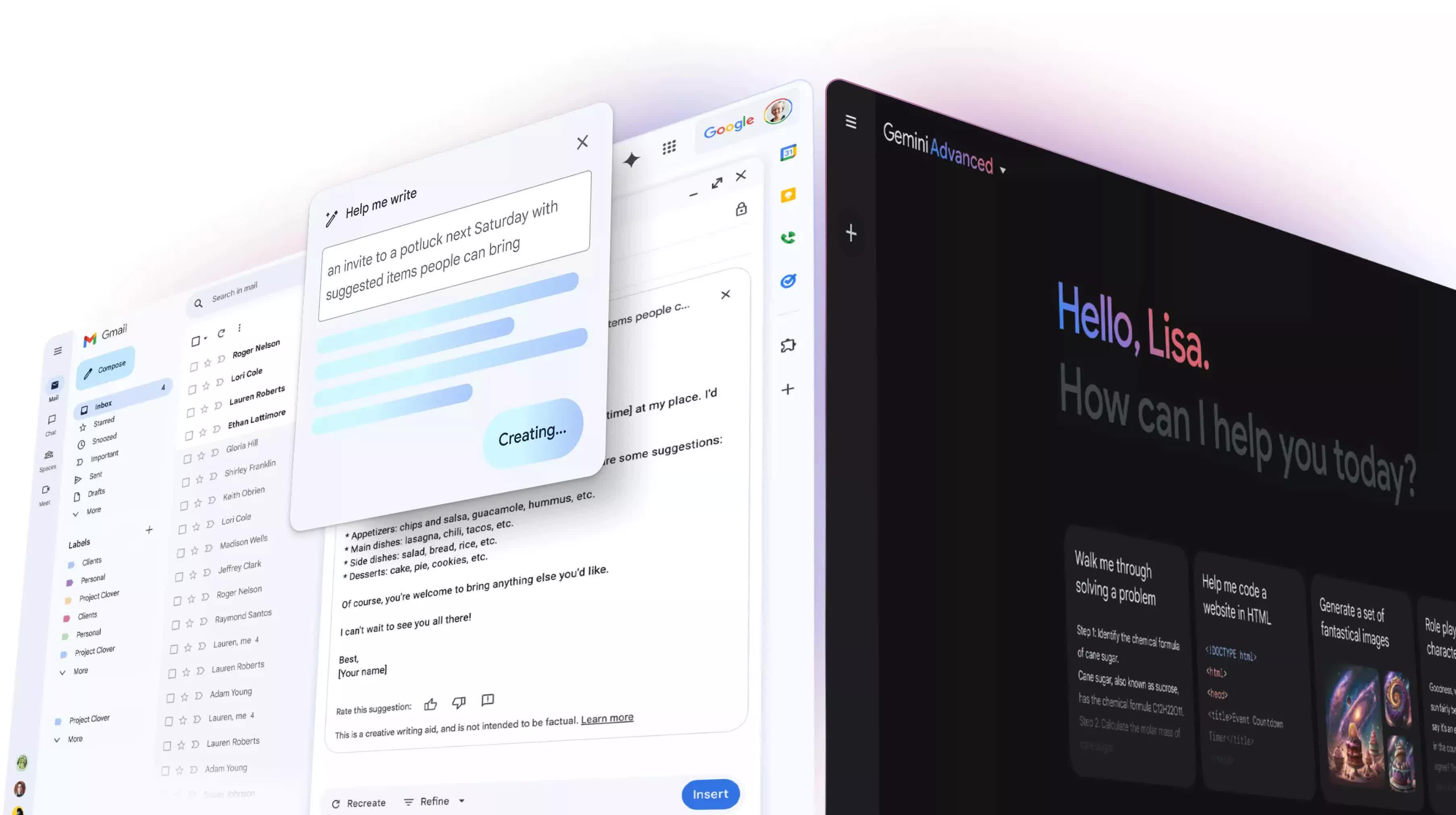

WWW.TECHSPOT.COM
Google undercuts Microsoft with 71% Workspace discount for US government agencies

What just happened? Google is making a bold move to challenge Microsoft's dominance in federal government software contracts by significantly slashing prices on its Workspace suite. Under a new government-wide agreement, the company is offering its productivity tools to US agencies at a steep 71 percent discount.

Tony Orlando, GM of specialty sales for Google Public Sector, positioned Workspace as a secure, AI-powered alternative to Microsoft's offerings. Google also confirmed that Workspace has achieved FedRAMP High authorization, a critical requirement for federal IT systems. This certification extends to Gemini, which recently became the first AI assistant to receive FedRAMP High approval. The accreditation ensures that transitioning to Workspace won't require costly AI add-ons or complex configurations.

Google is no stranger to government work. Hundreds of thousands of employees across the Department of Energy and the Air Force Research Laboratory already use its tools. Google highlights its success at the Air Force labs, where it claims to have created a "flexible, synergistic enterprise" by streamlining digital workflows.

However, the new discount is clearly a push to expand that footprint. The pricing, available through September 30, 2025, applies to both Google Workspace Enterprise Plus and Assured Controls Plus editions. The company estimates that a widespread switch could save federal agencies up to $2 billion over three years.

The timing is no coincidence. The discount aligns with President Trump's initiative to centralize federal IT procurement by treating the government as a single buyer. The effort is part of a broader cost-cutting campaign led by the Department of Government Efficiency (DOGE), which aims to reduce redundancy and streamline IT acquisitions.
Beyond cost savings, Google is pitching Workspace as a modern, collaborative alternative. It emphasizes features such as real-time editing, 99.9 percent uptime guarantees, and integrated AI tools like Gemini and NotebookLM.

With AI becoming a growing priority for federal agencies, Gemini's FedRAMP High certification could serve as a major selling point. Integrated directly into core Workspace apps like Docs, Gmail, and Meet, Gemini enables features such as summarizing, drafting, and information retrieval.


WWW.DIGITALTRENDS.COM
Baldur’s Gate 3’s final patch releases in less than a week

After far too long a wait, the final patch for Baldur’s Gate 3 will hit consoles and PC on April 15. Larian Studios says Patch 8 is the final major patch for the beloved title and adds a ton of new content, including multiple subclasses, an evil ending, new Cantrips, spells, and more. Patch 8 has undergone stress testing for a long time now to work out all the bugs, and it seems like it’s finally ready to be set free into the world like a (slightly) benevolent Elder Brain.

The news comes from Larian’s official YouTube channel, appropriately titled the Channel From Hell. On April 16, Larian will host a livestream that will take a “closer look at Patch 8, including the new subclasses” and touch on the future of the game now that all major updates have been released.

While we know the patch is coming on April 15, Larian hasn’t announced an exact time for the rollout. Most likely, the patch will drop in sections depending on geographic location and time zone, but we will update this story if a more specific window is announced.

While Patch 8 is supposedly the final major update for Baldur’s Gate 3, that doesn’t mean the game is going to stop being popular any time soon. Larian Studios recently released a full suite of modding tools, and the community has already started work on brand-new custom campaigns, new races and classes, and much more. Considering that Baldur’s Gate 2 is still popular 25 years after launch, fans of the sequel have nothing to worry about.

If you’ve never played Baldur’s Gate 3 before, now is a great time to dive in. The expansive RPG will keep you hooked for dozens of hours, and it also makes a great couch co-op title to play with friends or your significant other. The game is not expected to get any DLC at this point, so it is, for all intents and purposes, complete.
But with an estimated 17,000 different endings, there’s enough content to keep you exploring Faerun for years to come.


WWW.WSJ.COM
Ireland’s Privacy Watchdog Probes Musk’s Grok AI Model

Ireland’s data privacy watchdog said it is looking into whether Grok has been illegally trained on European user posts on Musk’s X social media platform.


WWW.WSJ.COM
Why Good Butter Is Worth the Splurge

The options at the supermarket have proliferated, and the quality is off the charts. Even as tariffs loom, butter remains an accessible luxury. Here, a guide to getting the most out of your investment.


ARSTECHNICA.COM
Quantum hardware may be a good match for AI

Intelligent qubits? New manuscript describes analyzing image data in a quantum processor.

John Timmer | Apr 11, 2025 10:46 am
Credit: Jason Marz/Getty Images

Concerns about AI's energy use have a lot of people looking into ways to cut down on its power requirements. Many of these focus on hardware and software approaches that are pretty straightforward extensions of existing technologies. But a few technologies are much farther out there. One that's definitely in the latter category? Quantum computing.

In some ways, quantum hardware is a better match for some of the math that underlies AI than more traditional hardware. While the current quantum hardware is a bit too error-prone for the more elaborate AI models currently in use, researchers are starting to put the pieces in place to run AI models when the hardware is ready. This week, a couple of commercial interests are releasing a draft of a paper describing how to get classical image data into a quantum processor (actually, two different processors) and perform a basic AI image classification.

All of which gives us a great opportunity to discuss why quantum AI may be more than just hype.

Machine learning goes quantum

Just as there are many machine-learning techniques that fall under the AI umbrella, there are many ways to potentially use quantum computing to perform some aspect of an AI algorithm. Some are simply matters of math; some forms of machine learning require, for example, many matrix operations, which can be performed efficiently on quantum hardware. (Here is a good review of all the ways quantum hardware might help machine learning.)

But there are also ways in which the quantum hardware can be a good match for AI.
One of the challenges of running AI on traditional computing hardware is that the processing and memory are separate. Running something like a neural network requires repeated trips to memory to look up which destination the signals from one artificial neuron need to be sent to and what weight to assign each signal. This creates a major bottleneck.

Quantum computers don't have that sort of separation. While they could include some quantum memory, the data is generally housed directly in the qubits, while computation involves performing operations, called gates, directly on the qubits themselves. In fact, there has been a demonstration that, for supervised machine learning, where a system can learn to classify items after training on pre-classified data, a quantum system can outperform classical ones, even when the data being processed is housed on classical hardware.

This form of machine learning relies on what are called variational quantum circuits. These use a two-qubit gate operation that takes an additional factor, which can be held on the classical side of the hardware and imparted to the qubits via the control signals that trigger the gate operation. You can think of this as analogous to the communications involved in a neural network, with the two-qubit gate operation equivalent to the passing of information between two artificial neurons and the factor analogous to the weight given to the signal.

That's exactly the system that a team from the Honda Research Institute worked on in collaboration with a quantum software company called Blue Qubit.

Pixels to qubits

The focus of the new work was mostly on how to get data from the classical world into the quantum system for characterization. But the researchers ended up testing the results on two different quantum processors.

The problem they were testing is one of image classification.
The raw material was from the Honda Scenes dataset, which has images taken from roughly 80 hours of driving in Northern California; the images are tagged with information about what's in the scene. And the question the researchers wanted the machine learning to handle was a simple one: Is it snowing in the scene?

All the images were sitting on classical hardware, of course. To classify an image on quantum hardware, it had to be converted to quantum information for processing. The team tried three methods of encoding the data, which differed in terms of how the pixels of the images were sliced up and how many qubits the resulting slices were sent to.

The researchers used a classical simulator of a quantum processor to do the training steps, which identified the appropriate numbers (again, think in terms of the weights of a neural network) to use during the two-qubit gate operations. They then ran the trained circuits on two different quantum processors. One, from IBM, has a lot of qubits (156) but a slightly higher error rate during gate operations. The second is from Quantinuum and is notable for having a very low error rate during operations, but it only has 56 qubits.

In general, the accuracy of the classification went up as the researchers used more qubits or as they ran more gates. Overall, though, the system worked; accuracies were well above what you'd expect from random chance. At the same time, they were generally lower than what you'd get from a standard algorithm run on normal hardware. We're still not at the point where existing hardware has both enough qubits and a low enough error rate to be competitive with classical hardware.

Still, the work was clearly able to show that real-world quantum hardware is capable of running the sorts of AI algorithms that people have been expecting it to. But like everyone else, people hoping to solve useful problems will have to wait for further improvements on the hardware side.
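To make the idea of a variational quantum circuit a little more concrete, here is a minimal sketch in plain Python that simulates two qubits classically. It is not the circuit or the encoding the Honda Research Institute team used; the angle encoding of two pixel values, the choice of a controlled-RY gate as the parameterized two-qubit operation, and every function name are assumptions made purely for illustration. The parameter `theta` plays the role of the classically held "weight" described above.

```python
import math


def ry_on_zero(angle):
    """Amplitudes (amp0, amp1) of the state RY(angle)|0>."""
    return (math.cos(angle / 2), math.sin(angle / 2))


def encode_pixels(p0, p1):
    """Angle-encode two pixel intensities in [0, 1], one per qubit.

    Illustrative encoding only. Returns real amplitudes ordered
    |00>, |01>, |10>, |11>, where the left bit is qubit 0.
    """
    a0, a1 = ry_on_zero(math.pi * p0)
    b0, b1 = ry_on_zero(math.pi * p1)
    return [a0 * b0, a0 * b1, a1 * b0, a1 * b1]


def controlled_ry(state, theta):
    """Parameterized two-qubit gate: rotate qubit 1 by RY(theta)
    only in the part of the state where qubit 0 is |1>."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    s00, s01, s10, s11 = state
    return [s00, s01, c * s10 - s * s11, s * s10 + c * s11]


def expect_z_qubit1(state):
    """Expectation of Z on qubit 1: P(qubit 1 = 0) - P(qubit 1 = 1)."""
    s00, s01, s10, s11 = state
    return s00**2 - s01**2 + s10**2 - s11**2


def circuit_output(pixels, theta):
    """Encode the pixels, apply the weighted gate, read out qubit 1."""
    state = encode_pixels(*pixels)
    state = controlled_ry(state, theta)
    return expect_z_qubit1(state)


def classify(pixels, theta):
    """Toy decision rule (assumed): a negative readout means 'positive class'."""
    return circuit_output(pixels, theta) < 0


if __name__ == "__main__":
    # With qubit 0 fully "on" (pixel 1.0), theta = pi flips qubit 1,
    # so the readout is approximately -1.
    print(circuit_output((1.0, 0.0), math.pi))
    print(classify((1.0, 0.0), math.pi))
```

In a real workflow, `theta` (and many parameters like it) would be tuned by a classical optimizer during training, which is the step the researchers ran on a classical simulator before moving to hardware.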
John Timmer, Senior Science Editor

John is Ars Technica's science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.

ARSTECHNICA.COMQuantum hardware may be a good match for AIIntelligent qubits? Quantum hardware may be a good match for AI New manuscript describes analyzing image data in a quantum processor. John Timmer – Apr 11, 2025 10:46 am | 23 Credit: Jason Marz/Getty Images Credit: Jason Marz/Getty Images Story text Size Small Standard Large Width * Standard Wide Links Standard Orange * Subscribers only Learn more Concerns about AI's energy use have a lot of people looking into ways to cut down on its power requirements. Many of these focus on hardware and software approaches that are pretty straightforward extensions of existing technologies. But a few technologies are much farther out there. One that's definitely in the latter category? Quantum computing. In some ways, quantum hardware is a better match for some of the math that underlies AI than more traditional hardware. While the current quantum hardware is a bit too error-prone for the more elaborate AI models currently in use, researchers are starting to put the pieces in place to run AI models when the hardware is ready. This week, a couple of commercial interests are releasing a draft of a paper describing how to get classical image data into a quantum processor (actually, two different processors) and perform a basic AI image classification. All of which gives us a great opportunity to discuss why quantum AI may be more than just hype. Machine learning goes quantum Just as there are many machine-learning techniques that fall under the AI umbrella, there are many ways to potentially use quantum computing to perform some aspect of an AI algorithm. Some are simply matters of math; some forms of machine learning require, for example, many matrix operations, which can be performed efficiently on quantum hardware. (Here is a good review of all the ways quantum hardware might help machine learning.) But there are also ways in which the quantum hardware can be a good match for AI. 
One of the challenges of running AI on traditional computing hardware is that the processing and memory are separate. Running something like a neural network requires repeated trips to memory to look up where the signals from one artificial neuron need to be sent and what weight to assign each signal. This creates a major bottleneck.

Quantum computers don't have that sort of separation. While they could include some quantum memory, the data is generally housed directly in the qubits, while computation involves performing operations, called gates, directly on the qubits themselves. In fact, there has been a demonstration that, for supervised machine learning, where a system can learn to classify items after training on pre-classified data, a quantum system can outperform classical ones, even when the data being processed is housed on classical hardware.

This form of machine learning relies on what are called variational quantum circuits. These rely on a two-qubit gate operation that takes an additional factor, which can be held on the classical side of the hardware and imparted to the qubits via the control signals that trigger the gate operation. You can think of this as analogous to the communications involved in a neural network, with the two-qubit gate operation equivalent to the passing of information between two artificial neurons and the factor analogous to the weight given to the signal.

That's exactly the system that a team from the Honda Research Institute worked on in collaboration with a quantum software company called Blue Qubit.

Pixels to qubits

The focus of the new work was mostly on how to get data from the classical world into the quantum system for characterization. But the researchers ended up testing the results on two different quantum processors. The problem they were testing is one of image classification.
The raw material was from the Honda Scenes dataset, which has images taken from roughly 80 hours of driving in Northern California; the images are tagged with information about what's in the scene. And the question the researchers wanted the machine learning to handle was a simple one: Is it snowing in the scene?

All the images were sitting on classical hardware, of course. To classify an image on quantum hardware, it had to be converted to quantum information for processing. The team tried three methods of encoding the data, which differed in terms of how the pixels of the images were sliced up and how many qubits the resulting slices were sent to.

The researchers used a classical simulator of a quantum processor to do the training steps, which identified the appropriate numbers—again, think in terms of the weights of a neural network—to use during the two-qubit gate operations. They then ran the trained circuits on two different quantum processors. One, from IBM, has a lot of qubits (156) but a slightly higher error rate during gate operations. The second is from Quantinuum and is notable for having a very low error rate during operations, but it only has 56 qubits.

In general, the accuracy of the classification went up as the researchers used more qubits or as they ran more gates. Overall, the system worked; accuracies were well above what you'd expect from random chance. At the same time, they were generally lower than what you'd get from a standard algorithm run on normal hardware. We're still not at the point where existing hardware has both enough qubits and a low enough error rate to be competitive with classical hardware.

Still, the work was clearly able to show that real-world quantum hardware is capable of running the sorts of AI algorithms that people have been expecting it to. But like everyone else, people hoping to solve useful problems will have to wait for further improvements on the hardware side.
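To make the idea of a variational two-qubit gate more concrete, here is a minimal sketch in Python with NumPy. It is not the circuit from the manuscript: the angle encoding of two pixel values, the choice of an RZZ gate, the Y-basis readout, and the function names are all assumptions made for illustration. It simply shows a classically stored parameter (theta, playing the role of a weight) changing what is read out of two qubits.

```python
import numpy as np

PAULI_Y = np.array([[0, -1j], [1j, 0]])
IDENTITY = np.eye(2)


def ry(angle):
    """Single-qubit rotation about Y; used here (as an assumption) to load a pixel value."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def rzz(theta):
    """Two-qubit gate exp(-i * theta/2 * Z(x)Z); theta is the classically held 'weight'."""
    zz_eigenvalues = np.array([1, -1, -1, 1])  # for |00>, |01>, |10>, |11>
    return np.diag(np.exp(-1j * theta / 2 * zz_eigenvalues))


def circuit_output(pixels, theta):
    """Encode two pixel values in [0, 1], apply the weighted gate, read out <Y> on qubit 0."""
    state = np.zeros(4, dtype=complex)
    state[0] = 1.0  # start in |00>
    # Hypothetical encoding: each pixel value becomes a rotation angle on its own qubit.
    encode = np.kron(ry(np.pi * pixels[0]), ry(np.pi * pixels[1]))
    state = rzz(theta) @ (encode @ state)
    observable = np.kron(PAULI_Y, IDENTITY)
    return float(np.real(np.vdot(state, observable @ state)))


if __name__ == "__main__":
    # Same input data, different weights: the readout changes with theta.
    for theta in (0.0, np.pi / 4, np.pi / 2):
        print(theta, circuit_output([0.5, 0.0], theta))
```

In this toy setup the readout works out to sin(a)·cos(b)·sin(theta), where a and b are the two encoding angles, so the result depends on both inputs and on the weight. Training, in this picture, would mean adjusting theta on a classical simulator until the readout separates the two image classes.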
John Timmer, Senior Science Editor. John is Ars Technica's science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.

WWW.NEWSCIENTIST.COM
Dolphins are dying from toxic chemicals banned since the 1980s

Dolphins in seas around the UK are dying from a combination of increased water temperatures and toxic chemicals that the UK banned in the 1980s. Polychlorinated biphenyls (PCBs) are a long-lasting type of persistent chemical pollutant, once widely used in industrial manufacturing. They interfere with animals’ reproduction and immune response and cause cancer in humans.

In a new study, researchers showed that higher levels of PCBs in the body and increased sea surface temperatures are linked to a greater mortality risk from infectious diseases for short-beaked common dolphins (Delphinus delphis), a first for marine mammals.

The ocean is facing “a triple planetary crisis” – climate change, pollution and biodiversity loss – but we often look at threats in isolation, says Rosie Williams at Zoological Society of London. Williams and her colleagues analysed post-mortem data from 836 common dolphins stranded in the UK between 1990 and 2020 to assess the impact of these interlinked threats.

They found a rise of 1 milligram of PCBs per kilogram of blubber was linked with a 1.6 per cent increase in the chance of infectious diseases – such as gastritis, enteritis, bacterial infection, encephalitis and pneumonia – becoming fatal. Every 1°C rise in sea surface temperature corresponded to a 14 per cent increase in mortality risk. According to the study, the threshold where PCB blubber concentrations have a significant effect on a dolphin’s risk of disease is 22 mg/kg, but the average concentration in samples was higher, at 32.15 mg/kg.

Because dolphins are long-lived, widely distributed around the UK and high in the food chain, they are a good indicator species to show how threats might also affect other animals.
“Their position at the top of the food web means that toxins from their prey accumulate in their blubber, providing a concentrated snapshot of chemical pollutants in the ocean – though unfortunately at the expense of their health,” says Thea Taylor, managing director of Sussex Dolphin Project.

Despite being banned in the UK in 1981 and internationally in 2001, PCBs are still washing into the ocean. “They are still probably entering the environment through stockpiles and are often a side product or a byproduct of other manufacturing processes,” says Williams.

Cleaning up PCBs is very difficult. “Because they’re so persistent, they’re a nightmare to get rid of,” she says. “There is definitely not an easy fix.” Some researchers are exploring dredging as a cleanup technique, while others are focused on improving water treatment plants’ effectiveness in removing persistent chemicals.

These findings indicate what might happen if action isn’t taken to ban perfluoroalkyl and polyfluoroalkyl substances (PFAS), another widespread group of so-called forever chemicals. “While we cannot reverse the contamination that has already occurred, it is critical to prevent further chemical inputs into the environment,” says Taylor.

Journal reference: Communications Biology DOI: 10.1038/s42003-025-07858-7
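For readers who want a feel for how the two reported figures combine, the following is a rough sketch only. It assumes the per-unit increases (1.6 per cent per mg/kg of PCBs, 14 per cent per 1°C) compound multiplicatively and independently, which is a common way to read such estimates but is not stated in the article; the function name and parameters are hypothetical.

```python
def relative_mortality_risk(extra_pcb_mg_per_kg, extra_sst_celsius,
                            pcb_rate=0.016, sst_rate=0.14):
    """Relative risk multiplier versus a baseline, ASSUMING multiplicative compounding.

    pcb_rate and sst_rate are the per-unit increases quoted in the article;
    how they combine is an assumption of this sketch, not a result of the study.
    """
    pcb_factor = (1 + pcb_rate) ** extra_pcb_mg_per_kg
    sst_factor = (1 + sst_rate) ** extra_sst_celsius
    return pcb_factor * sst_factor


if __name__ == "__main__":
    # One extra mg/kg of PCBs, no temperature change.
    print(relative_mortality_risk(1, 0))
    # No extra PCBs, two degrees of warming.
    print(relative_mortality_risk(0, 2))
    # Both stressors together.
    print(relative_mortality_risk(5, 1))
```

Under this assumption the stressors reinforce each other: a dolphin facing both higher PCB levels and warmer water has a larger multiplier than either factor alone would give.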


WWW.TECHNOLOGYREVIEW.COM
How AI is interacting with our creative human processes

In 2021, 20 years after the death of her older sister, Vauhini Vara was still unable to tell the story of her loss. “I wondered,” she writes in Searches, her new collection of essays on AI technology, “if Sam Altman’s machine could do it for me.” So she tried ChatGPT. But as it expanded on Vara’s prompts in sentences ranging from the stilted to the unsettling to the sublime, the thing she’d enlisted as a tool stopped seeming so mechanical.

“Once upon a time, she taught me to exist,” the AI model wrote of the young woman Vara had idolized. Vara, a journalist and novelist, called the resulting essay “Ghosts,” and in her opinion, the best lines didn’t come from her: “I found myself irresistibly attracted to GPT-3—to the way it offered, without judgment, to deliver words to a writer who has found herself at a loss for them … as I tried to write more honestly, the AI seemed to be doing the same.”

The rapid proliferation of AI in our lives introduces new challenges around authorship, authenticity, and ethics in work and art. But it also offers a particularly human problem in narrative: How can we make sense of these machines, not just use them? And how do the words we choose and stories we tell about technology affect the role we allow it to take on (or even take over) in our creative lives? Both Vara’s book and The Uncanny Muse, a collection of essays on the history of art and automation by the music critic David Hajdu, explore how humans have historically and personally wrestled with the ways in which machines relate to our own bodies, brains, and creativity. At the same time, The Mind Electric, a new book by a neurologist, Pria Anand, reminds us that our own inner workings may not be so easy to replicate.

Searches is a strange artifact.
Part memoir, part critical analysis, and part AI-assisted creative experimentation, Vara’s essays trace her time as a tech reporter and then novelist in the San Francisco Bay Area alongside the history of the industry she watched grow up. Tech was always close enough to touch: One college friend was an early Google employee, and when Vara started reporting on Facebook (now Meta), she and Mark Zuckerberg became “friends” on his platform. In 2007, she published a scoop that the company was planning to introduce ad targeting based on users’ personal information—the first shot fired in the long, gnarly data war to come. In her essay “Stealing Great Ideas,” she talks about turning down a job reporting on Apple to go to graduate school for fiction. There, she wrote a novel about a tech founder, which was later published as The Immortal King Rao. Vara points out that in some ways at the time, her art was “inextricable from the resources [she] used to create it”—products like Google Docs, a MacBook, an iPhone. But these pre-AI resources were tools, plain and simple. What came next was different. Interspersed with Vara’s essays are chapters of back-and-forths between the author and ChatGPT about the book itself, where the bot serves as editor at Vara’s prompting. ChatGPT obligingly summarizes and critiques her writing in a corporate-shaded tone that’s now familiar to any knowledge worker. “If there’s a place for disagreement,” it offers about the first few chapters on tech companies, “it might be in the balance of these narratives. 
Some might argue that the benefits—such as job creation, innovation in various sectors like AI and logistics, and contributions to the global economy—can outweigh the negatives.”

Searches: Selfhood in the Digital Age, by Vauhini Vara (Pantheon, 2025)

Vara notices that ChatGPT writes “we” and “our” in these responses, pulling it into the human story, not the tech one: “Earlier you mentioned ‘our access to information’ and ‘our collective experiences and understandings.’” When she asks what the rhetorical purpose of that choice is, ChatGPT responds with a numbered list of benefits including “inclusivity and solidarity” and “neutrality and objectivity.” It adds that “using the first-person plural helps to frame the discussion in terms of shared human experiences and collective challenges.” Does the bot believe it’s human? Or at least, do the humans who made it want other humans to believe it does? “Can corporations use these [rhetorical] tools in their products too, to subtly make people identify with, and not in opposition to, them?” Vara asks. ChatGPT replies, “Absolutely.”

Vara has concerns about the words she’s used as well. In “Thank You for Your Important Work,” she worries about the impact of “Ghosts,” which went viral after it was first published. Had her writing helped corporations hide the reality of AI behind a velvet curtain? She’d meant to offer a nuanced “provocation,” exploring how uncanny generative AI can be. But instead, she’d produced something beautiful enough to resonate as an ad for its creative potential. Even Vara herself felt fooled. She particularly loved one passage the bot wrote, about Vara and her sister as kids holding hands on a long drive. But she couldn’t imagine either of them being so sentimental. What Vara had elicited from the machine, she realized, was “wish fulfillment,” not a haunting.

The machine wasn’t the only thing crouching behind that too-good-to-be-true curtain. The GPT models and others are trained through human labor, in sometimes exploitative conditions. And much of the training data was the creative work of human writers before her. “I’d conjured artificial language about grief through the extraction of real human beings’ language about grief,” she writes. The creative ghosts in the model were made of code, yes, but also, ultimately, made of people. Maybe Vara’s essay helped cover up that truth too.

In the book’s final essay, Vara offers a mirror image of those AI call-and-response exchanges as an antidote. After sending out an anonymous survey to women of various ages, she presents the replies to each question, one after the other. “Describe something that doesn’t exist,” she prompts, and the women respond: “God.” “God.” “God.” “Perfection.” “My job. (Lost it.)” Real people contradict each other, joke, yell, mourn, and reminisce. Instead of a single authoritative voice—an editor, or a company’s limited style guide—Vara gives us the full gasping crowd of human creativity. “What’s it like to be alive?” Vara asks the group. “It depends,” one woman answers.

David Hajdu, now music editor at The Nation and previously a music critic for The New Republic, goes back much further than the early years of Facebook to tell the history of how humans have made and used machines to express ourselves. Player pianos, microphones, synthesizers, and electrical instruments were all assistive technologies that faced skepticism before acceptance and, sometimes, elevation in music and popular culture. They even influenced the kind of art people were able to and wanted to make. Electrical amplification, for instance, allowed singers to use a wider vocal range and still reach an audience. The synthesizer introduced a new lexicon of sound to rock music.
“What’s so bad about being mechanical, anyway?” Hajdu asks in The Uncanny Muse. And “what’s so great about being human?”

The Uncanny Muse: Music, Art, and Machines from Automata to AI, by David Hajdu (W.W. Norton & Company, 2025)

But Hajdu is also interested in how intertwined the history of man and machine can be, and how often we’ve used one as a metaphor for the other. Descartes saw the body as empty machinery for consciousness, he reminds us. Hobbes wrote that “life is but a motion of limbs.” Freud described the mind as a steam engine. Andy Warhol told an interviewer that “everybody should be a machine.” And when computers entered the scene, humans used them as metaphors for themselves too. “Where the machine model had once helped us understand the human body … a new category of machines led us to imagine the brain (how we think, what we know, even how we feel or how we think about what we feel) in terms of the computer,” Hajdu writes.

But what is lost with these one-to-one mappings? What happens when we imagine that the complexity of the brain—an organ we do not even come close to fully understanding—can be replicated in 1s and 0s? Maybe what happens is we get a world full of chatbots and agents, computer-generated artworks and AI DJs, that companies claim are singular creative voices rather than remixes of a million human inputs. And perhaps we also get projects like the painfully named Painting Fool—an AI that paints, developed by Simon Colton, a scholar at Queen Mary University of London. He told Hajdu that he wanted to “demonstrate the potential of a computer program to be taken seriously as a creative artist in its own right.” What Colton means is not just a machine that makes art but one that expresses its own worldview: “Art that communicates what it’s like to be a machine.”
Hajdu seems to be curious and optimistic about this line of inquiry. “Machines of many kinds have been communicating things for ages, playing invaluable roles in our communication through art,” he says. “Growing in intelligence, machines may still have more to communicate, if we let them.” But the question that The Uncanny Muse raises at the end is: Why should we art-making humans be so quick to hand over the paint to the paintbrush? Why do we care how the paintbrush sees the world? Are we truly finished telling our own stories ourselves?

Pria Anand might say no. In The Mind Electric, she writes: “Narrative is universally, spectacularly human; it is as unconscious as breathing, as essential as sleep, as comforting as familiarity. It has the capacity to bind us, but also to other, to lay bare, but also obscure.” The electricity in The Mind Electric belongs entirely to the human brain—no metaphor necessary. Instead, the book explores a number of neurological afflictions and the stories patients and doctors tell to better understand them. “The truth of our bodies and minds is as strange as fiction,” Anand writes—and the language she uses throughout the book is as evocative as that in any novel.

The Mind Electric: A Neurologist on the Strangeness and Wonder of Our Brains, by Pria Anand (Washington Square Press, 2025)

In personal and deeply researched vignettes in the tradition of Oliver Sacks, Anand shows that any comparison between brains and machines will inevitably fall flat. She tells of patients who see clear images when they’re functionally blind, invent entire backstories when they’ve lost a memory, break along seams that few can find, and—yes—see and hear ghosts. In fact, Anand cites one study of 375 college students in which researchers found that nearly three-quarters “had heard a voice that no one else could hear.” These were not diagnosed schizophrenics or sufferers of brain tumors—just people listening to their own uncanny muses.
Many heard their name, others heard God, and some could make out the voice of a loved one who’d passed on. Anand suggests that writers throughout history have harnessed organic exchanges with these internal apparitions to make art. “I see myself taking the breath of these voices in my sails,” Virginia Woolf wrote of her own experiences with ghostly sounds. “I am a porous vessel afloat on sensation.” The mind in The Mind Electric is vast, mysterious, and populated. The narratives people construct to traverse it are just as full of wonder.

Humans are not going to stop using technology to help us create anytime soon—and there’s no reason we should. Machines make for wonderful tools, as they always have. But when we turn the tools themselves into artists and storytellers, brains and bodies, magicians and ghosts, we bypass truth for wish fulfillment. Maybe what’s worse, we rob ourselves of the opportunity to contribute our own voices to the lively and loud chorus of human experience. And we keep others from the human pleasure of hearing them too.

Rebecca Ackermann is a writer, designer, and artist based in San Francisco.

WWW.TECHNOLOGYREVIEW.COMHow AI is interacting with our creative human processesIn 2021, 20 years after the death of her older sister, Vauhini Vara was still unable to tell the story of her loss. “I wondered,” she writes in Searches, her new collection of essays on AI technology, “if Sam Altman’s machine could do it for me.” So she tried ChatGPT. But as it expanded on Vara’s prompts in sentences ranging from the stilted to the unsettling to the sublime, the thing she’d enlisted as a tool stopped seeming so mechanical. “Once upon a time, she taught me to exist,” the AI model wrote of the young woman Vara had idolized. Vara, a journalist and novelist, called the resulting essay “Ghosts,” and in her opinion, the best lines didn’t come from her: “I found myself irresistibly attracted to GPT-3—to the way it offered, without judgment, to deliver words to a writer who has found herself at a loss for them … as I tried to write more honestly, the AI seemed to be doing the same.” The rapid proliferation of AI in our lives introduces new challenges around authorship, authenticity, and ethics in work and art. But it also offers a particularly human problem in narrative: How can we make sense of these machines, not just use them? And how do the words we choose and stories we tell about technology affect the role we allow it to take on (or even take over) in our creative lives? Both Vara’s book and The Uncanny Muse, a collection of essays on the history of art and automation by the music critic David Hajdu, explore how humans have historically and personally wrestled with the ways in which machines relate to our own bodies, brains, and creativity. At the same time, The Mind Electric, a new book by a neurologist, Pria Anand, reminds us that our own inner workings may not be so easy to replicate. Searches is a strange artifact. 
Part memoir, part critical analysis, and part AI-assisted creative experimentation, Vara’s essays trace her time as a tech reporter and then novelist in the San Francisco Bay Area alongside the history of the industry she watched grow up. Tech was always close enough to touch: One college friend was an early Google employee, and when Vara started reporting on Facebook (now Meta), she and Mark Zuckerberg became “friends” on his platform. In 2007, she published a scoop that the company was planning to introduce ad targeting based on users’ personal information—the first shot fired in the long, gnarly data war to come. In her essay “Stealing Great Ideas,” she talks about turning down a job reporting on Apple to go to graduate school for fiction. There, she wrote a novel about a tech founder, which was later published as The Immortal King Rao. Vara points out that in some ways at the time, her art was “inextricable from the resources [she] used to create it”—products like Google Docs, a MacBook, an iPhone. But these pre-AI resources were tools, plain and simple. What came next was different. Interspersed with Vara’s essays are chapters of back-and-forths between the author and ChatGPT about the book itself, where the bot serves as editor at Vara’s prompting. ChatGPT obligingly summarizes and critiques her writing in a corporate-shaded tone that’s now familiar to any knowledge worker. “If there’s a place for disagreement,” it offers about the first few chapters on tech companies, “it might be in the balance of these narratives. 
Some might argue that the benefits—such as job creation, innovation in various sectors like AI and logistics, and contributions to the global economy—can outweigh the negatives.” Searches: Selfhood in the Digital AgeVauhini VaraPANTHEON, 2025 Vara notices that ChatGPT writes “we” and “our” in these responses, pulling it into the human story, not the tech one: “Earlier you mentioned ‘our access to information’ and ‘our collective experiences and understandings.’” When she asks what the rhetorical purpose of that choice is, ChatGPT responds with a numbered list of benefits including “inclusivity and solidarity” and “neutrality and objectivity.” It adds that “using the first-person plural helps to frame the discussion in terms of shared human experiences and collective challenges.” Does the bot believe it’s human? Or at least, do the humans who made it want other humans to believe it does? “Can corporations use these [rhetorical] tools in their products too, to subtly make people identify with, and not in opposition to, them?” Vara asks. ChatGPT replies, “Absolutely.” Vara has concerns about the words she’s used as well. In “Thank You for Your Important Work,” she worries about the impact of “Ghosts,” which went viral after it was first published. Had her writing helped corporations hide the reality of AI behind a velvet curtain? She’d meant to offer a nuanced “provocation,” exploring how uncanny generative AI can be. But instead, she’d produced something beautiful enough to resonate as an ad for its creative potential. Even Vara herself felt fooled. She particularly loved one passage the bot wrote, about Vara and her sister as kids holding hands on a long drive. But she couldn’t imagine either of them being so sentimental. What Vara had elicited from the machine, she realized, was “wish fulfillment,” not a haunting. The rapid proliferation of AI in our lives introduces new challenges around authorship, authenticity, and ethics in work and art. 
How can we make sense of these machines, not just use them? The machine wasn’t the only thing crouching behind that too-good-to-be-true curtain. The GPT models and others are trained through human labor, in sometimes exploitative conditions. And much of the training data was the creative work of human writers before her. “I’d conjured artificial language about grief through the extraction of real human beings’ language about grief,” she writes. The creative ghosts in the model were made of code, yes, but also, ultimately, made of people. Maybe Vara’s essay helped cover up that truth too. In the book’s final essay, Vara offers a mirror image of those AI call-and-response exchanges as an antidote. After sending out an anonymous survey to women of various ages, she presents the replies to each question, one after the other. “Describe something that doesn’t exist,” she prompts, and the women respond: “God.” “God.” “God.” “Perfection.” “My job. (Lost it.)” Real people contradict each other, joke, yell, mourn, and reminisce. Instead of a single authoritative voice—an editor, or a company’s limited style guide—Vara gives us the full gasping crowd of human creativity. “What’s it like to be alive?” Vara asks the group. “It depends,” one woman answers. David Hajdu, now music editor at The Nation and previously a music critic for The New Republic, goes back much further than the early years of Facebook to tell the history of how humans have made and used machines to express ourselves. Player pianos, microphones, synthesizers, and electrical instruments were all assistive technologies that faced skepticism before acceptance and, sometimes, elevation in music and popular culture. They even influenced the kind of art people were able to and wanted to make. Electrical amplification, for instance, allowed singers to use a wider vocal range and still reach an audience. The synthesizer introduced a new lexicon of sound to rock music. 
“What’s so bad about being mechanical, anyway?” Hajdu asks in The Uncanny Muse. And “what’s so great about being human?” The Uncanny Muse: Music, Art, and Machines from Automata to AIDavid HajduW.W. NORTON & COMPANY, 2025 But Hajdu is also interested in how intertwined the history of man and machine can be, and how often we’ve used one as a metaphor for the other. Descartes saw the body as empty machinery for consciousness, he reminds us. Hobbes wrote that “life is but a motion of limbs.” Freud described the mind as a steam engine. Andy Warhol told an interviewer that “everybody should be a machine.” And when computers entered the scene, humans used them as metaphors for themselves too. “Where the machine model had once helped us understand the human body … a new category of machines led us to imagine the brain (how we think, what we know, even how we feel or how we think about what we feel) in terms of the computer,” Hajdu writes. But what is lost with these one-to-one mappings? What happens when we imagine that the complexity of the brain—an organ we do not even come close to fully understanding—can be replicated in 1s and 0s? Maybe what happens is we get a world full of chatbots and agents, computer-generated artworks and AI DJs, that companies claim are singular creative voices rather than remixes of a million human inputs. And perhaps we also get projects like the painfully named Painting Fool—an AI that paints, developed by Simon Colton, a scholar at Queen Mary University of London. He told Hajdu that he wanted to “demonstrate the potential of a computer program to be taken seriously as a creative artist in its own right.” What Colton means is not just a machine that makes art but one that expresses its own worldview: “Art that communicates what it’s like to be a machine.” What happens when we imagine that the complexity of the brain—an organ we do not even come close to fully understanding—can be replicated in 1s and 0s? 
Hajdu seems to be curious and optimistic about this line of inquiry. “Machines of many kinds have been communicating things for ages, playing invaluable roles in our communication through art,” he says. “Growing in intelligence, machines may still have more to communicate, if we let them.” But the question that The Uncanny Muse raises at the end is: Why should we art-making humans be so quick to hand over the paint to the paintbrush? Why do we care how the paintbrush sees the world? Are we truly finished telling our own stories ourselves?

Pria Anand might say no. In The Mind Electric, she writes: “Narrative is universally, spectacularly human; it is as unconscious as breathing, as essential as sleep, as comforting as familiarity. It has the capacity to bind us, but also to other, to lay bare, but also obscure.” The electricity in The Mind Electric belongs entirely to the human brain—no metaphor necessary. Instead, the book explores a number of neurological afflictions and the stories patients and doctors tell to better understand them. “The truth of our bodies and minds is as strange as fiction,” Anand writes—and the language she uses throughout the book is as evocative as that in any novel.

The Mind Electric: A Neurologist on the Strangeness and Wonder of Our Brains, by Pria Anand (Washington Square Press, 2025)

In personal and deeply researched vignettes in the tradition of Oliver Sacks, Anand shows that any comparison between brains and machines will inevitably fall flat. She tells of patients who see clear images when they’re functionally blind, invent entire backstories when they’ve lost a memory, break along seams that few can find, and—yes—see and hear ghosts. In fact, Anand cites one study of 375 college students in which researchers found that nearly three-quarters “had heard a voice that no one else could hear.” These were not diagnosed schizophrenics or sufferers of brain tumors—just people listening to their own uncanny muses.
Many heard their name, others heard God, and some could make out the voice of a loved one who’d passed on. Anand suggests that writers throughout history have harnessed organic exchanges with these internal apparitions to make art. “I see myself taking the breath of these voices in my sails,” Virginia Woolf wrote of her own experiences with ghostly sounds. “I am a porous vessel afloat on sensation.” The mind in The Mind Electric is vast, mysterious, and populated. The narratives people construct to traverse it are just as full of wonder.

Humans are not going to stop using technology to help us create anytime soon—and there’s no reason we should. Machines make for wonderful tools, as they always have. But when we turn the tools themselves into artists and storytellers, brains and bodies, magicians and ghosts, we bypass truth for wish fulfillment. Maybe what’s worse, we rob ourselves of the opportunity to contribute our own voices to the lively and loud chorus of human experience. And we keep others from the human pleasure of hearing them too.

Rebecca Ackermann is a writer, designer, and artist based in San Francisco.

WWW.BUSINESSINSIDER.COM
Meta earnings: See the social media giant's financial history, dividends, and growth expected from projections

Photo credit: Manuel Orbegozo/REUTERS
Updated 2025-04-11T16:31:15Z

Meta Platforms is a closely watched company, with its quarterly earnings carefully scrutinized.
Meta Platforms' Q4 2024 earnings report was released on January 29.
The company is fighting an anti-trust lawsuit brought by the government.

Meta Platforms, the Silicon Valley parent company of social media sites Facebook, Instagram, and Threads, the messaging app WhatsApp, and more, releases its earnings quarterly.

CEO and chairman Mark Zuckerberg plays a leading role on these calls to report Meta's status to its shareholders. Here's a breakdown of Meta's recent earnings.

Meta Q4 earnings 2024

Meta reported its fourth-quarter earnings on January 29 after the closing bell. The social media company crushed Wall Street's expectations.

Meta tried to reassure investors about how much it's spending on artificial intelligence and about possible competition from Chinese AI company DeepSeek.

The Facebook parent reported revenue for the period of $48.39 billion, beating the consensus analyst estimate of $46.98 billion.

While its first-quarter sales forecast came in below estimates, investors seemed more concerned about other matters.

During Meta's earnings conference call, CEO Mark Zuckerberg fielded questions from analysts on the company's recent content moderation changes, its big spending plans for 2025, TikTok, and more.

He teased Llama 4 news and said he was "optimistic" about "progress and innovation" under Donald Trump's government. Zuckerberg also responded to a question about DeepSeek, saying it was important to have a domestic firm set the standard on open-source AI "for our own national advantage."

4th Quarter results
Earnings per share: $8.02 vs. estimate of $6.78
Revenue: $48.39 billion vs. estimate of $46.98 billion
Operating margin: 48% vs. estimate of 42.6%

Meta Q3 earnings 2024

Meta reported its third-quarter earnings on October 30 after the market close. The company made it clear it would not be slowing down on its spending while building out its AI infrastructure this year — and expects those costs to increase in 2025.

"We had a good quarter driven by AI progress across our apps and business," Meta CEO Mark Zuckerberg said. "We also have strong momentum with Meta AI, Llama adoption, and AI-powered glasses."

The company's revenue for the quarter was $40.59 billion, ahead of the expected $40.25 billion. Earnings per share came in at $6.03, above the expected $5.25.

In its core business of advertising, Meta said its average price per ad had increased 11% year over year.

However, the company missed expectations for user growth. It said daily active users grew 5% year over year to 3.29 billion. That was lower than expectations of 3.31 billion daily users.

Shares dipped more than 3% following Meta's earnings call with analysts, during which Zuckerberg talked through the company's AI investment strategy and said that "this might be the most dynamic moment I've seen in our industry."

The company's big bet on AI, which includes both training its own AI models and launching consumer products across its platforms powered by them, continued to drive up its costs.

3rd Quarter results
Earnings per share: $6.03 vs. estimate of $5.25
Revenue: $40.59 billion vs. estimate of $40.25 billion
Operating margin: 43% vs. estimate of 39.6%

Meta Q2 earnings 2024

Meta reported second-quarter earnings on July 31 after the market close, and it was another win for Mark Zuckerberg.

The Facebook parent's revenue and earnings-per-share beat consensus analyst estimates, driven by better-than-expected advertising sales.

Like other tech giants, Meta has been heavily investing in generative AI with little to show for it so far, but CEO Zuckerberg defended its spending plans in the earnings call.

"Before we're really talking about monetization of any of those things by themselves, I don't think anyone should be surprised I would say that would be years," he said, noting that "the early signals on this are good."

Zuckerberg also said in the earnings release that the company's chatbot, Meta AI, is on pace to become the most widely used in the world by the end of 2024.

Meta's stock rose more than 6% in after-hours trading shortly after the results.

2nd Quarter results
Earnings per share: $5.16 vs. estimate of $4.72
Revenue: $39.07 billion vs. estimate of $38.34 billion
Operating margin: 38% vs. estimate of 37.7%

Meta Q1 earnings 2024

Meta reported first-quarter earnings on April 24 after the closing bell. The company reported revenue and earnings-per-share that beat consensus analyst estimates. But shares slid after Meta gave a range for second-quarter sales that was on the light side of forecasts and said it would spend more than it expected this year.

The report is Meta's first without monthly- and daily-average-user numbers specifically broken out for Facebook. The company instead reported overall "Family of Apps" results that also included Instagram and WhatsApp. The combined group saw $36 billion of revenue, beating the consensus estimate of $35.5 billion.

Meta's stock fell as much as 17% in after-hours trading as investors assessed the results.

CEO Mark Zuckerberg's main focus on the investor call was Meta's plans to invest more significantly in AI. He also hyped up the company's recent partnership with Ray-Ban.

1st Quarter results
Earnings per share: $4.71 vs. estimate of $4.30
Revenue: $36.46 billion (+27% y/y) vs. estimate of $36.12 billion
Operating margin: 38% (25% a year earlier) vs. estimate of 37.2%

Meta earnings history

Meta's earnings are a chance for investors to hear from Mark Zuckerberg himself. The founder and CEO tends to sprinkle in interesting snippets during earnings calls and has a front-row seat to the growing AI boom.

Meta has shifted its focus recently from the Metaverse to AI-based large language models. Meta's AI offering, Llama, is unique in that it is open-sourced, similar to China's DeepSeek. The company has also talked up the adoption of AI technologies into its ad network, which has shown solid results so far.

An ongoing anti-trust lawsuit from the government has recently weighed on Meta. The lawsuit alleges that Meta illegally purchased Instagram and WhatsApp to crush the competition and maintain a monopoly in the social networking industry.

Zuckerberg himself has reportedly lobbied the Trump administration to ditch the lawsuit.

If Meta proves unsuccessful in fighting the anti-trust lawsuit, it could lead to a breakup of some aspects of its business.

Meta's next earnings report is scheduled for April 30 after the market close.