
WWW.FORBES.COM

From Overworked To Optimized: Technology And The Future Of Primary Care

Rather than replacing physicians, technology can help redefine their roles, enabling a more patient-centered, transdisciplinary approach.

TIME.COM

Why Tax Breaks for Data Centers Could Backfire on States

As the AI boom continues to escalate, states have rushed to entice data centers with juicy tax breaks. Data centers not only provide cloud-based storage, but also power the training of increasingly powerful AI models. Lawmakers in more than 30 states have carved out tax incentives for data center companies, arguing that without them, the data centers wouldn’t come—and that their presence is essential to growing property and income tax revenue and driving economic development.

But some economists and policy experts have started to question this logic. In a new study from Good Jobs First, a nonprofit research group, authors Greg LeRoy and Kasia Tarczynska find that data center tax breaks have swelled to billions of dollars a year in lost state revenue, and that for some states, those losses actually outweigh the tax revenue the data centers bring in. “It’s a billion-dollar subsidy to the industry that is dominated by a few big tech companies,” says Tarczynska.

Conversely, other economists and data center lobbyists argue that the industry’s overall impact vastly outweighs those potential losses, including by supporting local infrastructure and catalyzing further investment. The paper’s authors contend that even if there are positives, the public doesn’t yet know the extent of the costs, which are often obscured by tax privacy laws, confidentiality agreements, or a sheer lack of research. “All politicians and corporations want to talk about are the benefits, while ignoring or downplaying the costs of development subsidies,” LeRoy writes in an email to TIME. “Let the companies compete on quality and price, not on how much they can extract from taxpayers and distort economic development and land use.”

‘Up and up and up’

Many data center agreements are shrouded in secrecy, which means that the paper’s authors had limited data to draw from. At least 12 states, LeRoy says, share no data on tax expenditures for data centers at all. But by looking through state budgets and other fiscal reports, the authors found that at least 10 states are missing out on $100 million per year in tax revenue. Much of this comes from subsidies for equipment like expensive servers, which need to be swapped out every few years. Many of these exemptions have no cap on their dollar amount or duration. This means that as data centers grow in size and power, so do the subsidies, forcing states to drastically revise their budget projections. In Texas, the cost projection for the state's data center sales tax exemption program increased from $157 million in 2023 to $1 billion this year. “These hyperscalers come and spend a lot of money, and the tax breaks are going up and up and up,” says Tarczynska.

Data center proponents offer several rebuttals. First, they argue that most states already offer tax exemptions for manufacturing equipment, and that these new carveouts simply bring the data center industry in line with those rules. They also argue that without subsidies, data center companies would set up shop elsewhere. In 2022, a tax incentive evaluation study from the Carl Vinson Institute of Government at the University of Georgia found that 90% of data center activity in Georgia was due to the incentive, implying that without those tax breaks, all of that business would essentially vanish.

However, economists disagree about how much economic activity incentives actually cause. A 2018 paper from the economist Timothy Bartik found that the share of firms whose location decision was driven by an incentive was much lower than the Vinson figure: between 2 and 25 percent. “You need to allow for the fact that for some incentives, you would have gotten some data center activity anyway,” Bartik tells TIME. “My view is that there are potentially some fiscal benefits of data centers, but the industry is vastly overstating them.”

A debate in Georgia

Data centers are a particular point of contention in Georgia, where they are growing at an unprecedented rate, particularly in the Atlanta metro area. State reports show that data center incentives have waived at least $163 million in local and state sales tax collections each year since 2022. The Governor’s Office of Planning and Budget estimates those incentives will surpass $327 million next year.

Last year, Georgia state senate finance committee chairman Chuck Hufstetler, a Republican, spearheaded efforts to scrutinize tax breaks, resulting in the state legislature passing a bill halting tax breaks to data centers for two years. But Governor Brian Kemp vetoed it, saying that it would harm investment plans. Hufstetler tells TIME that the incentives are unnecessary. “The thing I’ve seen is that it's a competition of who can build it the quickest. It’s more important to them that they’re built first versus the cost,” he says. “I’m not opposed to data centers. But if they’re going to come, it shouldn’t be on the backs of local people, and they should be paying all their costs.”

Georgia state senator Sally Harrell, a Democrat, agrees, and contends that data centers do not provide long-term jobs or serve local businesses. Data centers typically employ just a few dozen workers, far fewer than factories of similar size. “The local community is left footing the bill for big, national companies that generate economic activity elsewhere,” she writes in an email to TIME.

The aforementioned 2022 Vinson study found that the revenue forgone due to exemptions far exceeded the tax revenue generated by data centers, resulting in a negative state fiscal impact of $18 million in 2021. The study argued, however, that because the data centers would mostly not have been established without the incentive, their overall economic impact was significantly positive.

Nathan Jensen, a professor at the University of Texas at Austin, argues that this line of reasoning is flawed. “It’s always a bit of a trick where they cite the economic impact: You could go to a Walmart parking lot and throw $100 in the air, and that would have a net positive economic impact,” he says. “The key is the fiscal impact: does the state make its money back?”

A defense of data centers

Stephen Hartka, vice president of research at the Virginia Economic Development Partnership, has a different perspective. He says that the “overwhelming majority” of data centers in Virginia were brought to the state by incentives. He argues that economists who look only at state tax revenues miss the fact that Virginia’s tax structure sends more benefits to smaller localities, which especially benefit from the property taxes that data centers pay. He also says that data centers can be particularly valuable in rural areas that have struggled to attract businesses. “Data centers are a very good fit for these rural areas, because they don't require a lot of people and they generate a ton of revenue for local governments,” he says. “They are financial boons to these communities that might not have many other options.”

However, there has been a groundswell of activism against data centers in many Virginia communities. Last year, Ian Lovejoy, a Republican state delegate in Virginia, told TIME that data centers were the “number one issue we hear about from our constituents.” Residents expressed concerns about data centers threatening electricity and water access, and worried that taxpayers may have to foot the bill for future power lines. Across the country, communities have complained about data centers emitting pollutants and harming quality of life.

LeRoy, a co-author of the Good Jobs First study, cautions localities against making “land use decisions based not on jobs or quality of life, but on how much they can get in tax revenue from a particular use.” He points to a precedent: localities rushed to build malls and big-box retail centers for the sales and property taxes they brought in, only for them to be deserted when e-commerce took their place.

The direction of data centers and their national expansion is still hazy. But watchdogs like LeRoy and policy experts like Jensen wish that more data were available about the massive tradeoffs states are making, and whether they are worth it. “I think the simple story is these are heavily subsidized capital intensive investments, which makes a pretty tough value proposition for communities,” Jensen says. “Because it's the tax revenues that are the biggest benefit, and you're giving a ton of that back.”

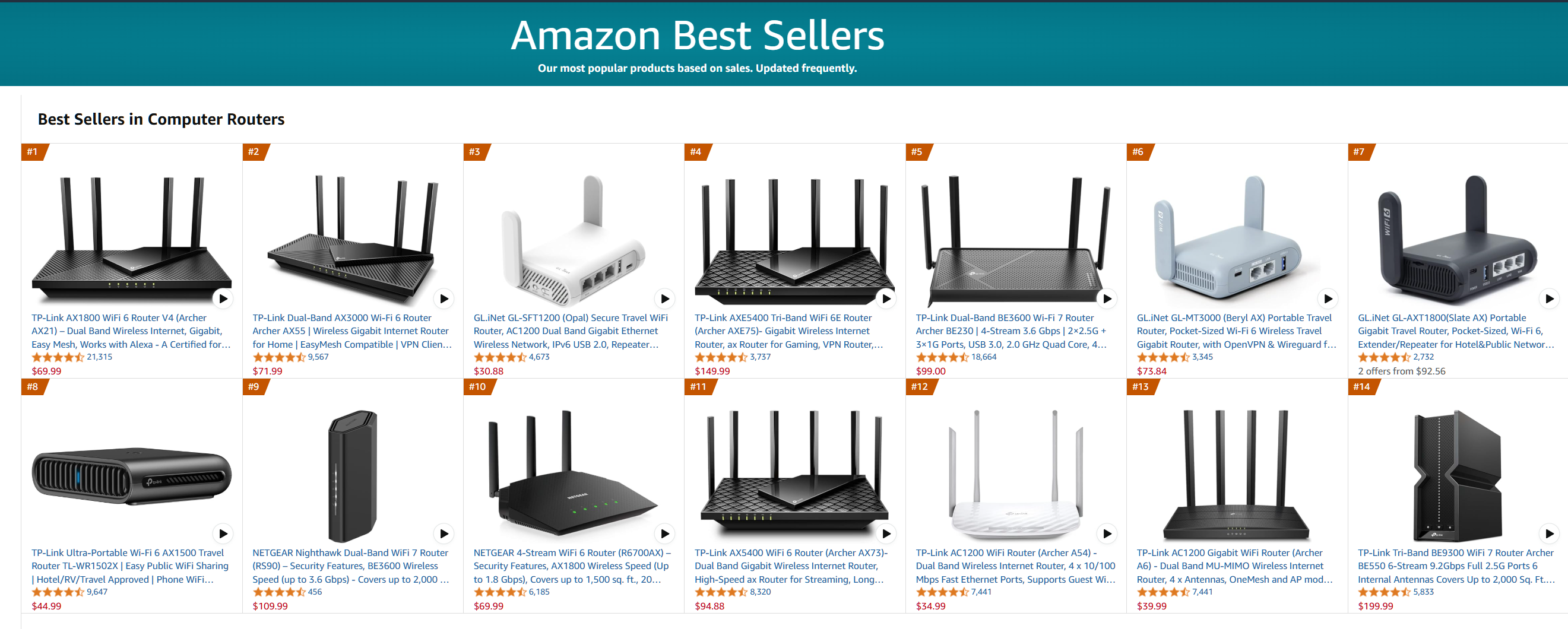

WWW.TECHSPOT.COM

TP-Link's router pricing and China ties under US government investigation

In a nutshell: TP-Link Systems, one of the most popular router brands in the United States, has become the subject of a criminal antitrust investigation by the Department of Justice. According to reports, the DoJ and the Commerce Department will examine the China-linked company's pricing strategies and potential national security risks.

The affordability of TP-Link's routers is part of what makes them so popular. Prosecutors at the DoJ are examining whether the company engaged in predatory pricing to undercut competitors and dominate the US market, Bloomberg reports. The probe began in 2024 under President Biden and continues under the Trump administration.

TP-Link is also being investigated by the Commerce Department over whether its ties to China pose a security threat. In December, it was reported that an office of the Commerce Department had subpoenaed TP-Link and that its routers could be banned in the US over national security concerns.

TP-Link was founded by brothers Zhao Jianjun and Zhao Jiaxing in 1996. In 2008, TP-Link USA was set up to market and service products in North America, but its ownership, management, and supply chain still reported to the Shenzhen-based TP-Link parent. In 2024, TP-Link USA completed a merger with TP-Link's non-Chinese operations to form TP-Link Systems Inc., headquartered in Irvine, California. This "organisational separation" from the Chinese company is meant to give each side its own shareholding structure, board, R&D, production, marketing, and support teams. Regulators and lawmakers are still reviewing whether the structural split truly insulates TP-Link's US arm from Chinese legal jurisdiction, hence the current antitrust and national security probes.

TP-Link has around 65% of the US market for routers used in homes and small businesses. Twelve of the top twenty best-selling routers on Amazon are TP-Link models, including the number one (TP-Link AX1800 WiFi 6 Router V4) and number two (TP-Link Dual-Band AX3000 Wi-Fi 6 Router Archer AX55) top sellers.

In October 2024, Microsoft exposed a complex network of compromised devices that Chinese hackers used to launch highly evasive password spray attacks against Microsoft Azure customers. The network, dubbed CovertNetwork-1658, had been actively stealing credentials since August 2023. The attacks used a botnet of thousands of small office and home office (SOHO) routers, cameras, and other Internet-connected devices. At its peak, the botnet comprised more than 16,000 devices, most of them TP-Link routers.

There's a history of security flaws being discovered in TP-Link routers. A critical vulnerability with a CVSS score of 10.0 was found in the Archer C5400X tri-band gaming router in May 2024, and in 2023, it was reported that Chinese state hackers were infecting TP-Link routers with custom, malicious firmware. The latter report came soon after the US government said Mirai botnet operators were using TP-Link routers for DDoS attacks.

WWW.DIGITALTRENDS.COM

Microsoft has revealed one of its recent ads uses gen AI — can you tell?

In January, Microsoft released a minute-long advert for its Surface Pro and Surface Laptop. It currently has 42,000 views on YouTube, with 302 comments discussing the hardware. What the comments don’t mention, however, are the AI-generated shots used in the ad. Why? Because no one even realized AI was involved until Microsoft smugly revealed it this week.

You can tell the company is proud of this little stunt because its blog post about it begins with a dramatic summary of the history of film and how it has evolved, implying generative AI tools are the next step in this grand evolution.

The blog post, written by Jay Tan, says the Visual Design team used generative AI for this advert because they had only one month to plan, film, and edit the entire thing. They used AI tools to draft the script, storyboards, and pitch deck within a couple of days, a process Tan claims would usually take weeks.

The next part of the process sounds a little like torture, however: refining prompts to get the right output. Designers gave natural-language descriptions to one AI tool, which then transformed them into an effective “prompt format” to use with other AI tools. They went through thousands of different prompts to finally achieve what they wanted, and yet this was apparently quicker in the long run than traditional methods. In the end, the team thinks it saved “90% of the time and cost it would typically take.”

When the deadline is the most important aspect of your project, I suppose you have to do whatever it takes to save time. However, having tried and failed to use generative AI for work myself, I feel acutely sorry for whoever got stuck with the task of refining prompts thousands of times over.

I can’t deny, however, that a Microsoft advert is the perfect place to hide AI-generated images. The overly clean, minimal, unlived-in look of Microsoft's sets and backgrounds is basically a real-life version of AI-generated imagery. Everything looks a little too perfect, a little too digitally adjusted, and overall just a little bit “off.” So when I watch the ad now, it’s no surprise to me that viewers took the AI content for more of Microsoft’s usual airbrushed imagery.

When you look closely, however, you can spot things that look a little odd: a pair of very flat glasses, a suspicious-looking teddy bear, and a few innocuous objects that look okay at a distance but that, when you zoom in, you can’t really identify. For the most part, though, it’s not very obvious at all. Naturally, the Design team still used real people for all of the close-up hand shots and used editing software to remove every weird AI artifact and hallucination-produced anomaly, so no striking “uncanny valley” moments were left for viewers to notice. Well done, Microsoft, you tricked us all!

WWW.WSJ.COM

The Hottest AI Job of 2023 Is Already Obsolete

Prompt engineering, a role aimed at crafting the perfect input to send to a large language model, was poised to become one of the hottest jobs in artificial intelligence. What happened?

ARSTECHNICA.COM

In the age of AI, we must protect human creativity as a natural resource

Op-ed: As AI outputs flood the Internet, diverse human perspectives are our most valuable resource.

Benj Edwards – Apr 25, 2025 7:00 am

Credit: Kenny McCartney via Getty Images

Ironically, our present AI age has shone a bright spotlight on the immense value of human creativity just as breakthroughs in technology threaten to undermine it. As tech giants rush to build newer AI models, their web crawlers vacuum up creative content, and those same models spew floods of synthetic media, risking drowning out the human creative spark in an ocean of pablum. Given this trajectory, AI-generated content may soon exceed the entire corpus of historical human creative works, making the preservation of the human creative ecosystem not just an ethical concern but an urgent imperative. The alternative is nothing less than a gradual homogenization of our cultural landscape, where machine learning flattens the richness of human expression into a mediocre statistical average.

A limited resource

By ingesting billions of creations, chatbots learn to talk, and image synthesizers learn to draw. Along the way, the AI companies behind them treat our shared culture like an inexhaustible resource to be strip-mined, with little thought for the consequences. But human creativity isn't the product of an industrial process; it's inherently throttled precisely because we are finite biological beings who draw inspiration from real lived experiences while balancing creativity with the necessities of life—sleep, emotional recovery, and limited lifespans. Creativity comes from making connections, and it takes energy, time, and insight for those connections to be meaningful.
Until recently, a human brain was a prerequisite for making those kinds of connections, and there's a reason why that is valuable. A human brain isn't just a store of data; it's a knowledge engine that thinks in a unique way, creating novel combinations of ideas. Instead of having one "connection machine" (an AI model) duplicated a million times, we have eight billion neural networks, each with a unique perspective. Relying on the diversity of thought derived from human cognition helps us escape the monolithic thinking that may emerge if everyone were to draw from the same AI-generated sources.

Today, the AI industry's business models unintentionally echo the ways in which early industrialists approached forests and fisheries: as free inputs to exploit without considering ecological limits. Just as pollution from early factories unexpectedly damaged the environment, AI systems risk polluting the digital environment by flooding the Internet with synthetic content. Like a forest that needs careful management to thrive or a fishery vulnerable to collapse from overexploitation, the creative ecosystem can be degraded even if the potential for imagination remains. Depleting our creative diversity may become one of the hidden costs of AI, but that diversity is worth preserving. If we let AI systems deplete or pollute the human outputs they depend on, what happens to AI models, and ultimately to human society, over the long term?

AI's creative debt

Every AI chatbot or image generator exists only because of human works, and many traditional artists argue strongly against current AI training approaches, labeling them plagiarism. Tech companies tend to disagree, although their positions vary. For example, in 2023, imaging giant Adobe took an unusual step by training its Firefly AI models solely on licensed stock photos and public domain works, demonstrating that alternative approaches are possible.
Adobe's licensing model offers a contrast to companies like OpenAI, which rely heavily on scraping vast amounts of Internet content without always distinguishing between licensed and unlicensed works.

OpenAI has argued that this type of scraping constitutes "fair use." It effectively claims that competitive AI models at current performance levels cannot be developed without relying on unlicensed training data, despite Adobe's alternative approach. The "fair use" argument often hinges on the legal concept of "transformative use," the idea that using works for a purpose fundamentally different from creative expression (such as identifying patterns for AI) does not violate copyright. Generative AI proponents often argue that their approach mirrors how human artists learn from the world around them.

Meanwhile, artists are expressing growing concern about losing their livelihoods as corporations turn to cheap, instantaneously generated AI content. They also call for clear boundaries and consent-driven models rather than allowing developers to extract value from their creations without acknowledgment or remuneration.

Copyright as crop rotation

This tension between artists and AI reveals a deeper ecological perspective on creativity itself. Copyright's time-limited nature was designed as a form of resource management, like crop rotation or regulated fishing seasons that allow for regeneration. Copyright expiration isn't a bug; its designers hoped it would ensure a steady replenishment of the public domain, feeding the ecosystem from which future creativity springs. On the other hand, purely AI-generated outputs cannot be copyrighted in the US, potentially fueling an unprecedented explosion of public domain content, albeit content made of smoothed-over imitations of human perspectives.
Treating human-generated content solely as raw material for AI training disrupts this ecological balance between "artist as consumer of creative ideas" and "artist as producer." Repeated legislative extensions of copyright terms have already significantly delayed the replenishment cycle, keeping works out of the public domain for much longer than originally envisioned. Now, AI's wholesale extraction approach further threatens this delicate balance.

The resource under strain

Our creative ecosystem is already showing measurable strain from AI's impact, from tangible present-day infrastructure burdens to concerning future possibilities. Aggressive AI crawlers already effectively function as denial-of-service attacks on certain sites, with Cloudflare documenting GPTBot's immediate impact on traffic patterns. Wikimedia's experience provides clear evidence of current costs: AI crawlers caused a documented 50 percent bandwidth surge, forcing the nonprofit to divert limited resources to defensive measures rather than to its core mission of knowledge sharing. As Wikimedia says, "Our content is free, our infrastructure is not." Many of these crawlers demonstrably ignore established technical boundaries like robots.txt files.

Beyond infrastructure strain, our information environment also shows signs of degradation. Google has publicly acknowledged rising volumes of "spammy, low-quality," often auto-generated content appearing in search results. A Wired investigation found concrete examples of AI-generated plagiarism sometimes outranking original reporting in search results. This kind of digital pollution led Ross Anderson of Cambridge University to compare it to filling oceans with plastic: a contamination of our shared information spaces. Looking to the future, more risks may emerge.
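The robots.txt boundary that many crawlers ignore is purely advisory. A site publishes plain-text rules for each crawler user agent, and each crawler is expected to check them before fetching a page. The minimal Python sketch below shows what that check looks like with the standard library's `urllib.robotparser`. The sample rules, the `example.com` URLs, and the `build_parser` and `may_fetch` helpers are illustrative assumptions, not any real site's policy. `GPTBot` appears only because the article names that crawler.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (an assumption, not a real site's file): it opts the
# GPTBot crawler out of the whole site and keeps every other crawler out of
# /private/ only.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text already in memory (no network fetch)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Report whether the published rules allow this user agent to fetch url.

    A polite crawler calls this before every request; nothing enforces it.
    """
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS_TXT)
    for agent in ("GPTBot", "SomeOtherBot"):
        for url in ("https://example.com/article", "https://example.com/private/notes"):
            print(f"{agent:12} {url:40} allowed={may_fetch(rules, agent, url)}")
```

The check runs entirely inside the crawler, so a crawler that skips it meets no technical barrier. That is why sites have turned to heavier defenses such as tarpits and proof-of-work challenges.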
Ted Chiang's comparison of LLMs to lossy JPEGs offers a framework for understanding potential problems, as each AI generation summarizes web information into an increasingly "blurry" facsimile of human knowledge. The logical extension of this process, what some researchers term "model collapse," presents a risk of degradation in our collective knowledge ecosystem if models are trained indiscriminately on their own outputs. (However, this differs from carefully designed synthetic data that can actually improve model efficiency.) This downward spiral of AI pollution may soon resemble a classic "tragedy of the commons," in which organizations act from self-interest at the expense of shared resources. If AI developers continue extracting data without limits or meaningful contributions, the shared resource of human creativity could eventually degrade for everyone.

Protecting the human spark

While AI models that simulate creativity in writing, coding, images, audio, or video can achieve remarkable imitations of human works, this sophisticated mimicry currently lacks the full depth of the human experience. For example, AI models lack a body that endures the pain and travails of human life. They don't grow over the course of a human lifespan in real time. When an AI-generated output happens to connect with us emotionally, it often does so by imitating patterns learned from a human artist who has actually lived that pain or joy.

Even if future AI systems develop more sophisticated simulations of emotional states or embodied experiences, they would still fundamentally differ from human creativity, which emerges organically from lived biological experience, cultural context, and social interaction. That's because the world constantly changes. New types of human experience emerge.
If an ethically trained AI model is to remain useful, researchers must train it on recent human experiences, such as viral trends, evolving slang, and cultural shifts. Current AI solutions, like retrieval-augmented generation (RAG), address this challenge somewhat by retrieving up-to-date, external information to supplement their static training data. Yet even RAG methods depend heavily on validated, high-quality human-generated content, the very kind of data at risk if our digital environment becomes overwhelmed with low-quality AI-produced output.

This need for high-quality, human-generated data is a major reason why companies like OpenAI have pursued media deals (including a deal signed with Ars Technica parent Condé Nast last August). Yet paradoxically, the same models fed on valuable human data often produce the low-quality spam and slop that floods public areas of the Internet, degrading the very ecosystem they rely on.

AI as creative support

When used carelessly or excessively, generative AI is a threat to the creative ecosystem, but we can't wholly discount the tech as a tool in a human creative's arsenal. The history of art is full of technological changes (new pigments, brushes, typewriters, word processors) that transform the nature of artistic production while augmenting human creativity. Bear with me, because there's a great deal of nuance here that is easy to miss amid today's more impassioned reactions to people using AI as a blunt instrument for creating mediocrity.

While many artists rightfully worry about AI's extractive tendencies, research published in Harvard Business Review indicates that AI tools can potentially amplify rather than merely extract creative capacity. That suggests a symbiotic relationship is possible under the right conditions. Inherent in this argument is the idea that the responsible use of AI is reflected in the skill of the user. You can use a paintbrush to paint a wall or paint the Mona Lisa.
Similarly, generative AI can mindlessly fill a canvas with slop, or a human can use it to express their own ideas. Machine learning tools (such as those in Adobe Photoshop) already help human creatives prototype concepts faster and iterate on variations they wouldn't have considered. They also handle some repetitive production tasks like object removal or audio transcription, freeing humans to focus on conceptual direction and emotional resonance. These potential positives, however, don't negate the need for responsible stewardship and respect for human creativity as a precious resource.

Cultivating the future

So what might a sustainable ecosystem for human creativity actually involve? Legal and economic approaches will likely be key. Governments could legislate that AI training must be opt-in, or at the very least, provide a collective opt-out registry (as the EU's "AI Act" does). Other potential mechanisms include robust licensing or royalty systems, such as creating a royalty clearinghouse (like the music industry's BMI or ASCAP) for efficient licensing and fair compensation. Those fees could help compensate human creatives and encourage them to keep creating well into the future.

Deeper shifts may involve cultural values and governance. One model is Japan's "Living National Treasures" program, in which the government funds artisans to preserve vital skills and support their work. Could we establish programs that similarly support human creators? Such programs could also designate certain works or practices as "creative reserves," funding the further creation of certain creative works even if the economic market for them dries up. Or a more radical shift might involve an "AI commons": legally declaring that any AI model trained on publicly scraped data should be owned collectively as a shared public domain, ensuring that its benefits flow back to society and don't just enrich corporations.
Meanwhile, Internet platforms have already been experimenting with technical defenses against industrial-scale AI demands. Examples include proof-of-work challenges, slowdown "tarpits" (e.g., Nepenthes), shared crawler blocklists ("ai.robots.txt"), commercial tools (Cloudflare's AI Labyrinth), and Wikimedia's "WE5: Responsible Use of Infrastructure" initiative.

These solutions aren't perfect, and implementing any of them would require overcoming significant practical hurdles. Strict regulations might slow beneficial AI development. Opt-out systems burden creators, while opt-in models can be complex to track. Tech defenses, for their part, often invite arms races. Finding a sustainable, equitable balance remains the core challenge. The issue won't be solved in a day.

Invest in people

While navigating these complex systemic challenges will take time and collective effort, there is a surprisingly direct strategy that organizations can adopt now: investing in people. Don't sacrifice human connection and insight to save money with mediocre AI outputs.

Organizations that cultivate unique human perspectives and integrate them with thoughtful AI augmentation will likely outperform those that pursue cost-cutting through wholesale creative automation. Investing in people acknowledges that while AI can generate content at scale, the distinctiveness of human insight, experience, and connection remains priceless.

Benj Edwards, Senior AI Reporter
Benj Edwards is Ars Technica's Senior AI Reporter and founder of the site's dedicated AI beat in 2022. He's also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.


WWW.INFORMATIONWEEK.COM
How Your Organization Can Benefit from Platform Engineering
John Edwards, Technology Journalist & Author
April 25, 2025

Platform engineering is a discipline that's designed to improve software developer productivity, application cycle time, and speed to market by providing common, reusable tools and capabilities via an internal developer platform. The platform creates a bridge between developers and infrastructure, speeding complex tasks that would normally be challenging, and perhaps even impossible, for individual developers to manage independently.

Platform engineering is also the practice of building and maintaining an internal developer platform that provides a set of tools and services to help development teams build, test, and deploy software more efficiently, explains Brett Smith, a distinguished software developer with analytics software and services firm SAS, in an online interview. "Ideally, the platform is self-service, freeing the team to focus on updates and improvements."

Platform engineering advocates the continuous application of practices that provide an improved, more productive developer experience by delivering tools and capabilities to standardize the software development process and make it more efficient, says Faruk Muratovic, engineering leader at Deloitte Consulting, in an online interview.

A core platform engineering component is a cloud-native services catalog that allows development teams to seamlessly provision infrastructure, configure pipelines, and integrate DevOps tooling, Muratovic says. "With platform engineering, development teams are empowered to create a development environment that optimizes performance and drives successful deployment."
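The minimal Python sketch below makes the idea of a self-service catalog with built-in guardrails more concrete. Everything in it is hypothetical and not drawn from any product the interviewees mention: the `CatalogItem`, `Request`, and `ServiceCatalog` names, the size tiers, and the encryption rule. A real platform would hand an approved request to its IaC and CI/CD tooling rather than return a string.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class CatalogItem:
    """A pre-approved template a team can request without a ticket (hypothetical)."""
    name: str
    allowed_sizes: frozenset[str]
    requires_encryption: bool = True


@dataclass(frozen=True)
class Request:
    """A team's self-service request for one catalog item (hypothetical)."""
    team: str
    item: str
    size: str
    encrypted: bool


@dataclass
class ServiceCatalog:
    items: dict[str, CatalogItem] = field(default_factory=dict)

    def register(self, item: CatalogItem) -> None:
        """Platform engineers publish templates; development teams consume them."""
        self.items[item.name] = item

    def check(self, req: Request) -> list[str]:
        """Apply the embedded policies; an empty list means the request passes."""
        item = self.items.get(req.item)
        if item is None:
            return [f"unknown catalog item: {req.item}"]
        problems = []
        if req.size not in item.allowed_sizes:
            problems.append(f"size {req.size!r} not allowed for {item.name}")
        if item.requires_encryption and not req.encrypted:
            problems.append(f"{item.name} must be encrypted at rest")
        return problems

    def provision(self, req: Request) -> str:
        """Approve automatically when every policy passes; otherwise refuse with reasons."""
        problems = self.check(req)
        if problems:
            raise ValueError("; ".join(problems))
        # A real platform would invoke its IaC tooling here; this sketch only
        # returns a name for the environment it would have created.
        return f"{req.team}-{req.item}-{req.size}"


if __name__ == "__main__":
    catalog = ServiceCatalog()
    catalog.register(CatalogItem("postgres", frozenset({"small", "medium"})))
    print(catalog.provision(Request("payments", "postgres", "small", encrypted=True)))
    print(catalog.check(Request("payments", "postgres", "xlarge", encrypted=False)))
```

In this sketch the policy checks run in the same code path as provisioning. A request that passes is fulfilled at once, and one that fails comes back with specific reasons instead of sitting in a ticket queue.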
A Helping Hand

Platform engineering significantly improves development team productivity by streamlining workflows, automating tasks, and removing infrastructure-related obstacles, observes Vinod Chavan, cloud platform engineering services leader at IBM Consulting. "By reducing manual effort in deploying and managing applications, developers can focus on writing code and innovating rather than managing infrastructure," he notes in an email interview.

Process automation and standardization minimize human error and enhance consistency and speed across the development lifecycle, Muratovic says. Self-service development models also significantly reduce dependency on traditional IT services teams, he adds, because they allow full-stack product pods to deploy and manage their own environments.

Embedded monitoring, security, and compliance policies ensure that enterprise policies are followed without adding overhead, Muratovic says. "Platform engineering also supports Infrastructure as Code (IaC) capabilities, which provide development teams with pre-configured networking, storage, compute, and CI/CD (continuous integration/continuous delivery) pipelines."

An often-overlooked platform engineering benefit is regular tool updates, Smith notes.

Enterprise Benefits

Platform engineering gives enterprises the structure and automation needed to scale efficiently while lowering costs and strengthening operational resilience, Chavan says. "By eliminating inefficiencies and reducing manual labor, it optimizes resource usage and enables business growth without unnecessary complexity or costs." He adds that platform engineering can also play a key role in helping organizations leverage AI and other emerging technologies, because it provides a stable environment that can support the seamless integration of advanced tools.
Platform engineering can reduce operational friction, increase monitoring ability, and enhance flexibility when deploying workloads into hybrid cloud environments. "Overhead costs can be reduced by automating repetitive, manual tasks, and access controls and compliance protocols can be standardized," Muratovic says.

Reusable platform services, such as API gateways, monitoring, orchestration, and shared authentication, also give platform engineering a strong foundation for application and systems scalability. "Additionally, organizations that develop product-oriented and cloud-first models can pre-define reference architectures and develop best practices to encourage adoption and enhance system reliability and security," Muratovic says.

A centralized and structured platform also helps organizations strengthen security and compliance by providing better visibility into infrastructure, applications, and workflows, Chavan says. "With real-time monitoring and automated governance, businesses can quickly detect risks, address security issues before they escalate, and stay up to date with evolving compliance regulations."

Potential Pitfalls

When building a platform, a common pitfall is creating a system that's too complex and doesn't address the specific problems facing development and operations teams, Chavan says. Failing to build strong governance and oversight can also lead to control issues, which in turn can cause security or compliance problems.

Muratovic warns against over-engineering and failing to align with developer culture. "Over-engineering is simply creating systems that are too complex for the problems they were intended to solve, which increases maintenance costs and slows productivity -- both of which can erode value," he says. "Also, if the shift to platform engineering isn't aligned with developer needs, developers may become resistant to the effort, which can significantly delay adoption."
Another pitfall is overly rigid implementation. "It's crucial to find a balance between standardization across the enterprise and providing too many choices for developers," Muratovic says. "Too much rigidity and developers won't like the experience; too much flexibility leads to chaos and inefficiency."
Final Thoughts
Platform engineering isn't just about the technology, Chavan observes. It's also about creating a collaborative, continuously improving work culture. "By equipping developers and operators with the right tools and well-designed processes, organizations can streamline workflows and increase space for innovation."
Muratovic makes a similar point: platform engineering's value lies in creating a development operating model that empowers developers while aligning with business needs. He believes the discipline will keep evolving as needs and goals change, so it's crucial to create a culture of openness and collaboration among platform engineers, operations teams, and developers. Muratovic notes that by focusing on the developer experience (particularly self-service, automation, governance, compliance, and security), platform engineering can provide organizations with a flexible, scalable, resilient ecosystem that fuels the agility and innovation that drive sustained growth.
"Platform engineering is how you herd the cats, eliminate the unicorns, and eradicate the chaos from your software supply chain," Smith concludes.
About the Author
John Edwards, Technology Journalist & Author
John Edwards is a veteran business technology journalist. His work has appeared in The New York Times, The Washington Post, and numerous business and technology publications, including Computerworld, CFO Magazine, IBM Data Management Magazine, RFID Journal, and Electronic Design. He has also written columns for The Economist's Business Intelligence Unit and PricewaterhouseCoopers' Communications Direct.
John has authored several books on business technology topics. His work began appearing online as early as 1983. Throughout the 1980s and 1990s, he wrote daily news and feature articles for both the CompuServe and Prodigy online services. His "Behind the Screens" commentaries made him the world's first known professional blogger.

WWW.NEWSCIENTIST.COM
Photography contest spotlights the beauty of science in vivid detail
Image: Optical fibre connected to a dilution refrigerator (Harsh Rathee/Department of Physics)
Photographs accompanying most scientific papers might politely be called “functional”. But this collection of images from Imperial College London’s research photography competition proves that research can be beautiful. The top image, by Harsh Rathee of the physics department, shows an optical fibre connected to a dilution refrigerator, a device that can reach temperatures about a thousandth of that of the vacuum of space. By observing how light interacts with sound waves at this extremely low temperature, researchers can explore the unique properties of matter at the quantum level.
Image: Liquid Gold (Anna Curran/Department of Mathematics)
The above entry is from Anna Curran of the maths department, who won a judges’ choice prize in the PhD student category. Curran’s research focuses on mathematically modelling the effect of molecules called surfactants, which reduce surface tension in fluids. It is this phenomenon that allows bubbles to hold their shape within the ring. “Surfactants are all around us – in our soaps and detergents, they are responsible for breaking down dirt and bacteria, but their effects also underpin many biological, medical and engineering processes, from inkjet printing to self-cleaning surfaces to the treatment of premature babies’ lungs,” says Curran.
Image: Cerebral organoid, or “mini-brain” (Alex Kingston/Department of Life Sciences)
Pictured above is an image from Alex Kingston of the life sciences department. It depicts part of a cerebral organoid, also known as a “mini-brain”. These lab-grown collections of cells are a microcosm of the earliest stages of human brain development.