WWW.TECHSPOT.COM

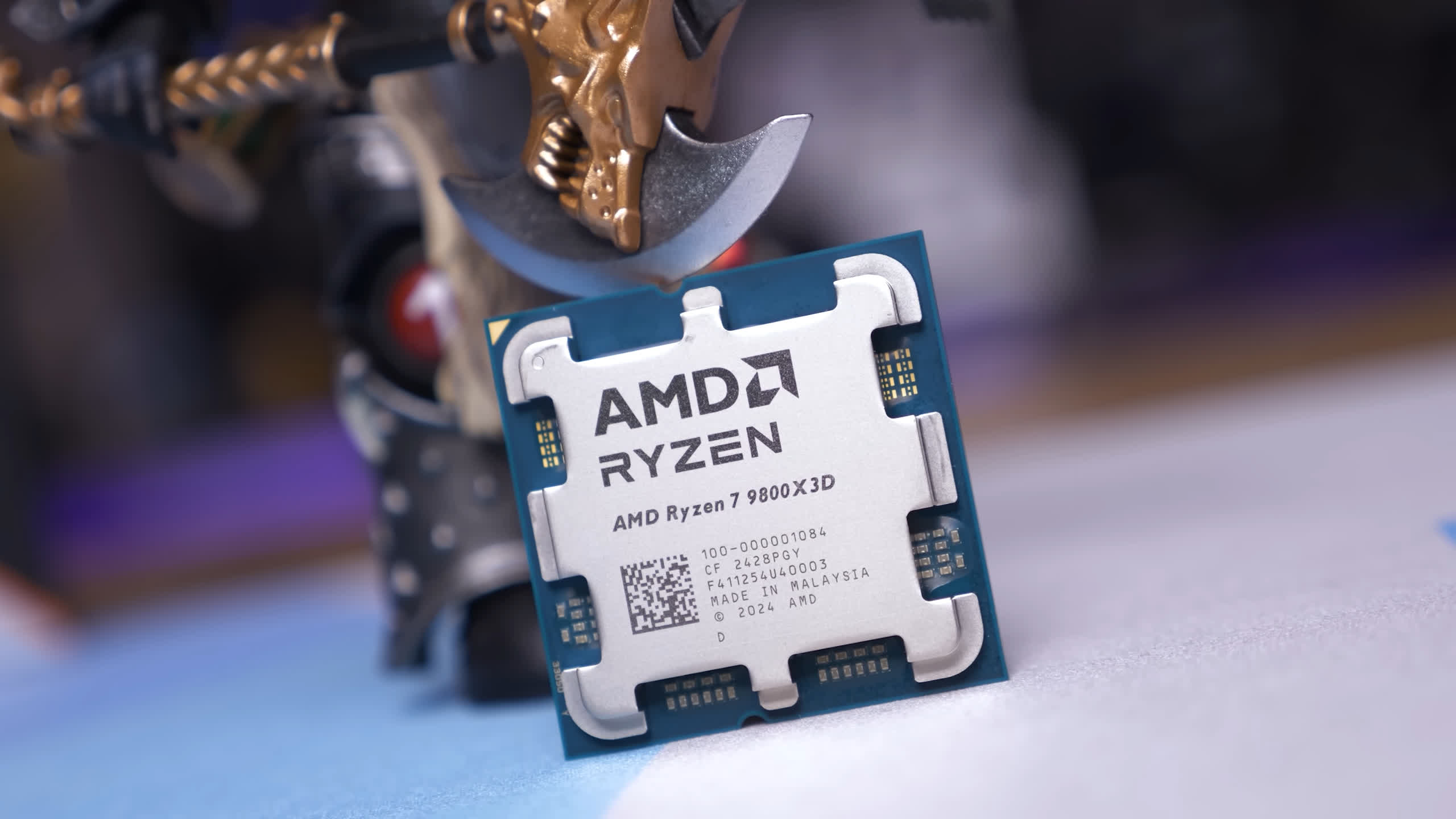

AMD Ryzen 7 9800X3D vs. Intel Core Ultra 9 285K: 45 Game Benchmark

Today, we're re-reviewing the Core Ultra 9 285K... just kidding! Instead, we're throwing Intel's latest flagship CPU to the wolves, and by that we mean we are comparing it head-to-head with the Ryzen 7 9800X3D across 45 games.

What we want to know is how these CPUs compare across a wide range of games or, more specifically, how much faster the 9800X3D is. For this review, we're sticking with the new format. Instead of showing individual results for a dozen or so titles and summarizing the margins for the rest, we'll present results for five games at a time. This way, you can see the FPS data for every game tested.

As always, all CPU gaming benchmarks are conducted at 1080p using the GeForce RTX 4090. If you're curious why this is the best approach for evaluating CPU performance in games today and into the future, we have an article for that right here.

Now, let's dive into the graphs!

Gaming Benchmarks

The Last of Us, Cyberpunk, Hogwarts Legacy, ACC, Spider-Man

For our first batch of games, let's begin with The Last of Us Part 1. This is an example where the 285K performs quite well, resulting in the 9800X3D being only 5% faster, a negligible margin. In this scenario, both CPUs deliver exceptional performance, as expected from flagship processors.

However, the 285K stinks in Cyberpunk 2077, choking the RTX 4090 to just 151 fps. As a result, the Ryzen 7 processor is an impressive 45% faster. The 9800X3D also crushes the 285K in Hogwarts Legacy, delivering 43% better performance. The margins are even more brutal in ACC, where the AMD processor outpaces the Intel CPU by a staggering 75%. While performance improves for Intel in Spider-Man Remastered, the 9800X3D still maintains a 15% lead.

Baldur's Gate 3, Homeworld 3, APTR, Flight Simulator, Starfield

Next, we have Baldur's Gate 3, where the 9800X3D is 34% faster, jumping from 131 fps to 176 fps.
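For readers who want to check how the quoted percentages relate to the fps figures, here is a minimal Python sketch. It assumes each margin is simply the ratio of the two average frame rates minus one; the function names `percent_faster` and `implied_fps` are hypothetical helpers for illustration, not part of the original test methodology.

```python
def percent_faster(fast_fps: float, slow_fps: float) -> float:
    """Return how much faster fast_fps is than slow_fps, in percent."""
    if slow_fps <= 0:
        raise ValueError("slow_fps must be positive")
    return (fast_fps / slow_fps - 1.0) * 100.0


def implied_fps(slow_fps: float, margin_percent: float) -> float:
    """Estimate the faster CPU's fps from the slower result and a quoted margin."""
    return slow_fps * (1.0 + margin_percent / 100.0)


# Baldur's Gate 3: 131 fps (285K) vs. 176 fps (9800X3D), as quoted in the text.
bg3_margin = percent_faster(176, 131)
print(f"Baldur's Gate 3: {bg3_margin:.0f}% faster")  # about 34%

# Cyberpunk 2077: only the 285K's 151 fps and the 45% margin are quoted,
# so the 9800X3D figure below is an estimate, not a reported result.
cyberpunk_estimate = implied_fps(151, 45)
print(f"Cyberpunk 2077 (estimated 9800X3D): {cyberpunk_estimate:.0f} fps")
```

Because the published margins are rounded to whole percentages, any fps value reconstructed this way is only approximate.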
The margins become even more striking in Homeworld 3, with the 9800X3D achieving 58% better average frame rates and an incredible 117% improvement in 1% lows.

The 285K also performs unexpectedly poorly in A Plague Tale: Requiem, averaging just 123 fps compared to 195 fps on the 9800X3D, a 59% performance boost for the AMD CPU.

In Microsoft Flight Simulator 2020, the 9800X3D maintains a strong lead with an average of 95 fps, a 34% improvement over the 285K. The Intel CPU shows one of its best results in Starfield, though even here, the 9800X3D is 10% faster.

Horizon Forbidden West, Horizon Zero Dawn, Watch Dogs, Far Cry 6, T&L

In Horizon Forbidden West, the 285K delivers one of its best performances, coming within a few percentage points of the 9800X3D. However, in Horizon Zero Dawn, the Ryzen 7 processor is 29% faster. The 9800X3D also outpaces the 285K by 46% in Watch Dogs Legion and 28% in Far Cry 6, while the margin is negligible in Throne and Liberty.

Hitman 3, Callisto Protocol, SoTR, Halo, Warhammer 3

Next is Hitman 3, where AMD's 3D V-Cache processor is just 7% faster. Both CPUs provide more than enough performance for this older game. We've updated the benchmark for The Callisto Protocol to a less GPU-bound test, showing the 9800X3D outperforming the 285K by 42%.

We're also seeing a 24% increase in Shadow of the Tomb Raider. As with Hitman 3, performance here is much higher than required, but it's a good benchmark tool for comparing the gaming performance of these two parts. Performance in Halo Infinite and Warhammer III, meanwhile, is close enough to be considered identical.

Black Ops 6, Borderlands 3, Riftbreaker, Remnant 2, SWJS

This next set of results begins with Black Ops 6, where the 9800X3D is 19% faster. Although the 285K is more than capable in this case, the Ryzen 7's significant advantage suggests better longevity.

Similarly, in Borderlands 3, the 9800X3D delivers a 21% improvement.
In The Riftbreaker, the 285K faces a substantial defeat, with the 3D V-Cache processor a whopping 42% faster.

In Remnant 2, the 285K produces relatively low frame rates for a high-end CPU, making the 30% improvement provided by the 9800X3D highly noticeable, especially for those using high refresh rate monitors. Finally, in Star Wars Jedi: Survivor, the 9800X3D delivers a crushing 45% performance lead.

War Thunder, Skull and Bones, Returnal, Ratchet & Clank, Dying Light 2

And here we have yet more gaming data. Those seeking ultimate performance in War Thunder will find the 285K underwhelming, as 266 fps is not particularly impressive in this title. This also means the 9800X3D was nearly 50% faster.

Performance in Skull and Bones, Returnal, and Ratchet & Clank is nearly identical, with no clear winner. However, the 9800X3D delivers a 19% performance uplift in Dying Light 2.

Forza Horizon 5, Forza Motorsport, Gears 5, Ghost of Tsushima, Hunt

The 9800X3D is only slightly faster in Forza Horizon 5, but it shows a 21% improvement in Forza Motorsport. In Gears 5, the Core Ultra 9 processor is completely outmatched, managing just 180 fps with 1% lows of 101 fps. This results in the 9800X3D being 62% faster. Margins are more modest in Ghost of Tsushima and Hunt: Showdown, though the 9800X3D is comfortably ahead in both cases.

World War Z, F1 24, Rainbow Six Siege, Counter-Strike 2, Fortnite

The 285K is once again crushed, with the 9800X3D delivering a 27% lead in World War Z and an 18% advantage in F1 24. Similar gains are observed in Rainbow Six Siege, where the Ryzen 7 processor provides 26% greater performance, and in Counter-Strike 2, where it delivers a 30% improvement.

In Fortnite, we encountered a compatibility issue with Easy Anti-Cheat, which prevented the game from running on the 285K. We reported the issue to Intel over a week ago, and they have informed us that they are working with Epic Games on a fix.
Hopefully, this will be resolved soon.

Assassin's Creed x2, Space Marine 2, SW Outlaws, Dragon Age: The Veilguard

Finally, we arrive at the last set of results, starting with Assassin's Creed Mirage, where the 9800X3D leads by a comfortable 22%, pushing frame rates beyond 200 fps. In Valhalla, the 9800X3D shows only a 5% improvement in average frame rates but an impressive 64% advantage in 1% lows, based on a three-run average.

Space Marine 2 is heavily CPU-limited, making the 27% performance boost from the 9800X3D a significant advantage, particularly for those using high-refresh-rate displays. Similarly, Star Wars Outlaws is CPU-intensive, but here, the 9800X3D is just 5% faster. Lastly, in Dragon Age: The Veilguard, the 9800X3D maintains a 15% lead.

45 Game Average

Here's how the Ryzen 7 9800X3D and Core Ultra 9 285K compare head-to-head across the 45 games tested. There are no instances where the Ryzen processor was slower. Margins within 5% are considered a tie, as differences of 1-3% are not statistically significant.

Across the 45 games, we found the 9800X3D to be, on average, 24% faster. While this margin is smaller than our review data, that's because reviews often emphasize CPU-limited gaming. This dataset includes several GPU-limited titles, such as Forza Horizon 5.

What's particularly troubling for the 285K is the number of games where the Ryzen CPU led by over 40%. Hopefully, this is something Intel can address, but it seems the 285K may simply not be ideal for gamers.

When looking at the 1% lows, the story remains largely the same. The 9800X3D provides, on average, 29% better 1% lows. While there are notable double-digit gains across many titles, the overall averages and 1% lows paint a consistent picture.

What We Learned

To say this was a one-sided bashing, with AMD wielding the 3D V-Cache hammer, would be stating the obvious. Intel's 285K got annihilated.
You might think that's a bit over the top; after all, the 285K delivered perfectly acceptable gaming performance in most titles tested. But we are talking about Intel's latest high-end CPU, and at $630, it is far from cheap.

Granted, the Core Ultra 9 285K is a much better productivity CPU. However, that's not the competition the 9800X3D was built for: it's designed for gaming. As it stands, the 9800X3D is the best gaming CPU available, with the previous-generation Ryzen 7 7800X3D coming in as the next best.

Worse still for the 285K, the Ryzen 9 9950X3D is expected early next year. It will likely claim the productivity crown from Intel's 285K. Even now, it's unclear whether the 285K holds that title, as the 9950X already outperforms it in several productivity workloads.

Returning to gaming, if you're looking for the absolute best in CPU performance, Intel is no longer part of the conversation, which is shocking. The 9800X3D is simply too fast. Intel has admitted that Arrow Lake missed the mark and promised performance fixes by December, but expectations remain uncertain.

Intel's Robert Hallock has publicly acknowledged that specific BIOS and OS-level settings have caused issues that negatively impacted performance. It's likely that Windows scheduling improvements will stabilize performance across a broader range of games. Compatibility problems, such as those with Easy Anti-Cheat, also need to be resolved. We are aware of Intel's statements about Arrow Lake's performance, and if they manage to get out some updates before year's end, you can rest assured we'll re-test everything you've seen here.

Shopping Shortcuts:

- AMD Ryzen 7 9800X3D on Amazon
- Intel Core Ultra 9 285K on Amazon
- AMD Ryzen 9 9950X on Amazon
- Intel Core Ultra 7 265K on Amazon
- AMD Ryzen 7 9700X on Amazon
- AMD Ryzen 7 7700X on Amazon
- AMD Ryzen 5 9600X on Amazon
