GIZMODO.COM

How to Use Your New iPhone 16's Camera Control Button

The iPhone 16/16 Pro's new Camera Control button is almost as polarizing as politics. Some people love it; they think it makes it easier to photograph on the fly rather than unlocking the iPhone with Face ID and launching the camera app. The button also provides quick access to Visual Intelligence in the latest iOS 18 update and can serve as an accessibility tool for people who struggle with mobility.

Other people hate the Camera Control button enough to call it the worst feature Apple has added to the iPhone. That's way harsh, Tai, but you can understand the point once you start using the Camera Control button for yourself. The button is toward the lower half of the iPhone, so you'll have to carefully balance the device with your index finger and thumb while pressing down to snap a photo. If you don't have the mechanism down in your wrist, it causes the phone to shake quite a bit, the complete antithesis of how you want a phone moving when trying to snap a photo. Apple has algorithms that help with some of the shakiness due to the Camera Control, but there's still some cognitive dissonance. How am I supposed to believe the picture I took was steady when I can feel the iPhone shake as I do it?

If you're new to the iPhone 16/16 Pro, you're probably wondering about the utility of a button that nobody asked for. My job is to give you instructions after using the product myself, so at least you can derive some use from a function that no one was clamoring for. As I said, the Camera Control button is helpful for rapidly taking photos. But it can do a few other things if you decide the old way of snapping pictures on the iPhone still suits you better than this newfangled thing.

Press to shoot

The Camera Control button on the iPhone 16 and 16 Pro is a physical button with the DNA of a MacBook trackpad. Press it down hard, like you would with the power or volume buttons, or press down lightly for a soft press. The button area is capacitive, so you can control the interface by sliding your finger.

Double-press the Camera Control button to bring up the iPhone viewfinder. You can press again to snap a photo or long-press to start recording a video. If you don't press down at all, by default, you can use the Camera Control button to zoom in and out between the camera lenses, including that 5x telephoto that Apple included for both sizes of the iPhone 16 Pro. You can lightly tap the Camera Control button twice to cycle through other settings, including exposure, depth, and the iPhone 16's new Photographic Styles.

The soft-press-and-slide mechanism was super confusing at first. It took me a while to understand what a soft press was with the Camera Control button and to recognize iOS's interface animations when activated. I have an easier time using the phone's sliding mechanism if I'm stabilizing it with my other hand.

If you've updated to iOS 18.2, Apple lets you control the exposure and focus lock with the Camera Control slider. It's easy to set up in the Settings app, though you'll need to do all that before you take a photo.

Customize the Camera Control button

I'm an Android user most of the time, and my favorite shortcut for accessing the camera app is double-pressing the power button. To that end, I didn't mind that the Camera Control button requires a double-press before it's activated, either. But I went for the faster shortcut to launch it after one click. Apple lets you do that from Settings. Under Camera Control, tap to choose between a single or double click to launch the camera.

The Camera Control button can also be adjusted to work as an accessibility tool. Under Settings > Accessibility > Camera Control, you can adjust the firmness of the button's soft and hard presses and even select how forceful the press should be.

You don't even have to use it

You don't have to use the Camera Control button to launch the iPhone's camera app. If you dig back into the iOS settings panel, under Camera Control settings, you can program it to launch something else, though the options are limited. You can choose between the built-in QR code scanner, the magnifier for zooming into real-life tiny text, or any third-party apps that have implemented the new hardware. You can also turn off the Camera Control button entirely if it interferes with the rest of your iPhone experience.

Photos: Florence Ion / Gizmodo

WWW.ARCHDAILY.COM

Coihues Neighborhood / 0.7 Arquitectura

Photo: María Eugenia Cancela
Coliving
Villa Udaondo, Argentina
Architects: 0.7 Arquitectura
Area: 2315 m²
Year: 2024
Manufacturers: FV, Hydro, ferrum
Lead Architects: Juan Manuel Cañonero, J. Lucas I. Cáceres

Text description provided by the architects. Coihues is a neighborhood of ten houses situated on both sides of an internal vehicular street, reached after passing through an important entrance gate. Each unit has private access from this community street, with its own garage and a park area with a pool.

The houses are oriented alternately in order to receive the best sunlight, opening towards their private parks. The units on the front line of the street invert their orientation to capture the best views while keeping the row of trees in their parks, which works as a natural separation from the vehicular street.

The site layout places each house perpendicular to the street in order to open up the central community space and, at the same time, improve the sunlight in the private outdoor areas. The result is a small-scale neighborhood with high-quality community spaces and large areas of privacy for each unit.

The houses are developed as three variants of a single typology that, by mirroring and alternating, avoid monotony across the whole set. The internal functions of the houses are distributed on two levels, with a public area on the ground floor, which includes the living and dining room, the kitchen, and an external gallery facing the park. This gallery opens completely towards the street, allowing wider use by incorporating the garages as a useful space. On the first floor is the private area, which includes the master suite and the rest of the bedrooms.

The language of the housing complex is given by a reinforced concrete structure that works as an exterior enclosure as well as an assembly of the facades. From a formal point of view, the reinforced concrete is used to generate hanging planes that lighten the impact of the material while allowing the ground floor to open towards the park and, at the same time, protecting the private spaces of the first level. The facades are complemented by the use of wood as cladding, giving the entire set greater warmth and greater harmony with the forest environment.

Project location: Villa Udaondo, Argentina. Location to be used only as a reference. It could indicate city/country but not exact address.
Material: Concrete
Published on December 28, 2024
Cite: "Coihues Neighborhood / 0.7 Arquitectura" [Barrio Coihues / 0.7 Arquitectura] 28 Dec 2024. ArchDaily. Accessed . <https://www.archdaily.com/1024223/coihues-neighborhood-arquitectura> ISSN 0719-8884

GAMERANT.COM

Stalker 2: All Arch Artifacts Locations

Stalker 2: Heart of Chornobyl offers a ton of artifacts for players to collect and gain the status bonuses that come with them. Among all the artifacts in the game, the Arch artifacts are the most unique: each is tied to one of the six anomalous zones and can be acquired only once. These artifacts provide a significant benefit to players' stats while having zero radiation side effects. However, each one does inflict a debuff on players to balance the benefit gained from it.

GAMERANT.COM

One Piece: Oda Reveals The Power Of The Bandaged Holy Knight

One Piece chapter 1135 dropped recently, and it revealed quite a lot of exciting details to the fans. The chapter covered a lot of ground, including the Owl's Library and, of course, the banquet finally being held in Elbaf Village. But, undoubtedly, the thing that fans were waiting to see the most in this arc was the move made by the Holy Knights that were previously introduced in the chapter.

GAMERANT.COM

Baldur's Gate 3 Player Commits the Most Sadistic Act Possible

This article contains small spoilers for Act One of Baldur's Gate 3.

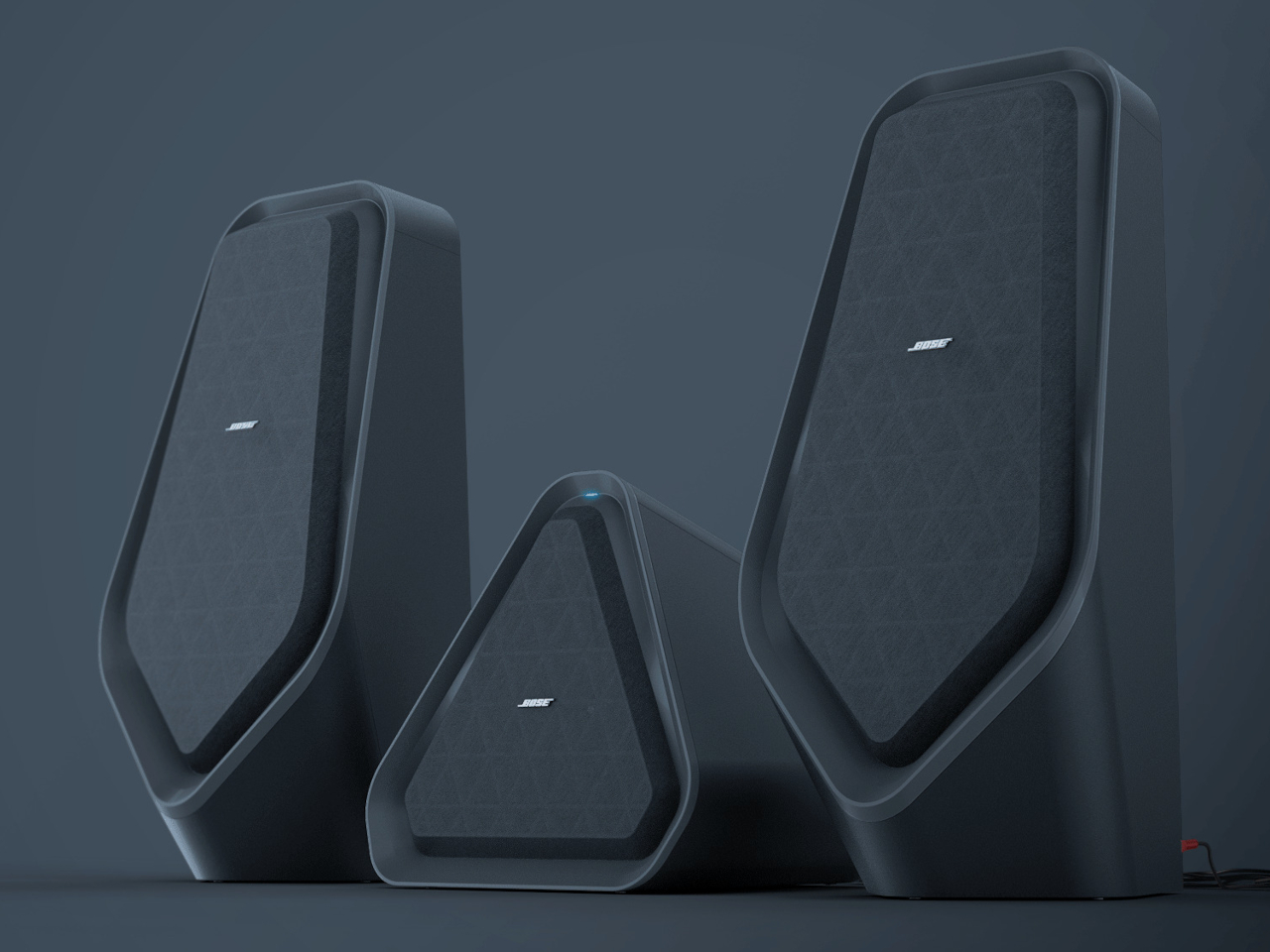

WWW.YANKODESIGN.COM

Geometric speaker concept creates a futuristic ambiance in your living space

Speakers started out with rather boxy designs that were meant to be more efficient than elegant. This situation has changed over the years, thanks to developments in technology and manufacturing that left a bit of wiggle room for the critical components that make up these pieces of audio equipment. Some home speakers even come in the form of art objects that inject a bit of elegance and sophistication into the room.

That's not to say that angular, geometric shapes are unappealing, especially if they can be tweaked and embellished with a few details that take them beyond their polygonal appearance. This set of speakers, for example, mashes together nature and architecture in a design that makes them look like imposing yet striking rocks. If rocks came from outer space, that is.

Designer: Santiago Lopez

Although a monolith technically refers to a geological feature, the word has also taken on a different meaning throughout history and culture. On the one hand, you have monolithic man-made structures like obelisks dating as far back as Ancient Egypt. On the other hand, you also have fictional objects like the iconic black monolith from Arthur C. Clarke's Space Odyssey science fiction series. It is a word that inspires both awe and creativity, combining both natural formations and artificial creations in a single thought.

Those are the kinds of emotions that this Monolith speaker concept design tries to convey. On the one hand, the speakers resemble large boulders naturally rising from the floor of your living room. On the other hand, their sharp angles and clean lines clearly convey an artificial nature, keeping their technological roots clear and unambiguous. The dark motif, paired with a few discreet light indicators, also gives them a sci-fi vibe, as if the speakers were miniature spacecraft from an advanced alien civilization.

There are also a few subtle details that give the Monolith speakers some added charm. The front and the back of the speakers use triangular grilles to visually set themselves apart from typical perforations, but the front also uses a transparent fabric to make that pattern less conspicuous and distracting. Physical buttons lie on top of the speakers to control volume and power, while the rest of the connectors and switches are conveniently hidden on their backs.

It's possible to connect all three speakers of the set using cables, creating the semblance of a starship fleet ready for take-off, or they can be distributed across the area and communicate over Bluetooth instead. The Monolith speaker concept doesn't drastically change the design formula but combines inspiration from nature with technical sensibilities to deliver a more striking aesthetic that doesn't compromise on space efficiency for the components inside.

WWW.YANKODESIGN.COM

Squid Fork makes eating your Cup Noodles easier and more fun

Sometimes, at the end of a long and stressful day, the only thing that I can think of eating is a cup of instant noodles bought from my neighborhood convenience store. It's best eaten with a pair of chopsticks while slurping down the soup, but there are times when there are no chopsticks around and you have to make do with a fork, which isn't the most convenient.

Designer: Nissin

Nissin is one of the main proponents of instant noodle culture with its ubiquitous Cup Noodles products. The company is now making it easier and more fun to eat your favorite noodles with the Ika Fork, or Squid Fork (ika is, of course, Japanese for squid). It solves the problem of noodles slipping out of an ordinary plastic fork's grasp by redesigning the fork and also making it unique and fun.

The fork is designed to look like a real squid, with actual details of the cephalopod included in the utensil. It's around 8 inches in length and made from heat-resistant materials that can withstand really hot noodles up to 100 degrees Celsius. It prevents your noodles from slipping so you can enjoy the cup without being annoyed.

It's designed for Cup Noodles, but you can also use it for other foods that call for a fork. It's included in a limited-edition set that has several Cup Noodles flavors and can be ordered through Nissin's official store and Amazon Japan.

VENTUREBEAT.COM

How advanced foundation models will expand what AI can do (and other predictions for 2025)

Foundation models will be brand DNA, hands-free will be redefined and we'll hit the AI trust tipping point (among other developments).

WWW.THEVERGE.COM

The US proposes rules to make healthcare data more secure

The US Department of Health and Human Services (HHS) Office for Civil Rights (OCR) is proposing new cybersecurity requirements for healthcare organizations aimed at protecting patients' private data in the event of cyberattacks, reports Reuters. The rules come after major cyberattacks like one that leaked the private information of more than 100 million UnitedHealth patients earlier this year.

The OCR's proposal includes requiring that healthcare organizations make multifactor authentication mandatory in most situations, that they segment their networks to reduce risks of intrusions spreading from one system to another, and that they encrypt patient data so that even if it's stolen, it can't be accessed. It would also direct regulated groups to undertake certain risk analysis practices, keep compliance documentation, and more.

The rule is part of the cybersecurity strategy that the Biden administration announced last year. Once finalized, it would update the Security Rule of the Health Insurance Portability and Accountability Act of 1996 (HIPAA), which regulates doctors, nursing homes, health insurance companies, and more, and was last updated in 2013.

US deputy national security advisor Anne Neuberger put the cost of implementing the requirements at an estimated $9 billion in the first year, and $6 billion in years two through five, writes Reuters. The proposal is due to be published in the Federal Register on January 6th, which will kick off the 60-day public comment period before the final rule is set.
