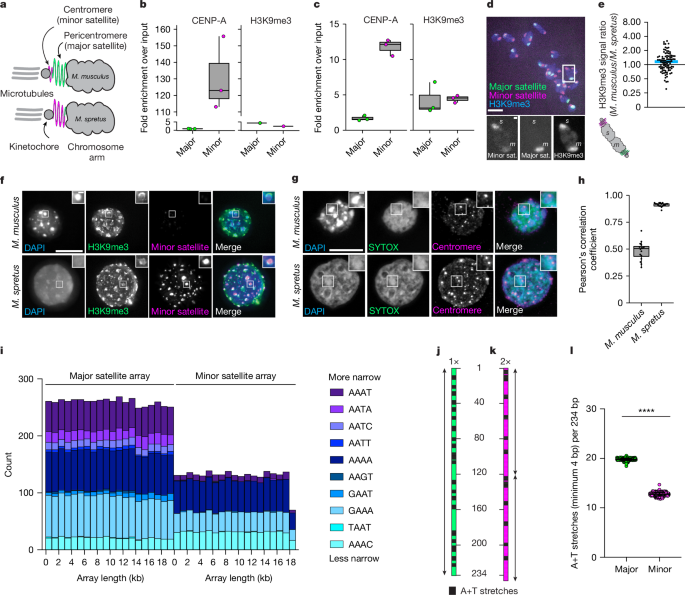

WWW.NATURE.COM

Satellite DNA shapes dictate pericentromere packaging in female meiosis

Nature, Published online: 08 January 2025; doi:10.1038/s41586-024-08374-0

Divergent satellite DNA shapes influence architectural protein binding and chromatin organization in two mouse species so that the satellites can be packaged and centromere function maintained, mitigating the evolutionary cost of satellite DNA expansion.

GAMERANT.COM

Palworld's Feybreak Update May Be the Game's Darkest Yet

The monster-capturing and survival game hybrid Palworld recently received one of its biggest patches, and it has changed the game for the better. The major Feybreak update introduces a variety of new content, including a bigger island, more Pals, new resources, and more. Its additions have improved the player experience in ways many appreciate, and they are a good sign of things to come if this is what Pocketpair plans to deliver in the future. But as great as it is, the latest update can also be seen as the darkest yet, as some of its additions tap into the macabre side of the in-game world.

GAMERANT.COM

Wuthering Waves: Ruins Where Shadows Roam Guide (Unlock Nightmare Crownless)

Nightmare Crownless is one of the new Nightmare versions of Overlord-class Echoes in Wuthering Waves. Unlike its regular counterpart, this one offers bonus Havoc DMG and Basic Attack DMG, making it an excellent pick for certain characters.

GAMERANT.COM

New EA Sports UFC 5 Update Adds Undefeated Fighter

EA Sports UFC 5 developer EA Vancouver is set to release a new update for the popular mixed martial arts fighting game on January 9, adding a new undefeated fighter to the roster and making a handful of bug fixes and improvements. Patch 1.18 for EA Sports UFC 5 will be available for PlayStation 5 and Xbox Series X/S users on January 9 at 1 PM ET, with no downtime expected for the update.

WWW.POLYGON.COM

Bluey is getting some Lego sets

Bluey's ascension in the pop culture canon continues. Not only will the Australian animated series about cartoon dogs aimed at young kids be turned into a movie in 2027; this year, it will be bestowed with the ultimate franchise status symbol: Lego sets.

Lego announced Thursday that six Bluey sets will be released in 2025. The sets haven't been revealed yet (that will happen in the spring), but Lego said they will be part of its Duplo and 4+ lines, so they'll definitely be simpler sets aimed at the show's young audience. Lego teased the sets with an image and a cute video clip referencing the iconic silhouettes and colors of blue heeler pup Bluey and her little sister Bingo.

Bluey's sometimes controversial, always funny, often moving, and frequently genius-level episodes follow the Heeler family as they use imaginative play to explore life's many mysteries and foibles. "The partnership [between Lego and Bluey licensor BBC Studios] aims to create equally fun moments of imaginative roleplay and creativity for families as they build, connect and play out their favourite scenes from the show," Lego said in a press release.

It's a perfect match; in fact, it's a wonder it didn't happen sooner, given the amount of Bluey merchandise already out there (including one sadly terrible video game). Bluey's many adult fans might be disappointed they're not getting a 1000-piece diorama of the Heeler house or the solar system from Sleepytime, but they also probably have the good sense to know it's not about them. And everyone can appreciate the introduction of Bingo orange to the Lego color palette.

WWW.ENGADGET.COM

Pick up a four-pack of Apple AirTags for only $70

If you want to keep better track of your things in the new year, a Bluetooth tracker can help. Apple's AirTags are currently on sale, and you can get a four-pack for only $70. That's a record low for the bundle, and it brings each individual device down to only $17.50. If you're not sure you need four of them, a single AirTag will set you back $23 at the moment.

AirTags take just seconds to set up using an iPhone. They are integrated into the Find My network, so you don't have to register for another service or download a separate app. AirTags also support the ultra-wideband wireless protocol. When your iPhone gets within roughly 25 feet of a linked AirTag, you'll see directional arrows and an approximate distance meter to help you locate it.

On top of that, Apple recently revealed that several major airlines are adding support for AirTag tracking to their systems. The idea is to help you (and your airline) more easily locate any missing bag that has an AirTag inside. Meanwhile, you might be interested in picking up some AirTag accessories to, say, more easily attach them to your keychain. We've got you covered there too, thanks to our round-up of the best AirTag accessories.

This article originally appeared on Engadget at https://www.engadget.com/deals/pick-up-a-four-pack-of-apple-airtags-for-only-70-150049782.html?src=rss

WWW.ENGADGET.COM

Sony's immersive The Last of Us experience at CES 2025 dropped me into a subway filled with zombies

As Engadget's chief The Last of Us correspondent, I was pretty pumped to find out during Sony's CES 2025 press conference that season two of the HBO show would come out in April. But Naughty Dog head Neil Druckmann also teased a "location-based experience exhibit" that would transport participants into the tunnels of Seattle filled with Infected. That's an area straight out of The Last of Us Part II, and today I got a chance to try the proof-of-concept experience. It was short, minimal, and a little rough, but it was also another good example of how Sony is trying to take its tentpole PlayStation franchises and put them into entirely different experiences.

Unfortunately, Sony had a strict "no cameras or videos" policy for this experience, so you'll have to rely on my words and a little video the company showed about the tech behind it.

I entered the experience with three other participants after a quick rundown of the gear we'd use: two of us got shotguns, and two got flashlights (sadly, I was stuck with a flashlight). Both had a bunch of small sensors attached to the front so that they could interact with the environment we entered. The flashlight felt like a real flashlight with some sensors on the end, but the guns were crude tubes with a handle and trigger, though the trigger felt pretty good in my quick test before we got started. There are also sensors on the barrel of the gun that detect a "pump" motion to reload it.

Once we were outfitted, an actor playing a member of an unnamed militia briefed us on the mission: one of our fellow mercenaries had disappeared in the Seattle subways, perhaps kidnapped by the WLF, perhaps taken down by Infected. Our job was to find him... what could go wrong?

Our guide directed me and the other flashlight holder to start lighting up the subway station, which was created by three giant screens surrounding us. The walls of the room were made of LED panels, and the sensors on the flashlights interacted with them to track my movement. I needed to be pretty close to the screens for them to recognize my flashlight, but it was pretty cool to light up a virtual environment in real time.

Then, of course, a Clicker scream put the group on high alert, and given that it came from a specific location, we all swung our flashlights in that direction to identify the threat. Just as in the game, the disgusting Infected creature shambled closer to us, let out another scream and came charging forward, at which point the shotgunners blasted away with abandon. That noise brought more Infected charging into the space; I would light them up with the flashlight and my partner would shoot them down.

Things calmed down momentarily; then a massive subway car started sliding out of its precarious place, which triggered one of the demo's coolest effects. The floor was rigged for haptic feedback, and while we had felt it rumble at various disturbances, this was by far the biggest impact. The combination of visuals, audio and haptics made it feel, well, immersive. I certainly didn't forget I was in a demo, but it was cool nonetheless. Beyond the floor haptics, Sony says there are even scents pumped into the room to add to the atmosphere, but I wasn't able to detect anything myself.

Then we got the obligatory cameo from The Last of Us Part II co-protagonist Ellie and her companion Dina as they scrambled away from Infected who were chasing them down. One knocked Ellie down and started ripping at her throat until Dina caught up and pulled it off her, at which point they sprinted away. Unfortunately, the disturbance sent a massive swarm of monsters our way, which kicked off the big battle of the experience. I started illuminating the hordes and my companion blasted them down, but then dozens started overwhelming the screens and the screams grew more and more intense until everything cut to black as our crew was overrun. That's that!

I'm not judging the experience too harshly, because Sony was clear, both in its press conference and before we tried it, that this is a very early proof of concept. The main thing that pulled me out of it was that the space we were in is static: there's no way to run away or move beyond the boundaries of what we were presented with. And, as I mentioned, you needed to be relatively close to the "walls" for them to recognize the flashlight or gun, which meant that if you backed up to take in the scope of the space, your gear might not work.

The other barrier to it being truly scary or more immersive is that I couldn't ignore the fact that the threat was on a screen rather than in the room with me. There's no doubt that having full control in an environment like this would be a wild way to play a game, but it was all just a little too on rails and removed from the space I was in.

I'm trying to track down someone from Sony who can tell me more about the genesis of this idea as well as where they see it going in the future. But Sony and Naughty Dog have already brought The Last of Us to a variety of other media, and this feels like a more high-tech version of what Sony did with Universal Studios when it brought the franchise to the theme parks' Halloween Horror Nights event. Whether this is a one-off curiosity or something we see down the line in a more complete form, though, remains to be seen.

This article originally appeared on Engadget at https://www.engadget.com/gaming/playstation/sonys-immersive-the-last-of-us-experience-at-ces-2025-dropped-me-into-a-subway-filled-with-zombies-140010550.html?src=rss

WWW.ENGADGET.COM

Devices with strong cybersecurity can now apply for a government seal of approval

In summer 2023, the Biden administration announced its plan to certify devices with a logo indicating strong cybersecurity. Now, as Biden navigates his last couple of weeks in office, the White House has launched the US Cyber Trust Mark. The green shield logo will adorn any product that passes accreditation tests established by the US National Institute of Standards and Technology (NIST).

The program will open to companies "soon," allowing them to submit products to an accredited lab for compliance testing. "The US Cyber Trust Mark embodies public-private collaboration," the White House stated in a release. "It connects companies, consumers, and the US government by incentivizing companies to build products securely against established security standards and gives consumers an added measure of assurance through the label that their smart device is cybersafe." Some companies, like Best Buy and Amazon, plan to showcase labeled products so consumers can easily find them.

Steps to get the program up and running have continued over the last year and a half. In March, the Federal Communications Commission (FCC) approved the program in a bipartisan, unanimous vote. Last month, the Commission granted 11 companies conditional approval to act as Cybersecurity Label Administrators.

The White House's original announcement also included plans to create a QR code linking to a database of the products; it's unclear whether this aspect will move forward. The QR code would allow customers to check whether a product was up to date with its cybersecurity checks.

This article originally appeared on Engadget at https://www.engadget.com/cybersecurity/devices-with-strong-cybersecurity-can-now-apply-for-a-government-seal-of-approval-131553198.html?src=rss

WWW.TECHRADAR.COM

Researchers hijack thousands of backdoors thanks to expired domains

Expired domains allowed watchTowr to access and sinkhole thousands of web backdoors.
