
WWW.THEVERGE.COM
Savant's new Smart Budget system lets you control your home's electrical load

At CES this week, Savant Systems announced Savant Smart Budget, a feature of its Smart Power system of modular relays and equipment that integrates with your existing circuit breaker box.

If you're already at the limits of your breaker box's capacity, Smart Budget lets you get around that with automated control of individual circuits. That way, you can add more high-draw connections, like appliances or EV chargers, than your electrical box can supply at once. For instance, you could set it so that power only goes to your EV overnight after you're done using your oven. That sort of control can also be useful if you're using a house battery or running on solar power.

Savant's Smart Budget software. Image: Savant

Savant says its system, which starts at $1,500 and requires installation by a licensed electrician, is more affordable than the alternative of working with your electric utility provider to upgrade to higher-amperage service, which could cost in the tens of thousands of dollars. Those parts fit into most major electrical panels that standardize on 1-inch breaker spacing, company CMO J.C. Murphy tells The Verge, including panels from Schneider, Eaton, GE, ABB, Siemens, and others.

The Smart Budget kit will include two 30-amp single-pole circuit breakers, which Savant calls Power Modules, along with a double-pole 60-amp one and a current tracker for circuits you only want to monitor, according to Murphy. It also includes a Savant Director hub and sensors. The company sells additional Power Modules that cost $120 for dual 20-amp or single-pole 30-amp versions and $240 for a 60-amp double-pole module.
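Savant has not published how Smart Budget decides which circuits get power, so the following is only a minimal sketch of the general idea of load budgeting, not Savant's algorithm. It assumes a fixed amperage budget and a per-circuit priority (both hypothetical parameters): circuits are powered in priority order while the budget allows, and anything that does not fit is deferred, as in the EV-after-oven example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Circuit:
    name: str
    draw_amps: float  # current this load wants to draw right now
    priority: int     # lower number = more important


def allocate(circuits: list[Circuit], budget_amps: float) -> tuple[list[str], list[str]]:
    """Greedy load budgeting (hypothetical policy, not Savant's algorithm).

    Powers circuits in priority order while their combined draw fits within
    budget_amps; any circuit that would exceed the remaining budget is deferred.
    Returns (powered, deferred) lists of circuit names.
    """
    powered: list[str] = []
    deferred: list[str] = []
    remaining = budget_amps
    for c in sorted(circuits, key=lambda c: c.priority):
        if c.draw_amps <= remaining:
            powered.append(c.name)
            remaining -= c.draw_amps
        else:
            deferred.append(c.name)
    return powered, deferred


# Evening: base load and oven are running, so the EV charger has to wait.
evening = [
    Circuit("base_load", 30, 0),
    Circuit("oven", 40, 1),
    Circuit("ev_charger", 40, 2),
]
print(allocate(evening, budget_amps=100))  # (['base_load', 'oven'], ['ev_charger'])

# Overnight: the oven is off, so the charger fits within the same budget.
overnight = [Circuit("base_load", 30, 0), Circuit("ev_charger", 40, 2)]
print(allocate(overnight, budget_amps=100))  # (['base_load', 'ev_charger'], [])
```

Because the loop is greedy, a large deferred load does not block a smaller, lower-priority one that still fits. A real controller would also have to respect breaker ratings and electrical code, which this sketch ignores; the 100 A budget is an arbitrary example value.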


WWW.THEVERGE.COM
Valve will officially let you install SteamOS on other handhelds as soon as this April

SteamOS was always supposed to be bigger than Valve's own Steam Deck, and 2025 is the year it finally expands. Not only will Lenovo ship the first third-party SteamOS handheld this May, Valve has now revealed it will let you install a working copy of SteamOS on other handhelds even sooner than that. Pierre-Loup Griffais, one of the lead designers on the Steam Deck and SteamOS, tells me a beta for other handhelds is slated to ship "after March sometime," and that you might discover the OS "just starts working properly" after that happens!

Griffais and his co-designer Lawrence Yang would not confirm which handhelds might "just start working," though there are some obvious candidates: the company confirmed to us in August that it had been adding support for the Asus ROG Ally's controls. Also, quite a few PC gamers have discovered that Bazzite, a fork of Valve's Steam Deck experience that I loved testing on an Ally X and vastly preferred to Windows, also works wonderfully on the Lenovo Legion Go. There still aren't that many handhelds out there at the end of the day, and I would think Valve would take advantage of work the Linux gaming community has already done on both.

Speaking of Bazzite, Valve seems to be flattered! "We have nothing against it," says Yang. "It's a great community project that delivers a lot of value to people that want a similar experience on devices right now," says Griffais, adding later: "In a lot of ways Bazzite is a good way to kind of get the latest and greatest of what we've been working on, and test it." But he says Bazzite isn't yet in a state where a hardware manufacturer could preload it on a handheld, nor would Valve allow that. While users can freely download and install the SteamOS image onto their own devices, companies aren't allowed to sell it or modify it, and must partner with Valve first.

There are some non-selfish reasons for that. Among other things, Griffais explains that the Lenovo Legion Go S will run the same SteamOS image as the Steam Deck itself, taking advantage of the same software updates and the same precached shaders that let games load and run more smoothly, just with added hardware compatibility tweaks. Valve wants to make sure SteamOS is a single platform, not a fragmented one.

"In general, we just want to make sure we have a good pathway to work together on things like firmware updates and you can get to things like the boot manager and the BIOS and things like that in a semi-standardized fashion, right?" says Griffais, regarding what Valve needs to see in a partnership that would officially ship SteamOS on other devices.

Valve isn't currently partnered with any other companies beyond Lenovo to do that collaboration. Yang tells me the company is not working with GPD on official SteamOS support, despite that manufacturer's claim. Valve's also not promising that whichever Windows handheld you have will necessarily run SteamOS perfectly: in a new blog post, Valve only confirms that a beta will ship before Lenovo's Legion Go S, that it should improve the experience on other devices, and that users can download and test this themselves.

As far as other form factors, like possible SteamOS living room boxes, Valve says you might have a good experience trying that. And partnerships are a possibility there too: "if someone wants to bring that to the market and preload SteamOS on it, we'd be happy to talk to them."

Valve wouldn't tell me anything about the rumors that it's developing its own Steam Controller 2, VR headset with wands, and possibly its own living room box, but did tell me that we might expect more Steam Input-compatible controllers in the future.


TOWARDSAI.NET
Building Large Action Models: Insights from Microsoft

January 7, 2025
Author(s): Jesus Rodriguez
Originally published on Towards AI.

Created using Midjourney

Action execution is one of the key building blocks of agentic workflows. One of the most interesting debates in that area is whether actions are executed by the model itself or by an external coordination layer. The supporters of the former hypothesis have lined up behind a theory known as large action models (LAMs), with projects like Gorilla or Rabbit r1 as key pioneers. However, there are still only a few practical examples of LAM frameworks. Recently, Microsoft Research published one of the most complete papers in this area, outlining a full framework for building LAMs. Microsoft's core idea is to bridge the gap between the language-understanding prowess of LLMs and the need for real-world action execution.

From LLMs to LAMs: A Paradigm Shift

The limitations of traditional LLMs in interacting with and manipulating the physical world necessitate the development of LAMs. While LLMs excel at generating intricate textual responses, their inability to translate understanding into tangible actions restricts their applicability in real-world scenarios.
LAMs address this challenge by extending the expertise of LLMs from language processing to action generation, enabling them to perform actions in both physical and digital environments. This transition signifies a shift from passive language understanding to active task completion, marking a significant milestone in AI development.

Image Credit: Microsoft Research

Key Architectural Components: A Step-by-Step Approach

Microsoft's framework for developing LAMs outlines a systematic process, encompassing crucial stages from inception to deployment. The key architectural components include:

Data Collection and Preparation

This foundational step involves gathering and curating high-quality, action-oriented data for specific use cases. This data includes user queries, environmental context, potential actions, and any other relevant information required to train the LAM effectively. A two-phase data collection approach is adopted:

Task-Plan Collection: This phase focuses on collecting data consisting of tasks and their corresponding plans. Tasks represent user requests expressed in natural language, while plans outline detailed step-by-step procedures designed to fulfill these requests. This data is crucial for training the model to generate effective plans and enhance its high-level reasoning and planning capabilities. Sources for this data include application documentation, online how-to guides like WikiHow, and historical search queries.

Task-Action Collection: This phase converts task-plan data into executable steps. It involves refining tasks and plans to be more concrete and grounded within a specific environment. Action sequences are generated, representing actionable instructions that directly interact with the environment, such as select_text(text="hello") or click(on=Button("20"), how="left", double=False).
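The Task-Action format is essentially a Python-like function call. As a minimal sketch of how an executor might consume such strings safely, the code below parses them with Python's standard ast module instead of eval, so model output is never executed. The Action record, the parse_action helper, and the choice to represent Button("20") as a ("Button", "20") tuple are hypothetical illustrations, not the schema used in Microsoft's paper.

```python
from __future__ import annotations

import ast
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Action:
    """A structured action record (hypothetical schema, not the paper's)."""
    name: str
    args: dict[str, Any] = field(default_factory=dict)


def _to_value(node: ast.expr) -> Any:
    # A nested call such as Button("20") becomes ("Button", "20"): a control
    # reference that an executor would still have to resolve on screen.
    if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
        return (node.func.id, *(ast.literal_eval(a) for a in node.args))
    # Anything else must be a plain literal (str, int, bool, None, ...);
    # literal_eval raises ValueError for names or expressions.
    return ast.literal_eval(node)


def parse_action(text: str) -> Action:
    """Turn 'click(on=Button("20"), how="left")' into an Action.

    ast.parse only builds a syntax tree, so model output is never executed.
    """
    call = ast.parse(text, mode="eval").body
    if not isinstance(call, ast.Call) or not isinstance(call.func, ast.Name):
        raise ValueError(f"not a function-call action: {text!r}")
    if call.args:
        raise ValueError("only keyword arguments are supported")
    return Action(call.func.id, {kw.arg: _to_value(kw.value) for kw in call.keywords})


print(parse_action('select_text(text="hello")'))
# Action(name='select_text', args={'text': 'hello'})
print(parse_action('click(on=Button("20"), how="left", double=False)'))
# Action(name='click', args={'on': ('Button', '20'), 'how': 'left', 'double': False})
```

Restricting actions to keyword-only calls with literal arguments keeps the grammar small enough to validate before anything touches the UI.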
This data provides the necessary granularity for training a LAM to perform reliable and accurate task executions in real-world scenarios.

Image Credit: Microsoft Research

Model Training

This stage involves training or fine-tuning LLMs to perform actions rather than merely generate text. A staged training strategy, consisting of four phases, is employed:

Phase 1: Task-Plan Pretraining. This phase focuses on training the model to generate coherent and logical plans for various tasks, utilizing a dataset of 76,672 task-plan pairs. This pretraining establishes a foundational understanding of task structures, enabling the model to decompose tasks into logical steps.
Phase 2: Learning from Experts. The model learns to execute actions by imitating expert-labeled task-action trajectories. This phase aligns plan generation with actionable steps, teaching the model how to perform actions based on observed UI states and corresponding actions.
Phase 3: Self-Boosting Exploration. This phase encourages the model to explore and handle tasks that even expert demonstrations failed to solve. By interacting with the environment and trying alternative strategies, the model autonomously generates new success cases, promoting diversity and adaptability.
Phase 4: Learning from a Reward Model. This phase incorporates reinforcement learning (RL) principles to optimize decision-making. A reward model is trained on success and failure data to predict the quality of actions. This model is then used to fine-tune the LAM in an offline RL setting, allowing the model to learn from failures and improve action selection without additional environmental interactions.

Image Credit: Microsoft Research

Integration and Grounding

The trained LAM is integrated into an agent framework, enabling interaction with external tools, maintaining memory, and interfacing with the environment. This integration transforms the model into a functional agent capable of making meaningful impacts in the physical world.
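To illustrate this kind of integration, here is a minimal, hypothetical agent loop: at each step the environment is observed, a model picks the next action given the observation and a memory of earlier steps, and an executor applies that action. The FakeEnv class, the stub_lam function standing in for a trained LAM, and the (verb, target) action format are all assumptions made for the sketch, loosely modeled on the observe, infer, execute, and remember cycle the article attributes to UFO's AppAgent; this is not Microsoft's code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Tuple

Action = Tuple[str, str]  # (verb, target control): a hypothetical action format


@dataclass
class FakeEnv:
    """Toy stand-in for an application window (hypothetical)."""
    controls: dict[str, bool] = field(
        default_factory=lambda: {"Bold": False, "Save": False}
    )

    def observe(self) -> dict[str, bool]:
        # Environment data collection: report each UI control and its state.
        return dict(self.controls)

    def execute(self, action: Action) -> bool:
        # Action executor: ground the (verb, target) pair in the toy UI.
        verb, target = action
        if verb == "click" and target in self.controls:
            self.controls[target] = True
            return True
        return False


def stub_lam(goal: list[str], obs: dict[str, bool], memory: list) -> Optional[Action]:
    """Placeholder for LAM inference: click the first goal control not yet on.

    A real model would also condition on `memory`; this stub ignores it.
    """
    for target in goal:
        if not obs.get(target, False):
            return ("click", target)
    return None  # goal reached, nothing left to do


def run_agent(goal: list[str], env: FakeEnv, max_steps: int = 10) -> list:
    """Observe, infer, execute, remember, until done or out of steps."""
    memory: list = []  # (action, succeeded) pairs from earlier steps
    for _ in range(max_steps):
        obs = env.observe()
        action = stub_lam(goal, obs, memory)
        if action is None:
            break
        ok = env.execute(action)
        memory.append((action, ok))
    return memory


print(run_agent(["Bold", "Save"], FakeEnv()))
# [(('click', 'Bold'), True), (('click', 'Save'), True)]
```

The max_steps cap matters: if the executor keeps failing (for example, because the target control does not exist), the stub would otherwise retry the same action forever.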
Microsoft's UFO, a GUI agent for Windows OS interaction, exemplifies this integration. The AppAgent within UFO serves as the operational platform for the LAM.

Evaluation

Rigorous evaluation processes are essential to assess the reliability, robustness, and safety of the LAM before real-world deployment. This evaluation involves testing the model in a variety of scenarios to ensure generalization across different environments and tasks, as well as effective handling of unexpected situations. Both offline and online evaluations are conducted:

Offline Evaluation: The LAM's performance is assessed using an offline dataset in a controlled, static environment. This allows for systematic analysis of task success rates, precision, and recall metrics.
Online Evaluation: The LAM's performance is evaluated in a real-world environment. This involves measuring aspects like task completion accuracy, efficiency, and effectiveness.

Image Credit: Microsoft Research

Key Building Blocks: Essential Features of LAMs

Several key building blocks empower LAMs to perform complex real-world tasks:

Action Generation: The ability to translate user intentions into actionable steps grounded in the environment is a defining feature of LAMs. These actions can manifest as operations on graphical user interfaces (GUIs), API calls for software applications, physical manipulations by robots, or even code generation.
Dynamic Planning and Adaptation: LAMs are capable of decomposing complex tasks into subtasks and dynamically adjusting their plans in response to environmental changes. This adaptive planning ensures robust performance in dynamic, real-world scenarios where unexpected situations are common.
Specialization and Efficiency: LAMs can be tailored for specific domains or tasks, achieving high accuracy and efficiency within their operational scope.
This specialization allows for reduced computational overhead and improved response times compared to general-purpose LLMs.
Agent Systems: Agent systems provide the operational framework for LAMs, equipping them with tools, memory, and feedback mechanisms. This integration allows LAMs to interact with the world and execute actions effectively. UFO's AppAgent, for example, employs components like action executors, memory, and environment data collection to facilitate seamless interaction between the LAM and the Windows OS environment.

The UFO Agent: Grounding LAMs in Windows OS

Microsoft's UFO agent exemplifies the integration and grounding of LAMs in a real-world environment. Key aspects of UFO include:

Architecture: UFO comprises a HostAgent for decomposing user requests into subtasks and an AppAgent for executing these subtasks within specific applications. This hierarchical structure facilitates the handling of complex, cross-application tasks.
AppAgent Structure: The AppAgent, where the LAM resides, consists of:
Environment Data Collection: The agent gathers information about the application environment, including UI elements and their properties, to provide context for the LAM.
LAM Inference Engine: The LAM, serving as the brain of the AppAgent, processes the collected information and infers the necessary actions to fulfill the user request.
Action Executor: This component grounds the LAM's predicted actions, translating them into concrete interactions with the application's UI, such as mouse clicks, keyboard inputs, or API calls.
Memory: The agent maintains a memory of previous actions and plans, providing crucial context for the LAM to make informed and adaptive decisions.

Image Credit: Microsoft Research

Evaluation and Performance: Benchmarking LAMs

Microsoft employs a comprehensive evaluation framework to assess the performance of LAMs in both controlled and real-world environments.
Key metrics include:

Task Success Rate (TSR): This measures the percentage of tasks successfully completed out of the total attempted. It evaluates the agent's ability to accurately and reliably complete tasks.
Task Completion Time: This measures the total time taken to complete a task, from the initial request to the final action. It reflects the efficiency of the LAM and agent system.
Object Accuracy: This measures the accuracy of selecting the correct UI element for each task step. It assesses the agent's ability to interact with the appropriate UI components.
Step Success Rate (SSR): This measures the percentage of individual steps completed successfully within a task. It provides a granular assessment of action execution accuracy.

In online evaluations using Microsoft Word as the target application, LAM achieved a TSR of 71.0%, demonstrating competitive performance compared to baseline models like GPT-4o. Importantly, LAM exhibited superior efficiency, achieving the shortest task completion times and lowest average step latencies. These results underscore the efficacy of Microsoft's framework in building LAMs that are not only accurate but also efficient in real-world applications.

Limitations

Despite the advancements made, LAMs are still in their early stages of development. Key limitations and future research areas include:

Safety Risks: The ability of LAMs to interact with the real world introduces potential safety concerns. Robust mechanisms are needed to ensure that LAMs operate safely and reliably, minimizing the risk of unintended consequences.
Ethical Considerations: The development and deployment of LAMs raise ethical considerations, particularly regarding bias, fairness, and accountability. Future research needs to address these concerns to ensure responsible LAM development and deployment.
Scalability and Adaptability: Scaling LAMs to new domains and tasks can be challenging due to the need for extensive data collection and training.
Developing more efficient training methods and exploring techniques like transfer learning are crucial for enhancing the scalability and adaptability of LAMs.

Conclusion

Microsoft's framework for building LAMs represents a significant advancement in AI, enabling a shift from passive language understanding to active real-world engagement. The framework's comprehensive approach, encompassing data collection, model training, agent integration, and rigorous evaluation, provides a robust foundation for building LAMs. While challenges remain, the potential of LAMs to reshape human-computer interaction and automate complex tasks is considerable. Continued research and development efforts will pave the way for more sophisticated, reliable, and ethically sound LAM applications, bringing us closer to a future where AI seamlessly integrates with our lives, augmenting human capabilities and transforming our interaction with the world around us.

Published via Towards AI

TOWARDSAI.NETBuilding Large Action Models: Insights from MicrosoftBuilding Large Action Models: Insights from Microsoft 0 like January 7, 2025Share this postAuthor(s): Jesus Rodriguez Originally published on Towards AI. Created Using MidjourneyI recently started an AI-focused educational newsletter, that already has over 175,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:TheSequence | Jesus Rodriguez | SubstackThe best source to stay up-to-date with the developments in the machine learning, artificial intelligence, and datathesequence.aiAction execution is one of the key building blocks of agentic workflows. One of the most interesting debates in that are is whether actions are executed by the model itself or by an external coordination layer. The supporters of the former hypothesis have lined up behind a theory known as large action models(LAMs) with projects like Gorilla or Rabbit r1 as key pioneers. However, there are still only a few practical examples of LAM frameworks. Recently, Microsoft Research published one of the most complete papers in this area outlining a complete framework for LAM models. Microsofts core idea is to simply bridge the gap between the language understanding prowess of LLMs and the need for real-world action execution.From LLMs to LAMs: A Paradigm ShiftThe limitations of traditional LLMs in interacting with and manipulating the physical world necessitate the development of LAMs. While LLMs excel at generating intricate textual responses, their inability to translate understanding into tangible actions restricts their applicability in real-world scenarios. 
LAMs address this challenge by extending the expertise of LLMs from language processing to action generation, enabling them to perform actions in both physical and digital environments. This transition signifies a shift from passive language understanding to active task completion, marking a significant milestone in AI development.Image Credit: Microsoft ResearchKey Architectural Components: A Step-by-Step ApproachMicrosofts framework for developing LAMs outlines a systematic process, encompassing crucial stages from inception to deployment. The key architectural components include:Data Collection and PreparationThis foundational step involves gathering and curating high-quality, action-oriented data for specific use cases. This data includes user queries, environmental context, potential actions, and any other relevant information required to train the LAM effectively. A two-phase data collection approach is adopted:Task-Plan CollectionThis phase focuses on collecting data consisting of tasks and their corresponding plans. Tasks represent user requests expressed in natural language, while plans outline detailed step-by-step procedures designed to fulfill these requests. This data is crucial for training the model to generate effective plans and enhance its high-level reasoning and planning capabilities. Sources for this data include application documentation, online how-to guides like WikiHow, and historical search queries.Task-Action CollectionThis phase converts task-plan data into executable steps. It involves refining tasks and plans to be more concrete and grounded within a specific environment. Action sequences are generated, representing actionable instructions that directly interact with the environment, such as select_text(text=hello) or click(on=Button(20), how=left, double=False). 
This data provides the necessary granularity for training a LAM to perform reliable and accurate task executions in real-world scenarios.Image Credit: Microsoft ResearchModel TrainingThis stage involves training or fine-tuning LLMs to perform actions rather than merely generate text. A staged training strategy, consisting of four phases, is employed:Phase 1: Task-Plan Pretraining: This phase focuses on training the model to generate coherent and logical plans for various tasks, utilizing a dataset of 76,672 task-plan pairs. This pretraining establishes a foundational understanding of task structures, enabling the model to decompose tasks into logical steps.Phase 2: Learning from Experts: The model learns to execute actions by imitating expert-labeled task-action trajectories. This phase aligns plan generation with actionable steps, teaching the model how to perform actions based on observed UI states and corresponding actions.Phase 3: Self-Boosting Exploration: This phase encourages the model to explore and handle tasks that even expert demonstrations failed to solve. By interacting with the environment and trying alternative strategies, the model autonomously generates new success cases, promoting diversity and adaptability.Phase 4: Learning from a Reward Model: This phase incorporates reinforcement learning (RL) principles to optimize decision-making. A reward model is trained on success and failure data to predict the quality of actions. This model is then used to fine-tune the LAM in an offline RL setting, allowing the model to learn from failures and improve action selection without additional environmental interactions.Image Credit: Microsoft ResearchIntegration and GroundingThe trained LAM is integrated into an agent framework, enabling interaction with external tools, maintaining memory, and interfacing with the environment. This integration transforms the model into a functional agent capable of making meaningful impacts in the physical world. 
Microsofts UFO, a GUI agent for Windows OS interaction, exemplifies this integration. The AppAgent within UFO serves as the operational platform for the LAM.EvaluationRigorous evaluation processes are essential to assess the reliability, robustness, and safety of the LAM before real-world deployment. This evaluation involves testing the model in a variety of scenarios to ensure generalization across different environments and tasks, as well as effective handling of unexpected situations. Both offline and online evaluations are conducted:Offline Evaluation: The LAMs performance is assessed using an offline dataset in a controlled, static environment. This allows for systematic analysis of task success rates, precision, and recall metrics.Online Evaluation: The LAMs performance is evaluated in a real-world environment. This involves measuring aspects like task completion accuracy, efficiency, and effectiveness.Image Credit: Microsoft ResearchKey Building Blocks: Essential Features of LAMsSeveral key building blocks empower LAMs to perform complex real-world tasks:Action Generation: The ability to translate user intentions into actionable steps grounded in the environment is a defining feature of LAMs. These actions can manifest as operations on graphical user interfaces (GUIs), API calls for software applications, physical manipulations by robots, or even code generation.Dynamic Planning and Adaptation: LAMs are capable of decomposing complex tasks into subtasks and dynamically adjusting their plans in response to environmental changes. This adaptive planning ensures robust performance in dynamic, real-world scenarios where unexpected situations are common.Specialization and Efficiency: LAMs can be tailored for specific domains or tasks, achieving high accuracy and efficiency within their operational scope. 
This specialization allows for reduced computational overhead and improved response times compared to general-purpose LLMs.Agent Systems: Agent systems provide the operational framework for LAMs, equipping them with tools, memory, and feedback mechanisms. This integration allows LAMs to interact with the world and execute actions effectively. UFOs AppAgent, for example, employs components like action executors, memory, and environment data collection to facilitate seamless interaction between the LAM and the Windows OS environment.The UFO Agent: Grounding LAMs in Windows OSMicrosofts UFO agent exemplifies the integration and grounding of LAMs in a real-world environment. Key aspects of UFO include:Architecture: UFO comprises a HostAgent for decomposing user requests into subtasks and an AppAgent for executing these subtasks within specific applications. This hierarchical structure facilitates the handling of complex, cross-application tasks.AppAgent Structure: The AppAgent, where the LAM resides, consists of:Environment Data Collection: The agent gathers information about the application environment, including UI elements and their properties, to provide context for the LAM.LAM Inference Engine: The LAM, serving as the brain of the AppAgent, processes the collected information and infers the necessary actions to fulfill the user request.Action Executor: This component grounds the LAMs predicted actions, translating them into concrete interactions with the applications UI, such as mouse clicks, keyboard inputs, or API calls.Memory: The agent maintains a memory of previous actions and plans, providing crucial context for the LAM to make informed and adaptive decisions.Image Credit: Microsoft ResearchEvaluation and Performance: Benchmarking LAMsMicrosoft employs a comprehensive evaluation framework to assess the performance of LAMs in both controlled and real-world environments. 
Key metrics include:Task Success Rate (TSR): This measures the percentage of tasks successfully completed out of the total attempted. It evaluates the agents ability to accurately and reliably complete tasks.Task Completion Time: This measures the total time taken to complete a task, from the initial request to the final action. It reflects the efficiency of the LAM and agent system.Object Accuracy: This measures the accuracy of selecting the correct UI element for each task step. It assesses the agents ability to interact with the appropriate UI components.Step Success Rate (SSR): This measures the percentage of individual steps completed successfully within a task. It provides a granular assessment of action execution accuracy.In online evaluations using Microsoft Word as the target application, LAM achieved a TSR of 71.0%, demonstrating competitive performance compared to baseline models like GPT-4o. Importantly, LAM exhibited superior efficiency, achieving the shortest task completion times and lowest average step latencies. These results underscore the efficacy of Microsofts framework in building LAMs that are not only accurate but also efficient in real-world applications.LimitationsDespite the advancements made, LAMs are still in their early stages of development. Key limitations and future research areas include:Safety Risks: The ability of LAMs to interact with the real world introduces potential safety concerns. Robust mechanisms are needed to ensure that LAMs operate safely and reliably, minimizing the risk of unintended consequences.Ethical Considerations: The development and deployment of LAMs raise ethical considerations, particularly regarding bias, fairness, and accountability. Future research needs to address these concerns to ensure responsible LAM development and deployment.Scalability and Adaptability: Scaling LAMs to new domains and tasks can be challenging due to the need for extensive data collection and training. 
Developing more efficient training methods and exploring techniques such as transfer learning are crucial for improving the scalability and adaptability of LAMs.

Conclusion

Microsoft's framework for building LAMs represents a significant advance in AI, enabling a shift from passive language understanding to active real-world engagement. Its comprehensive approach, which spans data collection, model training, agent integration, and rigorous evaluation, provides a robust foundation for building LAMs. While challenges remain, LAMs have clear potential to transform human-computer interaction and to automate complex tasks. Continued research and development should pave the way for more sophisticated, reliable, and ethically sound LAM applications, bringing us closer to a future where AI integrates seamlessly into our lives, augmenting human capabilities and transforming how we interact with the world around us.

Published via Towards AI

WWW.IGN.COM
Private Division Games including Tales of the Shire and Kerbal Space Program to Be Distributed by New Label From Annapurna Interactive's Former Staff

In a rather unusual merging of two completely separate stories reported last year, the former staff of Annapurna Interactive are seemingly preparing to take over the portfolio of shuttered indie label Private Division. At least some of the remaining Private Division employees are expected to be laid off in the process.

This is according to a report from Bloomberg, which states that a currently unnamed company staffed by former Annapurna Interactive employees has reached a deal with private equity firm Haveli to take over the distribution of Private Division titles. Bloomberg reports, and IGN can independently confirm, that Haveli is the company that purchased Private Division from Take-Two Interactive last year for an undisclosed amount.

The portfolio includes both current and upcoming Private Division titles, such as Tales of the Shire, which releases March 25. It also includes Project Bloom, a AAA action-adventure game developed by Game Freak that was announced back in 2023 with nothing more than a concept art teaser.

Concept art for Game Freak's Project Bloom, formerly published by Private Division.

As Bloomberg reports, Haveli's purchase of Private Division included not just the portfolio but also the 20 employees who remained with the label following Take-Two-implemented layoffs last spring. Remaining employees have reportedly been told to explore other employment options, with the expectation that at least some of them will be laid off as part of the deal with the unnamed new publisher.

Private Division was formerly Take-Two's publishing label, which the company founded back in 2017. It was intended to support independent games that were smaller than the fare typically supported by the Grand Theft Auto publisher. Over the years, Private Division published titles such as The Outer Worlds, OlliOlli World, and Kerbal Space Program 2, but sales repeatedly fell short of Take-Two's expectations. Early last year, we reported that Take-Two was slowly shuttering operations at Private Division, first by winding down operations at its supported studios and then by selling off the label.

The new owners of Private Division's portfolio are a group of former Annapurna Interactive employees who collectively resigned last year following a leadership dispute at Annapurna. We reported last fall on the messy circumstances, which left Annapurna seeking to restaff an entire publishing team to cover its numerous obligations, and roughly 25 individuals seeking new employment.

Rebekah Valentine is a senior reporter for IGN. You can find her posting on BlueSky @duckvalentine.bsky.social. Got a story tip? Send it to rvalentine@ign.com.

WWW.IGN.COM
Sony Debuts Tech Where Players Can See, Shoot, and Smell Baddies From Games Like The Last of Us

Sony has debuted conceptual technology at CES 2025 that would essentially allow gamers to enter the worlds of PlayStation games such as The Last of Us and see, shoot, and even smell the baddies.

Footage of the Future Immersive Entertainment Concept shows a giant cube that players enter, similar to the multi-person virtual reality experiences currently available. This isn't VR, however: the cube is instead built from incredibly high-definition screens that present the game world around the player.

To boost immersion further, players are also delivered "engaging audio" and even "scent and atmospherics with interactive PlayStation game content." The players in the footage also carried imitation weapons and shot at the screens as clickers appeared, presumably getting a whiff of rotten fungus and other offenses as they did so.

The demo was made using gameplay pulled from The Last of Us but adapted to work for the Future Immersive Entertainment Concept, so, unfortunately for fans, it is not a further look into the beloved world.

Even if it were, this technology is still years away and presumably incredibly expensive, so there are myriad obstacles to actually getting it into the public's hands (or getting the public into it). Sony may showcase it with other franchises in the future too, such as God of War and Horizon.

The Last of Us has otherwise been dormant since 2020, when Part 2 was released, apart from a remake of the first game and a remaster of the second. Another entry may not appear for a while either, as a multiplayer take was recently scrapped and developer Naughty Dog is currently focused on a new sci-fi franchise called Intergalactic: The Heretic Prophet.

Ryan Dinsdale is an IGN freelance reporter. He'll talk about The Witcher all day.

WWW.DENOFGEEK.COM
Squid Game Season 3 Has Three Games to Go: Here's What They Could Be

This article contains spoilers for Squid Game season 2.

Unlike the first season of Squid Game, season 2 ends before the games are truly done. Seong Gi-hun (Lee Jung-jae) tries to end the games early, first through democracy and, when that doesn't work, through rebellion. Despite his best efforts, he and the survivors will likely have to face a few more deadly games when the series returns for its third season.

We don't yet know when this year we'll get to see season 3, but that hasn't stopped fans from theorizing about which games the players could face when Squid Game returns. Based on the number of games in season 1, it's been surmised that there will likely be three more challenges next season.

One of those games is thought to be some version of monkey bars. Fans on Reddit and TikTok have pointed out that the stick figures on the walls of the players' quarters in season 2 look like they are swinging across something. In season 1, tug of war and the glass bridge both forced the losers to fall from a great height, so a deadly version of monkey bars, in which players must either hang on for long periods of time or knock other players off to swing across, isn't that wild a guess.

Another game that can be seen on the walls in the background of season 2 looks an awful lot like chess or checkers. According to fan theories, this version of chess would likely involve the players stepping in as the pieces, with Gi-hun in charge of moving one group across the board and the Front Man (Lee Byung-hun) controlling the other. Since this is Squid Game, however, any players taken off the board would likely be killed. So Gi-hun would likely try to play in a way that keeps as many people alive on both sides as possible, while the Front Man would look to get as many pieces off the board as possible.

The third game is hinted at in the mid-credits scene of season 2, where we see a railroad crossing with a green light and a red light, the infamous Red Light, Green Light doll, and a new boy doll we've never seen before. According to TikToker MidwestMarvelGuy, this could indicate one of two things. The first theory is that this game will include some twisted version of the Trolley Problem, a popular philosophical thought experiment that asks whether a person would intervene to sacrifice one person in order to save many. This could very well be the basis of a punishment for the players who took part in the rebellion, especially Gi-hun.

However, the actual game that's likely at the heart of this potential challenge is called Dong Dong Dong Dae-mun. In this game, two kids form an arch while the other kids form a line and run underneath it as they sing a nursery rhyme of the same name. When the song stops, the arch comes down, and anyone caught in the arms is out. We all know that Squid Game loves to give us creepy nursery rhymes, and that could explain the addition of the boy doll. The two dolls would likely be the gatekeepers as the players run underneath them, with the lights indicating when to stop and when to go.

All of these games sound like they'd be terrifying to play in this scenario, so best of luck to the players if any of these theories come true. Regardless of what games season 3 has in store for Gi-hun and the survivors, we'll be on the edge of our seats, cheering them on.

All seven episodes of Squid Game season 2 are available to stream on Netflix now.

The Belly Bumpers Preview is Now Available for Xbox Insiders!

Xbox Insiders can now join the preview for Belly Bumpers! Use your belly to knock out other players in this online and local party game for 2-8 players. Eat juicy burgers to increase the size of your belly, or force-feed opponents until they burst. Bump it out in a world of food-themed stages.

About Belly Bumpers

Local and Online Multiplayer
Bump your friends out of the arena in this belly-centric party game. Play with 2-8 local players on a single device! Cross-platform online multiplayer is also supported for 2-8 players.

Eat Burgers
Eat burgers to increase the size of your belly and the power of your bumpers. Be careful as you eat a variety of foods, because some have horrible side effects!

Force-Feed Opponents
Force-feed opponents until their bellies burst by slapping them in the face with food!

Food Arenas
Bump it out on over 10 food-themed stages. Each stage has unique interactive food elements, such as stretchy licorice!

Custom Games
Play with only the tastiest foods. Set your own rules, modify bump powers, choose stages, and more in our customizable games.

How to Participate:
1. Sign in on your Xbox Series X|S or Xbox One console and launch the Xbox Insider Hub app (install the Xbox Insider Hub from the Store first if necessary).
2. Navigate to Previews > Belly Bumpers.
3. Select Join.
4. Wait for the registration to complete; you should then be directed to the Store page to install the game.

How to Provide Feedback:
If you experience any issues while playing Belly Bumpers, don't forget to use Report a problem so we can investigate:
1. Hold down the home button on your Xbox controller.
2. Select Report a problem.
3. Select the Games category and the Belly Bumpers subcategory.
4. Fill out the form with the appropriate details to help our investigation.

Other resources:
For more information, follow us on Twitter at @XboxInsider and on this blog for release notes, announcements, and more. And feel free to interact with the community on the Xbox Insider SubReddit.


9TO5MAC.COM
Swift Student Challenge kicks off February 3 for three weeks only

Apple is preparing to kick off its annual Swift Student Challenge again this year, with entries opening in just under a month and staying open for only three weeks. Here are the details.

350 winners will be selected, with 50 invited to three-day Cupertino experience

Apple runs the Swift Student Challenge annually as an opportunity to motivate and recognize the creativity and excellence of student developers.

From Apple's website:

"Apple is proud to support and uplift the next generation of developers, creators, and entrepreneurs with the Swift Student Challenge. The Challenge has given thousands of student developers the opportunity to showcase their creativity and coding capabilities through app playgrounds, and learn real-world skills that they can take into their careers and beyond."

This year, Apple plans to select 350 winners in total whose submissions demonstrate excellence in innovation, creativity, social impact, or inclusivity.

Out of those 350, a smaller group of 50 will earn the title of Distinguished Winner and be invited to spend three days in Cupertino (presumably at WWDC 2025).

The 2025 Swift Student Challenge will begin accepting applications on Monday, February 3, and applications will close three weeks later.

To prepare for the Challenge, Apple is inviting both students and educators to join an upcoming online session where more information about the requirements will be shared, plus tips and inspiration from a former Challenge winner, and more.

Last year's Distinguished Winners got to mingle with Apple CEO Tim Cook at Apple Park before WWDC's kickoff. So if you need additional motivation, a photo op with the CEO himself may be in the cards.

Are you going to participate in Apple's Swift Student Challenge this year? Let us know in the comments.

FTC: We use income earning auto affiliate links.

You're reading 9to5Mac: experts who break news about Apple and its surrounding ecosystem, day after day. Be sure to check out our homepage for all the latest news, and follow 9to5Mac on Twitter, Facebook, and LinkedIn to stay in the loop. Don't know where to start? Check out our exclusive stories, reviews, and how-tos, and subscribe to our YouTube channel.

9TO5MAC.COMSwift Student Challenge kicks off February 3 for three weeks onlyApple is preparing to kick off its annual Swift Student Challenge again this year, with entries opening in just under a month and lasting three weeks only. Here are the details.350 winners will be selected, with 50 invited to three-day Cupertino experienceApple runs the Swift Student Challenge annually as an opportunity to motivate and recognize the creativity and excellence of student developers.From Apples website:Apple is proud to support and uplift the next generation of developers, creators, and entrepreneurs with the SwiftStudentChallenge. The Challenge has given thousands of student developers the opportunity to showcase their creativity and coding capabilities through app playgrounds, and learn real-world skills that they can take into their careers andbeyond.This year, Apple plans to select 350 winners total whose submissions demonstrate excellence in innovation, creativity, social impact, or inclusivity.Out of those 350, a smaller group of 50 will earn the title Distinguished Winners and be invited to three days in Cupertino (presumably at WWDC 2025).The 2025 Swift Student Challenge will begin accepting applications on Monday, February 3 and applications will close three weeks later.To prepare for the Challenge, Apple is inviting both students and educators to join an upcoming online session where more info about the requirements will be shared, plus tips and inspiration from a former Challenge winner and more.Last years Distinguished Winners got to mingle with Apple CEO Tim Cook at Apple Park before WWDCs kickoff. So if you need additional motivation, a photo op with the CEO himself may be in the cards.Are you going to participate in Apples Swift Student Challenge this year? Let us know in the comments.Best Mac accessoriesAdd 9to5Mac to your Google News feed. FTC: We use income earning auto affiliate links. 
More.Youre reading 9to5Mac experts who break news about Apple and its surrounding ecosystem, day after day. Be sure to check out our homepage for all the latest news, and follow 9to5Mac on Twitter, Facebook, and LinkedIn to stay in the loop. Dont know where to start? Check out our exclusive stories, reviews, how-tos, and subscribe to our YouTube channel0 Comentários 0 Compartilhamentos 148 Visualizações -

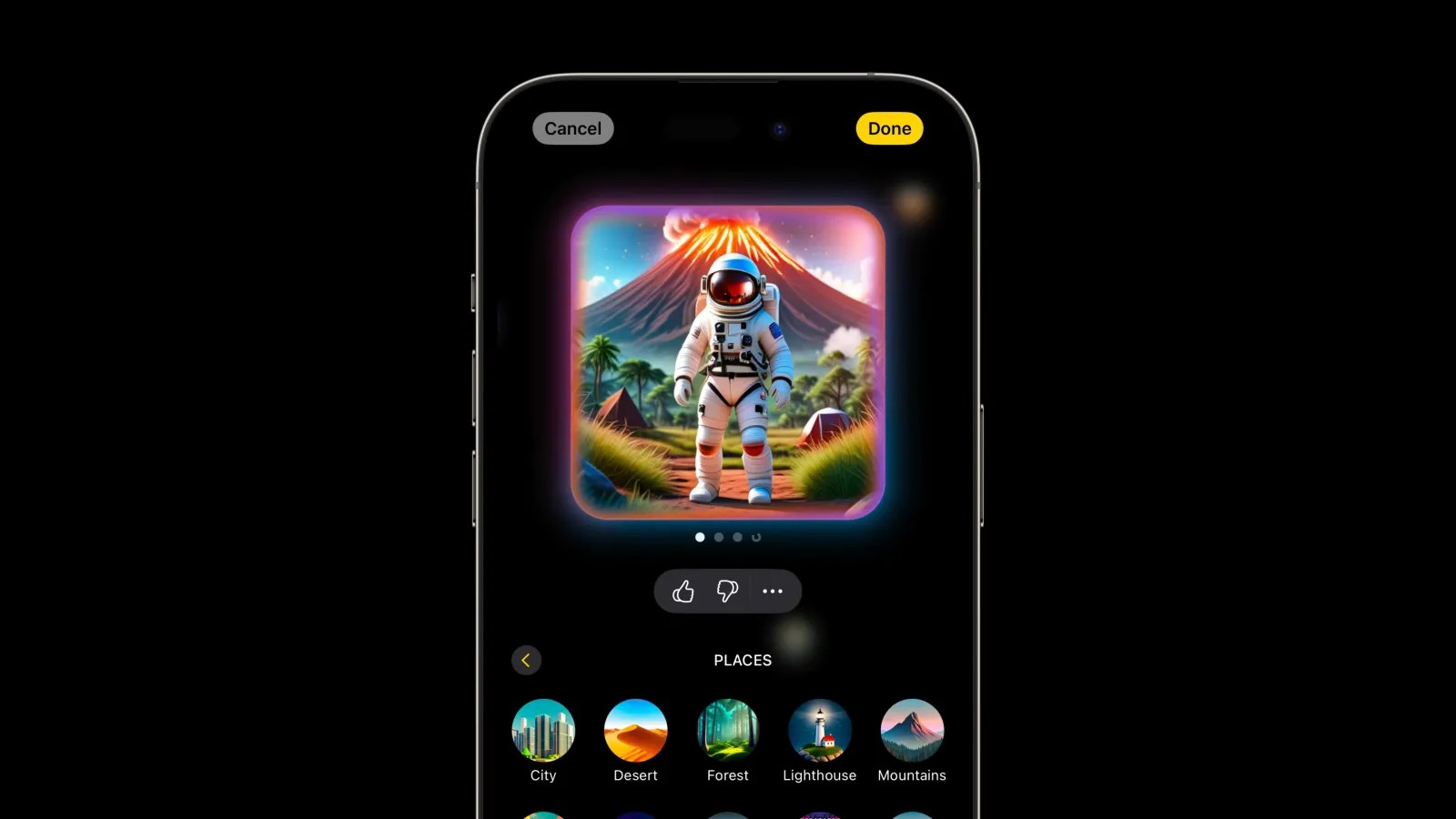

9TO5MAC.COM
You can manually disable certain Apple Intelligence features, here's how

Apple Intelligence's ever-growing feature set has brought additional storage requirements on your device, but it's also come with new controls over which features are enabled. Here's how to manually disable certain Apple Intelligence features on your iPhone and more.

Screen Time includes method to disable three types of Apple Intelligence features

Apple Intelligence is mostly an all-or-nothing feature set. When you enable AI from your iPhone's Settings app, or as part of an iOS setup walkthrough, you're activating nearly the entire Apple Intelligence feature set.

But there's also a way to selectively scale back. Inside Screen Time, Apple has built-in options to disable or enable three different categories of Apple Intelligence:

Image Creation
Writing Tools
ChatGPT Extension

The first category applies to Image Playground, Genmoji, and Image Wand. There's no way to turn off just one of these features, but you can disable all of them with a single control.

Writing Tools refers to the AI tools that compose, proofread, rewrite, or reformat your text.

And ChatGPT Extension is self-explanatory, though it's perhaps an odd addition, since there's already a separate ChatGPT toggle inside Apple Intelligence's own Settings menu.

How to disable certain Apple Intelligence features

To find the above options inside Screen Time, follow these steps:

1. Open the Settings app
2. Go to the Screen Time menu
3. Open Content & Privacy Restrictions
4. Make sure that the green toggle at the top is on
5. Then open Intelligence & Siri to find the AI controls

After you've disabled a given feature, you'll notice that even UI elements referencing it disappear. For example, disabling Image Creation removes the glowing Genmoji icon from the emoji keyboard, and disabling Writing Tools removes its icon from the Notes toolbar and the copy/paste menu.

Note: in my testing, it usually takes a little time or an app force-quit before the relevant AI interface elements actually disappear.

Do you plan to disable any Apple Intelligence features? Let us know in the comments.
