
WWW.WIRED.COM
Global Warming Is Wreaking Havoc on the Planet's Water Cycle
In 2024, natural disasters related to variations in the water cycle caused more than 8,700 deaths and at least $550 billion of economic loss.


WWW.NYTIMES.COM
Meta Goes MAGA Mode + a Big Month in A.I. + HatGPT
"I think this set of changes that the company announced this week is the most important series of policy changes that they have made in the past five years."


WWW.MACWORLD.COM
The best CES 2025 Mac and iPhone accessories you need to see

There are many varied and even crazy products launched or demoed at the giant Consumer Electronics Show in Las Vegas every January. We picked out 8 cool CES announcements every Apple fan will want to buy, and here we ceremoniously select our absolute favorites from the show floor. Trying not to be distracted by AI robots, body scanners, and a spoon that supposedly makes your food taste better, we walked the miles of halls to find the best new Mac- and iPhone-friendly accessory products announced at this year's CES.

Anker 140W Charger

Everyone who buys an iPhone needs to bring their own USB-C wall charger, and while they've gotten smaller and more powerful over the years, they still look pretty ugly and ungainly plugged into your wall. Anker's new compact and powerful four-port 140W USB-C Charger helps restore some of your home or office aesthetics by placing all four of its ports on the underside so that the cables hang down vertically. This should also make the cables and the charger itself less prone to falling out of the power socket if pulled or knocked.

With a maximum 140W output, it can fast-charge PD 3.1 laptops such as the 16-inch MacBook Pro. There are three USB-C ports and one legacy USB-A port for older cables. It also offers a color smart display that shows real-time power output and remaining power for each port. It costs $90/90 and is available now in either silver or gray.

Ugreen Nexode 500W 6-Port GaN Desktop Charger

USB-C wall chargers are small and powerful, but when you need a lot of power you require a desktop charger with a proper power supply. In the past we have reviewed a desktop charger with a maximum power output of 300W, but Ugreen has now trumped that and then some with its announcement of the world's first 500W GaN charger at CES.

The top USB-C port can output at a mighty 240W (PD 3.1), again a first in the hands of Macworld reviewers. It can charge up to six devices simultaneously, and Ugreen claims that this charger is powerful enough even for power-hungry devices such as e-bikes.

Plugable UD-7400PD Docking Station

It might seem greedy to connect five external displays to your MacBook, but some Mac users might actually need it. For those people, it's now possible: Plugable has released the world's first five-screen DisplayLink docking station for your MacBook.

Of course, you don't have to connect five displays; the dock is suitable for two or three extra independent screens as well, with a mix of ports that gives flexible solutions for video, data, and power. The Plugable UD-7400PD has two HDMI ports and three 10Gbps USB-C ports that can handle video connections, as well as another 30W USB-C port, two 10Gbps USB-A ports, 2.5Gb Ethernet, and an audio socket.

As it uses DisplayLink software, even the usually display-shy plain (non-Pro/Max) M1 and M2 MacBooks can take over your working space with quintuple monitors. Read more on our tests of the best DisplayLink docks and how this third-party software solves some big Apple display limitations.

It is expected to become available in March at a cost of around $235.

Revodok Max 2131 Thunderbolt 5 Docking Station

Apple is first to market again with mainstream computers, the M4 Mac mini and M4 Pro/Max MacBooks, that boast the latest version of the super-fast Thunderbolt 5 data, video, and power connector. But to make full use of Thunderbolt 5's bidirectional data-transfer speeds of up to 80Gbps and 120Gbps display bandwidth, you need some devices that support it, and Ugreen showed off its forthcoming Thunderbolt 5 docking station at CES.

The Revodok Max 2131 Thunderbolt 5 Docking Station supports single-screen 8K resolution at 60Hz or dual-screen 4K at 144Hz. It features 13 ports including three Thunderbolt 5 ports: one upstream to the computer and two downstream for extra devices. It's not coming till later this year, so if you can't wait, check out the best Thunderbolt 4 docks for Mac.

OWC Thunderbolt 5 Hub

If you don't want a full docking station but still need a bunch of 80/120Gbps Thunderbolt 5 ports, OWC's newest hub has four speedy Thunderbolt 5 ports and an extra 10Gbps USB-C port.

Thunderbolt 5 is up to two times faster than Thunderbolt 4 and USB4 and can provide an incredible 120Gbps for higher display bandwidth needs. Even if your computer doesn't yet have Thunderbolt 5, the technology is backwards compatible with Thunderbolt 4, Thunderbolt 3, USB4, and USB-C devices.

The OWC Thunderbolt 5 Hub delivers up to 140W to fast-charge any MacBook and is available now for $190.

Satechi M4 Mac mini Hub

Apple's products are notoriously difficult to internally customize, and when the Mac mini was found to be lacking front-facing ports, along came Satechi's matching Mac mini Stand and Hub to add a bunch of ports where users demanded them. Apple rectified its front-port oversight when it released its latest M4 Mac mini with front-facing USB-C ports, but Satechi has created a new matching hub that adds back three legacy USB-A ports (two at 10Gbps and one at 480Mbps) and an SD card reader.

This time Satechi has fixed a different problem: the M4 hub features a cutout that enables easy access to the mini's power switch. It also raises the mini slightly with bottom heat-dissipating vents and a recessed top area for optimal cooling. The new Satechi M4 Stand & Hub also includes an SSD enclosure supporting up to 4TB of NVMe storage, so you can add fast storage without having an external drive hogging one of the Mac's ports. The Satechi Mac Mini M4 Stand & Hub with SSD Enclosure will be available for $100 in February.

ESR HaloLock MagMouse Wireless Mouse

We have to admit, we weren't expecting to see a mouse that can magnetically clamp to the top of your laptop at CES. The ESR MagMouse does just that. Sadly, its magnetism doesn't extend to wireless charging, but it does boast a handy built-in retractable USB-C cable. Take a look at our other recommendations in our best Mac mice roundup.

ESR expects the MagMouse to ship in April.

BoostCharge Power Bank 20K with Integrated Cable

Colorful? Check. Powerful? Check. Portable? Check. This new 20000mAh power bank from Belkin can recharge your MacBook and/or your phone and other USB-C or Thunderbolt devices without needing to bring along a separate cable, as it comes with its own built-in cable that tidily tucks away when not in use.

There are additional USB-C and USB-A ports that allow for charging up to three devices simultaneously. The BoostCharge Power Bank 20K can charge at 30W, which is perfect for the newest iPhones. It's a little slow (although still usable) for a MacBook Pro but fine for a MacBook Air.

It will be available in April in a range of colors (black, white, blue, and pink) and has its casing made with 90 percent recycled plastics. See our roundup of the best MacBook power banks for more options.

Torras PolarCircle Qi2 Mag-Safe Charger

Torras makes some great iPhone cases, but we were particularly taken with its PolarCircle Qi2 Mag-Safe Charger, which uses advanced TEC semiconductor cooling to maintain optimal temperatures during the charging process, preventing overheating and ensuring efficient charging. It can reduce the charging temperature by up to 77°F.

As a charger, it uses Qi2 for fast 15W wireless charging. Just like the Torras phone cases, this cooling wireless charger features a 360-degree rotating stand so you can catch up on your streaming or make a FaceTime call while efficiently charging your iPhone. See Macworld's recommended best MagSafe chargers for more options while you wait for this one to arrive.

Romoss Solar Power Bank 30000mAh 65W Solar Charger

Keeping a phone cool should make charging more efficient, but there are times when the heat of the Sun can help, too. This power bank from Romoss features six removable solar panels for self-charging in the wild. The power bank has an impressive 30000mAh capacity yet is lightweight and portable: it weighs 22oz (628g). The solar panels weigh 17.4oz (494g).

Belkin PowerGrip

iPhone users who crave a retro tech touch can add this cute but multifunctional gadget that turns your phone into an old-school digital camera. Enjoy the thrill of pushing a physical button on the Belkin PowerGrip to take photos and hold it like an old-school point-and-click camera.

The PowerGrip is much more than a camera gimmick, however, as it includes a high-capacity (10000mAh) power bank with 7.5W MagSafe-compatible wireless charging, USB-C output ports, a retractable USB-C charging cable, and an LED screen to show battery percentage. As if that isn't fun enough, the PowerGrip comes in five colors (Powder Blue, Sand, Yellow, Pepper, and Lavender) and will be available in May.

Moft Trackable Wallet Stand

Moft makes some great foldable wallet stands and has now gone one better with a 1.1mm, 2.3oz slim MagSafe wallet stand that includes Apple's Find My tracker functionality. The 80mAh battery is rechargeable. My colleague Roman Loyola described it as an "origami AirTag." It will be available in May for $49.99. Check out Macworld's collection of the best iPhone 16 cases.


WWW.MACWORLD.COM
At CES 2025, everyone wants to be just like Apple

In the world of sports, it's sometimes easier to impress by not playing. When you're in the game, everything you do is judged and all your mistakes happen under a harsh spotlight. But get yourself dropped behind some other poor bloke now getting picked apart for their mistakes, and you'll find that all is forgiven or, better yet, forgotten. "Let's get David Price back in the game," they'll say. "I'm sure we were wrong about him being physically incompetent and afraid of the ball."

I often wonder if some variant of this mindset is what leads Apple to snub trade shows and conferences when it has the resources to attend every event on the planet if it wants. Lots of new tech products made their debut at CES in Las Vegas this month, for example, but there were none at all by the industry's highest-profile brand. And yet, in their absence, Apple's products picked up some of the most positive coverage all the same.

Take poor old Dell, which at CES announced a major rebranding for its PC lines. Instead of XPS, Inspiron, and Latitude, the company's machines will be branded as Dell, Dell Pro, and Dell Pro Max: terms that are familiar to customers because they're inspired, shall we charitably say, by the iPhone. The result? Mockery for Dell and a bunch of headlines that plug Apple's products and make the Cupertino company sound like a trendsetter.

I rather feel for Dell, which clearly got this one wrong and doesn't seem to know how to make it right. Apple has a tendency to do very difficult and complicated things and make them look easy and simple. When rivals follow suit they trip over their shoelaces by adding, in this case, needlessly complicating sub-brands like Plus and Premium. In any case, simplicity isn't about your choice of words; it's about the fundamental structure of your product portfolio. (Incidentally, Apple doesn't always get these things right either. But of course, its mistakes are forgotten when someone else is in the spotlight.)

Nvidia, meanwhile, was doing its own Apple impersonation elsewhere in Las Vegas: the firm's Project Digits supercomputer had barely landed on the CES show floor before it was getting described as a Mac mini clone, and not without good reason. It's far more powerful (and correspondingly far more expensive) than the Mac mini, but the palmtop design is extremely familiar, as is the focus on AI. And it would be hard to imagine that the words "Like a Mac mini, except..." were not uttered at some point in its development cycle.

Even some of the more positive headlines were marred, for Apple's rivals, by invidious comparisons with the absent giant. Asus got pretty much everything right with the Zenbook A14, but suffered the indignity of seeing this heralded as little more than a MacBook Air competitor. The company, in fact, played up to the comparison, joking that it had considered the name Zenbook Air. But I always regard this strategy, which in movies and TV shows is known as lampshading, as cheating. Joking that your design is unoriginal doesn't change the fact that it is.

Whether or not they're prepared to admit it, Apple's rivals spent much of CES 2025 trying to copy its moves. Instead of using its absence from the show as an opportunity to present something different, they delivered more of the same, only with PC chips, worse software, and disastrous branding. Apple, meanwhile, got a bunch of uncritical PR without doing anything.

It's often said that Apple doesn't innovate out of thin air. Rather, it bides its time and lets other companies build up a market before swooping in at the crucial moment and grabbing the revenue. I would agree that the company is rarely first to enter a market. But what it often does is create the first iconic product in a market, the one which defines what that market represents in the popular consciousness and, all too often, in the minds of its competitors too. After the launch of an iPhone or a MacBook Air, rivals struggle to envision an alternative that doesn't begin with Apple's offering and then iterate from that.

The irony is that if the companies really wanted to be like Apple, the best thing would be not to turn up at CES at all. But when the star player is away, it's simply too tempting to rush onto the field and do your best to impress the fans.


WWW.COMPUTERWORLD.COMMeta puts the Dead Internet Theory into practiceMetas mission statementis to build the future of human connection and the technology that makes it possible.According to Meta, the future of human connection is basically humans connecting with AI.The company has already rolled out and is working to radically expand tools that enable real users to create fake users on the platform on a massive scale. Meta is hoping to convince its 3 billion users that chatting with, commenting on the posts of, and generally interacting with software that pretends to be human is a normal and desirable thing to do.Meta treats the dystopian Dead Internet Theory the belief that most online content, traffic, and user interactions are generated by AI and bots rather than humans as a business plan instead of a toxic trend to be opposed.In the old days, when Meta was called Facebook, the company wrapped every new initiative in the warm metaphorical blanket of human connectionconnecting people to each other.Now, it appears Meta wants users to engage with anyone or anythingreal or fake doesnt matter, as long as theyre engaging, which is to say spending time on the platforms and money on the advertised products and services.In other words, Meta has so many users that the only way to continue its previous rapid growth is to build users out of AI. The good news is that Metas Dead Internet projects are not going well.Metas aim to get people talking and interacting with non-human AI has taken several forms.The Fake Celebrities ProjectIn September 2023, Meta launched AI chatbots featuring celebrity likenesses, including Kendall Jenner, MrBeast, Snoop Dogg, Charli DAmelio, and Paris Hilton.Users largely rejected and ignored the chatbots, and Meta ended the program.The Fake Influencer Engagement ProgramMeta is testing a program called Creator AI,which enables influencers to create AI-generated bot versions of themselves. 
These bots would be designed to look, act, sound, and write like the influencers who made them, and would be trained on the wording of their posts.The influencer bots would engage in interactive direct messages and respond to comments on posts, fueling the unhealthy parasocial relationships millions already have with celebrities and influencers on Meta platforms. The other benefit is that the influencers could outsource fan engagement to a bot.(Here at meta, we engage with your fans so you dont have to!)And Meta has even started testing a new feature that automatically adds AI images of users (based on their profile pics) privately into their Instagram feeds, presumably to drive demand and acclimate the public to the idea of turning themselves into AI.The Fake Users InitiativeMeta launched its AI Studio in the United States in July 2024; it empowers users without AI skills to create user accounts of invented fake users, complete with profile pics, voices, and personalities.The idea is that these computer-generated users have profiles that exist just like human-user profiles, which can interact with real people on Instagram, Messenger, WhatsApp, and the web.Meta plans to enable these personas to do the same on Metas metaverse virtual reality platforms.A senior Meta executive recently defended the AI-powered fake user concept. We expect these AIs to actually, over time, exist on our platforms, kind of in the same way that accounts do, Connor Hayes, vice president of product for generative AI at Meta, said in aFinancial Timesarticle. Theyll have bios and profile pictures and be able to generate and share content powered by AI on the platform.... Thats where we see all of this going.Hayes added that while hundreds of thousands of such characters have already been created by users, most have been kept private (defeating their purpose of driving engagement).The Fake Experiences FollyMeta also plans to release its text-to-video generation software to content creators. 
This will essentially enable users to place themselves into AI-generated videos, where they can be depicted doing things they never did in places they've never been.

The Fake Facebook Folks Fiasco

About a year ago, Meta created and managed 28 fake-user accounts on Facebook and Instagram. The profiles contained bios and AI-generated profile pictures and posted AI-generated content (responsibly labeled as both AI and managed by Meta) on which any user could comment. Users could also chat with the bots.

Recently, the public started noticing these accounts and didn't like what they saw. Social media mobs shamed Meta into deleting the accounts.

One strain of criticism was that the fake users simulated human stereotypes, which were found to not represent the communities they were pretending to be part of.

Also, as with most AI-generated content, the output was often dull, generic, corporate-sounding, wrong, and/or offensive. It didn't get much engagement, which, for Meta, was the entire purpose for the effort. (Another criticism was that users couldn't block the account; Meta blamed a bug for the problem.)

AI slop is a problem; Meta sees an opportunity

All this intentional AI fakery takes place on platforms where the biggest and most harmful quality is arguably bottomless pools of spammy AI slop generated by users without content-creation help from Meta.

The genre uses bad AI-generated, often-bizarre images to elicit a knee-jerk emotional reaction and engagement.

In Facebook posts, these engagement bait pictures are accompanied by strange, often nonsensical, and manipulative text elements. The more successful posts have religious, military, political, or general pathos themes (sad, suffering AI children, for example).

The posts often include weird words. Posters almost always hashtag celebrity names. Many contain information about unrelated topics, like cars.
Many such posts ask, "Why don't pictures like this ever trend?"

These bizarre posts, anchored in bad AI, bad taste, and bad faith, are rife on Facebook.

You can block AI slop profiles. But they just keep coming; believe me, I tried. Blocking, reporting, criticizing, and ignoring have zero impact on the constant appearance of these posts, as far as I can tell.

And the apparent reason is that Meta's algorithm is rewarding them.

Meta is not only failing to stop these posts, but is essentially paying the content creators to make them and using its algorithms to boost them. Spammy AI slop falls perfectly into line with Meta's apparent conclusion that any garbage is good if it drives engagement.

The AI content crisis

AI content, in general, is a crisis online for a very simple reason: Social media users, content creators, would-be influencers, advertisers, and marketers don't quite seem to realize that AI-generated content, for lack of a better term, sucks.

AI-generated text, for example, uses repetitive, generic language that doesn't flow and doesn't have a voice. Word choices tend to be off, and the AI usually can't tell the difference between what's important and what's irrelevant.

AI-generated images are especially problematic. According to multiple studies, people feel more negatively about AI-generated images than real photos.

Social networks are filled with AI-generated images. Billions have been created using text-to-image AI tools since 2022, many posted online.

To quantify: A year ago, some 71% of images shared on social media in the US had been AI-generated. In Canada, that figure was 77%. In addition, 26% of marketers were using AI to create marketing images, and that percentage rose to 39% for marketers posting on social.

According to the 2024 Imperva Bad Bot Report by Thales, bots accounted for 49.6% of all global internet traffic in 2023. One-third (32%) of internet traffic was attributed to malicious bots.
And 18% came from good bots (search engine crawlers, for example).

In 2023, only 50.4% of internet traffic was human activity. Now, in the first month of 2025, human traffic is definitely a minority of all internet activity.

The Dead Internet Theory people are not only conspiracy theorists, they're also ahead of the curve. If the theory holds that a majority of online activity is by AI, bots, and agents, then the theory is now objectively true.

(The theory offers a host of reasons for that outcome that have not been proven true. Proponents believe bots and AI are intentionally created to manipulate algorithms, boost search results, and control public perception.)

Meta cheerfully boasts about its intentional creation of AI bots, but mainly to drive engagement.

Meta's fake-user initiatives remind me of its failed metaverse programs.

As with the Dead Internet Theory, the metaverse concept was a dystopian nightmare dreamed up by novelists as a warning to mankind. The Dead Internet Theory is a conspiracy theory that attempts to explain how the internet went horribly wrong.

But to Meta, the metaverse and Dead Internet Theory are product roadmaps.

Meta is proving itself to be an anti-human company that's working hard to get people away from the real world and trapped for many hours each day, going nowhere, doing nothing, and interacting with no one.

Meta will fail. The public will reject its dystopian goals.

But the rest of us should learn from their bad example. What the public really wants (something Meta used to understand) is human connection: people connecting to other people. Advertising, articles, posts, comments, and chats made by people rather than bots are becoming harder to find and, as such, also more valuable. Because a connection with nobody is no connection at all.


APPLEINSIDER.COM

Twenty years of the Mac mini, the little Mac that could

It's once again a fan favorite with its new M4 version, but 20 years after Steve Jobs launched the Mac mini, it has had an extraordinary life of rave reviews and criticism.

[Image: Apple's website announces the Mac mini in 2005]

Steve Jobs introduced the Mac mini with the explicit aim of attracting Windows switchers. Yet it never quite seemed to do what Apple wanted, and instead became a cult favorite Mac, even when the company has tried to forget about it.

Jobs unveiled the Mac mini on January 10, 2005. Apple didn't issue a press release until the following day, though, and the Mac mini itself didn't ship until January 22.


GAMINGBOLT.COM

Like a Dragon: Pirate Yakuza in Hawaii Gameplay Showcases Crew Bonding, Pirate Coliseum, and More

The latest Like a Dragon Direct focused on Like a Dragon: Pirate Yakuza in Hawaii, starring Majima Goro on a sea-faring quest for treasure. As a spin-off of Infinite Wealth, it's an action-adventure title with beat 'em up mechanics and naval combat. Check out the Direct below to learn more about its story, combat mechanics, and more.

Majima wields two Styles, Mad Dog and Sea Dog, the latter employing dual cutlasses and pistols. Players can jump for the first time in the franchise, launching enemies and diving into them from above. You can switch between both Styles seamlessly and even harness Dark Instruments for powerful summons.

Then there's naval combat, where players can recruit and assign over 100 crew members to their ship to battle hostile vessels. Bonding with them will improve their stats, while equipping different weapons, from flamethrowers to lasers, is key to survival (and you can even man certain weapons). The sea also hosts dangerous monsters that have yet to be fully revealed.

If all this wasn't enough, there's Madlantis, where the Pirate Coliseum awaits. The Direct confirms that four battle types await, including naval combat, for rewards.

Like a Dragon: Pirate Yakuza in Hawaii launches on February 21st for Xbox One, Xbox Series X/S, PS4, PS5, and PC. Unlike Infinite Wealth, it will add New Game Plus for free post-launch.


GAMINGBOLT.COM

Monster Hunter Wilds Will Need Players to Approach Hunts With a Nature-Focused Perspective

Capcom has revealed more details about its design intentions behind the changes to the classical quest structure of Monster Hunter games in the upcoming Monster Hunter Wilds. In an interview with IGN, Monster Hunter Wilds director Yuya Tokuda talks about how the more open structure of the game allows players to face monsters from a more nature-focused perspective.

"The design (in past Monster Hunter games) where you participate in one quest at a time does provide the benefit of a game that you can always play in a stable environment, but you can't help but feel this to be unnatural in ways for a game that depicts ecosystems in the wild," said Tokuda. "It seems like a given that you'd face different monsters and winds from one day to the next, considering that nature-focused perspective."

Tokuda also talks about how the dynamic weather system and open design of Monster Hunter Wilds could lead to more dramatic moments for players as they face down the game's roster of monsters.

"There are only a few dramatic scenes and events you get to encounter and experience in everyday life over the course of a year," said executive director and art director Kaname Fujioka.

"You may only be able to see an evening's beauty for a few dozen minutes over the course of a day, but those moments leave an incredible impression on you," Fujioka continued. "The team has constantly shared an awareness of how important it is to have these kinds of dramatic moments and experiences properly playing out before you in a game while also making sure that they don't appear unnatural. There's the risk of nothing happening to a player within a large open world or with changes in circumstances like seamless shifts in weather. We discussed this concern many times during development, and I was quite aware as a designer to be compacting and connecting what we can do to make things more fun, creating dramatic twists that constantly play out before you."

Monster Hunter Wilds is currently in development for PC, PS5 and Xbox Series X/S. The game got an open beta test back in November, where players got to play around with some of its new open-world systems and new monsters. While the open beta didn't feature too much in the way of content, players got to try their hand at taking on some of the game's earlier monsters, including Doshaguma and Rey Dau.

Capcom has recently announced a second open beta test for Monster Hunter Wilds, which is slated to take place over the course of two weekends in February: February 6 to February 9, and February 13 to February 16. This second beta test will not feature the improvements that have been made to the game's performance, and is meant to be a way for players that missed the first beta to get some hands-on time with the title before its February 28 release. The upcoming beta will also include a returning monster for players to hunt: the Gypceros.

For more details about how the game is expected to run on consoles, here is information about the title's graphics options on consoles.


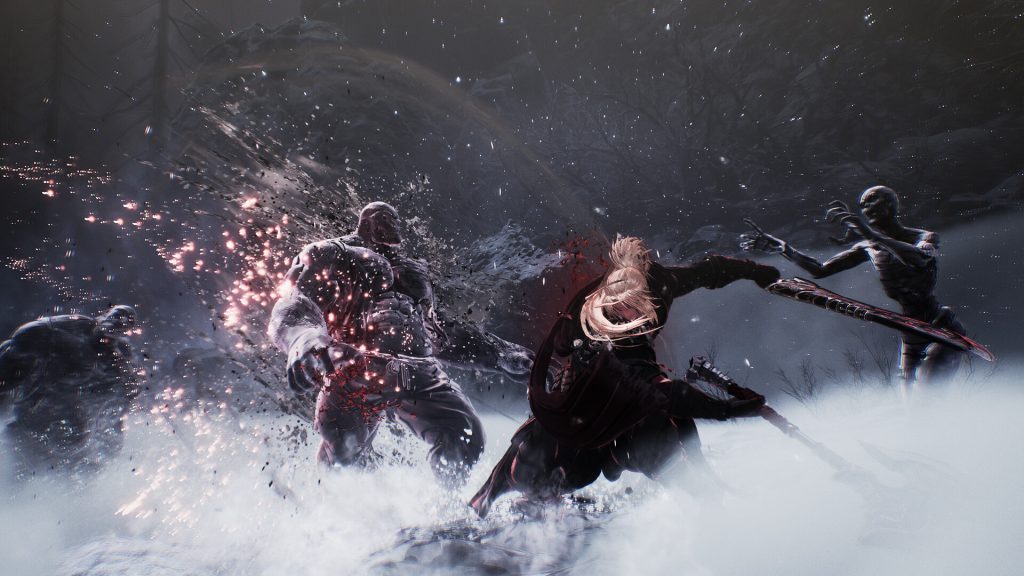

GAMINGBOLT.COM

The First Berserker: Khazan Interview - Combat, Difficulty, Art Style, and More

Of all the Soulslikes coming up on the horizon, few of them have captured attention like Neople and Nexon's action RPG The First Berserker: Khazan, a grimdark take on the Dungeon and Fighter universe, and a game that has looked consistently promising with its pre-release showings. From the promise of crunching and challenging combat to its striking, stylized art style, to the strong impression it has made with pre-release previews, The First Berserker: Khazan has plenty going for it that makes it one of 2025's more prominent releases. Recently, we had the chance to speak with its developers about its combat, approach to difficulty, level design, and more. You can read the full interview below.

The First Berserker: Khazan's stylized art style is one of its most instantly eye-catching elements. How did you land on this look for the game?

As a longtime console game enthusiast, particularly in action RPGs, I have long envisioned a game with a style like Khazan's. Since childhood, I've been a fan of this genre, and that passion deeply influenced my approach to developing this game. Our goal of evolving the Dungeon and Fighter (DNF) Universe and expanding it globally with a console launch inspired us to create a fresh new art style for the IP. While many hardcore action RPGs and Soulsborne games lean toward surreal, complex art with high-end design and graphics, we aim to bring an animated and anime-inspired style to Khazan. This choice stays true to the DNF aesthetic while also refreshing the look for Western audiences who may be less familiar with the universe.
We aim to achieve a cinematic quality for Khazan that is often found in mature-audience animation, focusing on two primary elements: bold, gritty, and intense battle scenes, paired with high-quality cartoon rendering to immerse players in a dark, atmospheric setting. We also believed that this animation style would better express the ultimate combat feeling we pursue and the action scenes that Khazan unfolds. Through this approach, we hope to engage a global audience by delivering intense battles with detailed, cel-based animation.

How heavily will The First Berserker: Khazan emphasize its story and storytelling?

The First Berserker: Khazan is a work fundamentally structured around its story. We designed all content, systems, and gameplay to revolve around the narrative. Furthermore, the protagonist Khazan is a hero who becomes the ancestor of the Slayer class, one of DFO's signature classes. He is one of the most crucial figures in the DFO universe and its grand narrative. In the original Dungeon and Fighter, Khazan is exiled and killed, but in The First Berserker: Khazan, the whole story is reconstructed as Khazan dramatically survives the expulsion process. Through this, players familiar with the original can experience a new aspect of the universe, while new players who don't know the original can enjoy the gameplay and story without any prior knowledge. Starting with The First Berserker: Khazan, the Dungeon and Fighter Universe will continue to expand.

It looks like the game will adopt a level-based structure. With that in mind, what can you tell us about how heavily it will emphasize exploration? Should players expect anything specific in how they traverse the world?

Yes, it leans towards a closed-level design. While we chose a linear structure, we made various efforts to incorporate the joy of adventure and exploration throughout the game.
Speaking just about level and world play, we've placed various routes and hidden elements, and sometimes there are powerful enemies or traps. Through these elements, players can obtain important set items, crafting recipes, and various power sources for Khazan. Moreover, we wanted Khazan's journey to be more than just a revenge story; we wanted players to uncover different aspects of past events through this adventure and exploration. In particular, players can obtain key items related to the director's true ending among various possible endings.

What can you tell us about your approach to designing enemies and bosses? How much variety should players expect from the game on this front?

We consider adventure, challenge, and a sense of accomplishment as the core elements of gameplay. While challenges inevitably involve failure, we believe that failure should lead to another attempt, and it is important to ensure that the process remains enjoyable. Therefore, we have provided clear feedback at every stage of decision-making during challenges. Our goal is to lead players to face various enemies, learn their patterns, resist their attacks, and ultimately grow both the player and the character, Khazan.

Additionally, we designed a game in which players can take different moves in terms of strategy and tried to provide clear feedback or accomplishment for each attempt. Some attacks must be better defeated with guards, yet in others, players will defend certain attacks more effectively with dodge. They can sometimes even dodge an attack simply by going past, although the direction of walking is essential in this case.
To sum up, combat in Khazan is meant to allow players to defeat each attack and defense with their own techniques. Players are able to mix up different weapons, items and skills to define their own play style to defeat the boss monster once they reach a level where they can see and respond to the enemy patterns. We believe there will be as many defeat strategies as the number of players.

Combat content such as bosses and enemies, as well as elements like level design, were created to allow for progressive new challenges that match the various states and levels of both the player and Khazan. The main mission bosses all have strong personalities and various ways to defeat them. Beyond encountering different bosses, players can discover new aspects each time they challenge a boss.

Furthermore, while you may encounter bosses in sub-missions that are similar to some main bosses, we designed these encounters to provide new experiences and challenges from a gameplay perspective.

How expansive will The First Berserker: Khazan's progression and customization systems be? How heavily will the game emphasize build diversity?

The weapons in The First Berserker: Khazan are comparable to that of characters in other games. Dual Wields, Greatsword, and Spear can be simply seen as three different play styles, but they are more distinguishable from one another in terms of attack power, speed, range, pace, as well as attack and defense techniques. The skill tree system once again details them down furthermore. We believe our players have unique preferences when it comes to gameplay, so the system is built to satisfy all different styles. The First Berserker: Khazan aims for adventures, challenges, and character development. It is a game that allows players to develop their own styles. It isn't about gamers selecting a weapon from a variety and confirming their choice of battle style.
By selecting a weapon, they will decide the general combat style and using different actions provided by the skill tree system, they can search for and develop their preferred combat style while also enhancing it with various options, set effects for each gear, and different item combinations. By combining these elements, players can enjoy the game with various appearances and builds.Will the game feature difficulty options?While we originally stated that there are no plans for difficulty options, we are now testing a mode with lowered difficulty within the team. Our primary goal was for players to become fully engaged with the character and sympathize with his difficult circumstances; however, there were requests for difficulty settings. It is still unclear whether there will be an easy or story mode, but we want to encourage more players to participate in the game and discover its fundamental fun. Details are being tested and reviewed, and we plan to do several internal tests before real deployment."It is still unclear whether there will be an easy or story mode, but we want to encourage more players to participate in the game and discover its fundamental fun. Details are being tested and reviewed, and we plan to do several internal tests before real deployment."Roughly how long will an average playthrough of the game be?Since the game isnt completely linear and includes elements of adventure and exploration, along with various farming and build options, and because the gameplay time can vary significantly depending on player skill and choices, its difficult to specify an exact playtime. However, as a single-package game, we have prepared a substantial amount of content, and based on the development teams standard playthrough, the time required to reach the true ending is quite substantial.0 Reacties 0 aandelen 136 Views

GAMINGBOLT.COM
The First Berserker: Khazan Interview - Combat, Difficulty, Art Style, and More

Of all the Soulslikes coming up on the horizon, few have captured attention like Neople and Nexon's action RPG The First Berserker: Khazan, a grimdark take on the Dungeon and Fighter universe, and a game that has looked consistently promising with its pre-release showings. From the promise of crunching and challenging combat to its striking, stylized art style, to the strong impression it has made with pre-release previews, The First Berserker: Khazan has plenty going for it that makes it one of 2025's more prominent releases. Recently, we had the chance to speak with its developers about its combat, approach to difficulty, level design, and more. You can read the full interview below.

The First Berserker: Khazan's stylized art style is one of its most instantly eye-catching elements. How did you land on this look for the game?

As a longtime console game enthusiast, particularly in action RPGs, I have long envisioned a game with a style like Khazan's. Since childhood, I've been a fan of this genre, and that passion deeply influenced my approach to developing this game. Our goal of evolving the Dungeon and Fighter (DNF) Universe and expanding it globally with a console launch inspired us to create a fresh new art style for the IP. While many hardcore action RPGs and Soulsborne games lean toward surreal, complex art with high-end design and graphics, we aim to bring an animated and anime-inspired style to Khazan. This choice stays true to the DNF aesthetic while also refreshing the look for Western audiences who may be less familiar with the universe.
We aim to achieve a cinematic quality for Khazan that is often found in mature-audience animation, focusing on two primary elements: bold, gritty, and intense battle scenes, paired with high-quality cartoon rendering to immerse players in a dark, atmospheric setting. We also believed that this animation style would better express the ultimate combat feeling we pursue and the action scenes that unfold in Khazan. Through this approach, we hope to engage a global audience by delivering intense battles with detailed, cel-based animation.

How heavily will The First Berserker: Khazan emphasize its story and storytelling?

The First Berserker: Khazan is a work fundamentally structured around its story. We designed all content, systems, and gameplay to revolve around the narrative. Furthermore, the protagonist Khazan is a hero who becomes the ancestor of the Slayer class, one of DFO's signature classes. He is one of the most crucial figures in the DFO universe and its grand narrative. In the original Dungeon and Fighter, Khazan is exiled and killed, but in The First Berserker: Khazan, the whole story is reconstructed as Khazan dramatically survives the expulsion process. Through this, players familiar with the original can experience a new aspect of the universe, while new players who don't know the original can enjoy the gameplay and story without any prior knowledge. Starting with The First Berserker: Khazan, the Dungeon and Fighter Universe will continue to expand.

It looks like the game will adopt a level-based structure. With that in mind, what can you tell us about how heavily it will emphasize exploration? Should players expect anything specific in how they traverse the world?

Yes, it leans towards a closed-level design. While we chose a linear structure, we made various efforts to incorporate the joy of adventure and exploration throughout the game.
Speaking just about level and world play, we've placed various routes and hidden elements, and sometimes there are powerful enemies or traps. Through these elements, players can obtain important set items, crafting recipes, and various power sources for Khazan. Moreover, we wanted Khazan's journey to be more than just a revenge story; we wanted players to uncover different aspects of past events through this adventure and exploration. In particular, players can obtain key items related to the director's true ending among various possible endings.

What can you tell us about your approach to designing enemies and bosses? How much variety should players expect from the game on this front?

We consider adventure, challenge, and a sense of accomplishment as the core elements of gameplay. While challenges inevitably involve failure, we believe that failure should lead to another attempt, and it is important to ensure that the process remains enjoyable. Therefore, we have provided clear feedback at every stage of decision-making during challenges. Our goal is to lead players to face various enemies, learn their patterns, resist their attacks, and ultimately grow both the player and the character, Khazan.

Additionally, we designed a game in which players can take different strategic approaches, and we tried to provide clear feedback or a sense of accomplishment for each attempt. Some attacks are better countered by guarding, while others are more effectively avoided by dodging. Players can sometimes even evade an attack simply by walking past it, although the direction of walking is essential in this case.
To sum up, combat in Khazan is meant to allow players to counter each attack and defense with their own techniques. Players are able to mix different weapons, items, and skills to define their own play style and defeat the boss monster once they reach a level where they can see and respond to the enemy patterns. We believe there will be as many defeat strategies as there are players.

Combat content such as bosses and enemies, as well as elements like level design, were created to allow for progressive new challenges that match the various states and levels of both the player and Khazan. The main mission bosses all have strong personalities and various ways to defeat them. Beyond encountering different bosses, players can discover new aspects each time they challenge a boss.

Furthermore, while you may encounter bosses in sub-missions that are similar to some main bosses, we designed these encounters to provide new experiences and challenges from a gameplay perspective.

How expansive will The First Berserker: Khazan's progression and customization systems be? How heavily will the game emphasize build diversity?

The weapons in The First Berserker: Khazan are comparable to characters in other games. Dual Wield, Greatsword, and Spear can be simply seen as three different play styles, but they are more distinguishable from one another in terms of attack power, speed, range, pace, as well as attack and defense techniques. The skill tree system differentiates them further. We believe our players have unique preferences when it comes to gameplay, so the system is built to satisfy all different styles. The First Berserker: Khazan aims for adventures, challenges, and character development. It is a game that allows players to develop their own styles. It isn't about gamers selecting a weapon from a variety and confirming their choice of battle style.
By selecting a weapon, they decide the general combat style, and by using the different actions provided by the skill tree system, they can search for and develop their preferred combat style while also enhancing it with various options, set effects for each gear, and different item combinations. By combining these elements, players can enjoy the game with various appearances and builds.

Will the game feature difficulty options?

While we originally stated that there are no plans for difficulty options, we are now testing a mode with lowered difficulty within the team. Our primary goal was for players to become fully engaged with the character and sympathize with his difficult circumstances; however, there were requests for difficulty settings. It is still unclear whether there will be an easy or story mode, but we want to encourage more players to participate in the game and discover its fundamental fun. Details are being tested and reviewed, and we plan to do several internal tests before real deployment.

Roughly how long will an average playthrough of the game be?

Since the game isn't completely linear and includes elements of adventure and exploration, along with various farming and build options, and because the gameplay time can vary significantly depending on player skill and choices, it's difficult to specify an exact playtime. However, as a single-package game, we have prepared a substantial amount of content, and based on the development team's standard playthrough, the time required to reach the true ending is quite substantial.