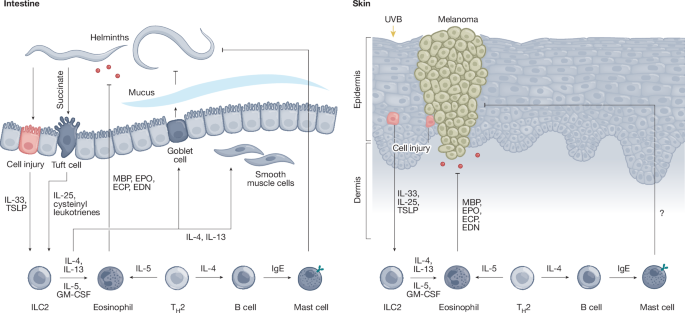

WWW.NATURE.COM

Reinventing type 2 immunity in cancer

Nature, Published online: 08 January 2025; doi:10.1038/s41586-024-08194-2

This Review addresses the roles of type 2 immunity in cancer and the potential for cancer immunotherapies targeting this pathway.

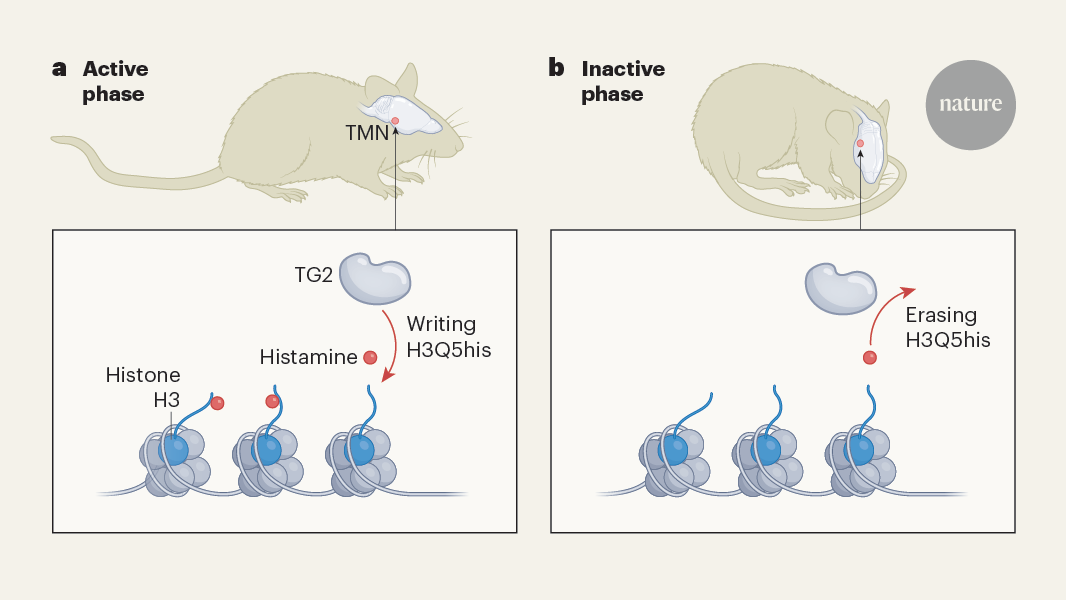

WWW.NATURE.COM

Circadian rhythms are set by epigenetic marks in neurons

Nature, Published online: 08 January 2025; doi:10.1038/d41586-024-04080-z

Chemicals derived from neurotransmitter molecules in the brain can act as epigenetic marks on histone proteins to regulate gene expression. These marks control circadian clock genes and influence behaviour.

WWW.LIVESCIENCE.COM

Scientists discover new kind of cartilage that looks like fat-filled 'Bubble Wrap'

A new study describes a type of cartilage that may have been discovered, forgotten and found again at several points in history.

WWW.LIVESCIENCE.COM

There's a speed limit to human thought and it's ridiculously low

Human brains take in sensory data at more than 1 billion bits per second, but only process that information at a measly 10 bits per second, new research has found.

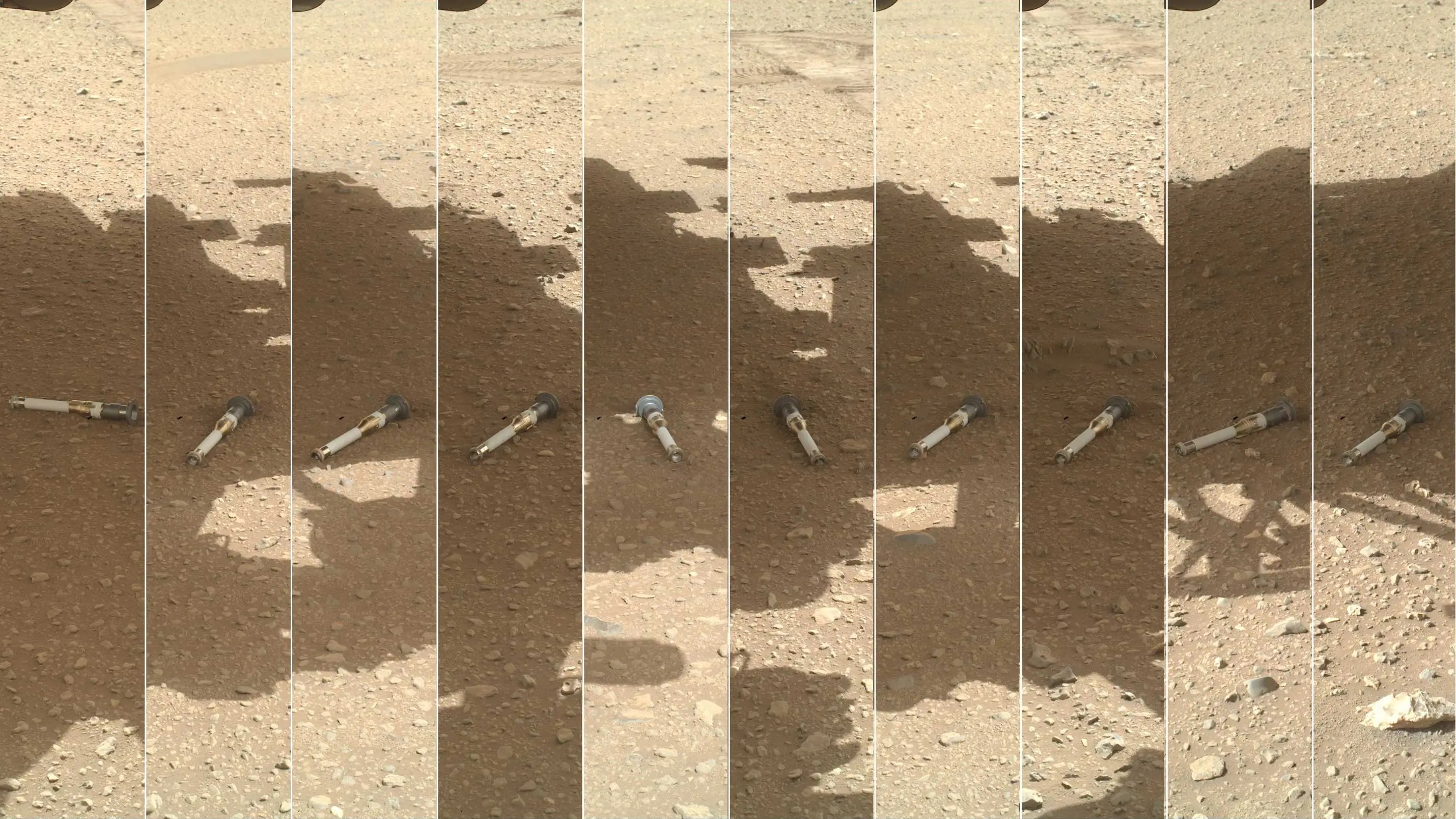

WWW.LIVESCIENCE.COM

Mars rock samples may contain evidence of alien life, but can NASA get them back to Earth?

NASA will explore two different strategies for fetching Mars rocks collected by the Perseverance rover, and there's a chance these samples contain evidence of alien life.

Vote on Restricting Sexualizing Comments

A few days ago, I asked for feedback on NSFW restrictions and received far more of it than I expected. I intend to get around to creating polls to decide which exact rules around NSFW restrictions will be enacted, but with such a wide range of opinions expressed, I think multiple polls will be necessary to work out the fine details.

For the time being, I'll be addressing what is perhaps the simplest issue brought up in that thread: sexualizing comments. To clarify the four options, real examples of sexualizing comments include:

* "Would"
* "Booobieesss.. love em"
* Example image #1
* Example Image #2

and real examples of sexualizing comments which would be considered constructive include:

* "Needs more subsurface scattering, it looks like a sex doll or a dead body."
* "Looks like the artifical skin of a sex doll tbh ... its getting uncanny but the Skin texture needs work."
* "...the bouncy beds is a lil too much for it to be appealing"
* "OK horniness aside this is absolutely incredible"

What constitutes an explicitly sexualized artwork is difficult to define, but for the purposes of this poll, assume that things similar in nature to a sculpt of a standing nude body or a depiction of a nude character lying in a field do not count, but that a character in a risqué outfit or posing suggestively would count, even if no nudity is depicted.

Sexualizing comments should be...

Submitted by /u/Avereniect

WWW.GAMESPOT.COM

Grab Monster Hunter World And Rise For Only $10 For A Limited Time

Monster Hunter fans don't have to wait much longer for the next mainline entry in Capcom's wildly popular action-RPG series. Monster Hunter Wilds is slated to launch February 28, and players can participate in a second open beta early next month. If you want to check out the series for the first time or are interested in owning Steam versions of the most recent games, Fanatical has brought back its terrific Build Your Own Monster Hunter Bundle. You can get Monster Hunter Rise and Monster Hunter World for only 10 bucks total.

We'd recommend snagging each game's sprawling expansion, too. You can get the base games as well as Rise's Sunbreak DLC and World's Iceborne DLC for only $18.

Build your bundle at Fanatical

Capcom Monster Hunter Bundle

Monster Hunter Rise: Sunbreak

2 for $10 / 4 for $18 / All 8 for $25

WWW.GAMESPOT.COM

Sleeping Dogs Movie From Donnie Yen May Never Happen

A movie based on the video game Sleeping Dogs was in the works for years, but it's apparently no longer moving ahead. Actor Donnie Yen (Ip Man, John Wick, Rogue One) was announced as the lead years ago, and he's now confirmed the momentum there once was to make the movie happen has stalled.

"I spent a lot of time and did a lot of work with these producers, and I even invested some of my own money into obtaining the drafts and some of the rights," Yen told Polygon. "I waited for years. Years. And I really want to do it. I have all these visions in my head, and unfortunately I don't know, you know how Hollywood goes, right? I spent many, many years on it. It was an unfortunate thing."

Yen didn't provide any other specifics on what happened to the Sleeping Dogs movie, but it's not uncommon for Hollywood films to fall apart or trudge through the so-called development hell and never see the light of day for any number of reasons.

WWW.GAMESPOT.COM

Leaked Switch 2 Renders Grant A 360-Degree Look At The Console

Among a multitude of Nintendo Switch 2 leaks and third-party accessory marketing, yet another leak has emerged. This time, the supposed leak shows a full 3D render of the console and its Joy-Cons.

Tech and phone website 91mobiles, in collaboration with OnLeaks, published the renders. They depict a black handheld console, which looks similar to the Switch, albeit larger than either the OLED or original models. With the Joy-Cons attached, 91mobiles published the following dimensions: 271 x 116.4 x 31.4mm (10.67 x 4.58 x 1.24 inches). The stated screen size is 8.4 inches.

These dimensions match prior calculations based on Genki's Switch 2 mock-up, made to promote the company's line of accessories. Genki further claimed the system will launch in April and clarified that it does not know the system's internals; representatives only claimed knowledge of the physical body and features like magnetic controllers.