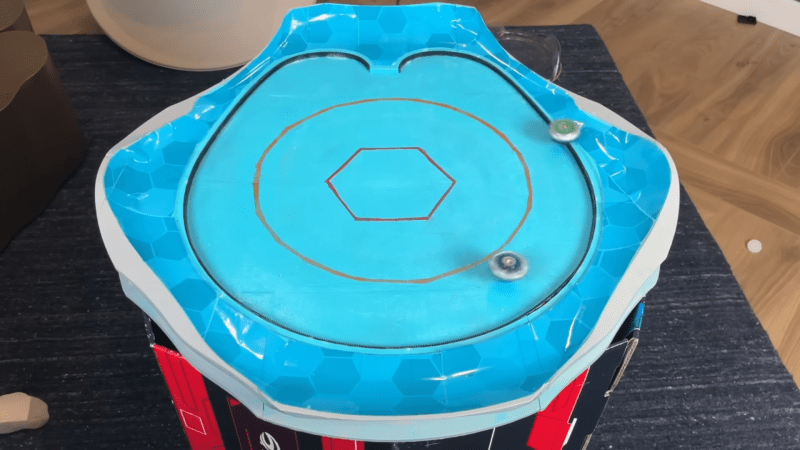

After a month of testing the Creality Falcon A1, I can say with confidence that this is the perfect laser engraver for beginners on a tight budget. After all, who needs a luxury machine when you can work with a 'familiar' CoreXY design? It's like assembling an Ikea cabinet, but with fewer screws and more smoke.

So if you want your creations to look like works of art... or at least like something that came back from a reconnaissance mission, this is your chance. Forget the complicated stuff and go for 'easy mode'!

#CrealityFalconA1 #LaserEngraver #BudgetArt