HOLLYWOOD VFX TOOLS FOR SPACE EXPLORATION

By CHRIS McGOWAN

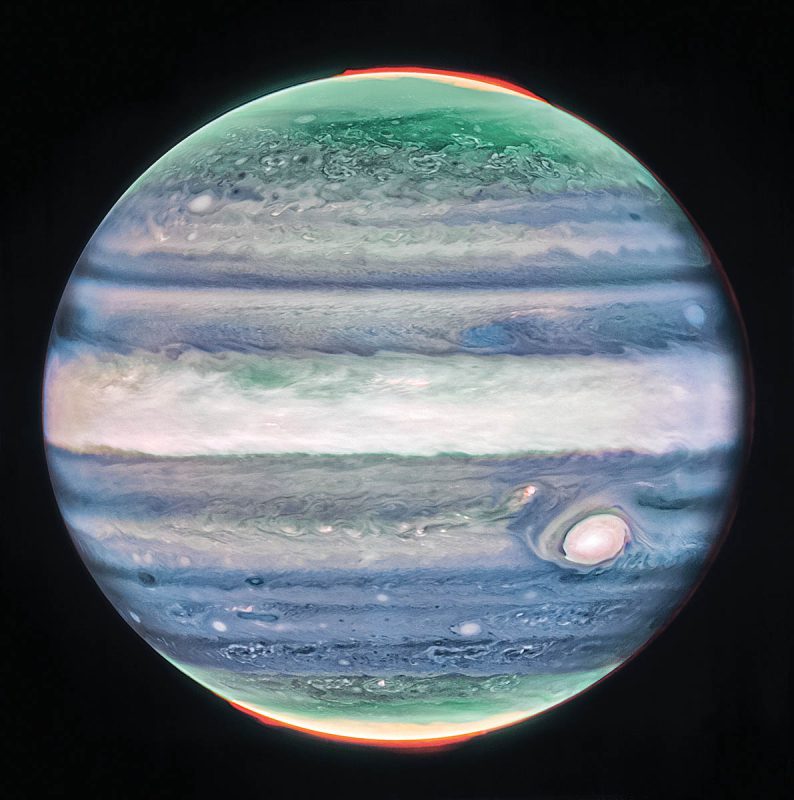

This image of Jupiter from NASA’s James Webb Space Telescope’s NIRCamshows stunning details of the majestic planet in infrared light.Special effects have been used for decades to depict space exploration, from visits to planets and moons to zero gravity and spaceships – one need only think of the landmark 2001: A Space Odyssey. Since that era, visual effects have increasingly grown in realism and importance. VFX have been used for entertainment and for scientific purposes, outreach to the public and astronaut training in virtual reality. Compelling images and videos can bring data to life. NASA’s Scientific Visualization Studioproduces visualizations, animations and images to help scientists tell stories of their research and make science more approachable and engaging.

A.J. Christensen is a senior visualization designer for the NASA Scientific Visualization Studioat the Goddard Space Flight Center in Greenbelt, Maryland. There, he develops data visualization techniques and designs data-driven imagery for scientific analysis and public outreach using Hollywood visual effects tools, according to NASA. SVS visualizations feature datasets from Earth-and space-based instrumentation, scientific supercomputer models and physical statistical distributions that have been analyzed and processed by computational scientists. Christensen’s specialties include working with 3D volumetric data, using the procedural cinematic software Houdini and science topics in Heliophysics, Geophysics and Astrophysics. He previously worked at the National Center for Supercomputing Applications’ Advanced Visualization Lab where he worked on more than a dozen science documentary full-dome films as well as the IMAX films Hubble 3D and A Beautiful Planet – and he worked at DNEG on the movie Interstellar, which won the 2015 Best Visual Effects Academy Award.

This global map of CO2 was created by NASA’s Scientific Visualization Studio using a model called GEOS, short for the Goddard Earth Observing System. GEOS is a high-resolution weather reanalysis model, powered by supercomputers, that is used to represent what was happening in the atmosphere.“The NASA Scientific Visualization Studio operates like a small VFX studio that creates animations of scientific data that has been collected or analyzed at NASA. We are one of several groups at NASA that create imagery for public consumption, but we are also a part of the scientific research process, helping scientists understand and share their data through pictures and video.”

—A.J. Christensen, Senior Visualization Designer, NASA Scientific Visualization StudioAbout his work at NASA SVS, Christensen comments, “The NASA Scientific Visualization Studio operates like a small VFX studio that creates animations of scientific data that has been collected or analyzed at NASA. We are one of several groups at NASA that create imagery for public consumption, but we are also a part of the scientific research process, helping scientists understand and share their data through pictures and video. This past year we were part of NASA’s total eclipse outreach efforts, we participated in all the major earth science and astronomy conferences, we launched a public exhibition at the Smithsonian Museum of Natural History called the Earth Information Center, and we posted hundreds of new visualizations to our publicly accessible website: svs.gsfc.nasa.gov.”

This is the ‘beauty shot version’ of Perpetual Ocean 2: Western Boundary Currents. The visualization starts with a rotating globe showing ocean currents. The colors used to color the flow in this version were chosen to provide a pleasing look.The Gulf Stream and connected currents.Venus, our nearby “sister” planet, beckons today as a compelling target for exploration that may connect the objects in our own solar system to those discovered around nearby stars.WORKING WITH DATA

While Christensen is interpreting the data from active spacecraft and making it usable in different forms, such as for science and outreach, he notes, “It’s not just spacecraft that collect data. NASA maintains or monitors instruments on Earth too – on land, in the oceans and in the air. And to be precise, there are robots wandering around Mars that are collecting data, too.”

He continues, “Sometimes the data comes to our team as raw telescope imagery, sometimes we get it as a data product that a scientist has already analyzed and extracted meaning from, and sometimes various sensor data is used to drive computational models and we work with the models’ resulting output.”

Jupiter’s moon Europa may have life in a vast ocean beneath its icy surface.HOUDINI AND OTHER TOOLS

“Data visualization means a lot of different things to different people, but many people on our team interpret it as a form of filmmaking,” Christensen says. “We are very inspired by the approach to visual storytelling that Hollywood uses, and we use tools that are standard for Hollywood VFX. Many professionals in our area – the visualization of 3D scientific data – were previously using other animation tools but have discovered that Houdini is the most capable of understanding and manipulating unusual data, so there has been major movement toward Houdini over the past decade.”

Satellite imagery from NASA’s Solar Dynamics Observatoryshows the Sun in ultraviolet light colorized in light brown. Seen in ultraviolet light, the dark patches on the Sun are known as coronal holes and are regions where fast solar wind gushes out into space.Christensen explains, “We have always worked with scientific software as well – sometimes there’s only one software tool in existence to interpret a particular kind of scientific data. More often than not, scientific software does not have a GUI, so we’ve had to become proficient at learning new coding environments very quickly. IDL and Python are the generic data manipulation environments we use when something is too complicated or oversized for Houdini, but there are lots of alternatives out there. Typically, we use these tools to get the data into a format that Houdini can interpret, and then we use Houdini to do our shading, lighting and camera design, and seamlessly blend different datasets together.”

While cruising around Saturn in early October 2004, Cassini captured a series of images that have been composed into this large global natural color view of Saturn and its rings. This grand mosaic consists of 126 images acquired in a tile-like fashion, covering one end of Saturn’s rings to the other and the entire planet in between.The black hole Gargantua and the surrounding accretion disc from the 2014 movie Interstellar.Another visualization of the black hole Gargantua.INTERSTELLAR & GARGANTUA

Christensen recalls working for DNEG on Interstellar. “When I first started at DNEG, they asked me to work on the giant waves on Miller’s ocean planet. About a week in, my manager took me into the hall and said, ‘I was looking at your reel and saw all this astronomy stuff. We’re working on another sequence with an accretion disk around a black hole that I’m wondering if we should put you on.’ And I said, ‘Oh yeah, I’ve done lots of accretion disks.’ So, for the rest of my time on the show, I was working on the black hole team.”

He adds, “There are a lot of people in my community that would be hesitant to label any big-budget movie sequence as a scientific visualization. The typical assumption is that for a Hollywood movie, no one cares about accuracy as long as it looks good. Guardians of the Galaxy makes it seem like space is positively littered with nebulae, and Star Wars makes it seem like asteroids travel in herds. But the black hole Gargantua in Interstellar is a good case for being called a visualization. The imagery you see in the movie is the direct result of a collaboration with an expert scientist, Dr. Kip Thorne, working with the DNEG research team using the actual Einstein equations that describe the gravity around a black hole.”

Thorne is a Nobel Prize-winning theoretical physicist who taught at Caltech for many years. He has reached wide audiences with his books and presentations on black holes, time travel and wormholes on PBS and BBC shows. Christensen comments, “You can make the argument that some of the complexity around what a black hole actually looks like was discarded for the film, and they admit as much in the research paper that was published after the movie came out. But our team at NASA does that same thing. There is no such thing as an objectively ‘true’ scientific image – you always have to make aesthetic decisions around whether the image tells the science story, and often it makes more sense to omit information to clarify what’s important. Ultimately, Gargantua taught a whole lot of people something new about science, and that’s what a good scientific visualization aims to do.”

The SVS produces an annual visualization of the Moon’s phase and libration comprising 8,760 hourly renderings of its precise size, orientation and illumination.FURTHER CHALLENGES

The sheer size of the data often encountered by Christensen and his peers is a challenge. “I’m currently working with a dataset that is 400GB per timestep. It’s so big that I don’t even want to move it from one file server to another. So, then I have to make decisions about which data attributes to keep and which to discard, whether there’s a region of the data that I can cull or downsample, and I have to experiment with data compression schemes that might require me to entirely re-design the pipeline I’m using for Houdini. Of course, if I get rid of too much information, it becomes very resource-intensive to recompute everything, but if I don’t get rid of enough, then my design process becomes agonizingly slow.”

SVS also works closely with its NASA partner groups Conceptual Image Laband Goddard Media Studiosto publish a diverse array of content. Conceptual Image Lab focuses more on the artistic side of things – producing high-fidelity renders using film animation and visual design techniques, according to NASA. Where the SVS primarily focuses on making data-based visualizations, CIL puts more emphasis on conceptual visualizations – producing animations featuring NASA spacecraft, planetary observations and simulations, according to NASA. Goddard Media Studios, on the other hand, is more focused towards public outreach – producing interviews, TV programs and documentaries. GMS continues to be the main producers behind NASA TV, and as such, much of their content is aimed towards the general public.

An impact crater on the moon.Image of Mars showing a partly shadowed Olympus Mons toward the upper left of the image.Mars. Hellas Basin can be seen in the lower right portion of the image.Mars slightly tilted to show the Martian North Pole.Christensen notes, “One of the more unique challenges in this field is one of bringing people from very different backgrounds to agree on a common outcome. I work on teams with scientists, communicators and technologists, and we all have different communities we’re trying to satisfy. For instance, communicators are generally trying to simplify animations so their learning goal is clear, but scientists will insist that we add text and annotations on top of the video to eliminate ambiguity and avoid misinterpretations. Often, the technologist will have to say we can’t zoom in or look at the data in a certain way because it will show the data boundaries or data resolution limits. Every shot is a negotiation, but in trying to compromise, we often push the boundaries of what has been done before, which is exciting.”

#hollywood #vfx #tools #space #exploration

HOLLYWOOD VFX TOOLS FOR SPACE EXPLORATION

By CHRIS McGOWAN

This image of Jupiter from NASA’s James Webb Space Telescope’s NIRCamshows stunning details of the majestic planet in infrared light.Special effects have been used for decades to depict space exploration, from visits to planets and moons to zero gravity and spaceships – one need only think of the landmark 2001: A Space Odyssey. Since that era, visual effects have increasingly grown in realism and importance. VFX have been used for entertainment and for scientific purposes, outreach to the public and astronaut training in virtual reality. Compelling images and videos can bring data to life. NASA’s Scientific Visualization Studioproduces visualizations, animations and images to help scientists tell stories of their research and make science more approachable and engaging.

A.J. Christensen is a senior visualization designer for the NASA Scientific Visualization Studioat the Goddard Space Flight Center in Greenbelt, Maryland. There, he develops data visualization techniques and designs data-driven imagery for scientific analysis and public outreach using Hollywood visual effects tools, according to NASA. SVS visualizations feature datasets from Earth-and space-based instrumentation, scientific supercomputer models and physical statistical distributions that have been analyzed and processed by computational scientists. Christensen’s specialties include working with 3D volumetric data, using the procedural cinematic software Houdini and science topics in Heliophysics, Geophysics and Astrophysics. He previously worked at the National Center for Supercomputing Applications’ Advanced Visualization Lab where he worked on more than a dozen science documentary full-dome films as well as the IMAX films Hubble 3D and A Beautiful Planet – and he worked at DNEG on the movie Interstellar, which won the 2015 Best Visual Effects Academy Award.

This global map of CO2 was created by NASA’s Scientific Visualization Studio using a model called GEOS, short for the Goddard Earth Observing System. GEOS is a high-resolution weather reanalysis model, powered by supercomputers, that is used to represent what was happening in the atmosphere.“The NASA Scientific Visualization Studio operates like a small VFX studio that creates animations of scientific data that has been collected or analyzed at NASA. We are one of several groups at NASA that create imagery for public consumption, but we are also a part of the scientific research process, helping scientists understand and share their data through pictures and video.”

—A.J. Christensen, Senior Visualization Designer, NASA Scientific Visualization StudioAbout his work at NASA SVS, Christensen comments, “The NASA Scientific Visualization Studio operates like a small VFX studio that creates animations of scientific data that has been collected or analyzed at NASA. We are one of several groups at NASA that create imagery for public consumption, but we are also a part of the scientific research process, helping scientists understand and share their data through pictures and video. This past year we were part of NASA’s total eclipse outreach efforts, we participated in all the major earth science and astronomy conferences, we launched a public exhibition at the Smithsonian Museum of Natural History called the Earth Information Center, and we posted hundreds of new visualizations to our publicly accessible website: svs.gsfc.nasa.gov.”

This is the ‘beauty shot version’ of Perpetual Ocean 2: Western Boundary Currents. The visualization starts with a rotating globe showing ocean currents. The colors used to color the flow in this version were chosen to provide a pleasing look.The Gulf Stream and connected currents.Venus, our nearby “sister” planet, beckons today as a compelling target for exploration that may connect the objects in our own solar system to those discovered around nearby stars.WORKING WITH DATA

While Christensen is interpreting the data from active spacecraft and making it usable in different forms, such as for science and outreach, he notes, “It’s not just spacecraft that collect data. NASA maintains or monitors instruments on Earth too – on land, in the oceans and in the air. And to be precise, there are robots wandering around Mars that are collecting data, too.”

He continues, “Sometimes the data comes to our team as raw telescope imagery, sometimes we get it as a data product that a scientist has already analyzed and extracted meaning from, and sometimes various sensor data is used to drive computational models and we work with the models’ resulting output.”

Jupiter’s moon Europa may have life in a vast ocean beneath its icy surface.HOUDINI AND OTHER TOOLS

“Data visualization means a lot of different things to different people, but many people on our team interpret it as a form of filmmaking,” Christensen says. “We are very inspired by the approach to visual storytelling that Hollywood uses, and we use tools that are standard for Hollywood VFX. Many professionals in our area – the visualization of 3D scientific data – were previously using other animation tools but have discovered that Houdini is the most capable of understanding and manipulating unusual data, so there has been major movement toward Houdini over the past decade.”

Satellite imagery from NASA’s Solar Dynamics Observatoryshows the Sun in ultraviolet light colorized in light brown. Seen in ultraviolet light, the dark patches on the Sun are known as coronal holes and are regions where fast solar wind gushes out into space.Christensen explains, “We have always worked with scientific software as well – sometimes there’s only one software tool in existence to interpret a particular kind of scientific data. More often than not, scientific software does not have a GUI, so we’ve had to become proficient at learning new coding environments very quickly. IDL and Python are the generic data manipulation environments we use when something is too complicated or oversized for Houdini, but there are lots of alternatives out there. Typically, we use these tools to get the data into a format that Houdini can interpret, and then we use Houdini to do our shading, lighting and camera design, and seamlessly blend different datasets together.”

While cruising around Saturn in early October 2004, Cassini captured a series of images that have been composed into this large global natural color view of Saturn and its rings. This grand mosaic consists of 126 images acquired in a tile-like fashion, covering one end of Saturn’s rings to the other and the entire planet in between.The black hole Gargantua and the surrounding accretion disc from the 2014 movie Interstellar.Another visualization of the black hole Gargantua.INTERSTELLAR & GARGANTUA

Christensen recalls working for DNEG on Interstellar. “When I first started at DNEG, they asked me to work on the giant waves on Miller’s ocean planet. About a week in, my manager took me into the hall and said, ‘I was looking at your reel and saw all this astronomy stuff. We’re working on another sequence with an accretion disk around a black hole that I’m wondering if we should put you on.’ And I said, ‘Oh yeah, I’ve done lots of accretion disks.’ So, for the rest of my time on the show, I was working on the black hole team.”

He adds, “There are a lot of people in my community that would be hesitant to label any big-budget movie sequence as a scientific visualization. The typical assumption is that for a Hollywood movie, no one cares about accuracy as long as it looks good. Guardians of the Galaxy makes it seem like space is positively littered with nebulae, and Star Wars makes it seem like asteroids travel in herds. But the black hole Gargantua in Interstellar is a good case for being called a visualization. The imagery you see in the movie is the direct result of a collaboration with an expert scientist, Dr. Kip Thorne, working with the DNEG research team using the actual Einstein equations that describe the gravity around a black hole.”

Thorne is a Nobel Prize-winning theoretical physicist who taught at Caltech for many years. He has reached wide audiences with his books and presentations on black holes, time travel and wormholes on PBS and BBC shows. Christensen comments, “You can make the argument that some of the complexity around what a black hole actually looks like was discarded for the film, and they admit as much in the research paper that was published after the movie came out. But our team at NASA does that same thing. There is no such thing as an objectively ‘true’ scientific image – you always have to make aesthetic decisions around whether the image tells the science story, and often it makes more sense to omit information to clarify what’s important. Ultimately, Gargantua taught a whole lot of people something new about science, and that’s what a good scientific visualization aims to do.”

The SVS produces an annual visualization of the Moon’s phase and libration comprising 8,760 hourly renderings of its precise size, orientation and illumination.FURTHER CHALLENGES

The sheer size of the data often encountered by Christensen and his peers is a challenge. “I’m currently working with a dataset that is 400GB per timestep. It’s so big that I don’t even want to move it from one file server to another. So, then I have to make decisions about which data attributes to keep and which to discard, whether there’s a region of the data that I can cull or downsample, and I have to experiment with data compression schemes that might require me to entirely re-design the pipeline I’m using for Houdini. Of course, if I get rid of too much information, it becomes very resource-intensive to recompute everything, but if I don’t get rid of enough, then my design process becomes agonizingly slow.”

SVS also works closely with its NASA partner groups Conceptual Image Laband Goddard Media Studiosto publish a diverse array of content. Conceptual Image Lab focuses more on the artistic side of things – producing high-fidelity renders using film animation and visual design techniques, according to NASA. Where the SVS primarily focuses on making data-based visualizations, CIL puts more emphasis on conceptual visualizations – producing animations featuring NASA spacecraft, planetary observations and simulations, according to NASA. Goddard Media Studios, on the other hand, is more focused towards public outreach – producing interviews, TV programs and documentaries. GMS continues to be the main producers behind NASA TV, and as such, much of their content is aimed towards the general public.

An impact crater on the moon.Image of Mars showing a partly shadowed Olympus Mons toward the upper left of the image.Mars. Hellas Basin can be seen in the lower right portion of the image.Mars slightly tilted to show the Martian North Pole.Christensen notes, “One of the more unique challenges in this field is one of bringing people from very different backgrounds to agree on a common outcome. I work on teams with scientists, communicators and technologists, and we all have different communities we’re trying to satisfy. For instance, communicators are generally trying to simplify animations so their learning goal is clear, but scientists will insist that we add text and annotations on top of the video to eliminate ambiguity and avoid misinterpretations. Often, the technologist will have to say we can’t zoom in or look at the data in a certain way because it will show the data boundaries or data resolution limits. Every shot is a negotiation, but in trying to compromise, we often push the boundaries of what has been done before, which is exciting.”

#hollywood #vfx #tools #space #exploration