Gemini 2.5 is leaving preview just in time for Google’s new $250 AI subscription

Google I/O? More like Google AI

Gemini 2.5 is rolling out everywhere, and you can pay Google $250 per month for more of it.

Ryan Whitwam

May 20, 2025 5:03 pm

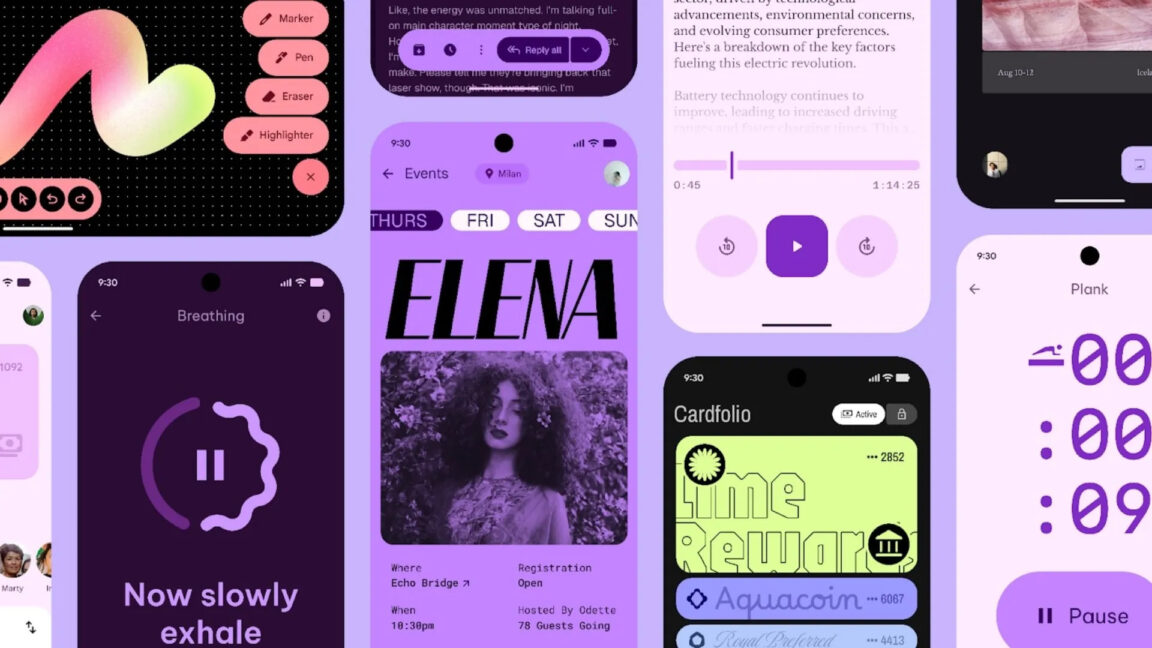

All the new Gemini AI at I/O. Credit: Ryan Whitwam

MOUNTAIN VIEW, Calif.—Google rolled out early versions of Gemini 2.5 earlier this year, marking a significant improvement over the 2.0 branch. For the first time, Google's chatbot felt competitive with the likes of ChatGPT, but it's been "experimental" and later "preview" since then. At I/O 2025, Google announced general availability for Gemini 2.5, and these models will soon be integrated with Chrome. There's also a fancy new subscription plan to get the most from Google's AI. You probably won't like the pricing, though.

Gemini 2.5 goes gold

Even though Gemini 2.5 was revealed a few months ago, the older 2.0 Flash has been the default model all this time. Now that 2.5 is finally ready, the 2.5 Flash model will be swapped in as the new default. This model has built-in simulated reasoning, so its outputs are much more reliable than those of 2.0 Flash.

Google says the release version of 2.5 Flash is better at reasoning, coding, and multimodality, and it uses 20–30 percent fewer tokens than the preview version. This edition is now live in Vertex AI, AI Studio, and the Gemini app. It will be made the default model in early June.

Likewise, the Pro model is shedding its preview title, and it's getting some new goodies to celebrate. Recent updates have solidified the model's lead on the LM Arena leaderboard, which still means something to Google despite the recent drama—yes, AI benchmarking drama is a thing now. It's also getting a capability called Deep Think, which lets the model consider multiple hypotheses for every query. This apparently makes it incredibly good at math and coding. Google plans to do a little more testing on this feature before making it widely available.

Deep Think is more capable of complex math and coding. Credit: Ryan Whitwam

Both 2.5 models have adjustable thinking budgets when used in Vertex AI and via the API, and now the models will also include summaries of the "thinking" process for each output. The adjustable budgets make a little progress toward making generative AI less overwhelmingly expensive to run. Gemini 2.5 Pro will also appear in some of Google's dev products, including Gemini Code Assist.
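For developers wondering what a thinking budget looks like in practice, here is a minimal sketch that only builds a request body and sends nothing. The field names (`thinkingConfig`, `thinkingBudget`, `includeThoughts`) follow the shape of the Gemini API's `generateContent` request as we understand it; treat them, the helper name `build_request`, and the default of 1,024 tokens as assumptions to verify against Google's current API reference.

```python
import json


def build_request(prompt, thinking_budget=1024, include_thoughts=True):
    """Build a generateContent-style request body (hypothetical helper).

    thinking_budget: cap on the tokens the model may spend "thinking".
    include_thoughts: ask for a summary of the thinking with the answer.
    Field names are assumptions; check them against the API reference.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {
                "thinkingBudget": thinking_budget,
                "includeThoughts": include_thoughts,
            }
        },
    }


if __name__ == "__main__":
    # Print the JSON a client would send; no network call is made here.
    body = build_request("Summarize this page.", thinking_budget=512)
    print(json.dumps(body, indent=2))
```

A smaller budget should mean fewer tokens spent on reasoning and a cheaper call, at some cost to answer quality on harder prompts.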

Gemini Live, previously known as Project Astra, started to appear on mobile devices over the last few months. Initially, you needed to have a Gemini subscription or a Pixel phone to access Gemini Live, but now it's coming to all Android and iOS devices immediately. Google demoed a future "agentic" capability in the Gemini app that can actually control your phone, search the web for files, open apps, and make calls. It's perhaps a little aspirational, just like the Astra demo from last year. The version of Gemini Live we got wasn't as good, but as a glimpse of the future, it was impressive.

There are also some developments in Chrome, and you guessed it, it's getting Gemini. It's not dissimilar from what you get in Edge with Copilot. There's a little Gemini icon in the corner of the browser, which you can click to access Google's chatbot. You can ask it about the pages you're browsing, have it summarize those pages, and ask follow-up questions.

Google AI Ultra is ultra-expensive

Since launching Gemini, Google has only had a single monthly plan for AI features. That plan granted you access to the Pro models and early versions of Google's upcoming AI. At I/O, Google is catching up to AI firms like OpenAI, which have offered sky-high AI plans. Google's new Google AI Ultra plan will cost $250 per month, more than the plan for ChatGPT Pro.

So what does your $250 get you every month? You'll get all the models included with the basic plan with much higher usage limits. If you're using video and image generation, for instance, you won't bump against any limits. Plus, Ultra comes with the newest and most expensive models. For example, Ultra subs will get immediate access to Gemini in Chrome, as well as a new agentic model capable of computer use in the Gemini API.

Gemini Ultra has everything from Pro, plus higher limits and instant access to new tools. Credit: Ryan Whitwam

That's probably still not worth it for most Gemini users, but Google is offering a deal right now. Ultra subscribers will get a 50 percent discount for the first three months, but $125 per month is still a tough sell for AI. It's available in the US today and will come to other regions soon.
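To put the deal in numbers, here is a quick back-of-the-envelope calculation. It assumes the $250 monthly price from the headline and the 50 percent, three-month discount described above; the function name `plan_cost` is ours, and taxes or regional pricing are ignored.

```python
def plan_cost(monthly_price, months, discount_percent=50, discount_months=3):
    """Total cost in whole dollars over `months`, with an intro discount."""
    discounted = min(months, discount_months)  # months billed at the reduced rate
    full = months - discounted                 # months billed at full price
    discounted_price = monthly_price * (100 - discount_percent) // 100
    return discounted * discounted_price + full * monthly_price


print(plan_cost(250, 3))   # 375
print(plan_cost(250, 12))  # 2625
```

Under those assumptions, the discount saves $375 over the first year, which still leaves a bill of more than $2,600.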

A faster future?

Google previewed what could be an important advancement in generative AI for the future. Most of the text and code-based outputs you've seen from large language models are generated from beginning to end, token by token. Diffusion works a bit differently for image generation, but Google is now experimenting with Gemini Diffusion.

Diffusion models create images by starting with random noise and then denoising it to create what you asked for. Gemini Diffusion works similarly, generating entire blocks of tokens at the same time. The model can therefore work much faster, and it can check its work as it goes to make the final output more accurate than comparable LLMs. Google says Gemini Diffusion is 2.5 times faster than Gemini 2.5 Flash Lite, which is its fastest standard model, while also producing much better results.
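The difference in step count is easy to see in a toy. The sketch below is not how Gemini Diffusion works internally, which Google has not detailed here; it uses a known target sentence as a stand-in for a model and simply compares one-token-per-step generation with a loop that starts fully masked and fills in several positions per pass. All names and the `per_step` value are illustrative assumptions.

```python
import random

MASK = "_"


def autoregressive(target):
    """Emit tokens left to right, one per step. Returns (tokens, steps)."""
    out = []
    for tok in target:
        out.append(tok)
    return out, len(target)


def toy_denoise(target, per_step=4, seed=0):
    """Start fully masked and reveal up to `per_step` positions per pass.

    The target list plays the role of the model's prediction; a real
    diffusion model would predict tokens and could revise them between passes.
    """
    rng = random.Random(seed)
    draft = [MASK] * len(target)
    hidden = list(range(len(target)))
    rng.shuffle(hidden)  # positions are filled in no particular order
    steps = 0
    while hidden:
        batch, hidden = hidden[:per_step], hidden[per_step:]
        for i in batch:
            draft[i] = target[i]
        steps += 1
    return draft, steps


if __name__ == "__main__":
    tokens = "the quick brown fox jumps over the lazy dog today".split()
    print(autoregressive(tokens)[1])  # 10 steps for 10 tokens
    print(toy_denoise(tokens)[1])     # 3 passes at 4 positions per pass
```

Fewer passes over the whole block is where the speed claim comes from; whether quality holds up is something only the real model can show.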

Google claims Gemini Diffusion is capable of previously unheard-of accuracy in complex math and coding. However, unlike many of the other I/O Gemini features, it's not being released right away. Google DeepMind is accepting applications to test it, but it may be a while before the model exits the experimental stage.

Even though I/O was wall-to-wall Gemini, Google still has much, much more AI in store.

Ryan Whitwam

Senior Technology Reporter

Ryan Whitwam is a senior technology reporter at Ars Technica, covering the ways Google, AI, and mobile technology continue to change the world. Over his 20-year career, he's written for Android Police, ExtremeTech, Wirecutter, NY Times, and more. He has reviewed more phones than most people will ever own. You can follow him on Bluesky, where you will see photos of his dozens of mechanical keyboards.
