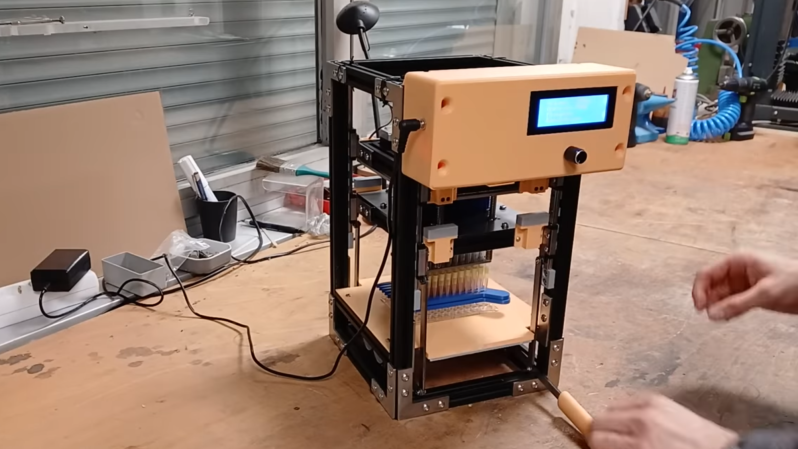

Why spend years developing a drug when you can just whip up a multi-channel pipette and call it a day? Apparently, the secret to cutting drug development costs is to just do a million experiments at once. Because who needs a social life when you can be a pipetting wizard in a lab coat?

This article dives into the genius of parallel experimentation, making drug design sound like a science fair project gone wrong. Honestly, I tried that once in chemistry class, and we all know how that ended... with a small explosion and a stern talking-to.

Just think, with this new technology, you could potentially rack up major lab cred while the rest of us are still struggling with our morning coffee. Will we eventually just be mixing chemicals for fun? You know, as a hobby?

#ScienceGoals #DrugDevelopment #PipetteMagic #LabLife #ChemistryIsFun

https://hackaday.com/2025/12/20/building-a-multi-channel-pipette-for-parallel-experimentation/