WWW.NYTIMES.COM

How X Is Benefiting as Musk Advises Trump

The social media platform has experienced a return in advertisers and new exposure as an official source of government news.


WWW.COMPUTERWORLD.COM

How enterprise IT can protect itself from genAI unreliability

Mesmerized by the scalability, efficiency and flexibility claims from generative AI (genAI) vendors, enterprise execs have been all but tripping over themselves trying to push the technology to its limits.

The fear of flawed deliverables, based on a combination of hallucinations, imperfect training data and a model that can disregard query specifics and ignore guardrails, is usually minimized.

But the Mayo Clinic is trying to push back on all those problematic answers.

In an interview with VentureBeat, Matthew Callstrom, Mayo's medical director, explained: "Mayo paired what's known as the clustering using representatives (CURE) algorithm with LLMs and vector databases to double-check data retrieval.

"The algorithm has the ability to detect outliers or data points that don't match the others. Combining CURE with a reverse RAG approach, Mayo's [large language model] split the summaries it generated into individual facts, then matched those back to source documents. A second LLM then scored how well the facts aligned with those sources, specifically if there was a causal relationship between the two."

(Computerworld reached out directly to Callstrom for an interview, but he was not available.)

There are, broadly speaking, two categories for reducing genAI's lack of reliability: humans in the loop (usually, an awful lot of humans in the loop) or some version of AI watching AI.

The idea of having more humans monitoring what these tools deliver is typically seen as the safer approach, but it undercuts the key value of genAI: massive efficiencies. Those efficiencies, the argument goes, should allow workers to be redeployed to more strategic work or, as the argument becomes a whisper, to sharply reduce that workforce.

But at the scale of a typical enterprise, genAI efficiencies could replace the work of thousands of people. Adding human oversight might only require dozens of humans.
It still makes mathematical sense.

The AI-watching-AI approach is scarier, although a lot of enterprises are giving it a go. Some are looking to push any liability down the road by partnering with others to do their genAI calculations for them. Still others are looking to pay third parties to come in and try and improve their genAI accuracy. The phrase "throwing good money after bad" immediately comes to mind.

The lack of effective ways to improve genAI reliability internally is a key factor in why so many proof-of-concept trials got approved quickly, but never moved into production.

Some version of throwing more humans into the mix to keep an eye on genAI outputs seems to be winning the argument, for now. "You have to have a human babysitter on it. AI watching AI is guaranteed to fail," said Missy Cummings, a George Mason University professor and director of Mason's Autonomy and Robotics Center (MARC).

"People are going to do it because they want to believe in the (technology's) promises. People can be taken in by the self-confidence of a genAI system," she said, comparing it to the experience of driving autonomous vehicles (AVs).

"When driving an AV, the AI is pretty good and it can work. But if you quit paying attention for a quick second, disaster can strike," Cummings said. "The bigger problem is that people develop an unhealthy complacency."

Rowan Curran, a Forrester senior analyst, said Mayo's approach might have some merit. "Look at the input and look at the output and see how close it adheres," Curran said.

Curran argued that identifying the objective truth of a response is important, but it's also important to simply see whether the model is even attempting to directly answer the query posed, including all of the query's components. If the system concludes that the answer is non-responsive, it can be ignored on that basis.

Another genAI expert is Rex Booth, CISO for identity vendor Sailpoint.
Booth said that simply forcing LLMs to explain more about their own limitations would be a major help in making outputs more reliable.

For example, many if not most hallucinations happen when the model can't find an answer in its massive database. If the system were set up to simply say, "I don't know," or even the more face-saving, "The data I was trained on doesn't cover that," confidence in outputs would likely rise.

Booth focused on how current data is. If a question asks about something that happened in April 2025 and the model knows its training data was last updated in December 2024, it should simply say that rather than making something up. "It won't even flag that its data is so limited," he said.

He also said that the concept of agents checking agents can work well provided each agent is assigned a discrete task.

But IT decision-makers should never assume those tasks and that separation will be respected. "You can't rely on the effective establishment of rules," Booth said. "Whether human or AI agents, everything steps outside the rules. You have to be able to detect that once it happens."

Another popular concept for making genAI more reliable is to force senior management, and especially the board of directors, to agree on a risk tolerance level, put it in writing and publish it. This would ideally push senior managers and execs to ask the tough questions about what can go wrong with these tools and how much damage they could cause.

Reece Hayden, principal analyst with ABI Research, is skeptical about how much senior management truly understands genAI risks.

"They see the benefits and they understand the 10% inaccuracy, but they see it as though they are human-like errors: small mistakes, recoverable mistakes," Hayden said. But when algorithms go off track, they can make errors light years more serious than humans.

For example, humans often spot-check their work. But spot-checking genAI doesn't work, Hayden said.
"In no way does the accuracy of one answer indicate the accuracy of other answers."

It's possible the reliability issues won't be fixed until enterprise environments adapt to become more technologically hospitable to genAI systems.

"The deeper problem lies in how most enterprises treat the model like a magic box, expecting it to behave perfectly in a messy, incomplete and outdated system," said Soumendra Mohanty, chief strategy officer at AI vendor Tredence. "GenAI models hallucinate not just because they're flawed, but because they're being used in environments that were never built for machine decision-making. To move past this, CIOs need to stop managing the model and start managing the system around the model. This means rethinking how data flows, how AI is embedded in business processes, and how decisions are made, checked and improved."

Mohanty offered an example: A contract summarizer should not just generate a summary, but it should validate which clauses to flag, highlight missing sections and pull definitions from approved sources. This is decision engineering: defining the path, limits, and rules for AI output, not just the prompt.

There is a psychological reason execs tend to resist facing this issue. Licensing genAI models is stunningly expensive. And after making a massive investment in the technology, there's natural resistance to pouring even more money into it to make outputs reliable.

And yet, the whole genAI game has to be focused on delivering the goods. That means not only looking at what works, but dealing with what doesn't. There's going to be a substantial cost to fixing things when these erroneous answers or flawed actions are discovered.

It's galling, yes; it is also necessary. The same people who will be praised effusively about the benefits of genAI will be the ones blamed for errors that materialize later. It's your career. Choose wisely.
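
The fact-level check attributed to Mayo in this article (split a summary into individual facts, match each fact back to source documents, score how well it is supported) can be illustrated with a rough Python sketch. This is not Mayo's implementation: the function names are hypothetical, a naive sentence split stands in for the LLM that extracts facts, and a simple token-overlap ratio stands in for the CURE clustering, the vector database lookup and the second scoring LLM. The sample texts and the 0.6 threshold are invented for illustration.

```python
import re


def split_into_facts(summary: str) -> list[str]:
    """Naive stand-in for the LLM step: treat each sentence as one fact."""
    parts = re.split(r"(?<=[.!?])\s+", summary.strip())
    return [p for p in parts if p]


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(fact: str, passage: str) -> float:
    """Fraction of the fact's tokens found in the passage.

    Assumption: this replaces the second LLM's alignment score.
    """
    fact_tokens = tokens(fact)
    if not fact_tokens:
        return 0.0
    return len(fact_tokens & tokens(passage)) / len(fact_tokens)


def verify_summary(summary: str, sources: list[str], threshold: float = 0.6):
    """Return (fact, best_score, supported) for every fact in the summary."""
    results = []
    for fact in split_into_facts(summary):
        best = max((support_score(fact, s) for s in sources), default=0.0)
        results.append((fact, best, best >= threshold))
    return results


# Hypothetical source passages and a generated summary to check.
sources = [
    "The contract term is 24 months starting in January.",
    "Either party may terminate with 30 days written notice.",
]
summary = (
    "The contract term is 24 months. "
    "The supplier pays a penalty for late delivery."
)

for fact, score, supported in verify_summary(summary, sources):
    label = "supported" if supported else "NOT supported"
    print(f"{label} ({score:.2f}): {fact}")
```

In this sketch the second sentence of the summary has no backing in either source passage, so it falls below the threshold and is flagged. A real system would route flagged facts to a human reviewer or suppress them, which is where the humans-in-the-loop and AI-watching-AI approaches discussed above meet.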


WWW.TECHNOLOGYREVIEW.COM

How the Pentagon is adapting to China's technological rise

It's been just over two months since Kathleen Hicks stepped down as US deputy secretary of defense. As the highest-ranking woman in Pentagon history, Hicks shaped US military posture through an era defined by renewed competition between powerful countries and a scramble to modernize defense technology.

She's currently taking a break before jumping into her (still unannounced) next act. "It's been refreshing," she says, but disconnecting isn't easy. She continues to monitor defense developments closely and expresses concern over potential setbacks: "New administrations have new priorities, and that's completely expected, but I do worry about just stalling out on progress that we've built over a number of administrations."

Over the past three decades, Hicks has watched the Pentagon transform politically, strategically, and technologically. She entered government in the 1990s at the tail end of the Cold War, when optimism and a belief in global cooperation still dominated US foreign policy. But that optimism dimmed. After 9/11, the focus shifted to counterterrorism and nonstate actors. Then came Russia's resurgence and China's growing assertiveness. Hicks took two previous breaks from government work: the first to complete a PhD at MIT and the second to join the think tank Center for Strategic and International Studies (CSIS), where she focused on defense strategy. "By the time I returned in 2021," she says, "there was one actor, the PRC (People's Republic of China), that had the capability and the will to really contest the international system as it's set up."

In this conversation with MIT Technology Review, Hicks reflects on how the Pentagon is adapting, or failing to adapt, to a new era of geopolitical competition.
She discusses China's technological rise, the future of AI in warfare, and her signature initiative, Replicator, a Pentagon initiative to rapidly field thousands of low-cost autonomous systems such as drones.

You've described China as a "talented fast follower." Do you still believe that, especially given recent developments in AI and other technologies?

Yes, I do. China is the biggest pacing challenge we face, which means it sets the pace for most capability areas for what we need to be able to defeat to deter them. For example, surface maritime capability, missile capability, stealth fighter capability. They set their minds to achieving a certain capability, they tend to get there, and they tend to get there even faster.

That said, they have a substantial amount of corruption, and they haven't been engaged in a real conflict or combat operation in the way that Western militaries have trained for or been involved in, and that is a huge X factor in how effective they would be.

China has made major technological strides, and the old narrative of its being a follower is breaking down, not just in commercial tech, but more broadly. Do you think the US still holds a strategic advantage?

I would never want to underestimate their ability, or any nation's ability, to innovate organically when they put their minds to it. But I still think it's a helpful comparison to look at the US model. Because we're a system of free minds, free people, and free markets, we have the potential to generate much more innovation culturally and organically than a statist model does. That's our advantage, if we can realize it.

China is ahead in manufacturing, especially when it comes to drones and other unmanned systems. How big a problem is that for US defense, and can the US catch up?

I do think it's a massive problem. When we were conceiving Replicator, one of the big concerns was that DJI had just jumped way out ahead on the manufacturing side, and the US had been left behind.
A lot of manufacturers here believe they can catch up if given the right contracts, and I agree with that.

We also spent time identifying broader supply-chain vulnerabilities. Microelectronics was a big one. Critical minerals. Batteries. People sometimes think batteries are just about electrification, but they're fundamental across our systems, even on ships in the Navy.

When it comes to drones specifically, I actually think it's a solvable problem. The issue isn't complexity. It's just about getting enough mass of contracts to scale up manufacturing. If we do that, I believe the US can absolutely compete.

The Replicator drone program was one of your key initiatives. It promised a very fast timeline, especially compared with the typical defense acquisition cycle. Was that achievable? How is that progressing?

When I left in January, we had still lined up for proving out this summer, and I still believe we should see some completion this year. I hope Congress will stay very engaged in trying to ensure that the capability, in fact, comes to fruition. Even just this week with Secretary [Pete] Hegseth out in the Indo-Pacific, he made some passing reference to the [US Indo-Pacific Command] commander, Admiral [Samuel] Paparo, having the flexibility to create the capability needed, and that gives me a lot of confidence of consistency.

Can you talk about how Replicator fits into broader efforts to speed up defense innovation? What's actually changing inside the system?

Traditionally, defense acquisition is slow and serial: one step after another, which works for massive, long-term systems like submarines. But for things like drones, that just doesn't cut it. With Replicator, we aimed to shift to a parallel model: integrating hardware, software, policy, and testing all at once. That's how you get speed: by breaking down silos and running things simultaneously.

It's not about "Move fast and break things." You still have to test and evaluate responsibly.
But this approach shows we can move faster without sacrificing accountability, and that's a big cultural shift.

How important is AI to the future of national defense?

It's central. The future of warfare will be about speed and precision: decision advantage. AI helps enable that. It's about integrating capabilities to create faster, more accurate decision-making: for achieving military objectives, for reducing civilian casualties, and for being able to deter effectively. But we've also emphasized responsible AI. If it's not safe, it's not going to be effective. That's been a key focus across administrations.

What about generative AI specifically? Does it have real strategic significance yet, or is it still in the experimental phase?

It does have significance, especially for decision-making and efficiency. We had an effort called Project Lima where we looked at use cases for generative AI: where it might be most useful, and what the rules for responsible use should look like. Some of the biggest use may come first in the back office: human resources, auditing, logistics. But the ability to use generative AI to create a network of capability around unmanned systems or information exchange, either in Replicator or JADC2? That's where it becomes a real advantage. But those back-office areas are where I would anticipate to see big gains first.

[Editor's note: JADC2 is Joint All-Domain Command and Control, a DOD initiative to connect sensors from all branches of the armed forces into a unified network powered by artificial intelligence.]

In recent years, we've seen more tech industry figures stepping into national defense conversations, sometimes pushing strong political views or advocating for deregulation. How do you see Silicon Valley's growing influence on US defense strategy?

There's a long history of innovation in this country coming from outside the government: people who look at big national problems and want to help solve them.
That kind of engagement is good, especially when their technical expertise lines up with real national security needs.

But that's not just one stakeholder group. A healthy democracy includes others, too: workers, environmental voices, allies. We need to reconcile all of that through a functioning democratic process. That's the only way this works.

How do you view the involvement of prominent tech entrepreneurs, such as Elon Musk, in shaping national defense policies?

I believe it's not healthy for any democracy when a single individual wields more power than their technical expertise or official role justifies. We need strong institutions, not just strong personalities.

The US has long attracted top STEM talent from around the world, including many researchers from China. But in recent years, immigration hurdles and heightened scrutiny have made it harder for foreign-born scientists to stay. Do you see this as a threat to US innovation?

I think you have to be confident that you have a secure research community to do secure work. But much of the work that underpins national defense that's STEM-related research doesn't need to be tightly secured in that way, and it really is dependent on a diverse ecosystem of talent. Cutting off talent pipelines is like eating our seed corn. Programs like H-1B visas are really important.

And it's not just about international talent; we need to make sure people from underrepresented communities here in the US see national security as a space where they can contribute. If they don't feel valued or trusted, they're less likely to come in and stay.

What do you see as the biggest challenge the Department of Defense faces today?

I do think the trust, or the lack of it, is a big challenge. Whether it's trust in government broadly or specific concerns like military spending, audits, or politicization of the uniformed military, that issue manifests in everything DOD is trying to get done.
It affects our ability to work with Congress, with allies, with industry, and with the American people. If people don't believe you're working in their interest, it's hard to get anything done.


GAMINGBOLT.COM
The Duskbloods Doesn't Change FromSoftware's Commitment to Single-Player Titles, Says Director

Plenty of games generated buzz during last week's Nintendo Switch 2 Direct, but the announcement of FromSoftware's The Duskbloods felt noteworthy. Perhaps it's because of the concept or its exclusivity status, a first for the developer on Nintendo platforms.

However, there was some trepidation after it was announced as an eight-player PvEvP title. With Elden Ring: Nightreign on the horizon, was the developer focusing more on online experiences for the foreseeable future?

Well, no. In a new Creator's Voice interview with Nintendo, director Hidetaka Miyazaki said, "This is an online multiplayer title at its core, but this doesn't mean that we as a company have decided to shift to a more multiplayer-focused direction with titles going forward.

"The Nintendo Switch 2 version of Elden Ring was also announced, and we still intend to actively develop single-player focused games such as this that embrace our more traditional style."

Though its action and art style scream FromSoftware, The Duskbloods sees the team venturing into interesting new directions. There are over a dozen playable characters with unique abilities and weapons, and players can customize them to some extent.

You can also adjust their blood history, marking others as your rival or companion (and earning rewards). The range of traversal options is also distinctly superhuman compared to the developer's previous titles.

The Duskbloods launches in 2026 for Nintendo Switch 2. Stay tuned for more details in the coming months.


VENTUREBEAT.COM
Return Entertainment launches Rivals Arena smart TV trivia game on Amazon Fire TV in UK

Return Entertainment said its debut game, Rivals Arena, is now available for Fire TV in the United Kingdom.


WWW.GAMESINDUSTRY.BIZ
Bill Petras, Overwatch and World of Warcraft art director, has died

Petras worked on multiple genre-defining games at the studio

News by Samuel Roberts, Editorial Director. Published on April 7, 2025.

Overwatch and World of Warcraft art director Bill Petras has died.

The news was shared by Harley D. Huggins II, former cinematic projects director at Blizzard, who paid tribute to Petras on LinkedIn.

"Bill Petras, a game development legend has unexpectedly passed away. Billy was an amazingly gifted artist who worked at Blizzard Entertainment for almost two decades," Huggins said.

"He was immensely proud of the work he did there, most notably as the Art Director for World of Warcraft and Overwatch."

"Billy and I started at Blizzard the same week and were close friends for 28 years. I will miss our regular, long, rambling conversations about life, game dev, games, art, comic books, toys, monster movies and Conan."

"He will be deeply missed by me and all who knew him."

Petras' career began in the early '90s on PC titles like BloodNet and Star Crusader.

He joined Blizzard in 1997, working on 1998's StarCraft and its Brood War expansion. Petras was an artist on the best-selling real-time strategy game, and is also credited for its box and manual art.

Petras would later work on the RTS WarCraft III, and then World of Warcraft, where he was responsible for the highly influential art direction of the MMO upon its original launch in 2004.

Petras left Blizzard in 2005 to co-found the studio Red 5, which created the MMO Firefall.

He later returned to the company in 2010, working as Art Director for the successful shooter Overwatch, which emerged from the cancelled MMO project, Titan. He remained at Blizzard until 2021.


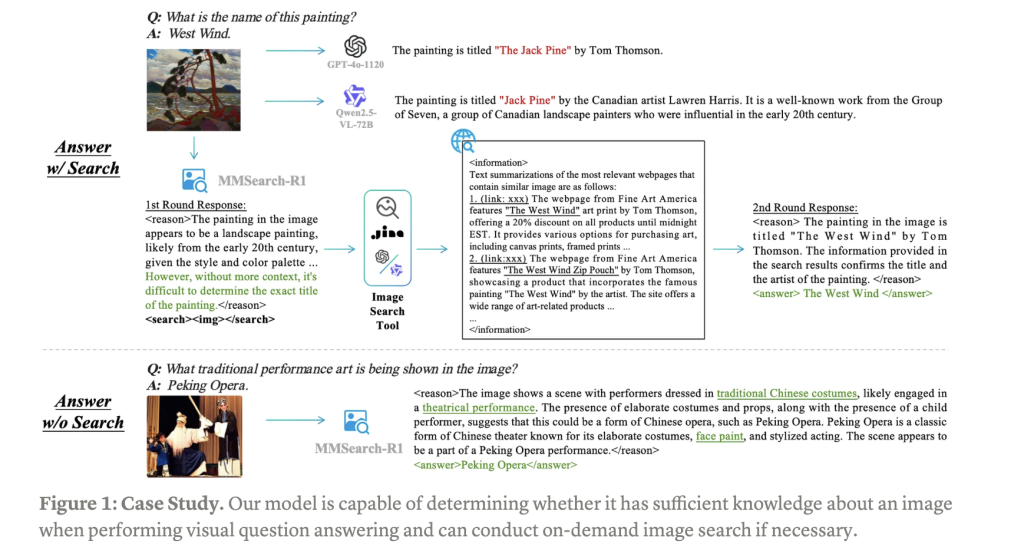

WWW.MARKTECHPOST.COM
MMSearch-R1: End-to-End Reinforcement Learning for Active Image Search in LMMs

Large Multimodal Models (LMMs) have demonstrated remarkable capabilities when trained on extensive visual-text paired data, advancing multimodal understanding tasks significantly. However, these models struggle with complex real-world knowledge, particularly long-tail information that emerges after training cutoffs or domain-specific knowledge restricted by privacy, copyright, or security concerns. When forced to operate beyond their internal knowledge boundaries, LMMs often produce hallucinations, severely compromising their reliability in scenarios where factual accuracy is paramount. While Retrieval-Augmented Generation (RAG) has been widely implemented to overcome these limitations, it introduces its own challenges: the decoupled retrieval and generation components resist end-to-end optimisation, and its rigid "retrieve-then-generate" approach triggers unnecessary retrievals even when the model already possesses sufficient knowledge, resulting in increased latency and computational costs.

Recent approaches have made significant strides in addressing knowledge limitations in large models. End-to-end reinforcement learning (RL) methods like OpenAI's o-series, DeepSeek-R1, and Kimi K-1.5 have remarkably improved model reasoning capabilities. Simultaneously, Deep Research Models developed by major AI labs have shown that training models to interact directly with internet content substantially enhances their performance on complex real-world tasks. Despite these advances, challenges persist in efficiently integrating external knowledge retrieval with generation capabilities. Current methods either prioritize reasoning without optimized knowledge access or focus on retrieval mechanisms that aren't seamlessly integrated with the model's generation process. These approaches often fail to balance computational efficiency, response accuracy, and the ability to handle dynamic information, leaving significant room for improvement in creating truly adaptive and knowledge-aware multimodal systems.

Researchers have explored an end-to-end RL framework to extend the capability boundaries of LMMs, and tried to answer the following questions:

(1) Can LMMs be trained to perceive their knowledge boundaries and learn to invoke search tools when necessary?
(2) What are the effectiveness and efficiency of the RL approach?
(3) Could the RL framework lead to the emergence of robust multimodal intelligent behaviors?

This research introduces MMSearch-R1, an approach that equips LMMs with active image search capabilities through an end-to-end reinforcement learning framework. The method focuses specifically on enhancing visual question answering (VQA) performance by enabling models to autonomously engage with image search tools. MMSearch-R1 trains models to decide when to initiate image searches and how to process the retrieved visual information: extracting, synthesizing, and using relevant visual data to support their reasoning. MMSearch-R1 enables LMMs to dynamically interact with external tools in a goal-oriented manner, significantly improving performance on knowledge-intensive and long-tail VQA tasks that traditionally challenge conventional models with their static knowledge bases.

MMSearch-R1 combines data engineering with reinforcement learning techniques. The system builds upon the FactualVQA dataset, specifically constructed to provide unambiguous answers that can be reliably evaluated with automated methods. This dataset was created by extracting 50,000 Visual Concepts from both familiar and unfamiliar sections of the MetaCLIP metadata distribution, retrieving associated images, and using GPT-4o to generate factual question-answer pairs. After filtering and balancing, the dataset contains a mix of queries that can be answered with and without image search assistance.

The reinforcement learning framework adapts the standard GRPO algorithm with multi-turn rollouts, integrating an image search tool based on the veRL framework for end-to-end training. This image search capability combines SerpApi, JINA Reader for content extraction, and LLM-based summarization to retrieve and process relevant web content associated with images. The system employs a reward function that balances answer correctness, proper formatting, and a mild penalty for tool usage, calculated as 0.9 × (Score − 0.1) + 0.1 × Format when image search is used, and 0.9 × Score + 0.1 × Format when it is not.

Experimental results demonstrate MMSearch-R1's performance advantages across multiple dimensions. Image search capabilities effectively expand the knowledge boundaries of Large Multimodal Models, with the system learning to decide when to initiate searches while avoiding over-reliance on external tools. Both supervised fine-tuning (SFT) and reinforcement learning implementations show substantial performance improvements across in-domain FactualVQA testing and out-of-domain benchmarks, including InfoSeek, MMSearch, and Gimmick. Also, the models dynamically adjust their search rates based on visual content familiarity, maintaining efficient resource utilization while maximizing accuracy.

Reinforcement learning demonstrates superior efficiency compared to supervised fine-tuning approaches. When applied directly to Qwen2.5-VL-Instruct-3B/7B models, GRPO achieves better results despite using only half the training data required by SFT methods. This efficiency highlights RL's effectiveness in optimizing model performance with limited resources. The system's ability to balance knowledge access with computational efficiency is an advance toward more resource-conscious yet highly capable multimodal systems that can intelligently utilize external knowledge sources.

MMSearch-R1 demonstrates that outcome-based reinforcement learning can effectively train Large Multimodal Models with active image search capabilities. This approach enables models to autonomously decide when to utilize external visual knowledge sources while maintaining computational efficiency. The promising results establish a strong foundation for developing future tool-augmented, reasoning-capable LMMs that can dynamically interact with the visual world.

Check out the Blog and Code. All credit for this research goes to the researchers of this project.

Mohammad Asjad
Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.
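The reward rule described in the MMSearch-R1 item can be sketched in a few lines of Python. This is only an illustrative reading of the formula quoted in the article, not the project's code: the function name mmsearch_reward, its parameter names, and the assumption that Score and Format are each 0 or 1 are hypothetical, and the multiplication and subtraction signs are inferred from the article's wording ("a mild penalty for tool usage").

```python
def mmsearch_reward(score: float, format_score: float, used_search: bool) -> float:
    """Illustrative reward following the rule quoted in the article.

    score        -- answer correctness (assumed here to be 0.0 or 1.0)
    format_score -- format compliance (assumed here to be 0.0 or 1.0)
    used_search  -- whether the rollout called the image search tool
    """
    search_penalty = 0.1  # the mild tool-usage penalty from the article
    # When search is used, the penalty is subtracted from the score
    # before the 0.9 weighting is applied.
    effective_score = score - search_penalty if used_search else score
    return 0.9 * effective_score + 0.1 * format_score


if __name__ == "__main__":
    # Compare the four combinations for a well-formatted answer.
    for correct in (1.0, 0.0):
        for searched in (False, True):
            reward = mmsearch_reward(correct, 1.0, searched)
            print(f"correct={correct} searched={searched} reward={reward:.2f}")
```

Under these assumptions, a correct answer without search earns slightly more than a correct answer with search, while a correct answer with search still earns far more than a wrong answer without it. That ordering matches the article's claim that the model learns to search only when its own knowledge falls short.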


THEHACKERNEWS.COM
PoisonSeed Exploits CRM Accounts to Launch Cryptocurrency Seed Phrase Poisoning Attacks

A malicious campaign dubbed PoisonSeed is leveraging compromised credentials associated with customer relationship management (CRM) tools and bulk email providers to send spam messages containing cryptocurrency seed phrases in an attempt to drain victims' digital wallets.

"Recipients of the bulk spam are targeted with a cryptocurrency seed phrase poisoning attack," Silent Push said in an analysis. "As part of the attack, PoisonSeed provides security seed phrases to get potential victims to copy and paste them into new cryptocurrency wallets for future compromising."

Targets of PoisonSeed include enterprise organizations and individuals outside the cryptocurrency industry. Crypto companies like Coinbase and Ledger, and bulk email providers such as Mailchimp, SendGrid, Hubspot, Mailgun, and Zoho are among the targeted companies.

The activity is assessed to be distinct from two loosely aligned threat actors, Scattered Spider and CryptoChameleon, which are both part of a broader cybercrime ecosystem called The Com. Some aspects of the campaign were previously disclosed by security researcher Troy Hunt and Bleeping Computer last month.

The attacks involve the threat actors setting up lookalike phishing pages for prominent CRM and bulk email companies, aiming to trick high-value targets into providing their credentials. Once the credentials are obtained, the adversaries proceed to create an API key to ensure persistence even if the stolen password is reset by its owner.

In the next phase, the operators export mailing lists, likely using an automated tool, and send spam from those compromised accounts. The post-CRM-compromise supply chain spam messages inform users that they need to set up a new Coinbase Wallet using the seed phrase embedded in the email.

The end goal of the attacks is to use the same recovery phrase to hijack the accounts and transfer funds from those wallets. The links to Scattered Spider and CryptoChameleon stem from the use of a domain ("mailchimp-sso[.]com") that has been previously identified as used by the former, as well as CryptoChameleon's historical targeting of Coinbase and Ledger.

That said, the phishing kit used by PoisonSeed does not share any similarity with those used by the other two threat clusters, raising the possibility that it's either a brand new phishing kit from CryptoChameleon or it's a different threat actor that just happens to use similar tradecraft.

The development comes as a Russian-speaking threat actor has been observed using phishing pages hosted on Cloudflare Pages.Dev and Workers.Dev to deliver malware that can remotely control infected Windows hosts. A previous iteration of the campaign was found to have also distributed the StealC information stealer.

"This recent campaign leverages Cloudflare-branded phishing pages themed around DMCA (Digital Millennium Copyright Act) takedown notices served across multiple domains," Hunt.io said.

"The lure abuses the ms-search protocol to download a malicious LNK file disguised as a PDF via a double extension. Once executed, the malware checks in with an attacker-operated Telegram bot, sending the victim's IP address, before transitioning to Pyramid C2 to control the infected host."


WWW.INFORMATIONWEEK.COM
High-Severity Cloud Security Alerts Tripled in 2024

Attackers aren't just spending more time targeting the cloud; they're ruthlessly stealing more sensitive data and accessing more critical systems than ever before.
