GAMINGBOLT.COM
Days Gone Remastered is Out Now on PS5
Days Gone Remastered, an enhanced version of Bend Studio’s 2019 open-world title, is now available on PS5. It retails for $49.99, and owners of the PS4 original can upgrade for $10. PC players can also pick up Broken Road, a DLC that includes all the new content, for $10.
Alongside visual improvements and PS5 Pro support, the remaster’s main appeal is the new content, particularly Horde Assault. This arcade-like mode involves surviving in the world for as long as possible against increasingly challenging Freakers. Hordes are larger than ever, and negative Injectors result in more harrowing fights. However, there are also positive Injectors for gaining an edge, and as you level up, new cosmetics and playable characters become available.
Days Gone Remastered also adds Permadeath and Speedrun Modes, and new settings for Photo Mode, like time of day, new logos and a three-point lighting system. High Contrast Mode, UI narration, fully remappable controls, and other accessibility features are also included.


EN.WIKIPEDIA.ORG
Wikipedia picture of the day for April 26
The Royal Palace of Amsterdam is a royal residence in Amsterdam, Netherlands. It opened in 1655 as a town hall; its main architect, Jacob van Campen, designed it in the Dutch Baroque style. Louis Bonaparte became King of Holland in 1806 and established his court in Amsterdam, turning the town hall into a palace. It has been used by Dutch monarchs since then, although their main place of residence is Huis ten Bosch in The Hague. The Royal Palace of Amsterdam is used for entertaining and official functions during state visits and other official receptions, such as New Year receptions. This photograph shows the Royal Palace from Dam Square in 2016.
Photograph credit: Diego Delso


EN.WIKIPEDIA.ORG
On this day: April 26
1478 – In a conspiracy to replace the Medici family as rulers of the Republic of Florence, the Pazzi family attacked Lorenzo de' Medici and killed his brother Giuliano at Florence Cathedral.
1915 – First World War: Britain, France and Russia signed a secret treaty promising territory to Italy if it joined the war on their side.
1933 – The Gestapo, the official secret police force of Nazi Germany, was established.
1989 – A tornado struck the Manikganj District of Bangladesh and killed an estimated 1,300 people, making it the deadliest tornado in history.
1994 – Just before landing at Nagoya Airport, Japan, the copilot of China Airlines Flight 140 inadvertently triggered the takeoff/go-around switch, causing the aircraft to crash and killing 264 of the 271 people on board.
Births and deaths: Marcus Aurelius (b. 121), Alice Ayres (d. 1885), S. J. V. Chelvanayakam (d. 1977)


WWW.SMITHSONIANMAG.COM
See the Forgotten Paintings Made by Jane Austen’s Older Sister, Cassandra
A new exhibition at the Jane Austen House in England includes six artworks that are going on public display for the first time
Image: Cassandra Austen's 1795 hand-drawn copy of a plate from a 1786 drawing instruction manual (Luke Shears / Jane Austen House)
When Jane Austen died on July 18, 1817, her older sister, Cassandra, was overcome with grief. “She was the sun of my life, the gilder of every pleasure, the soother of every sorrow. I had not a thought concealed from her, and it is as if I had lost a part of myself,” Cassandra wrote in a letter later that month.
Years later, Cassandra burned the vast majority of her younger sister’s 3,000 letters, leaving only around 160 for the benefit of researchers, biographers and Janeites, as the author’s devotees are known. Questions began to swirl about her motivations. Was she protecting her sister’s legacy? What was she hiding?
Cassandra’s controversial decision is “the act for which she is perhaps best known today,” as Artnet’s Jo Lawson-Tancred writes. Meanwhile, her own work as an accomplished watercolor artist whose paintings may have inspired parts of Jane’s novels has been largely forgotten. Now, an exhibition at the Jane Austen House in the English village of Chawton has assembled the largest public display of Cassandra’s artworks for a reconsideration of her legacy beyond the bonfire.
Image: Cassandra's 1802 watercolor of a man crossing a wintry landscape with his dog (Luke Shears / Jane Austen House)
“The Art of Cassandra” features ten works. Six are recent donations or loans to the museum from descendants of the Austen family that are going on public display for the first time. “This is a small display but a truly exciting one,” Sophie Reynolds, the head of collections, interpretation and engagement at the museum, tells the Guardian’s Jamie Grierson.
Image: Cassandra's depiction of Elizabeth I for her sister's The History of England, a parody of history textbooks (Public domain via Wikimedia Commons)
While some of Cassandra’s works are original portraits of family members like James, one of the Austen sisters’ six brothers, or watercolor landscapes, many are detailed reproductions of images from books and prints, per a statement from the museum. The exhibition’s curator, Janine Barchas, an Austen scholar at the University of Texas at Austin, has found seven prints or bookplates that match Cassandra’s artworks. These sources provide rich insights into the reading habits and tastes of the Austen sisters.
The Austen sisters were about three years apart in age, and they were very close. Their artistic talents first converged as early as 1791, when Jane, then 15, wrote a parody of British history textbooks called The History of England. She dedicated the book to Cassandra, who provided drawings of historical figures like Elizabeth I and Mary, Queen of Scots. Cassandra’s best-known work is a portrait of Jane that now resides in the National Portrait Gallery in London.
“Her skill was akin to Jane’s own – neat and careful, with delicacy and lightness of touch, so to see them is a pleasure in itself – but more than that, for those interested in Jane Austen, Cassandra’s artworks also remind us of the many paintings and drawings in Jane’s novels,” says Reynolds in the statement.
Reynolds points to the character Elinor Dashwood’s drawings in Jane’s first published novel, Sense and Sensibility. Upon moving to Barton Cottage, the women of the Dashwood family “make the house a home” by, among other things, arranging Elinor’s drawings on the walls of their sitting room, Reynolds says. The cottage where Jane spent the last eight years of her life and worked on all six of her novels is an ideal setting for Cassandra’s artworks, now carefully arranged on the sitting room walls.
“Not since Cassandra’s creative years in this very cottage have so many of her surviving artworks been gathered together in one place,” says Barchas in the statement.
“The Art of Cassandra” will be on view at the Jane Austen House in Chawton, England, from April 29 to June 8, 2025.


VENTUREBEAT.COM
Liquid AI is revolutionizing LLMs to work on edge devices like smartphones with new ‘Hyena Edge’ model
Liquid AI, the Boston-based foundation model startup spun out of the Massachusetts Institute of Technology (MIT), is seeking to move the tech industry beyond its reliance on the Transformer architecture underpinning most popular large language models (LLMs) such as OpenAI’s GPT series and Google’s Gemini family.
Yesterday, the company announced “Hyena Edge,” a new convolution-based, multi-hybrid model designed for smartphones and other edge devices in advance of the International Conference on Learning Representations (ICLR) 2025. The conference, one of the premier events for machine learning research, is taking place this year in Vienna, Austria.
New convolution-based model promises faster, more memory-efficient AI at the edge
Hyena Edge is engineered to outperform strong Transformer baselines on both computational efficiency and language model quality. In real-world tests on a Samsung Galaxy S24 Ultra smartphone, the model delivered lower latency, smaller memory footprint, and better benchmark results compared to a parameter-matched Transformer++ model.
A new architecture for a new era of edge AI
Unlike most small models designed for mobile deployment, including SmolLM2, the Phi models, and Llama 3.2 1B, Hyena Edge steps away from traditional attention-heavy designs. Instead, it strategically replaces two-thirds of grouped-query attention (GQA) operators with gated convolutions from the Hyena-Y family.
The new architecture is the result of Liquid AI’s Synthesis of Tailored Architectures (STAR) framework, which uses evolutionary algorithms to automatically design model backbones and was announced back in December 2024. STAR explores a wide range of operator compositions, rooted in the mathematical theory of linear input-varying systems, to optimize for multiple hardware-specific objectives like latency, memory usage, and quality.
Benchmarked directly on consumer hardware
To validate Hyena Edge’s real-world readiness, Liquid AI ran tests directly on the Samsung Galaxy S24 Ultra smartphone. Results show that Hyena Edge achieved up to 30% faster prefill and decode latencies compared to its Transformer++ counterpart, with speed advantages increasing at longer sequence lengths. Prefill latencies at short sequence lengths also outpaced the Transformer baseline, a critical performance metric for responsive on-device applications.
In terms of memory, Hyena Edge consistently used less RAM during inference across all tested sequence lengths, positioning it as a strong candidate for environments with tight resource constraints.
Outperforming Transformers on language benchmarks
Hyena Edge was trained on 100 billion tokens and evaluated across standard benchmarks for small language models, including Wikitext, Lambada, PiQA, HellaSwag, Winogrande, ARC-easy, and ARC-challenge. On every benchmark, Hyena Edge either matched or exceeded the performance of the GQA-Transformer++ model, with noticeable improvements in perplexity scores on Wikitext and Lambada, and higher accuracy rates on PiQA, HellaSwag, and Winogrande.
These results suggest that the model’s efficiency gains do not come at the cost of predictive quality, a common tradeoff for many edge-optimized architectures.
Hyena Edge Evolution: A look at performance and operator trends
For those seeking a deeper dive into Hyena Edge’s development process, a recent video walkthrough provides a compelling visual summary of the model’s evolution. The video highlights how key performance metrics, including prefill latency, decode latency, and memory consumption, improved over successive generations of architecture refinement.
It also offers a rare behind-the-scenes look at how the internal composition of Hyena Edge shifted during development. Viewers can see dynamic changes in the distribution of operator types, such as Self-Attention (SA) mechanisms, various Hyena variants, and SwiGLU layers. These shifts offer insight into the architectural design principles that helped the model reach its current level of efficiency and accuracy. By visualizing the trade-offs and operator dynamics over time, the video provides valuable context for understanding the architectural breakthroughs underlying Hyena Edge’s performance.
Open-source plans and a broader vision
Liquid AI said it plans to open-source a series of Liquid foundation models, including Hyena Edge, over the coming months. The company’s goal is to build capable and efficient general-purpose AI systems that can scale from cloud datacenters down to personal edge devices.
The debut of Hyena Edge also highlights the growing potential for alternative architectures to challenge Transformers in practical settings. With mobile devices increasingly expected to run sophisticated AI workloads natively, models like Hyena Edge could set a new baseline for what edge-optimized AI can achieve.
Hyena Edge’s success, both in raw performance metrics and in showcasing automated architecture design, positions Liquid AI as one of the emerging players to watch in the evolving AI model landscape.


WWW.THEVERGE.COM
Bending to industry, Donald Trump issues executive order to “expedite” deep sea mining
Donald Trump wants to mine the depths of the ocean for critical minerals ubiquitous in rechargeable batteries, signing an executive order on Thursday to try to expedite mining within US and international waters.
It’s a brash move that critics say could create unknown havoc on sea life and coastal economies, and that bucks international agreements. Talks to develop rules for deep-sea mining are still ongoing through the International Seabed Authority (ISA), a process that missed an initial 2023 deadline and has continued to stymie efforts to start commercially mining the deep sea.
“A dangerous precedent”
“Fast-tracking deep-sea mining by bypassing the ISA’s global regulatory processes would set a dangerous precedent and would be a violation of customary international law,” Duncan Currie, legal adviser for the Deep Sea Conservation Coalition, which has advocated for a moratorium on deep sea mining, said in a press statement.
The ISA was established by the 1982 United Nations Convention on the Law of the Sea. More than 160 nations have ratified the convention, but the United States has not. Ignoring the convention, the executive order Trump signed directs federal agencies to expedite the process for issuing licenses to companies seeking to recover minerals “in areas beyond national jurisdiction” in accordance with the 1980 US Deep Seabed Hard Mineral Resources Act. A country’s territorial jurisdiction only extends roughly 200 nautical miles from shore.
The Trump administration wants to work with industry “to counter China’s growing influence over seabed mineral resources,” the executive order says. However, no country has yet commercially mined the deep ocean, where depths reach about 656 feet (200 meters), in international waters. There have already been efforts to explore parts of the ocean floor rich in nickel, copper, cobalt, iron, and manganese sought after for rechargeable batteries, though, and China is a leading refiner of many critical minerals. China responded on Friday: the BBC reported Chinese foreign ministry spokesman Guo Jiakun as saying that Trump’s move “violates international law and harms the overall interests of the international community.”
The Metals Company announced in March that the Canadian company had already “met with officials in the White House” and planned to apply for permits under existing US mining code to begin extracting minerals from the high seas. California-based company Impossible Metals asked the Trump administration earlier this month to auction off mining leases for areas off the coast of American Samoa, which would be within US-controlled waters. Trump’s executive order also directs the Secretary of the Interior to expedite the process for leasing areas for mining within US waters.
Companies seeking to exploit offshore mineral resources argue that it would cause less harm than mining on land. Their opponents contend that there’s still too little research to even understand how widespread the effects of deep sea mining could be on marine ecosystems and the people who depend on them. Recent studies have warned of “irreversible” damage and loud noise affecting sea life, and one controversial study raises questions of whether the deep sea could be an important source of “dark oxygen” for the world.
More than 30 countries, including Palau, Fiji, Costa Rica, Canada, Mexico, Brazil, New Zealand, France, Germany, and the United Kingdom, have called for a ban or moratorium on deep-sea mining until international rules are in place to minimize the potential damage.
“The harm caused by deep-sea mining isn’t restricted to the ocean floor: it will impact the entire water column, top to bottom, and everyone and everything relying on it,” Jeff Watters, vice president for external affairs at the nonprofit Ocean Conservancy, said in a press release.


TOWARDSAI.NET
From Code to Conversation: The Rise of Seamless MLOps-DevOps Fusion in Large Language Models

April 25, 2025
Author(s): Rajarshi Tarafdar
Originally published on Towards AI.

Large language models have pushed artificial intelligence forward quickly, letting software interact with users in something close to natural human conversation. Behind these conversational interfaces sits an operational infrastructure that depends on unifying Machine Learning Operations (MLOps) and Development Operations (DevOps). That fusion fundamentally changes how organizations run conversational AI systems at scale.

The Evolutionary Path to Integration

Organizations began merging MLOps and DevOps when they realized that traditional development methods were inadequate for managing machine learning systems. Software engineering has well-defined methods for version control, testing, and deployment, but machine learning introduced distinct requirements, such as data dependencies, model drift, and heavy computational demands, that needed specialized solutions.

“MLOps emerged to address the complexities of machine learning lifecycle management, drawing inspiration from DevOps principles,” notes a comprehensive industry analysis from Hatchworks. Between 2018 and 2020, frameworks like Kubeflow and MLflow introduced critical capabilities for version control and workflow automation, while major cloud platforms, including AWS SageMaker and Google Cloud AI Platform, began offering scalable ML pipelines.

The emergence of sophisticated large language models accelerated this convergence dramatically. By 2023–2025, LLMs called for even more sophisticated approaches, including hybrid cloud-edge deployments, automated retraining mechanisms, and robust compliance frameworks to address regulations like the EU AI Act.

As Adam McMurchie, AI architect and DevOps specialist, explains: “LLMs represent a quantum leap in complexity compared to traditional ML models, requiring not just different infrastructure but fundamentally different operational approaches that blend the best of MLOps and DevOps philosophies.”

Key Drivers of MLOps-DevOps Convergence

Hyper-Automation in ML Workflows

The sheer complexity of large language models makes automated lifecycle management essential, because manual operation is too difficult. Today’s ML pipelines integrate continuous integration and continuous deployment (CI/CD) systems tailored to machine learning workloads. They combine tools such as GitHub Actions and Jenkins with ML-specific functionality spanning data validation, model training, and deployment monitoring.

For instance, a typical LLM deployment pipeline might include:

    stages:
      - build: Train model using updated data
      - test: Validate performance with pytest
      - deploy: Roll out via Kubernetes

Monitoring for LLM deployments uses platforms such as WhyLabs and Datadog to detect model drift, performance issues, and bias in generated outputs. When performance falls below a specified threshold, these systems trigger automated retraining.

Organizational Transformation: Breaking Down Silos

The technical merger of MLOps and DevOps requires a parallel change in organizational structure. The traditional divisions between data scientists, ML engineers, and DevOps specialists have become barriers to success because they prevent seamless collaboration.
“Organizations are restructuring to break silos between data scientists, ML engineers, and DevOps teams,” reports a study in the International Journal of Scientific Research. This restructuring involves creating cross-functional teams with shared responsibilities and unified toolsets that span the entire model lifecycle.

Shared tools and standardized practices are fundamental to this evolution. Version control software and project management tools such as Jira work in tandem with code repositories, serving as collaborative hubs that connect previously separate teams. This alignment enables faster deployment cycles and more robust deployments, because every team member works from a shared understanding of the LLM system.

Governance and Ethical AI Implementation

AI ethics concerns and regulatory requirements are strong drivers pushing organizations to merge MLOps and DevOps practices. The EU AI Act and the 2023 U.S. Executive Order on AI have recently introduced mandatory transparency and accountability requirements for AI systems.

Organizations have started building governance frameworks into their operations as a core layer. The contemporary MLOps pipeline automatically records data provenance, explains models’ decision-making processes, and produces compliance documentation for regulators. Adding these ethics-oriented capabilities marks a substantial shift from standard DevOps practice, which focuses on performance optimization.

“The integration of compliance checks into automated workflows represents a fundamental shift in how we approach AI development,” notes an analysis in CoreEdge’s industry overview. “It transforms governance from a separate process into an intrinsic part of the development cycle.”

Navigating Integration Challenges

Despite the clear benefits of MLOps-DevOps fusion, organizations face significant challenges in implementing these integrated approaches effectively.

Cultural and Expertise Gaps

One of the most persistent obstacles is the cultural divide between traditional software development teams and data science groups. “Traditional DevOps teams may lack ML expertise, while data scientists often prioritize experimentation over production needs,” observes a comprehensive review by AltexSoft.

This disconnect produces mismatched business goals and success metrics. DevOps teams primarily aim for system stability, security, and deployment speed, while data science teams emphasize model accuracy and innovation. Bridging the gap takes deliberate training, shared performance indicators for both teams, and leadership that values both perspectives equally.

Infrastructure Complexity

LLMs demand specialized hardware that challenges established DevOps strategies. Deploying these models requires accelerators such as GPUs and TPUs, along with infrastructure that spans cloud and edge devices.

“LLMs require specialized hardware and hybrid deployments, complicating scalability,” notes the research from Hatchworks. Complexity grows because deployments must balance computational throughput, low latency, and cost across different deployment locations. Organizations succeed by using cloud-native architectures that let them scale resources dynamically according to demand and deployment requirements.
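To make the idea of scaling resources according to demand concrete, here is a minimal sketch of a proportional scaling rule of the kind used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the choice of metric, and the replica bounds are assumptions made for illustration and do not come from the article. Real autoscalers also apply tolerances and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule: ceil(current_replicas * current / target).

    The metric could be requests per second per replica or GPU utilization;
    which one to use is a deployment decision (hypothetical here).
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a traffic spike cannot
    # request an unbounded number of accelerator-backed replicas.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas each seeing 90 req/s against a 60 req/s target.
    print(desired_replicas(4, 90.0, 60.0))
```

Clamping to a maximum matters more for LLM serving than for ordinary web services, since each extra replica may hold a GPU or TPU.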
The Continuous Training Paradigm

The most fundamental challenge for MLOps-DevOps fusion is that LLMs, unlike traditional software, naturally degrade in performance over time. Real-world data drifts away from the distribution of the training data, a phenomenon known as model drift.

“Unlike static software, LLMs degrade over time, necessitating automated retraining pipelines, a shift from DevOps’ focus on continuous testing,” explains the analysis from AltexSoft and CoreEdge. This reality transforms the traditional DevOps concept of continuous deployment into a more comprehensive continuous learning system, where models are regularly retrained and validated against evolving data.

Best Practices for Successful Integration

Organizations that have successfully implemented MLOps-DevOps fusion for LLMs typically follow several established best practices:

Unified Version Control Approach

Successful implementations employ comprehensive version control strategies that track both code and data dependencies. “Version Control: DVC for data, Git for code,” summarizes the recommended approach from multiple sources. This dual tracking ensures reproducibility of model training and enables teams to pinpoint exactly which data produced which model behaviors, a crucial capability for debugging and compliance.

Comprehensive Monitoring Strategy

Effective LLM operations require monitoring across multiple dimensions. Industry leaders recommend a dual approach: “Monitoring: Prometheus for infrastructure, WhyLabs for model metrics.” This strategy enables teams to correlate infrastructure issues with model performance problems, accelerating troubleshooting and ensuring stable operations.

Edge Computing Integration

As LLMs expand beyond centralized data centers, edge deployment has become increasingly important. “Edge Computing: Deploying lightweight LLMs on IoT devices to reduce latency, enabled by frameworks like TensorFlow Lite,” highlights a key trend in the field. This approach brings conversational AI capabilities directly to end-user devices, reducing latency and addressing privacy concerns by minimizing data transmission.

The Horizon: Future Directions

As MLOps-DevOps fusion continues to mature, several emerging trends are shaping its evolution:

Autonomous MLOps

The next frontier involves self-healing pipelines that can detect, diagnose, and address problems with minimal human intervention. “Autonomous MLOps: Self-healing pipelines that retrain and redeploy models without human intervention,” describes this emerging capability. These systems leverage the power of AI itself to optimize and maintain AI infrastructure, creating a virtuous cycle of improvement.

LLMOps Specialization

Specialized practices known as “LLMOps” have emerged in response to the distinct characteristics of large language models. LLMOps covers optimized practices for tuning foundation models, along with ethical regulation and the administration of massive computational resources.

Sustainable AI Operations

Environmental considerations are increasingly influencing operational decisions. “Sustainable AI: Energy-efficient training methods and carbon footprint tracking tools,” identifies a growing focus area in the field. Organizations are developing sophisticated approaches to measure and minimize the environmental impact of LLM training and inference, responding to both cost pressures and corporate sustainability commitments.

The Journey Ahead

The fusion of MLOps and DevOps is more than a technical change: it is a strategic shift in how AI systems are implemented and deployed. This cross-practice partnership lets organizations deliver powerful conversational AI through process automation, cross-functional collaboration, and ethical oversight built into operations.

Organizations set to lead their markets will treat MLOps-DevOps fusion as an ongoing process rather than a final destination. LLM innovation moves fast, so organizations need operational methods that keep pace and can absorb new model architectures, new deployment patterns, and changes in regulatory compliance.

Moving from code-based interfaces to fluid conversational ones requires parallel changes in how systems are built and operated. End-to-end collaboration between DevOps and MLOps creates a framework that lets AI models conduct meaningful dialogs while upholding essential user and social standards.

Succeeding with LLM deployment means developing an operational mindset that values innovative AI engagement, operational reliability, and ethical stewardship of conversational AI alike. Mastering this fusion is an arduous path, but the organizations that follow it will define the next generation of human-machine interaction.
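As a closing illustration of the threshold-triggered retraining described under Hyper-Automation in ML Workflows and The Continuous Training Paradigm, the following is a minimal sketch of the decision step only. Everything in it is hypothetical: the function name, the idea of comparing a rolling window of evaluation scores against a baseline, and the 0.05 tolerance are assumptions chosen for the example. Monitoring platforms such as WhyLabs expose their own APIs, which are not shown here.

```python
from statistics import mean
from typing import Sequence


def should_retrain(recent_scores: Sequence[float],
                   baseline_score: float,
                   max_drop: float = 0.05) -> bool:
    """Return True when the mean recent score has fallen more than
    max_drop below the baseline measured at deployment time."""
    if not recent_scores:
        # No evaluations yet: there is no evidence of drift to act on.
        return False
    return baseline_score - mean(recent_scores) > max_drop


if __name__ == "__main__":
    # Hypothetical weekly evaluation scores after deployment.
    if should_retrain([0.80, 0.78, 0.79], baseline_score=0.90):
        print("drift detected: start the retraining pipeline")
```

In a pipeline like the build, test, deploy stages shown earlier, a check of this kind would sit in a scheduled job and, when it returns True, start the build stage again with updated data.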


WWW.IGN.COM
The Fantastic Four: First Steps Is Getting a Comic Book Prequel

Marvel Comics may publish a great many stories set across numerous versions of the Marvel Universe, but they've rarely released comics that are specifically set within the Marvel Cinematic Universe. That appears to be changing, with Marvel revealing a prequel comic for the upcoming The Fantastic Four: First Steps.

Simply titled The Fantastic Four: First Steps #1, the prequel is set four years prior to the events of the film. Written by Matt Fraction (Hawkeye) and illustrated by Mark Buckingham (Fables), this standalone issue will recount the FF's debut adventure, seemingly inspired by Stan Lee and Jack Kirby's original Fantastic Four #1. We know that the film is mostly skipping over the FF's origin story, so it appears that the comic will fill in for those who want to know how the team came to be.

Art by Phil Noto. (Image Credit: Marvel)

Here's Marvel's official description for The Fantastic Four: First Steps #1:

It’s the moment that changed the world – presented in the most brilliant medium there is! FANTASTIC FOUR: FIRST STEPS #1 is certain to be a must-have item, both for those who have looked up to this super team since they made themselves known those who might be unaware of the history behind Mister Fantastic, Invisible Woman, Human Torch and The Thing’s incredible rise to global stardom!

Four years ago, the world was transformed as an amazing cosmic-powered quartet revealed themselves and their astonishing abilities to the public! Since that time, they have become world-famous as the Fantastic Four! Now, to celebrate that anniversary, Marvel Comics recounts their very first exploit that saved our city from near destruction!

“What an honor to be asked to help celebrate the fourth anniversary of the Fantastic Four!” Fraction said in a statement. “It was a thrill to bring their first legendary adventure to the world of comic books for the first time! It's a story we all know by heart, but I think Magic Mark Buckingham and I have found a way to tell it as you've never heard or seen before -- and who knows, this could be the start of something big!”

While Fraction's comments are a tongue-in-cheek way of acknowledging that this issue is being presented as an in-universe comic book authorized by Pedro Pascal's Reed Richards and his Future Foundation, we also have to wonder if he's teasing the idea of more MCU comics. Again, apart from a handful of tie-ins to early MCU films like Captain America: The First Avenger and The Avengers, Marvel hasn't published many stories specifically set in the MCU. Could we be getting an Avengers: Doomsday or Spider-Man: Brand New Day prequel next?

The Fantastic Four: First Steps #1 will be released on July 2, 2025, just a few weeks before the movie hits theaters. Let us know in the comments what MCU comics you'd like to read.

For more on The Fantastic Four: First Steps, see why it's a big deal that Vanessa Kirby's Invisible Woman is pregnant and learn why Silver Surfer is a woman in this movie.

Jesse is a mild-mannered staff writer for IGN. Allow him to lend a machete to your intellectual thicket by following @jschedeen on BlueSky.


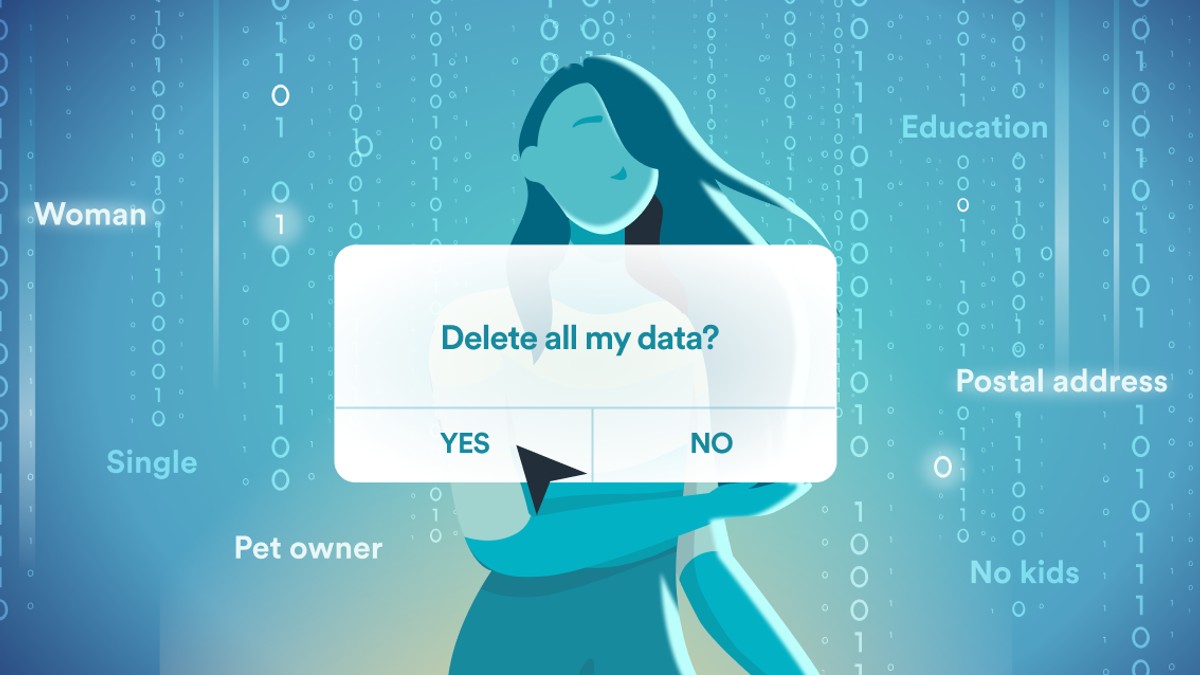

9TO5MAC.COM
Here’s how and why to delete your personal data from the internet

With data brokers making big money by selling your personal details, it’s never been easier for spammers and scammers to get access to your phone number, email address, physical address, and even sensitive data like your social security number. Apple’s privacy protections help, of course, but if you want to get proactive about removing your existing personal data from the internet, it’s never been easier to do so …

Data brokers are companies whose business is buying and selling personal data. While they do grab headlines on rare occasions – as in the case of a major data breach – they mostly operate in the shadows.

Despite occasional talk of lawmakers or regulators intervening, data broking remains a perfectly legal business, taking advantage of the small print we frequently agree to when registering to use a website or buying an app.

This not only makes your contact details available to spammers and scammers, but records of your online activity can also hurt your finances by impacting your credit score. That can affect everything from your eligibility for credit cards and loans to the rates you pay for your health insurance.

The law does allow you to opt out, but manually identifying all of the companies holding your data, and issuing removal requests, is an incredibly labor-intensive task. Fortunately, there’s a much easier solution.

Incogni’s data-erasure service

Instead of you having to contact hundreds of different companies, jumping through whatever hoops they put in your way, you can outsource the work to data-removal service Incogni.

The company behind Surfshark does all the hard work for you, contacting more than 250 data brokers and people search sites on your behalf, and issuing demands for the removal of all of your personal details. No matter whether you are based in the US, Canada, UK, EU, or Switzerland, Incogni will use the relevant laws in each country when issuing its notices.

The results?

Less spam, as fewer companies have your details
Reduced risk of being scammed, as fraudsters often steal details available online
Protection from stalking and harassment, by keeping your personal details private

Subscribers can monitor the process (potential databases found, requests sent, requests completed) on their Incogni dashboard.

50% savings for 9to5Mac readers

9to5Mac readers can keep their data off the market with a 1-year subscription at a 55% discount on both individual and family plans.

Users can choose to add up to four loved ones to their Family & Friends plan. Users can add up to three phone numbers (US-based customers only), three emails, and three physical addresses.

Additionally, Incogni recently launched its Unlimited plan, which the company believes to be a game-changer in the world of data removal services. In addition to all the standard data brokers and people-finder sites, Unlimited subscribers can request custom removals from any website that exposes their personal data (with the exception of social media platforms, government records, blogs, and forums) – and dedicated privacy agents will take care of the rest.

Exclusive reader pricing is:

Monthly, Individual: $16.58
Monthly, Family: $32.98
Annual, Individual: $89.53
Annual, Family: $178.09
Annual, Individual, Unlimited: $161.89
Annual, Family, Unlimited: $323.89

Use the discount code 9TO5MAC at checkout to qualify. Sign up at Incogni’s website.

FTC: We use income earning auto affiliate links.
