
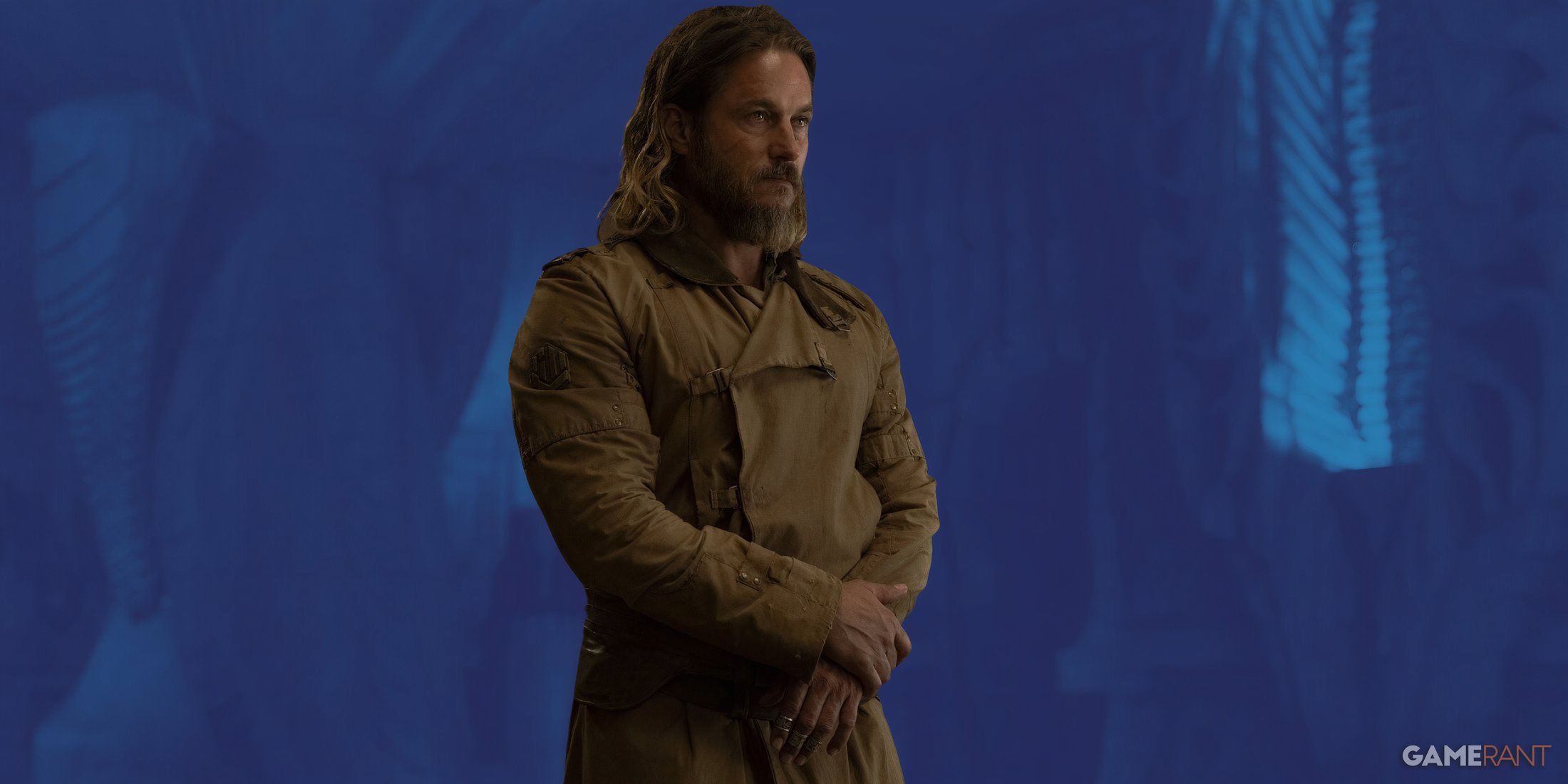

GAMERANT.COM

Dune: Prophecy To Keep The Spice Flowing With A Season 2

As the first season of Dune: Prophecy winds up just ahead of Christmas, its cast, crew and fans won't have to spend the holiday wondering if the story has ended. In fact, they'll be able to ring in the new year knowing that Dune: Prophecy will carry on and air again, possibly in 2025.

GAMEDEV.NET

Create an Indie Game Budget: 10 Challenges and Solutions for Success

I have seen many companies struggle with ineffective cost management, and the solution is often simpler than expected. Implementing the right tool has made a huge difference for us. It streamlined our approval processes, tracked budgets effectively, and simplified payments to vendors. The time savings and reduction in errors have been incredible, and our team has mastered it without much trouble. If you're tired of outdated workflows like in

GAMEDEV.NET

Force OpenGL to use software rendering

I'm trying to write an OpenGL program that needs to use pure software rendering for testing purposes. But I can't find a common way to force OpenGL to use the CPU only, even when it's running on a platform equipped with a GPU. Manually setting a compatibility profile or setting the OpenGL version to an older one (like 1.4) doesn't seem to work. So is there any official or recommended way to achieve that (better if it's cross-platform)? Or a

GAMEDEV.NET

Adding Support for Custom Literal Constants

My recent project needs a fixed-point float type, so it would be nice to have AngelScript support custom literals, e.g. 3.14f32 to define a fixed32 literal constant. I've noticed that some people already suggested this feature in the To-Do list a decade ago. I'm investigating the AngelScript compiler and trying to add something like the user-defined literals of C++ (operator""_suffix). My currently planned solution is to add new behaviors asBEHAVE_LITERAL_CONSTRUCT/F
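
For readers unfamiliar with the C++ feature the post refers to, the following is a minimal sketch of a user-defined literal for a fixed-point value. It is plain C++, not AngelScript, and it says nothing about how the planned AngelScript behaviors would work. The `Fixed32` type, its 16.16 layout, the `_f32` suffix, and the `to_double` helper are all assumptions made for illustration.

```cpp
#include <cstdint>

// Hypothetical 16.16 fixed-point type. The name and layout are illustrative
// assumptions, not taken from the post or from AngelScript.
struct Fixed32 {
    std::int32_t raw;  // stored value = real value * 2^16
};

// C++ user-defined literal: writing `3.14_f32` resolves to a call to this
// operator. Suffixes defined in user code must start with an underscore.
constexpr Fixed32 operator""_f32(long double value) {
    // Scale by 2^16 and truncate toward zero.
    return Fixed32{static_cast<std::int32_t>(value * 65536.0L)};
}

// Hypothetical helper that converts back to floating point for inspection.
constexpr double to_double(Fixed32 f) {
    return static_cast<double>(f.raw) / 65536.0;
}
```

With these definitions, `constexpr Fixed32 half = 0.5_f32;` would give a value whose `raw` field is 32768. The literal operator is an ordinary function selected by the suffix, which seems to be roughly the role the post has in mind for a registered literal-construct behavior.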

BLOGS.NVIDIA.COM

NieR Perfect: GeForce NOW Loops Square Enix's NieR:Automata and NieR Replicant ver.1.22474487139 Into the Cloud

Stuck in a gaming rut? Get out of the loop this GFN Thursday with four new games joining the GeForce NOW library of over 2,000 supported games.

Dive into Square Enix's mind-bending action role-playing games (RPGs) NieR:Automata and NieR Replicant ver.1.22474487139, now streaming in the cloud. Plus, explore HoYoverse's Zenless Zone Zero for an adrenaline-packed adventure, just in time for its 1.4 update.

Check out GeForce Greats, which offers a look back at the biggest and best moments of PC gaming, from the launch of the GeForce 256 graphics card to the modern era. Follow the GeForce, GeForce NOW, NVIDIA Studio and NVIDIA AI PC channels on X, as well as #GeForceGreats, to join in on the nostalgic journey. Plus, participate in the GeForce LAN Missions from the cloud with GeForce NOW starting on Saturday, Jan. 4, for a chance to win in-game rewards, first come, first served.

GeForce NOW members will also be able to launch a virtual stadium for a front-row seat to the CES opening keynote, to be delivered by NVIDIA founder and CEO Jensen Huang on Monday, Jan. 6. Stay tuned to GFN Thursday for more details.

A Tale of Two NieRs

NieR:Automata and NieR Replicant ver.1.22474487139, two captivating action RPGs from Square Enix, delve into profound existential themes and are set in a distant, post-apocalyptic future.

Existence is futile, except in the cloud.

Control androids 2B, 9S and A2 as they battle machine life-forms in a proxy war for human survival in NieR:Automata. The game explores complex philosophical concepts through its multiple endings and perspective shifts, blurring the lines between man and machine. It seamlessly mixes stylish and exhilarating combat with open-world exploration for a diverse gameplay experience.

The hero's journey leads to the cloud.

NieR Replicant ver.1.22474487139, an updated version of the original NieR game, follows a young man's quest to save his sister from a mysterious illness called the Black Scrawl. Uncover dark secrets about their world while encountering a cast of unforgettable characters and making heart-wrenching decisions.

Unravel the layers of the emotionally charged world of NieR with each playthrough on GeForce NOW. Experience rich storytelling and intense combat without high-end hardware. Carefully explore every possible loop with extended gaming sessions for Performance and Ultimate members.

Find Zen in the Cloud

Dive into the Hollows.

Zenless Zone Zero, the free-to-play action role-playing game from HoYoverse, is set in the post-apocalyptic metropolis of New Eridu. Take on the role of a Proxy and guide others through dangerous alternate dimensions to confront an interdimensional threat. The game features a fast-paced, combo-oriented combat system and offers a mix of intense action, character-driven storytelling and exploration of a unique futuristic world.

The title comes to the cloud in time for the version 1.4 update, "A Storm of Falling Stars," bringing additions to the game for new and experienced players alike. Joining the roster of playable characters are Frost Anomaly agent Hoshimi Miyabi and Electric Attack agent Asaba Harumasa. Plus, the revamped Decibel system allows individual characters to collect and use Decibels instead of sharing across the squad, offering a new layer of strategy. Explore two new areas, Port Elpis and Reverb Arena, and try out the new "Hollow Zero-Lost Void" mode.

Experience the adventure on GeForce NOW and dive deeper into New Eridu across devices with a Performance or Ultimate membership. Snag some in-game loot by following the GeForce NOW social channels (X, Facebook, Instagram, Threads) and be on the lookout for a limited-quantity redemption code for a free reward package including 20,000 Dennies, three Official Investigator Logs and three W-Engine Power Supplies.

Fresh Arrivals

Look for the following games available to stream in the cloud this week:

- NieR:Automata (Steam)
- NieR Replicant ver.1.22474487139 (Steam)
- Replikant Chat (Steam)
- Zenless Zone Zero v1.4 (HoYoverse)

What are you planning to play this weekend? Let us know on X or in the comments below.

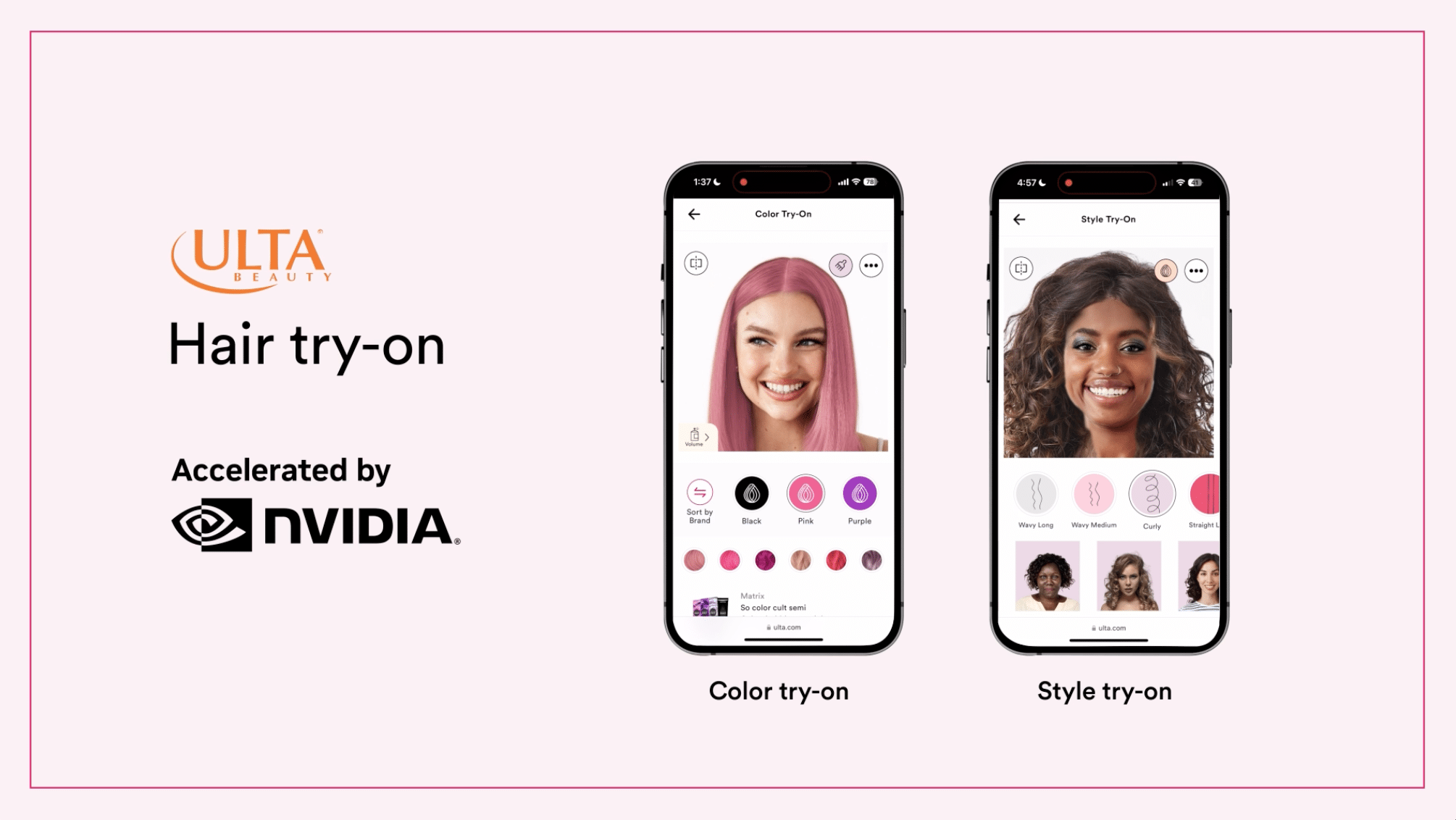

BLOGS.NVIDIA.COM

AI's in Style: Ulta Beauty Helps Shoppers Virtually Try New Hairstyles

Shoppers pondering a new hairstyle can now try styles before committing to curls or a new color. An AI app by Ulta Beauty, the largest specialty beauty retailer in the U.S., uses selfies to show near-instant, highly realistic previews of desired hairstyles.

GLAMlab Hair Try On is a digital experience that lets users take a photo, upload a headshot or use a model's picture to experiment with different hair colors and styles. Used by thousands of web and mobile app users daily, the experience is powered by the NVIDIA StyleGAN2 generative AI model.

Hair color try-ons feature links to Ulta Beauty products so shoppers can achieve the look in real life. The company, which has more than 1,400 stores across the U.S., has found that people who use the virtual tool are more likely to purchase a product than those who don't.

"Shoppers need to try out hair and makeup styles before they purchase," said Juan Cardelino, director of the computer vision and digital innovation department at Ulta Beauty. "As one of the first cosmetics companies to integrate makeup testers in stores, offering try-ons is part of Ulta Beauty's DNA, whether in physical or digital retail environments."

https://blogs.nvidia.com/wp-content/uploads/2024/12/Hair-final-Nvidia-2024.12.18.mp4

Adding Ulta Beauty's Flair to StyleGAN2

GLAMlab is Ulta Beauty's first generative AI application, developed by its digital innovation team.

To build its AI pipeline, the team turned to StyleGAN2, a style-based neural network architecture for generative adversarial networks, aka GANs. StyleGAN2, developed by NVIDIA Research, uses transfer learning to generate infinite images in a variety of styles.

"StyleGAN2 is one of the most well-regarded models in the tech community, and, since the source code was available for experimentation, it was the right choice for our application," Cardelino said. "For our hairstyle try-on use case, we had to license the model for commercial use, retrain it and put guardrails around it to ensure the AI was only modifying pixels related to hair, not distorting any feature of the user's face."

Available on the Ulta Beauty website and mobile app, the hair style and color try-ons rely on NVIDIA Tensor Core GPUs in the cloud to run AI inference, which takes around 5 seconds to compute the first style and about a second each for subsequent styles.

The company next plans to incorporate virtual trials for additional hair categories like wigs and is exploring how the virtual hairstyle try-ons could be connected to in-store styling services.

"Stylists could use the tool to show our guests how certain hairstyles will look on them, giving them more confidence to try new looks," Cardelino said.

Beyond giving customers a new way to interact with Ulta Beauty's products, these AI-powered virtual try-ons give users a chance to be creative and explore new possibilities for their personal styles.

"Hair and makeup are playful categories," Cardelino said. "Virtual try-ons are a way to explore options that may be out of a customer's comfort zone without needing to commit to a physical change."

See the latest work from NVIDIA Research, which has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics.

BLOG.PLAYSTATION.COM

The Holiday Sale promotion comes to PlayStation Store

Undecided on your gaming plans for the holidays? Let PlayStation Store help with a sleigh-full of discounted delights!

We're rounding off 2024 with one of the biggest promotions of the year, featuring numerous games and DLC, all reduced for a limited time*. That includes the likes of EA Sports FC 25, Call of Duty: Black Ops 6, NBA 2K25 and God of War Ragnarök.

And there are many more. So many, in fact, we can't fit the entire list here. Preview a selection of the offers below, then, when the promotion starts on December 20, head to PlayStation Store to find out your regional discount.

- A Plague Tale: Innocence
- A Plague Tale: Requiem
- AEW: Fight Forever
- After the Fall Complete Edition
- Afterimage PS4 & PS5
- Agatha Christie Murder on the Orient Express
- Age of Wonders 4
- Akimbot
- Among Us VR
- Angry Birds VR: Isle of Pigs
- Anno 1800 Console Edition Standard
- Another Crab's Treasure
- Arizona Sunshine 2
- Arizona Sunshine Remake
- ARK: Survival Ascended
- ARMORED CORE VI FIRES OF RUBICON Deluxe Edition PS4&PS5
- ARMORED CORE VI FIRES OF RUBICON PS4&PS5
- Arranger: A Role-Puzzling Adventure
- Assassin's Creed Mirage & Assassin's Creed Valhalla Bundle
- Assassin's Creed Mirage Master Assassin Edition
- Assassin's Creed Valhalla
- Assassin's Creed Valhalla Complete Edition
- Assassin's Creed Mirage
- Assetto Corsa Competizione
- ASTRO BOT
- ASTRO BOT Digital Deluxe Edition
- Atari 50: The Anniversary Celebration
- Atelier Ryza 3: Alchemist of the End & the Secret Key (PS4 & PS5)
- Atelier Ryza 3: Alchemist of the End & the Secret Key Ultimate Edition (PS4 & PS5)
- Atlas Fallen: Reign of Sand
- Atomic Heart Premium Edition (PS4 & PS5)
- Atomic Heart (PS4 & PS5)
- Avatar The Last Airbender: Quest for Balance
- Avatar: Frontiers of Pandora
- Avatar: Frontiers of Pandora Ultimate Edition
- Back 4 Blood: Standard Edition PS4 & PS5
- Back 4 Blood: Ultimate Edition PS4 & PS5
- Baldur's Gate 3
- Baldur's Gate 3 Digital Deluxe Edition
- Banishers: Ghosts of New Eden
- Barbie Project Friendship
- Bassmaster Fishing PS4 and PS5
- Battlefield 2042 PS5
- Beyond Galaxyland (PS4 & PS5)
- Beyond Good & Evil 20th Anniversary Edition
- Bleak Faith: Forsaken
- BloodRayne 2: ReVamped
- Bloomtown: A Different Story
- Bluey: The Videogame
- Borderlands 3 PS4 & PS5
- Borderlands 3: Super Deluxe Edition PS4 & PS5
- Borderlands Collection: Pandora's Box
- Braid, Anniversary Edition
- Bramble: The Mountain King
- Bratz: Flaunt Your Fashion
- Breachers
- Brotato PS4 & PS5
- Brothers: A Tale of Two Sons Remake
- Call of Duty: Black Ops 6 Cross-Gen Bundle
- Call of Duty: Black Ops 6 Vault Edition
- Call of Duty: Vanguard Cross-Gen Bundle
- Caravan SandWitch
- CARRION
- CATAN Console Edition Super Deluxe
- Chernobylite Complete Edition
- Chivalry 2 King's Edition PS4 & PS5
- Cities: Skylines Remastered
- Clock Tower: Rewind
- COCOON
- CONSCRIPT Digital Deluxe Edition
- Construction Simulator Gold Edition
- Contra: Operation Galuga PS4 & PS5
- Core Keeper
- Creature Lab
- Cricket 24
- Crime Boss: Rockay City
- CRISIS CORE -FINAL FANTASY VII- REUNION DIGITAL DELUXE EDITION PS4 & PS5
- CRISIS CORE -FINAL FANTASY VII- REUNION PS4 & PS5
- Crown Wars Sacred Edition
- Crusader Kings III: Royal Edition
- Cult of the Lamb
- Cult of the Lamb: Unholy Edition
- CYGNI: All Guns Blazing
- Darkwood
- Daymare: 1994 Sandcastle
- Dead by Daylight PS4 & PS5
- Dead Island 2
- Dead Rising Deluxe Remaster
- Dead Space
- DEATHLOOP Deluxe Edition
- Deep Rock Galactic PS4 & PS5
- Demeo
- Demon Slayer -Kimetsu no Yaiba- The Hinokami Chronicles PS4 & PS5
- Demon Slayer: Kimetsu no Yaiba The Hinokami Chronicles Ultimate Edition
- Destroy All Humans! 2 Reprobed: Dressed to Skill Edition
- Devil May Cry 5 Special Edition
- Diablo IV Expansion Bundle
- Diablo IV Standard Edition
- Double Dragon Gaiden: Rise of the Dragons
- Dragon Age: The Veilguard
- DRAGON BALL FighterZ PS4 & PS5
- DRAGON BALL XENOVERSE 2
- DRAGON BALL Z: KAKAROT
- DRAGON BALL Z: KAKAROT Legendary Edition
- Dragon's Dogma 2 Deluxe Edition
- DREDGE Digital Deluxe Edition
- Dungeons 4
- Dying Light 2 Stay Human PS4&PS5
- DYNASTY WARRIORS 9 Empires
- DYNASTY WARRIORS 9 Empires Deluxe Edition
- DYSMANTLE
- EA SPORTS FC 25 Standard Edition PS4 & PS5
- EA SPORTS College Football 25
- EA SPORTS Madden NFL 25
- EA SPORTS MVP Bundle (Madden NFL 25 Deluxe Edition & College Football 25 Deluxe Edition)
- EA SPORTS PGA TOUR
- EA SPORTS WRC 24
- EARTH DEFENSE FORCE 6 DELUXE EDITION PS4 & PS5
- EARTH DEFENSE FORCE: WORLD BROTHERS 2 PS4 & PS5
- Eiyuden Chronicle: Hundred Heroes
- Empire of the Ants
- Enotria: The Last Song Deluxe edition
- Escape Academy
- Evil West
- Exoprimal PS4 & PS5
- Expeditions: A MudRunner Game Supreme Edition (PS4 & PS5)
- Expeditions: A MudRunner Game (PS4 & PS5)
- Fabledom
- Fallout 4
- Far Cry 6 Game of the Year Edition
- FAR CRY 6 Standard Edition PS4 & PS5
- FATAL FRAME: Maiden of Black Water PS4 & PS5
- FATAL FRAME: Mask of the Lunar Eclipse (PS4 & PS5)
- Fate/Samurai Remnant Digital Deluxe Edition (PS4 & PS5)
- Fate/Samurai Remnant (PS4 & PS5)
- Fernbus Coach Simulator
- FINAL FANTASY VII REBIRTH
- FINAL FANTASY VII REBIRTH Digital Deluxe Edition
- FINAL FANTASY VII REMAKE & REBIRTH Twin Pack
- FINAL FANTASY VII REMAKE INTERGRADE
- FINAL FANTASY VII REMAKE INTERGRADE Digital Deluxe Edition
- FINAL FANTASY XVI
- FINAL FANTASY XVI COMPLETE EDITION
- First Responder Simulation Bundle: Police Firefighting
- Forspoken
- Fort Solis
- Funko Fusion
- Ghostbusters: Spirits Unleashed Ecto Edition
- Ghostrunner
- Ghostrunner 2
- Ghostrunner 2 Brutal Edition
- Ghostwire: Tokyo Deluxe Edition
- Gloomhaven Gold Edition
- Goat Simulator 3
- God of War Ragnarök
- Gotham Knights
- Granblue Fantasy Versus: Rising Deluxe Edition
- Granblue Fantasy Versus: Rising Standard Edition
- Granblue Fantasy: Relink Digital Deluxe Edition
- Granblue Fantasy: Relink Special Edition
- Granblue Fantasy: Relink Standard Edition
- Grand Rush VR Highway Car Traffic Racing Simulator
- Grand Theft Auto V (PlayStation 5)
- Grand Theft Auto: The Trilogy The Definitive Edition (PS5 & PS4)
- GreedFall Standard Edition
- Greyhill Incident
- Grocery Market Simulator Manager
- Grounded PS4 & PS5
- Guilty Gear -Strive-
- Gun Club VR
- GUNDAM BREAKER 4 PS4 & PS5
- GUNDAM BREAKER 4 Ultimate Edition PS4 & PS5
- GYLT
- Harold Halibut
- Harry Potter: Quidditch Champions Deluxe Edition PS4 & PS5
- Haven
- Hazelight Bundle
- Hell Let Loose
- HELLDIVERS 2
- Hellsweeper VR
- High On Life
- HITMAN World of Assassination Deluxe Edition
- Hogwarts Legacy + Harry Potter: Quidditch Champions Deluxe Editions Bundle
- Hogwarts Legacy PS5 Version
- Home Safety Hotline
- Horizon Forbidden West
- HOT WHEELS UNLEASHED 2 Turbocharged Deluxe Edition PS4 & PS5
- HOT WHEELS UNLEASHED 2 Turbocharged Legendary Edition PS4 & PS5
- HOT WHEELS UNLEASHED 2 Turbocharged PS4 & PS5
- Hotel Renovator Five Star Edition
- HUMANKIND Heritage Edition PS4 & PS5
- Hunt: Showdown 1896 Premium Edition
- Hunt: Showdown 1896 Starter Edition
- Hunting Simulator 2 Elite Edition
- IMMORTALS FENYX RISING GOLD EDITION PS4 & PS5
- Insurgency: Sandstorm Gold Edition [PS4 & PS5]
- Insurgency: Sandstorm [PS4 & PS5]
- Into the Radius
- Iron Harvest Complete Edition
- Isonzo
- JoJo's Bizarre Adventure: All-Star Battle R Deluxe Edition PS4 & PS5
- Jujutsu Kaisen Cursed Clash PS4 & PS5
- Jujutsu Kaisen Cursed Clash Ultimate Edition PS4 & PS5
- Jurassic World Aftermath Collection PS4 & PS5
- Jusant
- Just Dance 2025 Edition
- Just Dance 2025 Ultimate Edition
- KeyWe
- Kingdoms and Castles
- Kong: Survivor Instinct
- Kunitsu-Gami: Path of the Goddess PS4 & PS5
- Lake PS4 & PS5
- Lakeview Cabin Collection
- LEGO Harry Potter Collection
- LEGO 2K Drive Cross-Gen Standard Edition
- LEGO Horizon Adventures
- LEGO Horizon Adventures Digital Deluxe Edition
- LEGO Star Wars: The Skywalker Saga Galactic Edition
- LEGO Star Wars: The Skywalker Saga PS4 & PS5
- Lies of P
- Life is Strange: Double Exposure
- Life is Strange: Double Exposure Ultimate Edition
- Life is Strange: True Colors Deluxe Edition PS4 & PS5
- Like a Dragon: Infinite Wealth PS4 & PS5
- Like a Dragon: Ishin! Digital Deluxe Edition PS4 & PS5
- Little Nightmares II PS4 & PS5
- Lords of the Fallen Deluxe Edition
- MADiSON
- MADiSON VR
- Maneater Apex Edition
- Marvel's Guardians of the Galaxy PS4 & PS5
- Marvel's Midnight Suns Enhanced Edition
- Marvel's Midnight Suns Legendary Edition for PS5
- Marvel's Spider-Man 2
- Marvel's Spider-Man 2 Digital Deluxe Edition
- Marvel's Spider-Man Remastered
- Medieval Dynasty
- MEGATON MUSASHI W: WIRED Deluxe Edition
- MEGATON MUSASHI W: WIRED Standard Edition
- METAL GEAR SOLID Master Collection Version PS4 & PS5
- METAL GEAR SOLID 2: Sons of Liberty Master Collection Version PS4 & PS5
- Metaphor: ReFantazio PS4 & PS5
- Metro Awakening
- Metro Exodus
- Minecraft Legends
- MLB The Show 24 Digital Deluxe Edition PS5 and PS4
- MLB The Show 24 PS5
- MONOPOLY
- Monster Energy Supercross The Official Videogame 6 PS4 & PS5
- Monster Hunter Rise + Sunbreak
- Monster Hunter Rise PS4 & PS5
- Monster Jam Showdown Big Air Edition PS4 & PS5
- Monster Jam Showdown PS4 & PS5
- Morbid: The Lords of Ire
- MORDHAU
- Mortal Kombat 11
- Mortal Kombat 1
- Mortal Kombat 1: Khaos Reigns Kollection
- Moss
- MotoGP 24 PS4 & PS5
- Mount & Blade II: Bannerlord
- Moving Out 2
- Mushoku Tensei: Jobless Reincarnation Quest of Memories
- MX vs ATV Legends 2024 Monster Energy Supercross Edition
- MXGP 2021 The Official Motocross Videogame
- MY LITTLE PONY: A Maretime Bay Adventure
- My Little Pony: A Zephyr Heights Mystery
- NARUTO X BORUTO Ultimate Ninja STORM CONNECTIONS Deluxe Edition PS4 & PS5
- NBA 2K25 All-Star Edition
- NBA 2K25 Standard Edition
- Need for Speed Unbound
- Need for Speed Unbound Ultimate Collection
- Neon White
- NEW WORLD: AETERNUM
- New World: Aeternum Deluxe Edition
- NHL 25 Standard Edition
- NHRA Championship Drag Racing: Speed For All
- Nickelodeon All-Star Brawl 2 Ultimate Edition
- Nickelodeon Kart Racers 3: Slime Speedway Turbo Edition
- Nikoderiko: The Magical World
- No Man's Sky PS4 & PS5
- Nobody Wants to Die
- OCTOPATH TRAVELER II PS4&PS5
- OCTOPATH TRAVELER PS4&PS5
- ON THE ROAD The Truck Simulator
- ONE PIECE ODYSSEY Deluxe Edition PS4 & PS5
- Outcast A New Beginning
- Outer Wilds
- Outer Wilds: Archaeologist Edition
- Outward Definitive Edition
- Overcooked! All You Can Eat
- Pacific Drive: Deluxe Edition
- PAC-MAN WORLD Re-PAC PS4 & PS5
- Paleo Pines PS4 & PS5
- Palworld
- PAW Patrol World
- PAW Patrol: Grand Prix
- PAYDAY 3
- PAYDAY 3: Year 1 Edition
- Persona 3 Reload Digital Deluxe Edition PS4 & PS5
- Persona 5 Tactica PS4 & PS5
- PGA 2K23 Cross-Gen Edition
- PGA TOUR 2K23 Tiger Woods Edition
- Phasmophobia
- Pistol Whip PS4 & PS5
- Planet Coaster 2
- Planet of Lana
- Pneumata
- Police Simulator: Patrol Officers: Gold Edition
- Potion Craft: Alchemist Simulator
- Potion Permit
- PowerWash Simulator
- Prince of Persia The Lost Crown
- Project Wingman: Frontline 59
- Propagation: Paradise Hotel
- Quake II
- Railway Empire 2
- Rain World
- Red Matter Collection
- Remnant II Deluxe Edition
- RESIDENT EVIL 2
- RESIDENT EVIL 3
- Resident Evil 4 Gold Edition PS4 & PS5
- RESIDENT EVIL 7 biohazard
- Resident Evil 7 Gold Edition & Village Gold Edition PS4 & PS5
- Resident Evil Village PS4 & PS5
- Rewind or Die
- REYNATIS Digital Deluxe Edition
- Rez Infinite
- RIDE 5
- RIDE 5 Special Edition
- Riders Republic PS4 & PS5
- Riders Republic Complete Edition
- Risk of Rain 2
- RoboCop: Rogue City
- Rogue Legacy 2
- RollerCoaster Tycoon Adventures Deluxe
- Romancing SaGa 2: Revenge of the Seven PS4&PS5
- Rugby 22
- SaGa Emerald Beyond PS4&PS5
- Saints Row PS4&PS5
- Saints Row: The Third Remastered
- SCARLET NEXUS Ultimate Edition PS4 & PS5
- Scars Above
- Scorn
- Sea of Stars
- Sea of Thieves: Deluxe Edition
- Sea of Thieves: Premium Edition
- Shadows of Doubt
- Shredders
- Shredders 540INDY Edition
- Sifu
- SILENT HILL 2
- Sker Ritual
- Sker Ritual: Digital Deluxe Edition
- SKULL AND BONES
- Slime Rancher 2
- Slitterhead
- Smalland: Survive the Wilds
- Sniper Ghost Warrior Contracts 2
- SnowRunner 2-Year Anniversary Edition
- SnowRunner 4-Year Anniversary Edition
- Son and Bone
- Sonic Frontiers Digital Deluxe Edition PS4 & PS5
- SONIC SUPERSTARS Digital Deluxe Edition featuring LEGO PS4 & PS5
- SONIC X SHADOW GENERATIONS Digital Deluxe Edition PS4 & PS5
- SOUTH PARK: SNOW DAY!
- SpongeBob SquarePants: The Patrick Star Game
- SPY×ANYA: Operation Memories Deluxe Edition PS4 & PS5
- Squirrel with a Gun
- STAR OCEAN THE SECOND STORY R PS4 & PS5
- STAR WARS Jedi: Fallen Order
- Star Wars Outlaws
- Star Wars Outlaws Ultimate Edition
- STAR WARS Battlefront Classic Collection
- STAR WARS: Dark Forces Remaster
- Stay Out of the House PS4 & PS5
- SteamWorld Heist II
- STRANGER OF PARADISE FINAL FANTASY ORIGIN Digital Deluxe Edition PS4 & PS5
- Street Fighter 6
- Street Fighter 6 Ultimate Edition
- Street Outlaws 2: Winner Takes All
- STRIDE: Fates
- SunnySide
- Survival: Fountain of Youth Captain's Edition
- Sword and Fairy: Together Forever PS4 & PS5
- SWORD ART ONLINE Fractured Daydream
- SWORD ART ONLINE Fractured Daydream Premium Edition
- SWORD ART ONLINE Last Recollection PS4 & PS5
- Swordsman VR
- Synapse
- System Shock
- Tactics Ogre: Reborn
- Tales of Arise Beyond the Dawn Ultimate Edition PS4 & PS5
- Taxi Life Supporter Edition
- Teenage Mutant Ninja Turtles: Mutants Unleashed Digital Deluxe Edition
- Teenage Mutant Ninja Turtles: Shredder's Revenge
- TEKKEN 8
- TEKKEN 8 Ultimate Edition
- Terminator: Resistance Enhanced
- Test Drive Unlimited Solar Crown Gold Edition
- Test Drive Unlimited Solar Crown Silver Streets Edition
- Tetris Effect: Connected
- Tetris Forever
- That Time I Got Reincarnated as a Slime ISEKAI Chronicles
- The 7th Guest VR
- The Casting of Frank Stone
- The Crew Motorfest Cross-Gen Bundle
- The Crew Motorfest Ultimate Edition
- The Dark Pictures Anthology: House of Ashes PS4 & PS5
- The Dark Pictures Anthology: The Devil in Me PS4 & PS5
- The Dark Pictures: Switchback VR
- The Elder Scrolls Online
- The Elder Scrolls V: Skyrim Anniversary Edition PS5 & PS4
- The Grinch: Christmas Adventures
- The Karate Kid: Street Rumble
- THE KING OF FIGHTERS XV Standard Edition PS4 & PS5
- The Last Stand: Aftermath
- The Legend of Heroes: Trails into Reverie
- The Legend of Heroes: Trails into Reverie Ultimate Edition
- The Outer Worlds: Spacer's Choice Edition
- The Pathless PS4 & PS5
- The Quarry for PS5
- The Room VR: A Dark Matter
- The Sinking City PS5 Deluxe Edition
- The Smurfs Dreams PS4 & PS5
- The Smurfs Village Party
- The Texas Chain Saw Massacre
- The Walking Dead: Destinies
- This Bed We Made
- TIEBREAK Ace Edition
- TimeSplitters
- TimeSplitters 2
- TimeSplitters: Future Perfect
- Tiny Tina's Wonderlands: Chaotic Great Edition
- Tom Clancy's Rainbow Six Siege Operator Edition
- Tom Clancy's Rainbow Six Siege Ultimate Edition
- Tomb Raider I-III Remastered Starring Lara Croft
- TopSpin 2K25 Cross-Gen Digital Edition
- TopSpin 2K25 Grand Slam Edition
- Towers of Aghasba
- Townsmen VR
- Trailmakers
- Train Sim World 5: Standard Edition PS4 & PS5
- Transformers Beyond Reality
- TRANSFORMERS: Galactic Trials
- Trepang2
- Tropico 6 Next Gen Edition
- Truck and Logistics Simulator
- Twelve Minutes
- Two Point Campus
- UFC 5
- UNDER NIGHT IN-BIRTH II Sys:Celes
- Undisputed
- Unknown 9: Awakening
- UNO
- Until Then
- VALKYRIE ELYSIUM PS4&PS5
- VALKYRIE PROFILE: LENNETH
- Vampire Hunters PS4 & PS5
- Vampire: The Masquerade Justice
- VENDETTA FOREVER
- Vertigo 2
- Viewfinder
- VISAGE
- Visions of Mana Digital Deluxe Edition PS4 & PS5
- Visions of Mana PS4 & PS5
- Waltz of the Wizard
- Warhammer 40,000: Boltgun (PS4 & PS5)
- Warhammer 40,000: Chaos Gate Daemonhunters PS4 & PS5
- Warhammer 40,000: Rogue Trader
- Warhammer 40,000: Rogue Trader Voidfarer Edition
- Warhammer 40,000: Space Marine 2
- Warhammer 40,000: Space Marine 2 Ultra Edition
- Warhammer Age of Sigmar: Realms of Ruin Ultimate Edition
- Watch Dogs: Legion Ultimate Edition PS4 & PS5
- Watch Dogs: Legion PS4 & PS5
- Wayfinder
- We Love Katamari REROLL+ Royal Reverie Special Edition PS4 & PS5
- Welcome to ParadiZe
- Welcome to ParadiZe Zombot Edition
- What Remains of Edith Finch
- White Day 2: The Flower That Tells Lies Complete Edition
- Who Wants to Be a Millionaire? New Edition PS5
- WILD HEARTS Karakuri Edition
- Withering Rooms
- Wizardry: Proving Grounds of the Mad Overlord
- Wo Long: Fallen Dynasty (PS4 & PS5)
- Wo Long: Fallen Dynasty Complete Edition (PS4 & PS5)
- Worms Armageddon: Anniversary Edition
- WWE 2K24 40 Years of WrestleMania Edition
- WWE 2K24 Bray Wyatt Edition
- WWE 2K24 Cross-Gen Digital Edition
- XIII
- Yakuza: Like a Dragon Legendary Hero Edition PS4 & PS5
- Yakuza: Like a Dragon PS4 & PS5
- Ys IX: Monstrum Nox
- Ys VIII: Lacrimosa of DANA
- Ys X: Nordics Digital Deluxe Edition
- Ys X: Nordics Digital Ultimate Edition

*The Holiday Sale promotion runs from Friday, December 20 12:00 AM PST/GMT/JST until Friday, January 17 11:59 PM PST/GMT/JST. Some titles in the promotion are only discounted until January 6. Please check the individual product pages on PlayStation Store for more information.


WWW.POLYGON.COM
Check Cyberpunk 2077's latest patch for a secret TTRPG surprise

In a secret pre-holiday update to Cyberpunk 2077, developer CD Projekt Red added a little tabletop-shaped gift. A spokesperson from Cyberpunk publisher R. Talsorian Games told Polygon that anyone who owns Cyberpunk 2077 and can access their Bonus Content folder can now find Cyberpunk RED: Easy Mode in there, for free, of course.

Without announcement, Cyberpunk 2077's update 2.2 quietly added the quick start guide to the tabletop role-playing game. Easy Mode has abridged rules and an introductory mission to quickly onboard new players to using dice pools instead of controllers and keyboards. Players can act as one of five pre-generated characters in the quick start: the charismatic Rockerboy, the lethal Solo, the inventive Tech, the lifesaving Medtech, and the hard-hitting Media. Though the guide includes a brief dive into the history and landscape of Night City, fans of the video game should feel intimately familiar with the prequel world of Cyberpunk RED.

Though the preview was released at Gen Con in 2019, the full version of Cyberpunk RED was originally released alongside Cyberpunk 2077 in 2020. The tabletop game is the latest edition of the original Cyberpunk 2020 RPG (which was released in 1989). The franchise explores the gritty science fiction of an end-stage capitalist technofuture where, according to the game's description, "corporations control the world from their skyscraper fortresses." Perhaps a little on the nose. Set in 2045, Cyberpunk RED takes place midway between the events of Cyberpunk 2020 and Cyberpunk 2077.

Polygon has confirmed the gift is available to PC users. To check for your copy of the PDF on PC, navigate to C:\Program Files (x86)\Steam\steamapps\music\Cyberpunk 2077 Bonus Content. Polygon has not been able to verify how to get the PDF on Xbox or PlayStation, though the spokesperson said it should have been confirmed for those platforms as well.

If you catch the bug and want more Cyberpunk TTRPGs, the bonus content available on Steam also includes the original Cyberpunk 2020 core rulebook. The full Cyberpunk RED core rulebook is available at R. Talsorian Games for $60.


WWW.POLYGON.COM
The next World of Warcraft patch is a neon-soaked goblin paradise

World of Warcraft is renowned for its expansive set of locations, from barren deserts to lush forests, but some of the most interesting zones are densely inhabited, like Suramar or Tiragarde Sound. The first major patch for The War Within expansion adds another such area with Undermine, the goblin capital and a massive urban center that's not quite like anything else seen on Azeroth.

On Thursday, Blizzard released a video of Undermine detailing what to expect from the goblin capital. The goblins have been underserved by World of Warcraft; since their introduction all the way back in 2010's Cataclysm, they've mostly served as goofy comic relief for the Horde. The goblin starting zone in Cataclysm is similarly advanced, with cars, highways, and even a personal assistant for the player, but it was destroyed by the emergence of Deathwing.

Some of the goblin collectives in Patch 11.1 (which is cleverly titled Undermined) have been enemies in past patches. Luckily, they're interested in working with the heroes of Azeroth, and they won't hold past transgressions against you, so if you're the kind of player who slaughtered guards in Booty Bay and racked up a terrible reputation with the Blackwater cartel, Baron Revilgaz won't be hostile towards you.

Undermined includes a car, which you can upgrade by collaborating with the goblin cartels. Blizzard is introducing the D.R.I.V.E. (Dynamic Revolutionary Improvements to Vehicular Experiences) mechanic alongside these goblin cars; they're much faster than a ground mount and even a little bit faster than a flying mount in the air. For anyone who wishes World of Warcraft had more drifting, this is the patch for you.

Undermined will also include new Delves (one of which has an alarming number of Void constructs hidden away), a new Arena, and a new dungeon called Operation Floodgate. Raiders can look forward to the Liberation of Undermine, an eight-boss raid. Here, players finally get to beat up Gallywix, the ultra-capitalist goblin who betrayed his people during the Cataclysm yet somehow managed to fail upward to leading the faction. Gallywix is, true to form, inside a giant mech that has been built to look like his face.

Blizzard hasn't announced a release date for Undermined quite yet, but it looks like an interesting spin on The War Within's zones so far. The goblin antics will tie back into the greater plot with Xal'atath, so there will be some interesting mysteries in store.
