WWW.TECHRADAR.COM
Quordle today: my hints and answers for Monday, December 30 (game #1071)
Looking for Quordle clues? We can help. Plus get the answers to Quordle today and past solutions.

BEFORESANDAFTERS.COM
Here: A test with Tom Hanks

The de-ageing Pepsi challenge. An excerpt from issue #24 of befores & afters magazine in print.

Ultimately, there would be 53 character minutes of full-face replacement in Here between the four key actors: Tom Hanks, Robin Wright, Paul Bettany and Kelly Reilly. This was a significant amount of screen time. Shots were also often long (up to four minutes), and the de-aged periods spanned close to 40 years. "It was obvious that there was no way we could use traditional CGI methods to do this," suggests visual effects supervisor Kevin Baillie. "It was not going to be economical and it was not going to be fast enough, and it also risked us falling into the Uncanny Valley. There was just no way we could keep that level of quality up to be believable and relatable throughout the entire movie using CG methods.

"We also didn't want to bring tons of witness cameras and head-mounted cameras and all these other kinds of technologies onto set," adds Baillie. "That sent us down the road of AI machine learning-based approaches, which were just becoming viable to use in feature film production."

[Image: Test footage of a de-aged Tom Hanks by Metaphysic, which aided in green-lighting the film.]

With that in mind, the production devised a test in November 2022 featuring Hanks. The actor (who is now in his late 60s) was filmed performing a planned scene from the film in a mocked-up version of the set at Panavision in Thousand Oaks. "We had a handful of companies, and we did a paid de-ageing test across all these companies," outlines Baillie. "One test was to turn him into a 25-year-old Tom Hanks just to see if the tech could even work. At the same time, we also hired a couple of doubles to come in and redo the performance that he'd done to see if we could use doubles to avoid having to de-age arms and necks and ears."

Metaphysic won, as Baillie describes it, the "Pepsi Challenge" on that test.
"When we saw the results we said, 'Oh my gosh, that looks like Tom Hanks from Big.' What also became clear in that test was that the concept of using doubles to save us some work on arms and hands and neck and ears and things was never going to work. Even though they were acting to Tom Hanks' voice and Tom was there helping to give them direction, it just was clear that it wasn't Tom. It wasn't the soul of the actor that was there. I actually think this will help to make people a little more comfortable with some of the AI tools that are coming out. They just don't work without the soul of the performer behind them. That's why it was key for us to have Tom and Robin and Paul Bettany and Kelly Reilly; they're driving every single one of the character performance moments that you see on screen."

Metaphysic's approach to de-ageing

The key to de-ageing characters played by the likes of Tom Hanks and Robin Wright with machine learning techniques (Metaphysic relies on its bespoke process known as the Neural Performance Toolset) was data. Ostensibly this came from prior performances on film, interviews, family photographs, photos from premieres and press images. "It's based upon photographic reference that goes into these neural models that we train," outlines Metaphysic visual effects supervisor Jo Plaete. "In the case of Tom Hanks, for example, we get a body of movies of him where he appears in those age ranges, and ingest it into our system. We have a toolset that extracts Tom from these movies and then preps and tailors all that, those facial expressions, and all these lighting conditions, and all these poses, into what we call a dataset, which then gets handed over to our visual data scientists."

[Images: Hanks de-aged as visualized live on set; the raw camera feed; the final shot.]

"I make the analogy," continues Plaete, "that where you used to build the asset through modeling and all these steps, in our machine learning world, the asset built is a visual data science process.
It's important to note that, ultimately, the artistic outcome requires an artist to sit down and do that process. It's just a different set of tools. It's more about curation of data: how long do you expose it to this neural network, what kind of parameters and at what stage do you dial in? It's like a physics simulation."

Metaphysic's workflow involved honing the neural network to deliver the de-aged characters at various ages. "There is an element of training branches of that network where you start to hone in onto subsections of that dataset to get, say, Tom Hanks at 18, at 30, at 45," says Plaete. "Eventually, also, we had some networks that aged Robin into her 80s, which was a slightly different approach even though it's the same type of network. At the same time, we have our machine learning engineers come in and tweak the architectures of the neural networks themselves."

Such tweaking is necessary owing to, as Plaete calls it, "identity leak". "You get Tom's brother or cousin coming out, instead. Everybody knows Tom extremely well, so you want to hit that likeness 100%. So we have that tight collaboration from the machine learning engineers with the visual data scientists and artists to bring them together. They tweak the architecture, they tweak the dataset and the training loops. Together, we review the outputs, and ultimately, at the end of the day, we are striving for the best looking network. But rather than hitting render on a 3D model, we hit inference on a neural net, and that's what comes out and goes into compositing."

On set, Metaphysic carried out a few short data shoots with the actors it would be de-ageing (and up-ageing) in the lighting of the scene. "That just involved capturing the faces of the actors and perhaps having a little bit of extra poses and expression range to help our networks learn how a face behaves and presents itself within that lighting scenario," explains Plaete.
"Ultimately, we have a very low footprint."

De-aged, live

The de-ageing effects were not carried out in isolation. "Bob was very excited to involve the actors in reviewing the de-aged faces," recounts Baillie. "I'd show them, 'Okay, here's you at 25, what do you think?' They had a hand in sculpting what their likeness was like. I remember in particular Robin, when I first showed footage of her de-aged to 25 (and the plate for this was I just sat with her and had a conversation for a couple of minutes and we filmed it on a RED and then went away and a month later I showed her), that it was really emotional for her. She said, 'I've been thinking about how to bring the innocence of my youth back into my eyes, back into my expression, and suffering over that. This helped me do it.' That's all that the AI knows, is that innocence of her youth. For her, I think there was just this moment of realization that this can help her get back there."

[Images: Part of Metaphysic's workflow is feature recognition, which detects the actors' physiology in basic outlines; the final shot.]

Indeed, this helped drive an effort on set for a preview of the de-ageing to be available to the cast and crew. "That helped us to make sure that the body performance of the actor matched the intended age of the character at that time," says Baillie. "It's very hard to judge that if you're not seeing it. Every time Bob would call cut, Tom would run back around behind the monitors and watch himself and be like, 'Oh, I need to straighten up a little bit more. Oh, I was shuffling a little bit, or maybe I was overacting my youth in that one.' It became a tool for the actors that they were able to use, and Bob was able to use it, and even our hair and makeup team and costume design team were all able to use it."

Metaphysic already had an on-set preview system in the works before Here, but ramped it up on the production when Baillie asked the studio if it could be done.
"I think a week or two later," recalls Plaete, "we hopped on a Zoom call and we had a test live face swap running into the Zoom to show him an example. Kevin said, 'Yeah, we should do it.'"

"The real-time system worked using only the main camera feed, without any special witness cameras or other gear," notes Baillie. "It was just literally one SDI video feed running off of the camera, out a hole in the side of our stage into a little porta-cabin that they'd set up next to the set. That's where all the loud, hot GPUs were sitting, and Metaphysic had a small team of four people that were in that cabin."

"The real-time budget was about 100 or 200 milliseconds," adds Plaete. "We needed to take all our normal steps, optimize them, and hook them together as fast as we could. That was a bit of a hackathon, as you can imagine. But ultimately, it meant training models that were lower resolution. Still, the inference would run fast. I mean, the inference of these models is fast anyway. The high resolution model will still take a second to pop out the frame, which is crazy different from the 25 hours of ray tracing that we come from [in visual effects]."

The team had built a toolset that carried out a computer vision-like identity recognition pass so that the de-ageing could occur on the right actor. "Those recognitions would hand over their results to the face swapper," details Plaete, "which would face swap these optimized models that would come out with this type of square that you sometimes see in our breakdowns, and a mask. That would hand over to a real-time compositing step, which is an optimized version of our proprietary color transfer and detail transfer tools that we run in Nuke for our offline models, but optimized, again, to run superfast on a GPU, and then hooking that all together.
We'd send back a feed with the young versions."

[Image: A monitor displaying Metaphysic's identity detection, which made sure that each actor's younger real-time likeness was swapped onto them, and only them, during filming.]

"We had one monitor on set that was the live feed from the camera and another monitor that was about six frames delayed that was the de-aged actors," outlines Baillie. "When we did playback, we'd just shift the audio by six frames to give us perfectly synchronized lipsync with the young actors. It was really, really remarkable to see that used as a tool. Rita, Tom's wife, walked on the set and was like, 'Oh, my gosh, that's the age he was when we first met.' It was lightweight. It was reliable. It's the most unobtrusive visual effects technology I've ever seen used on set, and it had such an emotional impact at the same time."

Plaete adds that he was surprised to see Zemeckis constantly referring to the monitor displaying the live face swap, rather than the raw feed. "It was the highest praise to see a filmmaker of that level use that tool constantly on the set. The actors themselves, as well, would come back after every take to analyze if the facial performance with the young face would work on the body."

The art of editing a machine learned performance

One challenge in using machine learning tools for any visual effects work has been, thus far, a limited ability to alter the final result. Some ML systems are black boxes. However, in the case of Metaphysic's tools, the studio has purposefully set up a workflow that can be somewhat editable.

[Image: Tests of various AI models for capturing Wright's younger likeness. Note the difference in mood between the outputs, which needed to be curated and guided by artists at Metaphysic.]

"In addition to compositors," advises Baillie, "they even have animators on their team.
But instead of working in Maya, they're working in Nuke with controls that allow them to help to make sure that the output from these models is as close to the intent of the performance as possible."

"I call them neural animators," points out Plaete. "They're probably the first of their kind. They edit in Nuke, and it's all in real-time. They see a photoreal output, and as they move things around, it updates in real-time. They love it because they don't have the long feedback loop that they're used to to see the final pixels. The sooner you're in this photoreal world, the sooner you're outside of the Uncanny Valley and the more you can iterate on perfecting it and addressing the things that really matter. I think that's where the toolset is just next level."

Sometimes the trained models will make mistakes, such as mistaking a slightly saggier upper eyelid for some other intention. "Our eyelids as we age tend to droop a little bit, and these models will misinterpret that as a squint," observes Baillie. "Or in lip motion, sometimes there might be an action that happens between frames, especially during fast movements when you say 'P', and here the model will actually do slightly the wrong thing.

"It's not wrong in that it's interpreting the image the best that it can," continues Baillie, "but it's not matching the intent of their performance. What these tools allow Metaphysic to do is go into latent space, like statistical space, and nudge the AI to do something slightly different. It allows them to go back in and fix that squint to be the right thing. With these animation tools, it feels just like the last 2% of tweaking that you would do on a CG face replacement, but you're getting to 98% 10 times as fast."

"You can compare it with blend shapes where you have sliders that move topology," says Plaete. "These sliders, they nudge the neural network to nudge the output as a layer on top of the performance that is already coming through from the input. You can nudge an eyeline, for example.
Bob likes his characters to flirt with the camera but obviously not look down the barrel. These networks tend to magnetically do that. Eyeline notes would be something that we get when we present the first version to Kevin and Bob, and they'd say, 'Okay, maybe let's adjust that a little bit.'"

Dealing with edge cases

Another challenge with de-ageing and machine learning in the past has been when the actor is not fully visible to camera, or turns their head, or where there is significant motion blur in the frame. All these things had to be dealt with by Metaphysic.

[Image: Paul Bettany, de-aged.]

"We knew that that was going to be an issue," states Baillie, "so we lensed the movie knowing that we were going to have a 10% pad around the edges of the film. That meant the AI would have a chance to lock onto features of an actor if they're coming in from off-screen, so that we weren't going to have issues from exiting camera or coming onto camera."

A kiss between two de-aged characters proved tricky, in that regard. The solution here was to paint out the foreground actor's face, do the face swap onto the roughly reconstructed actor, and then place the foreground person back on top. Or, when an actor turned away from camera to, say, a three-quarter view, this meant that there would be fewer or no features to lock onto. "What the team had to do in that scenario was track a rough 3D head of the actor onto the actor and project the last good frame of the face swap onto it and do a transition and let that 3D head carry around the face in an area where the AI itself wouldn't have been able to succeed," outlines Baillie. "All these limitations of the AI tools, they need traditional visual effects teams who know how to do this stuff to backstop them and help them succeed."

To tackle some of these edge cases, Metaphysic built a suite of tools they call "dataset augmentation".
"You find holes in your dataset and you fill them in by a set of other machine learning based approaches that synthesize parts of the dataset that might be missing or that are missing," discusses Plaete. "We also trained identity-specific enhancement models. That's another set of neural networks that we can run in Nuke and the compositors have access to that. That's basically specific networks that can operate on frames that are coming out impaired or soft and restore those in place for compositors to have extra elements that are still identity-specific."

All of Metaphysic's tools are exposed in Nuke, giving their compositors ways of massaging the performance. "They can run the face swap straight in Nuke via a machine learning server connection, and they can run these enhancement models," explains Plaete. "They have these meshes that get generated where they can do 2.5D tricks or sometimes they might fall back onto plate for a frame where it's possible. There's some amazing artistry on the compositing side."

Ageing upwards

Most of Metaphysic's work on the film related to de-ageing, but some ageing of Robin Wright's character with machine learning techniques did occur (from a practical effects point of view, makeup and hair designer Jenny Shircore crafted a number of makeup effects and prosthetics approaches for aged and de-aged characters).

[Image: Here, Wright appears in old-age makeup, which is then compared with synthesized images of her at her older age, which were used to improve the makeup using similar methods to the de-ageing done in the rest of the film.]

For the machine learning approach, a couple of older actors of the right target age, who had a similar facial structure to Wright, were cast. Metaphysic then shot an extensive dataset of them to provide skin textures and movement at the target age. "We would mix that in with the oldest layer of data of Robin," states Plaete, "which we would also synthetically age up by means of some other networks.
We'd mold our Robin dataset to make it look older, but to keep the realism, we'd then fuse in some people actually at that age." Ultimately, this was run on top of a set of prosthetics that had already been applied by the makeup department.

Plaete stresses that collaboration with hair and makeup on the film was incredibly tight, and important. "You want the makeup that they apply to be communicating a time or a look that would settle that scene in a certain place within the movie's really extensive timeline. We had to be careful with our face swap technology that is trained on a certain look from the data, from the archival, from the movies, that we wouldn't just wash away all the amazing work from the makeup department. We worked really closely together to introduce these looks into our datasets and make sure that that stylization came out as well."

Read the full issue on Here, which also goes in-depth on the virtual production side of the film.

All images © 2024 CTMG, Inc. All Rights Reserved.

The post Here: A test with Tom Hanks appeared first on befores & afters.
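
As a rough worked example of the on-set timing described in the article: Baillie mentions a de-aged monitor feed delayed by about six frames, with playback audio shifted by the same six frames to keep lipsync. The sketch below converts that frame delay into milliseconds and into audio samples. The frame rates (24 fps and 24000/1001 fps) and the 48 kHz audio sample rate are assumptions for illustration only, since the article states neither, and the function names are hypothetical rather than anything from Metaphysic's toolset.

```python
from fractions import Fraction

DELAY_FRAMES = 6               # from the article: "about six frames delayed"
ASSUMED_FPS = Fraction(24)     # assumption: the article does not give a frame rate
ASSUMED_SAMPLE_RATE = 48_000   # assumption: a common production audio rate


def delay_ms(frames: int, fps: Fraction) -> float:
    """Duration of `frames` video frames in milliseconds at `fps`."""
    return float(Fraction(frames) / fps * 1000)


def delay_samples(frames: int, fps: Fraction, sample_rate: int) -> int:
    """Whole audio samples to shift so the audio lines up with the delayed video."""
    return int(Fraction(frames) / fps * sample_rate)


if __name__ == "__main__":
    for fps in (ASSUMED_FPS, Fraction(24000, 1001)):
        print(
            f"{float(fps):.3f} fps: "
            f"{delay_ms(DELAY_FRAMES, fps):.2f} ms, "
            f"{delay_samples(DELAY_FRAMES, fps, ASSUMED_SAMPLE_RATE)} samples"
        )
```

Under the assumed 24 fps, six frames works out to a quarter of a second. Using exact fractions avoids rounding drift for fractional rates such as 24000/1001.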

BEFORESANDAFTERS.COMHere: A test with Tom HanksThe de-ageing Pepsi challenge. An excerpt from issue #24 of befores & afters magazine in print.Ultimately, there would be 53-character minutes of full-face replacement in Here between the four key actors: Tom Hanks, Robin Wright, Paul Bettany and Kelly Reilly. This was a significant amount of screen time. Shots were also often longup to four minutesand the de-aged periods went close to up to 40 years in time. It was obvious that there was no way we could use traditional CGI methods to do this, suggests visual effects supervisor Kevin Baillie. It was not going to be economical and it was not going to be fast enough, and it also risked us falling into the Uncanny Valley. There was just no way we could keep that level of quality up to be believable and relatable throughout the entire movie using CG methods.We also didnt want to bring tons of witness cameras and head-mounted cameras and all these other kinds of technologies onto set, adds Baillie. That sent us down the road of AI machine learning-based approaches, which were just becoming viable to use in feature film production.Test footage of a de-aged Tom Hanks by Metaphysic, which aided in green-lighting the film.With that in mind, the production devised a test in November 2022 featuring Hanks. The actor (who is now in his late 60s) was filmed performing a planned scene from the film in a mocked-up version of the set at Panavision in Thousand Oaks. We had a handful of companies, and we did a paid de-ageing test across all these companies, outlines Baillie. One test was to turn him into a 25-year-old Tom Hanks just to see if the tech could even work. At the same time, we also hired a couple of doubles to come in and redo the performance that hed done to see if we could use doubles to avoid having to de-age arms and necks and ears.Metaphysic won, as Baillie describes it, the Pepsi Challenge on that test. 
When we saw the results we said, Oh my gosh, that looks like Tom Hanks from Big. What also became clear in that test was that the concept of using doubles to save us some work on arms and hands and neck and ears and things was never going to work. Even though they were acting to Tom Hanks voice and Tom was there helping to give them direction, it just was clear that it wasnt Tom. It wasnt the soul of the actor that was there. I actually think this will help to make people a little more comfortable with some of the AI tools that are coming out. They just dont work without the soul of the performer behind them. Thats why it was key for us to have Tom and Robin and Paul Bettany and Kelly Reillytheyre driving every single one of the character performance moments that you see on screen.Metaphysics approach to de-ageingThe key to de-ageing characters played by the likes of Tom Hanks and Robin Wright with machine learning techniquesMetaphysic relies on their bespoke process known as its Neural Performance Toolsetwas data. Ostensibly this came from prior performances on film, interviews, family photographs, photos from premieres and press images. Its based upon photographic reference that goes into these neural models that we train, outlines Metaphysic visual effects supervisor Jo Plaete. In the case of Tom Hanks, for example, we get a body of movies of him where he appears in those age ranges, and ingest it into our system. We have a toolset that extracts Tom from these movies and then preps and tailors all that, those facial expressions, and all these lighting conditions, and all these poses, into what we call a dataset, which then gets handed over to our visual data scientists.Hanks de-aged as visualized live on set.The raw camera feed.Final shot.I make the analogy, continues Plaete, that where you used to build the asset through modeling and all these steps, in our machine learning world, the asset built is a visual data science process. 
Its important to note that, ultimately, the artistic outcome requires an artist to sit down and do that process. Its just a different set of tools. Its more about curation of data, how long do you expose it to this neural network, what kind of parameters and at what stage do you dial in? Its like a physics simulation.Metaphysics workflow involved honing the neural network to deliver the de-aged characters at various ages. There is an element of training branches of that network where you start to hone in onto subsections of that dataset to get, say, Tom Hanks at 18, at 30, at 45, says Plaete. Eventually, also, we had some networks that aged Robin into her 80s, which was a slightly different approach even though its the same type of network. At the same time, we have our machine learning engineers come in and tweak the architectures of the neural networks themselves.Such tweaking is necessary owing to, as Plaete calls it, identity leak. You get Toms brother or cousin coming out, instead. Everybody knows Tom extremely well, so you want to hit that likeness 100%. So we have that tight collaboration from the machine learning engineers with the visual data scientists and artists to bring them together. They tweak the architecture, they tweak the dataset and the training loops. Together, we review the outputs, and ultimately, at the end of the day, we are striving for the best looking network. But rather than hitting render on a 3D model, we hit inference on a neural net, and thats what comes out and goes into compositing.On set, Metaphysic carried out a few short data shoots with the actors it would be de-ageing (and up-ageing) in the lighting of the scene. That just involved capturing the faces of the actors and perhaps having a little bit of extra poses and expression range to help our networks learn how a face behaves and presents itself within that lighting scenario, explains Plaete. 
Ultimately, we have a very low footprint.De-aged, liveThe de-ageing effects were not carried out in isolation. Bob was very excited to involve the actors in reviewing the de-aged faces, recounts Baillie. Id show them, Okay, heres you at 25, what do you think? They had a hand in sculpting what their likeness was like. I remember in particular Robin when I first showed footage of her de-aged to 25and the plate for this was I just sat with her and had a conversation for a couple of minutes and we filmed it on a RED and then went away and a month later I showed herthat it was really emotional for her. She said, Ive been thinking about how to bring the innocence of my youth back into my eyes, back into my expression and suffering over that. This helped me do it. Thats all that the AI knows, is that innocence of her youth. For her, I think there was just this moment of realization that this can help her get back there.Part of Metaphysics workflow is feature recognition, which detects the actors physiology in basic outlines.Final shot.Indeed, this helped drive an effort on set for a preview of the de-ageing to be available to the cast and crew. That helped us to make sure that the body performance of the actor matched the intended age of the character at that time, says Baillie. Its very hard to judge that if youre not seeing it. Every time Bob would call cut, Tom would run back around behind the monitors and watch himself and be like, Oh, I need to straighten up a little bit more. Oh, I was shuffling a little bit, or maybe I was overacting my youth in that one. It became a tool for the actors that they were able to use, and Bob was able to use it, and even our hair and makeup team and costume design team were all able to use it.Metaphysic already had an on-set preview system in the works before Here, but ramped it up on the production when Baillie asked the studio if it could be done. 
I think a week or two later, recalls Plaete, we hopped on a Zoom call and we had a test live face swap running into the Zoom to show him an example. Kevin said, Yeah, we should do it.The real-time system worked using only the main camera feed, without any special witness cameras or other gear, notes Baillie. It was just literally one SDI video feed running off of the camera, out a hole in the side of our stage into a little porta-cabin that theyd set up next to the set. Thats where all the loud, hot GPUs were sitting, and Metaphysic had a small team of four people that were in that cabin.The real-time budget was about 100 or 200 milliseconds, adds Plaete. We needed to take all our normal steps, optimize them, and hook them together as fast as we could. That was a bit of a hackathon, as you can imagine. But ultimately, it meant training models that were lower resolution. Still, the inference would run fast. I mean, the inference of these models is fast anyway. The high resolution model will still take a second to pop out the frame, which is crazy different from the 25 hours of ray tracing that we come from [in visual effects].The team had built a toolset that carried out a computer vision-like identity recognition pass so that the de-ageing could occur on the right actor. Those recognitions would hand over their results to the face swapper, details Plaete, which would face swap these optimized models that would come out with this type of square that you sometimes see in our breakdowns, and a mask. That would hand over to a real-time compositing step, which is an optimized version of our proprietary color transfer and detail transfer tools that we run in Nuke for our offline models, but optimized, again, to run superfast on a GPU, and then hooking that all together. 
Wed send back a feed with the young versions.A monitor displaying Metaphysics identity detection that went into making sure that each actors younger real-time likeness was swapped onto them, and only them, during filming.We had one monitor on set that was the live feed from the camera and another monitor that was about six-frames delayed that was the de-aged actors, outlines Baillie. When we did playback, wed just shift the audio by six frames to give us perfectly synchronized lipsync with the young actors. It was really, really remarkable to see that used as a tool. Rita, Toms wife, walked on the set and was like, Oh, my gosh, thats the age he was when we first met. It was lightweight. It was reliable. Its the most unobtrusive visual effects technology Ive ever seen used on set, and it had such an emotional impact at the same time.Plaete adds that he was surprised to see Zemeckis constantly referring to the monitor displaying the live face swap, rather than the raw feed. It was the highest praise to see if a filmmaker that level used that tool constantly on the set. The actors themselves, as well, would come back after every take to analyze if the facial performance with the young face would work on the body.The art of editing a machine learned performanceOne challenge in using machine learning tools for any visual effects work has been, thus far, a limited ability to alter the final result. Some ML systems are black boxes. However, in the case of Metaphysics tools, the studio has purposefully set up a workflow that can be somewhat editable.Tests of various AI models for capturing Wrights younger likeness. Note the difference in mood between the outputs, which needed to be curated and guided by artists at Metaphysic.In addition to compositors, advises Baillie, they even have animators on their team. 
But instead of working in Maya, theyre working in Nuke with controls that allow them to help to make sure that the output from these models is as close to the intent of the performance as possible.I call them neural animators, points out Plaete. Theyre probably the first of their kind. They edit in Nuke, and its all in real-time. They see a photoreal output, and as they move things around, it updates in real-time. They love it because they dont have the long feedback loop that theyre used to to see the final pixels. The sooner youre in this photoreal world, the sooner youre outside of the Uncanny Valley and the more you can iterate on perfecting it and addressing the things that really matter. I think thats where the toolset is just next level.Sometimes the trained models will make mistakes, such as recognizing a slightly saggier upper eyelid for some other intention. Our eyelids as we age tend to droop a little bit, and these models will misinterpret that as a squint, observes Baillie. Or in lip motion, sometimes there might be an action that happens between frames, especially during fast movements when you say P, and here the model will actually do slightly the wrong thing.Its not wrong in that its interpreting the image the best that it can, continues Baillie, but its not matching the intent of their performance. What these tools allow Metaphysic to do is go into latent space, like statistical space, and nudge the AI to do something slightly different. It allows them to go back in and fix that squint to be the right thing. With these animation tools, it feels just like the last 2% of tweaking that you would do on a CG face replacement, but youre getting to 98% 10 times as fast.You can compare it with blend shapes where you have sliders that move topology, says Plaete. These sliders, they nudge the neural network to nudge the output as a layer on top of the performance that is already coming through from the input. You can nudge an eyeline, for example. 
Bob likes his characters to flirt with the camera but obviously not look down the barrel. These networks tend to magnetically do that. Eyeline notes would be something that we get when we present the first version to Kevin and Bob, and they'd say, 'Okay, maybe let's adjust that a little bit.'"

Dealing with edge cases

Another challenge with de-ageing and machine learning in the past has been when the actor is not fully visible to camera, or turns their head, or where there is significant motion blur in the frame. All these things had to be dealt with by Metaphysic.

Paul Bettany, de-aged.

"We knew that that was going to be an issue," states Baillie, "so we lensed the movie knowing that we were going to have a 10% pad around the edges of the film. That meant the AI would have a chance to lock onto features of an actor if they're coming in from off-screen, so that we weren't going to have issues from exiting camera or coming onto camera."

A kiss between two de-aged characters proved tricky in that regard. The solution here was to paint out the foreground actor's face, do the face swap onto the roughly reconstructed actor, and then place the foreground person back on top. Or, when an actor turned away from camera to, say, a three-quarter view, there would be fewer or no features to lock onto. "What the team had to do in that scenario was track a rough 3D head of the actor onto the actor and project the last good frame of the face swap onto it and do a transition and let that 3D head carry around the face in an area where the AI itself wouldn't have been able to succeed," outlines Baillie. "All these limitations of the AI tools, they need traditional visual effects teams who know how to do this stuff to backstop them and help them succeed."

To tackle some of these edge cases, Metaphysic built a suite of tools they call "dataset augmentation."
"You find holes in your dataset and you fill them in by a set of other machine learning based approaches that synthesize parts of the dataset that might be missing or that are missing," discusses Plaete. "We also trained identity-specific enhancement models. That's another set of neural networks that we can run in Nuke and the compositors have access to that. That's basically specific networks that can operate on frames that are coming out impaired or soft and restore those in place for compositors to have extra elements that are still identity-specific."

All of Metaphysic's tools are exposed in Nuke, giving their compositors ways of massaging the performance. "They can run the face swap straight in Nuke via a machine learning server connection, and they can run these enhancement models," explains Plaete. "They have these meshes that get generated where they can do 2.5D tricks or sometimes they might fall back onto plate for a frame where it's possible. There's some amazing artistry on the compositing side."

Ageing upwards

Most of Metaphysic's work on the film related to de-ageing, but some ageing of Robin Wright's character with machine learning techniques did occur (from a practical effects point of view, makeup and hair designer Jenny Shircore crafted a number of makeup effects and prosthetics approaches for aged and de-aged characters).

Here, Wright appears in old-age makeup, which is then compared with synthesized images of her at her older age, which were used to improve the makeup using similar methods to the de-aging done in the rest of the film.

For the machine learning approach, a couple of older actors that were the right target age were cast who had a similar facial structure to Wright. Metaphysic then shot an extensive dataset of them to provide for skin textures and movement of the target age. "We would mix that in with the oldest layer of data of Robin," states Plaete, "which we would also synthetically age up by means of some other networks.
We'd mold our Robin dataset to make it look older, but to keep the realism, we'd then fuse in some people actually at that age. Ultimately, this was run on top of a set of prosthetics that had already been applied by the makeup department."

Plaete stresses that collaboration with hair and makeup on the film was incredibly tight, and important. "You want the makeup that they apply to be communicating a time or a look that would settle that scene in a certain place within the movie's really extensive timeline. We had to be careful with our face swap technology that is trained on a certain look from the data, from the archival, from the movies, that we wouldn't just wash away all the amazing work from the makeup department. We worked really closely together to introduce these looks into our datasets and make sure that that stylization came out as well."

Read the full issue on Here, which also goes in-depth on the virtual production side of the film.

All images © 2024 CTMG, Inc. All Rights Reserved.

The post Here: A test with Tom Hanks appeared first on befores & afters.
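As a rough illustration of the on-set playback trick Baillie describes in the article above (delaying the audio by six frames so it lines up with the delayed de-aged video feed), the arithmetic can be sketched as follows. This is only a sketch under stated assumptions: the 24 fps frame rate and 48 kHz sample rate are illustrative guesses, not figures from the article, and the function names `frames_to_samples` and `delay_audio` are hypothetical, not part of any production tool.

```python
from typing import List, Sequence


def frames_to_samples(frames: int, fps: float, sample_rate: int) -> int:
    """Convert a delay expressed in video frames into audio samples."""
    return round(frames * sample_rate / fps)


def delay_audio(samples: Sequence[float], delay: int) -> List[float]:
    """Delay an audio track by `delay` samples.

    Silence is prepended and the tail is trimmed so the track keeps
    its original length, which lines it up with video that arrives late.
    """
    if delay <= 0:
        return list(samples)
    padded = [0.0] * delay + list(samples)
    return padded[: len(samples)]


# Assumed values for illustration only: 24 fps picture, 48 kHz audio.
SIX_FRAME_DELAY = frames_to_samples(frames=6, fps=24, sample_rate=48_000)
```

Under these assumed rates, six frames correspond to a quarter of a second of audio; a different frame rate or sample rate changes the sample count but not the approach.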

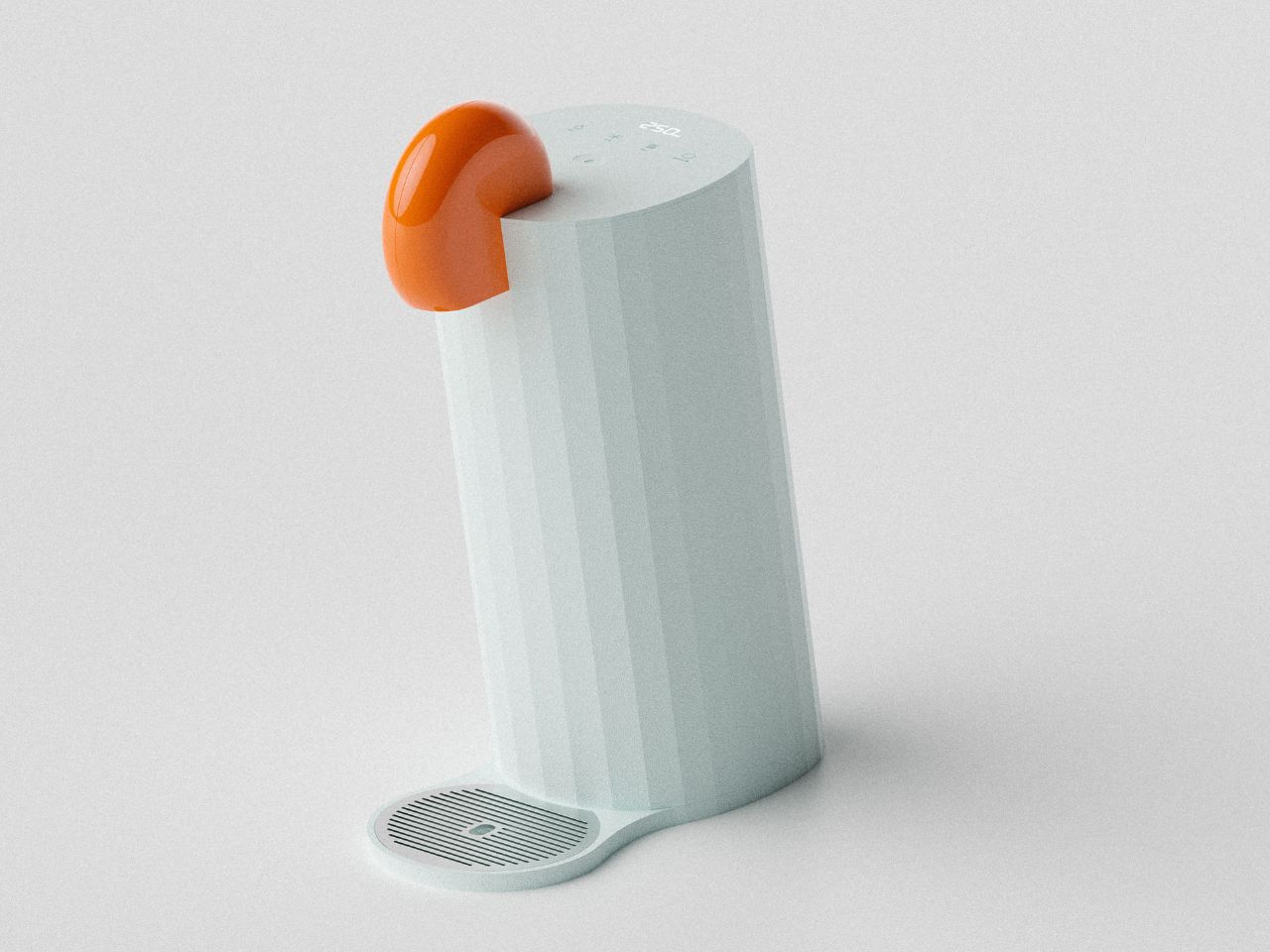

WWW.YANKODESIGN.COM
This Water Purifier's Avant-Garde Design Evokes The Feeling Of A Sunset In Your Kitchen

In modern living, the kitchen has transcended its original role as a space dedicated to cooking and chores. With the rise of island tables and multifunctional designs, it has evolved into a hub of relaxation, connection, and creativity. Whether reading a book, engaging in conversation, or simply unwinding, the kitchen serves as a microcosm of our lives. Yet, amidst its familiarity, achieving true relaxation can often prove elusive. This is where the Oasium water purifier steps in: a product designed to transform the kitchen into an oasis of tranquility and sophistication.

Designer: Taeyeon Kim

In pursuit of relaxation, many of us seek refuge in travel. Stepping away from the mundane, we immerse ourselves in new surroundings and encounters, discovering rest through unique experiences. Drawing inspiration from this concept, the Oasium water purifier brings the essence of a vacation into the heart of your kitchen. Its presence evokes the feeling of indulgence and leisure, akin to savoring a welcome drink at an exotic retreat. Every glass of water becomes a moment of self-care and serenity, elevating a daily necessity into an extraordinary experience.

The Oasium water purifier's design is a masterstroke of elegance and functionality, seamlessly blending aesthetics with purpose. Inspired by the artistry of cocktail-making, its exterior is slightly tilted, creating the impression of a glass of water being graciously offered. This subtle gesture is complemented by a rounded faucet, reminiscent of the decorative fruit that adorns a cocktail glass. Together, these elements create a visual language that exudes sophistication and warmth.

To amplify its refreshing appeal, the Oasium incorporates straight-line patterns into its design. These patterns lend a clean, minimalist aesthetic, enhancing the overall ambiance of the kitchen.
The result is a product that not only serves a practical function but also enriches the emotional and visual landscape of its surroundings.

The Oasium water purifier's user interface is designed with both convenience and style in mind:

Comprehensive Control Panel: Located on the top, the panel includes touch buttons for water dispensing, purification, cold water, hot water, and filter replacement. These controls are sleekly integrated to maintain the product's minimalist aesthetic.
LED Lighting: Hidden lighting displays the water dispensing amount, ensuring clarity and precision. When idle, the LED remains subdued, preserving the purifier's refined atmosphere.
Ergonomic Button Design: The water dispensing button features a subtle indentation, perfectly accommodating the shape of a finger for intuitive use.

The Oasium celebrates meticulous craftsmanship. Its faucet, inspired by the playful elegance of cocktail garnishes, maintains a streamlined form without any protrusions at the water outlet. Smooth, rounded edges ensure a cohesive and harmonious profile. Meanwhile, the straight-line patterns extend across the main body and water tray, with partition lines delicately emerging to maintain design continuity.

The Oasium water purifier's color scheme is a journey in itself, evoking the moods and memories of cherished travel destinations:

Sunset over the Sea: The main color captures the golden hues of a setting sun over an ocean horizon, infusing the product with warmth and vibrancy.
Gradient of the Sea: A secondary gradient color mirrors the gentle transition of sea tones at a resort, enhanced with soft pink undertones to evoke comfort and coziness.
Hotel Sophistication: A third option, inspired by the muted elegance of hotel interiors, features a subtle green shade with dark, low-saturation tones for a touch of understated luxury.

The Oasium water purifier redefines the act of drinking water, turning it into a ritual of relaxation and refinement.
By merging innovative design with a deep understanding of human experience, it transforms the kitchen into a sanctuary. With its sleek form, intuitive functionality, and evocative color palette, the Oasium offers something beyond hydration: it offers a moment of serenity in every glass.

The post This Water Purifier's Avant-Garde Design Evokes The Feeling Of A Sunset In Your Kitchen first appeared on Yanko Design.


EN.WIKIPEDIA.ORG
Wikipedia picture of the day for December 30

Bathymetry is the study of the underwater depth of sea and ocean floors, lake floors, and river floors. It has been carried out for more than 3,000 years, with the first recorded evidence of measurements of water depth occurring in ancient Egypt. Bathymetric measurements are conducted with various methods, including depth sounding, sonar and lidar techniques, buoys, and satellite altimetry. However, despite modern computer-based research, the depth of the seabed of Earth remains less well measured in many locations than the topography of Mars. Bathymetry has various uses, including the production of bathymetric charts to guide vessels and identify underwater hazards, the study of marine life near the bottom of bodies of water, coastline analysis, and ocean dynamics, including predicting currents and tides.

This video, created by the Scientific Visualization Studio at NASA's Goddard Space Flight Center, simulates the effect on a satellite world map of a gradual decrease in worldwide sea levels. As the sea level drops, more seabed is exposed in shades of brown, producing a bathymetric map of the world. Continental shelves appear mostly by a depth of 140 meters (460 ft), mid-ocean ridges by 3,000 meters (9,800 ft), and oceanic trenches at depths beyond 6,000 meters (20,000 ft). The video ends at a depth of 10,190 meters (33,430 ft) below sea level, the approximate depth of the Challenger Deep, the deepest known point of the seabed.

Video credit: NASA / Goddard Space Flight Center / Horace Mitchell, and James O'Donoghue

Recently featured: Cinnamon hummingbird, Arthur Sullivan, Reduced Gravity Walking Simulator


EN.WIKIPEDIA.ORG
On this day: December 30

December 30: Rizal Day in the Philippines (1896)

Pro-government rally in Tehran, Iran

999: In Ireland, the combined forces of Munster and Meath crushed a rebellion by Leinster and Dublin.
1940: The Arroyo Seco Parkway, one of the first freeways built in the U.S., connecting downtown Los Angeles with Pasadena, California, was officially dedicated.
1954: The Finnish National Bureau of Investigation was established to consolidate criminal investigation and intelligence into a single agency.
1969: Philippine president Ferdinand Marcos began his second term after being re-elected in a landslide.
2006: Saddam Hussein, the former president of Iraq, was executed after being found guilty of crimes against humanity by the Iraqi High Tribunal.
2009: Pro-government counter-demonstrators held rallies (pictured) in several Iranian cities in response to recent anti-government protests on the holy day of Ashura.

Bernard Gui (d. 1331), LeBron James (b. 1984), V (b. 1995), Erica Garner (d. 2017)


WWW.THEVERGE.COM
More of the DJI Flip folding drone appears in new leaked images

New images of the rumored DJI Flip folding drone hit late last week, showing the compact, light-colored drone both folded and unfolded, and even in a carrying case. The images appeared in posts by Igor Bogdanov, who has shared other credible DJI leaks in the past.

Bogdanov added in a post yesterday that DJI is preparing a new "Cellular Dongle 2" module for the compact drone. The new leaks join earlier images of ND filters for the Flip, its propeller set, and charging hub, which Bogdanov wrote can charge two batteries in a minimum of 45 minutes, and can use a 65W parallel charger.

Below are some of the other pictures Bogdanov posted, including of its front screen, which drone leaker Jasper Ellens notes "shows all the handsfree Quickshots we know from the Neo."

Leaked images of the DJI Flip. Image: Igor Bogdanov

Ellens posted a short video of the drone yesterday, writing that the Flip's registration numbers put it in DJI's FPV drone category, meaning that it could allow for first-person streaming during flight. In early December, he also leaked details like the drone's compact folding approach and that it should get about 30 minutes of flight thanks to a battery that's bigger than the one in DJI's Neo selfie drone.


WWW.MARKTECHPOST.COM
Advancing Parallel Programming with HPC-INSTRUCT: Optimizing Code LLMs for High-Performance Computing

LLMs have revolutionized software development by automating coding tasks and bridging the natural language and programming gap. While highly effective for general-purpose programming, they struggle with specialized domains like High-Performance Computing (HPC), particularly in generating parallel code. This limitation arises from the scarcity of high-quality parallel code data in pre-training datasets and the inherent complexity of parallel programming. Addressing these challenges is critical, as creating HPC-specific LLMs can significantly enhance developer productivity and accelerate scientific discoveries. To overcome these hurdles, researchers emphasize the need for curated datasets with better-quality parallel code and improved training methodologies that go beyond simply increasing data volume.

Efforts to adapt LLMs for HPC have included fine-tuning specialized models such as HPC-Coder and OMPGPT. While these models demonstrate promise, many rely on outdated architectures or narrow applications, limiting their effectiveness. Recent advancements like HPC-Coder-V2 leverage state-of-the-art techniques to improve performance, achieving comparable or superior results to larger models while maintaining efficiency. Studies highlight the importance of data quality over quantity and advocate for targeted approaches to enhance parallel code generation. Future research aims to develop robust HPC-specific LLMs that bridge the gap between serial and parallel programming capabilities by integrating insights from synthetic data generation and focusing on high-quality datasets.

Researchers from the University of Maryland conducted a detailed study to fine-tune a specialized HPC LLM for parallel code generation. They developed a synthetic dataset, HPC-INSTRUCT, containing high-quality instruction-answer pairs derived from parallel code samples.
Using this dataset, they fine-tuned HPC-Coder-V2, which emerged as the best open-source code LLM for parallel code generation, performing near GPT-4 levels. Their study explored how data representation, training parameters, and model size influence performance, addressing key questions about data quality, fine-tuning strategies, and scalability to guide future advancements in HPC-specific LLMs.

Enhancing Code LLMs for parallel programming involves creating HPC-INSTRUCT, a large synthetic dataset of 120k instruction-response pairs derived from open-source parallel code snippets and LLM outputs. This dataset includes programming, translation, optimization, and parallelization tasks across languages like C, Fortran, and CUDA. The researchers fine-tuned three pre-trained Code LLMs (1.3B, 6.7B, and 16B parameter models) on HPC-INSTRUCT and other datasets using the AxoNN framework. Through ablation studies, they examined the impact of data quality, model size, and prompt formatting on performance, optimizing the models for the ParEval benchmark to assess their ability to generate parallel code effectively.

To evaluate Code LLMs for parallel code generation, the ParEval benchmark was used, featuring 420 diverse problems across 12 categories and seven execution models like MPI, CUDA, and Kokkos. Performance was assessed using the pass@k metric, which measures the probability of generating at least one correct solution within k attempts. Ablation studies analyzed the impact of base models, instruction masking, data quality, and model size. Results revealed that fine-tuning base models yielded better performance than instruct variants, high-quality data improved outcomes, and larger models showed diminishing returns, with a notable gain from 1.3B to 6.7B parameters.

In conclusion, the study presents HPC-INSTRUCT, an HPC instruction dataset created using synthetic data from LLMs and open-source parallel code.
An in-depth analysis was conducted across data, model, and prompt configurations to identify factors influencing code LLM performance in generating parallel code. Key findings include the minimal impact of instruction masking, the advantage of fine-tuning base models over instruction-tuned variants, and diminishing returns from increased training data or model size. Using these insights, three state-of-the-art HPC-specific LLMs (the HPC-Coder-V2 models) were fine-tuned, achieving superior performance on the ParEval benchmark. These models are efficient, outperforming others in parallel code generation for high-performance computing.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Advancing Parallel Programming with HPC-INSTRUCT: Optimizing Code LLMs for High-Performance Computing appeared first on MarkTechPost.
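To make the pass@k metric mentioned in the article above more concrete, here is a minimal sketch of the commonly used unbiased estimator: generate n samples per problem, count the c that pass, and compute 1 - C(n-c, k) / C(n, k). The article does not say exactly how ParEval computes the metric, so treat this as an assumption about the usual practice; the function names `pass_at_k` and `benchmark_pass_at_k` are hypothetical.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples is correct.

    n: total samples generated for a problem
    c: number of those samples that passed the tests
    k: number of attempts allowed (k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples failed, every size-k subset contains a pass.
    if n - c < k:
        return 1.0
    # 1 minus the chance that all k drawn samples come from the failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)
```

For example, a problem with 20 generated samples of which 1 is correct gives pass@1 = 0.05 but pass@10 = 0.5, which is why reported scores depend heavily on the chosen k.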


WWW.CNET.COM
Casper One Foam Mattress Review 2024: Testing Casper's New Flagship Mattress

Score: 7.5/10

Our Verdict
Best for: back sleepers, stomach sleepers

Score Breakdown
Performance: 8/10
Policies: 7/10
Durability: 7/10
Features: 7/10

Pros and Cons
Pros:
Firm profile for back and stomach sleepers
Accommodating overall feel
Excellent motion isolation
Cons:
Not as accommodating as the old model
Not a good option for side sleepers

Casper has gained popularity as a bed-in-a-box mattress brand. After offering a reliable lineup of quality mattresses for years, it's now shaking things up with five new beds. One of these mattresses is the Casper One, and it replaces the Casper Original and The Casper mattress. Is this new entry-level foam mattress the right one for you? We tried it out, and here are our thoughts on it.

Read more: Best Mattress for 2024

First impressions of the Casper One Foam mattress

Dillon Lopez/CNET

I was excited to unbox all of the new Casper beds, because we've always regarded these popular beds highly. We received all of the new beds in our office on the same day, and it was a very cold day outside when the boxes arrived. Sometimes, we'll wait a day or two before unboxing a new bed, but we unboxed these mattresses right off the truck.

I mention the weather because the temperature can have an impact on the rigidity of foams, and when we removed the Casper One from the plastic, it was one of the most misshapen-looking beds I have ever seen. I had a good laugh about it because it looked funny, and we knew it was not due to some defect in the mattress. Beds often look misshapen right out of the box, but this one was pretty extreme.

It took about a full day for the bed to fully inflate and get into its normal shape. I was immediately surprised when trying the Casper One for the first time because it was much firmer than I'd expected.
Previous models of Casper foam beds were usually around a medium on our firmness scale, and it was clear that Casper was going in an entirely different direction with the Casper One.

One Foam construction and feel

The Casper One Foam mattress has a classic three-layer all-foam design, which is what we saw on the old Casper models and on beds from many other brands, including Nectar, Bear, Leesa and Nolah.

The bottommost layer is a 7-inch layer of Casper Core support foam, which is the main support layer of the Casper One. This is the only mattress out of the five new beds from Casper that features a foam support layer instead of pocketed coils. I generally prefer coil beds for the added bouncy feel, and coils are usually better for support and durability throughout the life of the mattress, but there are plenty of sleepers who prefer foam beds because foam has better motion isolation, is easier to move and is usually more affordable.

Above the support foam is a 2-inch layer of Align memory foam, and then right above that is another 2-inch layer of Breathe Flex foam. The memory foam layer is classically dense, as you'd expect from memory foam, and the top layer is a more neutral, responsive foam.

This combination of foams adds up to give the Casper One Foam a somewhat unique feel. The memory foam layer gives the bed a dense feel that is balanced out by the more responsive layer above it. I think the bed has a dense feel similar to memory foam, but without the sink-in quality.

Overall, I'd describe it as having a firm, dense foam feel, which isn't something I've observed very often.
It's quite a departure from the old Casper Original, which had a soft, neutral feel that I would describe as more accommodating to more sleepers in comparison to the Casper One.

Casper One Foam firmness and sleeper types

The Casper One Foam is significantly more firm than the previous Casper model. The old Casper Original was rated at a medium, but the Casper One is a medium-firm to firm on our scale. It's closer to a medium-firm than a true firm, but it's definitely somewhere in between.

Casper describes the Casper One as a firm option that is ideal for back and stomach sleepers, and I absolutely agree. If you're a primary side sleeper, I would look elsewhere for your next mattress. But for back and stomach sleepers who want less give in their mattress, the Casper One has an appropriate firmness profile.

For softer options from Casper, you'll want to check out the Dream Hybrid and Dream Max Hybrid.

Casper One Foam mattress performance

Edge support

The edge support of a mattress refers to how well the perimeter of the bed holds up under pressure. If the edge compresses too much, you can feel like you are going to roll off the mattress, which usually leads to poor sleep.

Even though there's no design element meant to specifically enhance the edge support on the Casper One, we observed pretty solid edge support on this bed. I didn't feel that roll-off sensation when putting all of my weight on the perimeter of the mattress, so if you are planning on sharing this mattress with someone, I don't think you'll have any issues with edge support.

Motion isolation

If you find yourself waking up in the middle of the night because your partner tosses and turns a lot, having a mattress that does a good job of isolating cross-mattress motion is important.

The motion isolation on the Casper One is excellent, comparable to pure memory foam options like Nectar and Tempur-Pedic.
Memory foam beds usually perform best in this category and the Casper One uses a layer of memory foam along with its foam support base, so I expected it to be good, but maybe not quite this good.

If motion isolation is something that's been an issue in the past, then the Casper One should be an improvement.

Temperature regulation

The top layer of foam on the Casper One is noticeably different from the old Casper models. It was previously called Airscape foam, and I observed it to be very breathable thanks to its perforation. The new Breathe Flex foam on the Casper One isn't perforated, but it does have an open-cell design and it's light and breathable.

The Casper One shouldn't heat up on you at night, and it should remain temperature neutral. If you sleep hot on this bed, it probably has more to do with your sleeping environment than the bed itself.

If you want a bed with active cooling elements, Casper's new Snow and Snow Max mattresses are noticeably cool to the touch. We also have a best cooling beds list you can check out.

Casper One Foam mattress pricing

Size      Measurements (inches)  Price
Twin      38x74                  $749
Twin XL   38x80                  $749
Full      54x75                  $899
Queen     60x80                  $999
King      76x80                  $1,399
Cal king  72x84                  $1,399

The Casper One mattress is the most affordable of Casper's new mattress lineup, and when compared to the old Casper Original, the price is roughly the same. For a queen-size, the price is also fairly competitive with other three-layer foam mattresses like the Leesa Original and the Nolah Original.

With these Casper beds being so new, I wouldn't be surprised to see some price fluctuations, but the One should be the most affordable bed from Casper going forward.

Be sure to check out our mattress deals page for any discounts we can find to save money on the Casper One.

Casper mattress policies

Free shipping

The Casper One mattress ships in a box right to your door at no extra cost in the contiguous United States.
Unboxing a mattress is a quick and easy process: We've done it literally hundreds of times at CNET, and it's actually fun.

This bed is pretty light, so you might be able to unbox it solo, but I'd still recommend getting someone to help you, especially for larger sizes.

100-night trial

Casper offers the standard in-home sleep trial of 100 nights, so you get roughly three months to test out the bed and see if it's supportive enough and comfortable enough for you.

Keep in mind that it can take a month or longer for your body to adjust to a new mattress, no matter how well-suited it is to you. So, if you struggle to get a good night's sleep in the first week or two, don't panic.

Return policy

Although more brands are introducing return fees, Casper still offers completely free returns on all of its mattresses if you choose to return one within the provided trial period.

Returning a mattress is usually pretty easy: you contact the company, and usually a group will come by your house to pick up the bed, which will often get donated or sometimes resold by a third party.

Warranty

All Casper mattresses are backed by a standard 10-year warranty. If you buy a mattress online, a 10-year warranty is the minimum you should expect.

Final verdict

Overall, I was surprised by the Casper One mattress. When a brand redesigns its mattresses, the changes are usually superficial, and the firmness and feel remain the same, but the Casper One is a big departure from the old Casper Original. It's significantly firmer and also has a different feel.

In the past, I'd recommend the Casper Original to pretty much everyone because it had such an accommodating firmness and feel. Most people enjoyed it or could at least get by on it comfortably.
The new Casper One is a more specialized mattress that's designed for back and stomach sleepers, and it has a less universally enjoyable feel.

I think anyone looking for a firm foam mattress at an affordable price should seriously consider the Casper One, especially because other foam mattresses with this firmness profile often have a true memory foam feel, and the Casper One has a more responsive feel.

You might like the Casper One Foam mattress if:
You are a back or stomach sleeper
You are looking for an affordable mattress
You want a responsive feel
You want a mattress with foam support layers

You might not like the Casper One Foam mattress if:
You are a side sleeper
You are seeking a hybrid mattress
You are shopping for a more luxurious mattress
You want a classic memory foam feel

Other mattresses from Casper

Casper Dream Hybrid: This is the most affordable hybrid mattress in the new Casper lineup, and it is essentially a direct replacement for the old Casper Original Hybrid. This bed is a medium on the firmness scale and should be accommodating for all sleeper types. It also has more of a soft, neutral hybrid feel in comparison to the dense, firm foam feel on the Casper One. The Casper Dream Hybrid also has a zoned-support design, which makes the bed slightly firmer in the center third to promote better spinal alignment and enhanced edge support.

Casper Snow Hybrid: This mattress is the most interesting new mattress from Casper because it is Casper's first mattress that has a traditional memory foam feel. The top two comfort layers are made of memory foam that's slow to respond. Like the Dream Hybrid, it has a zoned-support design, enhanced edge support and a more accommodating firmness profile.
This bed also has active cooling, and it should help you sleep a few degrees cooler at night.

How does the Casper One Foam mattress compare to similar mattresses?

Casper One Foam vs. Leesa Original

The Casper Original and the Leesa Original used to be so similar I had a hard time telling the two apart in a blind mattress test. With the updated Casper One, these beds still have a similar design, but the Leesa Original has more of a soft, neutral feel in comparison to the dense, firm feel on the Casper One, and the Leesa Original is a medium firmness, which makes it more accommodating for side sleepers. Both beds are also in the same ballpark on price.

Casper One Foam vs. Nectar

The Nectar mattress is a popular online mattress and shares some design elements with the Casper One. Both have three-layer all-foam designs (although Nectar does have a separate hybrid option for an additional cost), both are on the firm end of the spectrum and both use memory foam. Nectar has a classic, dense memory foam feel, while the Casper One has a much more responsive feel. Nectar is also a more affordable mattress.

Casper One Foam mattress FAQs

Is the mattress cover machine washable?
No. The cover on the Casper One does include a zipper, but Casper says never to remove the cover. If the cover gets dirty, it should be spot-cleaned.

Does Casper have free returns?
Yes. If you decide to initiate a return within the 100-night trial period, you can get a full refund and return the bed at no extra cost.

Do I need a foundation for the Casper One?
Casper beds are designed to work on a firm, solid surface. It's best to use a solid or slatted foundation, not a box spring.