WWW.NINTENDOLIFE.COM

Summer Game Fest Returns In June With A 2-Hour Showcase

Image: Summer Game Fest

It's been confirmed that Summer Game Fest will return this year with a 2-hour showcase taking place on 6th June at the YouTube Theatre in Los Angeles.

Following this, a 'Play Days' hands-on event will take place from 7th-9th June in which media and influencers can experience new and upcoming games from over 40 attending publishers.

A brand-new addition for 2025 is what's being called a 'thought leader event' curated by Christopher Dring (previously GamesIndustry.biz) and Geoff Keighley.

This will feature key leaders from the games industry and beyond who will come together to "delve into some of the key changes, challenges and opportunities facing the global video game industry, as well as celebrate the cultural impact and importance of video games as the most powerful form of entertainment in the world".

Public tickets for the showcase will be available for purchase in Spring, but those hoping for a strong presence from Nintendo may need to temper their expectations; the publisher isn't known for collaborating much with Summer Game Fest, and with the Switch 2 launching later this year, it will likely be controlling its own message with the upcoming April Direct.

For now then, you can sign up for updates over at summergamefest.com.

Are you bothered about following this year's Summer Game Fest event, or do you think Nintendo will just stick to its own guns as usual? Let us know with a comment down below.

Nintendo Life's resident horror fanatic, when he's not knee-deep in Resident Evil and Silent Hill lore, Ollie likes to dive into a good horror book while nursing a lovely cup of tea. He also enjoys long walks and listens to everything from TOOL to Chuck Berry.


WWW.NINTENDOLIFE.COM

Sorry Romantics, "Bonkers" Dating Sim 'Date Everything' Has Been Delayed To June

Image: Team17

We've been keeping an eye on Date Everything (the romance sim where you can, fittingly, date everything) since it was revealed last summer. A last-minute delay pushed the game to a Valentine's Day 2025 release, but developer Sassy Chap Games has since decided that it still needs a little more time in the oven. Put Cupid on hold, because Date Everything is now expected to launch in June.

In a statement from Sassy Chap Games' Lead Designer Ray Chase, the dev explained that while the game is complete, there's still a whole bunch of testing that needs to be done. Releasing the game in its current state, Chase explains, would be a "disservice", so the team has decided to push things back by a few months to make sure that everything is in tip-top shape.

You can find Chase's full statement below:

To our fellow Dateviators,

Since we last updated you on development, We have been extremely hard at work finishing work on Date Everything! And at this point I can confidently say that we have reached that point where the game is complete to a standard that we feel reached our goals with no compromise in our bonkers artistic vision.

However... I was too confident that we could properly test ALL the wild amount of content and pathing that exists in this massive game, and unfortunately we ran out of time on our current (and yet so appropriate) release date of February 14th, 2025.

Our bug list is finally starting to dwindle down as QA gets through the labyrinthine story pathing, but to submit our game in the state with so many outstanding glitches would be doing you a disservice. We have our final release date set for June 2025. And while it isn't quite as sexy a date as Valentine's Day, we hope we can bring new sexiness to June evermore. And yes, you actually can date the glitches. Their name is Daemon and I am currently in a Love/Hate relationship with them.

It's always a shame to hear of a project getting delayed (particularly when it happens twice), but in a game where there are so many romantic outcomes, we can only imagine that the list of potential glitches quickly piles up, and we'd always rather have a bug-free experience, if possible.

We'll be keeping an eye out for more information from Team17 and Sassy Chap Games over the coming months for a more secure release date, ready to mark it with a heart on the calendar, naturally.

What do you make of this delay? Are you still keen to play Date Everything later this year? Let us know in the comments.

[source x.com]

Jim came to Nintendo Life in 2022 and, despite his insistence that The Minish Cap is the best Zelda game and his unwavering love for the Star Wars prequels (yes, really), he has continued to write news and features on the site ever since.


TECHCRUNCH.COM

Microsoft signs massive carbon credit deal with reforestation startup Chestnut Carbon

Microsoft announced Thursday that it's buying over 7 million tons of carbon credits from Chestnut Carbon.

The 25-year deal would enable Chestnut Carbon to reforest 60,000 acres of land across Arkansas, Louisiana, and Texas, Axios reported. Recently, the tech company has struggled to rein in its carbon emissions as AI has driven a surge in data center construction and use.

Microsoft reported last year that its emissions rose 29% since 2020 as a result of the boom in AI and cloud computing, imperiling its 2030 goal to sequester more carbon than it produces. In 2023, the company reported generating 17.1 million tons of greenhouse gas emissions before offsets.

Carbon credits come in a range of flavors. Chestnut Carbon focuses on reforestation, in which the company facilitates tree planting and then monitors the new forests to ensure they grow as planned and aren't cut down. The company currently has eight projects in the Southeast U.S., which were previously worked as farms or pastures.

Trees naturally sequester carbon as they grow, though not all forest-related carbon credits are created equal. Credits from projects that plant non-native, fast-growing trees are generally seen as lower quality and sell for less since they don't tend to support as much biodiversity, and the trees don't tend to live as long. Projects that support diverse, native plantings typically sell at a premium since the ecosystems that result tend to be more resilient over time.

Even premium carbon credits from afforestation, reforestation, and avoided deforestation are a relative deal compared with some alternatives. Chestnut Carbon sold credits last year for $34 per ton, whereas direct air capture, which uses fans and chemical sorbents to draw CO2 out of the atmosphere, costs around $600 to $1,000 per ton today. Despite the cost differential, Microsoft has also bought carbon credits from direct air capture startups.

For all their strengths, nature-based carbon credits aren't always perfect. Verra, which has the largest nature-based carbon credit portfolio, was the subject of an extensive investigation in 2023 which reported that the organization overstated the climate benefit of its projects. The scandal led to the CEO's ouster and made the industry reassess the standards it uses. Chestnut Carbon, which previously used Verra to certify its carbon credits, today uses Gold Standard.


TECHCRUNCH.COM

Waymo employees can hail fully autonomous rides in Atlanta now

Waymo said it is launching fully driverless robotaxi rides for employees in Atlanta, an important step before the company opens the service up to members of the public later in 2025.

This is the latest signal of Waymo's push into new markets, and it comes two months after the company closed a $5.6 billion Series C round at a $45 billion valuation. The round was led by heavy hitters including Alphabet, Andreessen Horowitz, Fidelity, Tiger Global, and others. The company earlier this week announced plans to test in 10 new cities this year, starting with San Diego and Las Vegas.

When Waymo officially launches its commercial robotaxi service in Atlanta, it will be exclusively via the Uber app. Waymo and Uber also plan to launch together in Austin this year. The Alphabet-owned self-driving company opened up robotaxi rides to certain members of the public in Austin in October after first offering rides to employees seven months earlier.

Waymo's Atlanta milestone comes a day after Elon Musk said Tesla would launch a robotaxi service in Austin in June. Tesla has yet to bring a fully autonomous vehicle to public roads that doesn't require a human driver behind the wheel ready to take over. Waymo runs its own autonomous ride-hail service, Waymo One, in San Francisco, Phoenix, and Los Angeles.


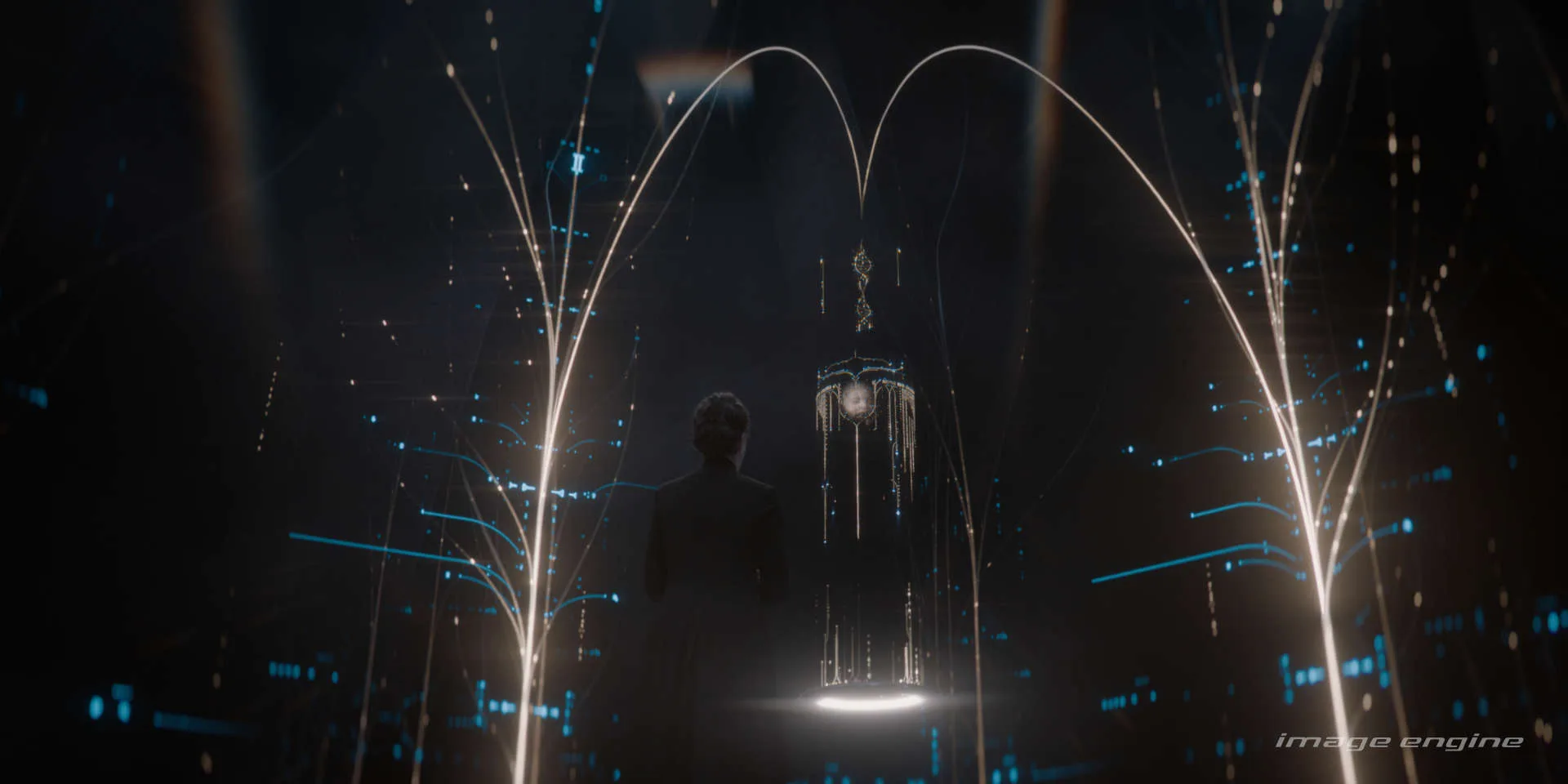

WWW.ARTOFVFX.COM

Dune Prophecy: Martyn Culpitt (VFX Supervisor) and the Image Engine team

By Vincent Frei - 30/01/2025

In 2018, Martyn Culpitt delved into Image Engine's visual effects work for Fantastic Beasts: The Crimes of Grindelwald. Since then, he has contributed to a variety of projects, including The Mandalorian, Fantastic Beasts: The Secrets of Dumbledore, Willow, and Kraven the Hunter.

Jeremy Mesana brings more than 25 years of visual effects experience to the table, having collaborated with leading studios like MPC, Digital Domain, and Image Engine. His credits include work on Logan, Mulan, Snowpiercer, and Halo.

With more than 10 years of experience in visual effects, Adrien Vallecilla built his career at studios like MPC and Digital Domain before joining Image Engine in 2022. His credits include Terminator: Dark Fate, She-Hulk: Attorney at Law, Ahsoka, and Halo.

With more than 15 years in the VFX industry, Xander Kennedy has an extensive background that includes time at Luma Pictures, MPC, and ReDefine. Since joining Image Engine in 2021, he has contributed to various shows such as The Book of Boba Fett, Obi-Wan Kenobi, Foundation, and Leave the World Behind.

Geoff Pedder brings 25 years of experience in the visual effects industry, having honed his craft at studios like MPC, Cinesite, ILM, and Image Engine. His portfolio includes work on Hawkeye, Fast X, Avatar: The Last Airbender, and Kraven the Hunter.

Clement Feuillet joined Image Engine in 2023, following his work with studios such as Mikros Animation, Scanline VFX, Framestore, and Animal Logic on various shows like Godzilla vs. Kong, 1899, Peter Pan & Wendy, and Leo.

EungHo Lo launched his VFX career at Weta FX in 2009 before joining Image Engine in 2015.
Over the years, he has contributed to various projects, including Prometheus, Game of Thrones, Hawkeye, and Snowpiercer.

How did it feel to enter the Dune universe?

Martyn Culpitt (VFX Supervisor): When I learned that my next project would be Dune: Prophecy, I was beyond excited to contribute to this incredible universe. The opportunity to bring our own creative ideas and visions to such an iconic series was truly an honor.

Having been in the industry since I was eighteen, I've had the privilege of working on only a handful of projects with worlds as unique and imaginative as this one.

Denis Villeneuve's first Dune film was breathtaking. I was utterly immersed, transported to its rich, otherworldly setting. The VFX work by DNEG was incredible and, for me, again pushed the idea of what's possible.

Working on Dune: Prophecy was creatively one of the coolest projects. We really tried to keep to the aesthetics of the first movie but also add our own touch to it.

How was the collaboration with the showrunner and with VFX Supervisor Michael Enriquez?

Martyn Culpitt (VFX Supervisor): We had a great collaboration with Mike and Alison Schapker, the showrunner. We were given a lot of creative freedom to really come up with exciting ideas that were true to the Dune world but also unique to Prophecy. Concepts played a huge part in this for us, as they were a quick way to explore ideas and have Mike and Alison give feedback. There were a lot of creative challenges on the show, and Mike was great at helping us understand what Alison's vision was. It was an awesome show and a pleasure working with both of them. Hopefully we get to do it again.

How did you organize the work with your VFX Producer?

Martyn Culpitt (VFX Supervisor): Viktoria Rucker, my VFX Producer, and I had an excellent partnership on this project. Vik is incredibly organized and played a key role in ensuring turnovers happened as quickly as possible, allowing us to dive into the complex work for the show.
She was fantastic with the client, maintaining a great rapport, and collaborated closely with Terron Pratt to ensure we had everything we needed.

The show presented significant challenges due to the sheer number of plates and creative complexities, but Vik and Terron were outstanding in maintaining constant communication and keeping us on track to hit our targets. While the complexity of the shots and ongoing updates to the cut pushed some deadlines, Vik excelled at reshuffling priorities to ensure everything was delivered on time. She worked closely with Kitty Widjaja, our Production Manager, to make it all happen.

Vik's organization and proactive approach were invaluable. I genuinely couldn't have done it without her and Kitty's support. Their ability to stay on top of tight deadlines made a huge difference and alleviated a lot of potential concerns.

What are the sequences made by Image Engine?

Martyn Culpitt (VFX Supervisor): The show presented us with a variety of unique and challenging sequences, each creatively exciting due to their distinct nature.

One of the key sequences involved the sandworm shots, where the worm engulfs the Sisterhood complex in a fully CG-rendered Arrakis environment. These shots also included haunting nightmare scenes set in the Sisterhood's realm, featuring falling sand, thumpers in the Arrakis desert, and many other unique shots.

Another standout was the ice lake sequence, where we crafted a dynamic snowstorm that reflected Valya's emotional state, blending white and black snow in a visually striking way. This required a fully CG environment, complete with detailed ice cracks and FX-driven snow effects.

The holographic table was particularly memorable, depicting the Arrakis desert and the sandworm consuming Desmond.
This scene was one of the most technically demanding, but the final result was stunning, and we're immensely proud of it.

We also created a full CG mechanical robot battle sequence featuring MEK robots in a dynamic, fully digital junkyard environment.

Additionally, we designed a CG bull that needed to feel grounded in reality while hinting at evolutionary traits from thousands of years in the future. This balance between realism and futurism made it an intriguing challenge.

Another exciting addition was the CG lizard, created as the Prince's toy. This was a fun creature to design. The animators and riggers enjoyed figuring out its unique movements for the sequence.

Lastly, the Anirul world posed a fascinating challenge, requiring the development of a fully CG environment filled with intricate graphical elements. Collaborating with Territory Studios, we designed a detailed library of information stored within the Sisterhood, adding depth to this captivating world.

The opening sequence with the giant robots is visually stunning. Can you walk us through the creative process behind designing and animating these massive machines?

Martyn Culpitt (VFX Supervisor): We wanted the giant robots to feel both immense and imposing, yet grounded in reality. It was equally important that they exude intelligence, reflecting the advanced technical knowledge of their era. The concept department played a crucial role in this process, drawing inspiration from large, heavy machinery and industrial vehicles to give these robots a tangible sense of scale and presence.

Once the concepts were approved, we transitioned to building detailed 3D models. The animation team collaborated closely with the modeling team to bring these machines to life, focusing on every aspect of their movement.
We emphasized scale and weight, ensuring that each motion felt deliberate, heavy, and consistent with their massive size and mechanical nature.

Jeremy Mesana (Animation Supervisor): As with most characters, we always start with a motion study, aiming to nail down locomotion and scale before we start shot production. Finding early nuance in a character's movement usually saves time once shot production begins. For the robots, we focused on how the legs moved early on, while still in the concept stage, so that we could give mobility feedback to assets during the modeling stage. For the blaster fire, we again dove in early to figure out the mechanics of where the blaster originates, as well as how the laser tracking and blaster firing would work. Making solid headway early on these things helped the shots move much faster when it came to shot production.

How did you approach the challenge of integrating the robots seamlessly into the war zone environment? What techniques were used to ensure their scale felt imposing?

Martyn Culpitt (VFX Supervisor): When we received the RAW plates, they featured soldiers running through the frame, with detailed ground debris and practical special effects explosions. These elements provided a strong foundation for integrating the CG robots seamlessly into the scene.

Building on this, we added even more debris and significantly extended the environment to enhance the sense of scale and depth. To fully immerse the robots in the action, we layered in additional FX elements, such as explosions, flying debris, and atmospheric effects, ensuring the CG blended seamlessly with the live-action footage and amplified the intensity of the scene.

We focused on animating the robots to convey their massive scale, ensuring every movement felt deliberate and heavy.
Their positioning was carefully crafted to emphasize their imposing and menacing presence, amplifying their sense of threat.

Jeremy Mesana (Animation Supervisor): Having enough reference elements in the environment, like bodies and debris, gave the robots a nice scale reference. The ground interaction also helped convey scale through the laser explosions and collision effects.

Can you tell us more about the interplay between practical effects and CGI in this sequence? Were there any physical elements used as a base for the robots?

Martyn Culpitt (VFX Supervisor): There were a few practical elements integrated into the scene. For example, a blue laser mounted on a structure was used to simulate where the robot might scan its surroundings. While it provided a useful reference, the timing didn't align with the robot's animation, so we painted it out and replaced it with a CG version that synced perfectly with the robot's movements.

Similarly, practical explosions were set off to mimic the robot firing its weapon. These worked well but were enhanced with additional CG explosions and FX simulations to help sell the scale of the impact. While the scene already featured some atmospheric elements and practical explosions, we layered in new FX versions to ensure they aligned seamlessly with the CG robot's depth in the scene and its animation, enhancing the overall integration and intensity of the shot.

Adrien Vallecilla (CG Supervisor): HBO constructed a simple mechanical structure to represent the height of the main robot, with an animated laser mounted on it and aimed at the running soldiers. We replaced the mechanical structure and laser with our CGI robot, extended the set, added traveling blasters, smoke, and explosions over the plate, and enhanced the set explosions with additional CG elements.

The plates provided a solid foundation, but replacing the practical laser was challenging.
This required us to completely paint out the set laser and incorporate CG interactive lighting from the robot's laser and blasters into the plate elements.

What were the key technical hurdles in creating such a complex opening scene, and how did the team at Image Engine overcome them?

Martyn Culpitt (VFX Supervisor): One of the biggest challenges we faced was the existing practical elements in the plates, which included dynamic atmospherics, lasers, and explosions. This required us to be extremely careful in how we integrated our FX work to ensure everything blended seamlessly. Fortunately, the client provided lidar scans, which were invaluable for placing the robots and the CG environment extension at the correct depth and blending everything together. It was a creative challenge for sure.

Adrien Vallecilla (CG Supervisor): One of the biggest concerns was the amount of FX fires, smoke, and explosions needed to make the battlefield feel realistic. Early in FX, we created a library of explosions, fires, smoke plumes, and ambient smoke elements that animation placed in the scenes. We created cross-software attributes so animation could offset, scale, and duplicate those FX elements, and once approved, these were published to lighting and rendered. Because we render our animation dailies with Arnold too, this approach allowed us to creatively approve the battlefield at the animation stage, saving time downstream and avoiding unnecessary caching in FX.

The sandworms are iconic to the Dune universe. How did you ensure your version of these creatures remained faithful to their legacy while introducing new visual elements?

Martyn Culpitt (VFX Supervisor): We really studied the Dune movies to thoroughly understand the movements of the sandworms and their sense of scale. The worms' larger movements are smooth and subtle, which contributes to their impressive scale.
However, it's the moments when the worms pause and interact with the actors that reveal the depth and detail of their animation, truly bringing them to life. The movement of the worm's teeth, lips, and mouth conveys an incredible sense of scale and believability.

To achieve this, we developed a sophisticated rig for the teeth, allowing them to move in response to the creature's vibrations as it traverses its environment and interacts with the sand. We also ensured that the individual hairs could be animated as needed.

What was the biggest challenge in creating the sandworms' interactions with the environment, especially with the intricate movement of sand?

Martyn Culpitt (VFX Supervisor): We carefully analyzed numerous shots from the films to understand how the worms move through the sand, focusing on the flow of their movement and the way the sand flows and interacts with them. Particular attention was given to how the sand behaves in each shot and the individual elements that contribute to the worms' immense sense of scale.

When you truly study the films, you realize the sand isn't just a simple element; it's a complex interplay of layers, movements, and volumes. There are countless FX passes working together to create the illusion of dynamic sand that enhances the scale and realism of the shots.

This detailed study of sand movement was essential to integrating our creature seamlessly into its environment and ensuring it remained faithful to the films' aesthetic and vision.

Adrien Vallecilla (CG Supervisor): The main challenge ended up being the distance from the ground. Because the sand was made of particles, we needed to simulate millions of particles to make the simulations look realistic at that scale.
The simulation and rendering time slowed down the creative process, but we found ways to optimize and speed things up by splitting the elements into sections and reviewing particles and volumes separately.

One of the creative challenges we faced was balancing the volumes with the sand particles. There isn't much reference for such a massive amount of moving sand, so it took us some time to find the right balance. Another challenge was that the sequence took place in a dream, and for creative reasons, the worm had a different scale in each shot. This posed a challenge for FX and required them to build flexible templates to accommodate scale adjustments for the worm.

Could you share insights into the textures and detailing of the worm's skin? How did the team achieve such a realistic yet otherworldly appearance?

Martyn Culpitt (VFX Supervisor): Having the Dune movies as a reference was hugely instrumental in shaping the look of our creature. We dedicated significant time to studying the details and nuances, ensuring we accurately replicated the textural qualities and intricate model details of the worms.
To enhance the realism, we added fine surface hairs and introduced additional textural breakup, bringing even more depth and life to the creature in our shots.

Geoff Pedder (Asset Supervisor): We studied the worms in the movies quite closely and used a mixture of ZBrush sculpting for larger-scale features, render-time displacement, dust maps, and groom to achieve the fine details and light response we were looking for.

Clement Feuillet (Texture Artist): Modeling did a phenomenal job detailing the high-resolution worm, so I was able to bake the very high-poly details of the worm to use as a base for texturing. We then looked for good references for the worm's scales and skin textures in the movies, internet references, and different types of stones. We blended different scan data of rock and sand textures, starting with the high-resolution baked data as our foundation. I created the scale mask by comparing the low-poly and high-poly meshes, and then we worked our way from large details down to smaller ones, using all the data we had generated before: occlusion to add intricate sand details between scales, and gradients and curvature masks to create a more weathered appearance on the tips of the scales that come into direct contact with the sand.

EungHo Lo (Organic Modeller): The specific appearance of the worm was already depicted in the movie, so it was a great starting point for the design process. The level of detail varies greatly depending on how much the worm appears in the movie and how close it gets to the camera. Since most of its appearances focus on the head and open mouth, we dedicated significant effort to that part.
Additionally, because it always appears with sand, creating realistic sand greatly enhanced the realism of the worm.

Since the worm lives in the sand, remains constantly dry, and has a very hard shell, we tried to capture that texture and skin detail by combining the hardness of rocks with the dry, rough texture of an elephant's skin.

The lizard-like robot adds a unique touch to the narrative. How did you conceptualize its design and movement to balance its mechanical and organic features?

Martyn Culpitt (VFX Supervisor): The original design of the Lizard was provided as a concept model by the client, which we further refined and enhanced to add greater detail and bring more life to its overall appearance. Our aim was to create a creature that seamlessly blended the organic qualities of a real lizard with the precision of a machine. To achieve this, we conducted in-depth movement studies, focusing on key details to realize this unique vision.

Animating the Lizard was particularly challenging, as we needed to maintain a lifelike quality while incorporating a mechanical edge. The team did an incredible job striking that balance. The difficulty increased once the Lizard was stabbed by Desmond's knife. We had to carefully adjust its movements to convey damage, giving it a staccato-like, jerky quality. In the end, the final animation captured the feel that Alison, the showrunner, was after, and she absolutely loved it.

Jeremy Mesana (Animation Supervisor): In its undamaged state, we played the lizard as more organic in its movements, referencing a real-life lizard for motion.
It's when it got damaged that we leaned more into a mechanical, staccato-like robot motion to further sell that it was one of the thinking machines.

What was the most intricate part of animating the robot lizard, particularly when showcasing its interactions with the characters and environment?

Martyn Culpitt (VFX Supervisor): The transformation from the ball to the lizard unfurling on Pruwet's hand was one of the most complex challenges we faced. The lizard's design featured countless scales that needed to be individually animated while also blending seamlessly as the ball unfurled. When in its spherical form, the lizard has an abundance of scales that make up the sphere, but as it transitions into its lizard form, nearly half of those scales disappear.

We had to find a precise and intricate way to blend and hide the scales during the transformation. It was almost like a Transformer-style transition, which made the process both fascinating and challenging for the team. Creating this effect required a highly complex rig and a lot of time and effort to get everything just right, but it was incredibly rewarding to see it come together.

Jeremy Mesana (Animation Supervisor): The transformation was the most intricate task for the lizard: unfurling itself from its ball state, springing to life, and making a believable transition from one state to the other.

Were there any specific inspirations or references the team used to develop its behavior and personality?

Jeremy Mesana (Animation Supervisor): We gathered a slew of real-life lizard footage as reference for the motion.

The holograms in Dune: Prophecy are incredibly intricate. What were the creative inspirations behind their design and color palette?

Martyn Culpitt (VFX Supervisor): The holographic table sequence was one of the most challenging yet creative moments in the series. Holograms have been portrayed in countless ways before, and we were determined not to mimic what had already been done.
To create something unique, we collaborated closely with Mike, the visual effects supervisor, and Alison, the showrunner, presenting numerous concepts for how the holographic table would look and function.

Our goal was to make it feel tangible, as though the table was truly projecting light that interacted with surfaces to create the images and worlds we see. One of the biggest hurdles was reconciling the differences between the on-set cameras and the projectors within the scene. This required extensive problem-solving to ensure the visuals came together seamlessly.

We also drew inspiration from the first film, particularly the scene where Paul reviews footage in his bedroom of the Fremen walking across the sands of Arrakis. Incorporating elements from that moment helped add continuity and authenticity to our designs. The footage in that scene has a distinct degradation, an aged, film-like quality reminiscent of a real projector, and we wanted to ensure our holographic table carried a similar aesthetic.

Alison, the showrunner, was clear that the holographic table should not feel like sleek, futuristic technology. Instead, she wanted it grounded in the real world, with a tactile, analog quality rather than a polished, digitally enhanced look.

Xander Kennedy (CG Supervisor): One of our main inspirations for the hologram was the Dune universe itself. There are a lot of conceptual elements that could make up a hologram, but grounding ourselves within the lore and creative elements of our universe was crucial. It became clear that the style of the Dune holograms was very much an old-school projector look and feel. The fluttering of a film roll in front of a bulb creates unique artifacts, jitters, and light rays that help produce a believable image. The color palette has a similar story. All the elements were built up around true photography, as if the hologram had been filmed and recorded in a real environment on Arrakis.
Paying careful attention to our key and fill ratios allowed us to build up the holographic treatment correctly. The warmth of Arrakis played in our favor, and using more saturated mids and shadows really gave the hologram a sense of depth while keeping the highlights as a point of connection to the projectors.

How did the team handle the technical challenges of rendering holographic elements that interacted dynamically with characters and settings?

Xander Kennedy (CG Supervisor): The team did really well collaborating with each other. Some of our render layers had to be rendered out of lighting and passed back to FX in order to generate the correct projected light rays to connect to the highlights of the final image. It was a bit of a cyclical process, but by relying on some of our pipeline templates and on key leads and artists, we were able to manage a staggered process that ended up being pretty hands-free. Each shot had dozens of AOVs, multiple artists, and FX elements split out so they could be recombined in a way that was sympathetic to layering. To squeeze the most height and depth out of the hologram, the comp team also spent a lot of time meticulously placing additional elements that gave a lot of life to the final image.

Were there any unexpected technical or creative challenges encountered during the production?

Martyn Culpitt (VFX Supervisor): The project presented numerous creative and technical challenges, but the crew excelled at prioritizing issues and devising solutions within an incredibly tight deadline. Each sequence was unique in its demands, requiring significant creative effort to solve, often accompanied by unforeseen technical hurdles.

One particularly challenging sequence was the ice lake environment. Its black-and-white snow presented a complex problem, as we needed to fine-tune the values and variations of snow on a per-shot basis.
This required developing new tools and workflows across multiple departments to achieve the desired results.

The team's collaborative spirit was truly remarkable, and their unwavering support for one another made all the difference. It's a testament to their dedication and hard work that we were able to deliver a project of such high creative quality.

Adrien Vallecilla (CG Supervisor): One of the challenges was the Anirul genetic library. It is always hard to work with holographic elements, but the challenging aspect of this work is that the environment and holograms are characters in the episode. On top of having many different communication tools, Anirul itself needed to look beautiful and contribute to the artistic universe of Dune. Each asset in this environment has different attributes built in for animation, so the artists were able to move, pulse, and fade the holograms in and out. We needed to create publishable, attribute-driven assets to accomplish this in our 3D scenes. The animators were animating attributes in Maya that allowed the holograms to behave how we wanted, and then these were published to lighting for proper rendering. One of the goals of having the holograms in 3D space was to achieve realistic reflections and lighting that would interact with the environment as Anirul communicates. Organizing all these elements into an Anirul environment that elegantly tells the story was one of the most challenging aspects of this work.

Xander Kennedy (CG Supervisor): I think something we didn't anticipate was the level of technical and creative direction we needed to give the physical projector lightbulbs of the holographic table itself.

We ended up having to make a small rig and animation that was instanced onto each of the light placements, using a variety of attributes, just for one shot in which we had to reveal how the projector turned on.
This sent us down a small rabbit hole where we had to think, "Okay, how would this thing reveal a bulb from underneath the table surface and turn on a light in an aesthetically pleasing way?" This had input from almost every department, from asset, animation, FX, lighting, and comp, to compose the right effect, and it was quite extensive. I don't know if anyone other than the artists working on it would ever fully grasp the full extent of what we achieved.

I think the biggest technical hurdle for me was designing a system that let us art direct black and white snow within each shot while still having each depend on and influence the other cohesively. The snowstorm was 60-some shots, all of varying density, storm intensity, volumetrics, particles, and black and white levels, which meant we had to carefully design a system that could expose control over quite a variety of creative attributes, whilst still being able to hit creative notes on each shot and pivot if need be. Eventually, we were able to come up with a system that minimized FX simulations, maximized attribute control, and provided flexibility for other departments to use. It meant FX, lighting, and comp had to work closely and share setups and knowledge extensively throughout the process.

Looking back on the project, what aspects of the visual effects are you most proud of?

Martyn Culpitt (VFX Supervisor): It's incredibly rewarding to look back at the end of a project and reflect on the challenges we faced and overcame, emerging with an exceptional body of work we can all be proud of. Every project is a team effort, but on this one, the crew truly went above and beyond to problem-solve, support one another, and uphold the creative and visual quality of the show to an extraordinary standard.

The work is genuinely outstanding, and the team's passion was evident from the very beginning.
Everyone was deeply committed to making this project the best it could be, and their excitement to contribute was inspiring.

There are so many sequences I'm particularly proud of. The Arrakis world with the worm and the FX simulations look fantastic. The holographic table sequence stands out with its distinctive and complex creative build; it's a testament to the immense effort that went into it. One of my favorite scenes is the Ice Lake. The amount of environmental FX simulation work, all driven by the emotions of Valya, was pretty incredible. There are so many layers from FX, and comp really took this and made it special. The Anirul world we created is so unique, with its trees and complexity; I've never seen anything quite like it. It was a huge collaborative effort with the team from Territory Studios to bring their graphics to life in our full CG world.

This was truly an unforgettable project to be part of, and I feel incredibly fortunate to have worked on it.

How long have you worked on this show?

Martyn Culpitt (VFX Supervisor): Start date: Jan 8, 2024. Finished: Nov 15, 2024.

What's the VFX shots count?

Martyn Culpitt (VFX Supervisor): 208.

What is your next project?

Martyn Culpitt (VFX Supervisor): I can't disclose that yet, but I can tell you it's going to be a pretty cool project.

A big thanks for your time.

WANT TO KNOW MORE?

Image Engine: Dedicated page about Dune: Prophecy on Image Engine website.

Mike Enriquez & Terron Pratt: Here's my interview of Production VFX Supervisor Mike Enriquez and VFX Producer Terron Pratt.

Vincent Frei, The Art of VFX, 2025