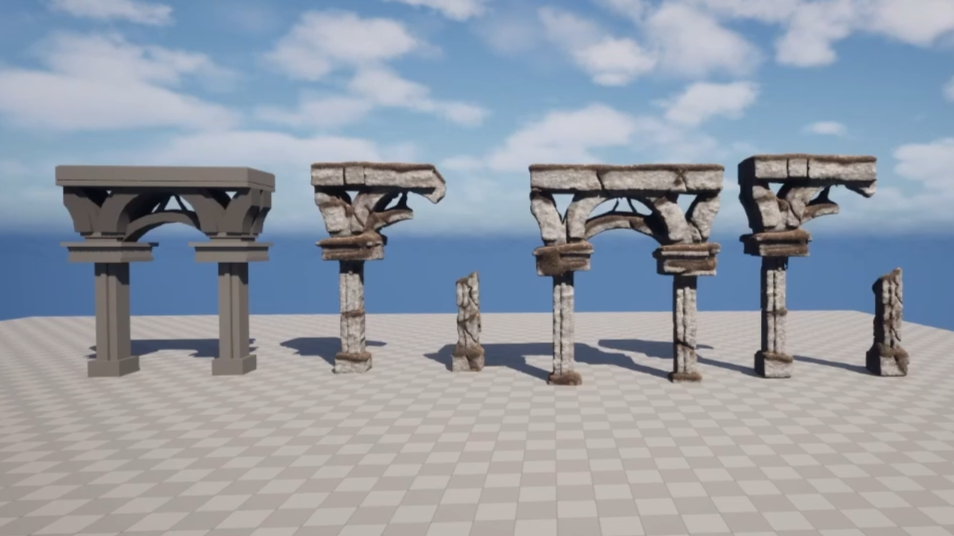

Hey Creative Souls! Are you ready to take your artistry to the next level? Dive into the world of 3D art with these five amazing 3D asset kits that every artist needs! These premium models are not just tools; they're your secret weapon for unleashing creativity and efficiency in your projects! With ready-made assets, you'll find endless possibilities and innovative ways to express your imagination. Embrace the change and watch your creative journey flourish! Remember, every masterpiece starts with a single step! Let's create magic together!

#3DArt #CreativeJourney #ArtistsUnite #Inspiration #ArtCommunity