
WWW.FASTCOMPANY.COM
Linda McMahon just handed A.1. steak sauce an unbelievable opportunity

On a panel this week, U.S. Secretary of Education Linda McMahon, the former WWE CEO who is now charged with making sweeping decisions for 100 million American school children, repeatedly referred to AI technology as “A1.” For McMahon, who was speaking at the ASU+GSV Summit for educators, it was an embarrassing mistake. But for Kraft Heinz’s A.1. steak sauce, it was basically free product placement, and the brand didn’t hesitate to take its cut.

McMahon’s first slipup occurred when she shared an anecdote with the audience about “a school system that’s going to start making sure that first graders, or even pre-Ks, have A1 teaching in every year.” Matters only got worse when she continued, “Kids are sponges. They just absorb everything. It wasn’t all that long ago that it was, ‘We’re going to have internet in our schools!’ Now let’s see A1 and how that can be helpful.”

Well, um, maybe it can help the cafeteria meatloaf taste better? A.1. was thinking along the same lines. The brand jumped on Instagram yesterday with a spoofed ad for a McMahon-inspired A.1. bottle, complete with a photoshopped version of the sauce with the label “For educational purposes only” accompanied by the slogan, “Agree, best to start them early.” The post was captioned, “You heard her. Every school should have access to A.1.”

Heinz is no stranger to thinking up limited-edition novelty goods, from its neon pink Barbie-cue sauce to a Taylor Swift-inspired ranch and portable Velveeta packets. But this is the first time (that we know of) that the company has used its stunt marketing resumé to make a jab at a political figure.

So far, A.1.’s loyal fans seem to be in support of its “new sauce.” “My husband wants a bottle for his desk,” one commenter wrote under the brand’s post. “He teaches middle school, at least until they replace him with A.1.”


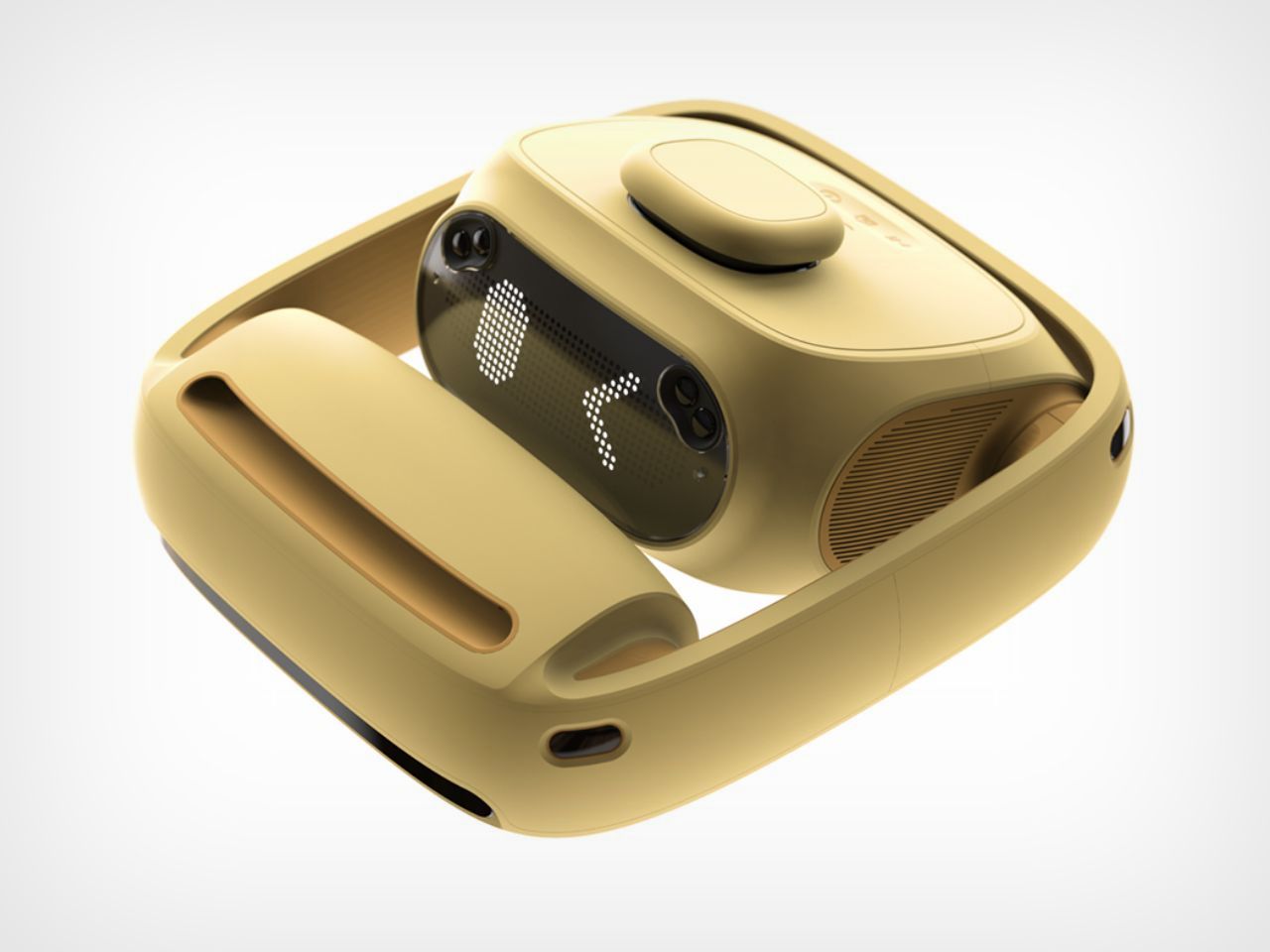

WWW.YANKODESIGN.COM
This ‘Friendly’ AI-Powered Robotic Vacuum Manages Your Home and Allows More Family Time

As artificial intelligence continues to advance, creating art, writing stories, and even making decisions for us, there are still fundamental aspects of life where human presence remains essential. Parenting is one such domain: raising a child, nurturing a family, and forming emotional bonds cannot be automated. However, technology can play a crucial role in alleviating the burden of strenuous household tasks, enabling parents to focus on what truly matters: quality time with their children. Keepy, a home-operator robotic vacuum cleaner, is designed to support overall housework beyond just cleaning.

The inspiration behind Keepy is deeply personal, drawn from the creator’s childhood memories of bedtime stories. While books provided valuable knowledge, the lasting impact came from the warmth and affection of shared moments with parents. Unfortunately, modern parents, particularly those in dual-income households, often struggle to find time for meaningful interactions due to the exhaustion of daily responsibilities.

Designer: Dohyuk Joo

Instead of reading to their children, many parents resort to educational automation devices, such as reading pens. While these tools are useful, they cannot replicate the warmth and comfort of a parent’s voice. Keepy seeks to change this dynamic by handling household management tasks, ensuring parents have more time to be present with their children.

Throughout history, household appliances have evolved to reduce the time and effort spent on domestic tasks. Washing machines freed families from the drudgery of manual laundry, dishwashers streamlined kitchen cleanup, and robotic vacuum cleaners introduced automated floor cleaning. Keepy builds on this tradition by going beyond simple automation: it actively manages household operations.

Household management is more than just cleaning; it includes tracking grocery expiration dates, ensuring laundry is removed on time, keeping up with school events, and managing essential supplies. Traditional appliances perform their tasks in a fixed manner, but with the advancement of IoT and AI, home appliances like Keepy are becoming more proactive.

Unlike conventional robotic vacuum cleaners, Keepy acts as a household assistant, identifying emerging needs and filling in blind spots. By integrating with smart home appliances, Keepy can analyze supply usage, predict shortages, and even recommend or automatically order essential maintenance items. As an active home operator, Keepy patrols the house, ensuring that everything runs smoothly. It can remind parents of unpaid utility bills, check food stock levels, and turn off appliances when needed. In essence, it operates as a silent yet efficient partner in managing the complexities of family life.

Beyond functionality, Keepy’s design sets it apart. Inspired by a playful toy patrol officer, its warm and approachable appearance ensures it blends seamlessly into a home environment. An Emoji Face LED display makes it engaging for children and pets, reducing any potential apprehension towards an AI-driven home assistant.

By reimagining the role of a robotic vacuum, Keepy extends beyond cleaning to become an integral part of family life. It embodies a future where technology enhances human connections rather than replacing them, allowing parents to spend less time managing chores and more time creating lasting memories with their children.


WWW.WIRED.COM
US Tariffs Could Make Smartphones Dumber

Donald Trump’s tariffs are likely to make tech manufacturers more risk averse, which could stymie innovation in favor of keeping costs down.


APPLEINSIDER.COM
Apple Vision Pro 2, iPhone 20, and iOS 19, on the AppleInsider Podcast

We're just weeks away from iOS 19, just months from iPhone 17, but already we're looking far ahead to the future of the iPhone and the Apple Vision Pro, all on this week's episode of the AppleInsider Podcast.

Image: The current Apple Vision Pro

Normally by now you'd expect everyone to be deep into rumors and predictions for WWDC 2025, and there is some of that. There is a recurring spate and possibly even spat about rumors concerning iOS 19 and which pundit has the best details, but for some reason the spotlight this week has been on the far future of the iPhone 20, the Apple Vision Pro 2, and what comes next for the Apple Watch.

Well, there's also been just a little attention on the price of iPhones following whatever today's tariffs are.


ARCHINECT.COM
Carlo Ratti to introduce SEA BEYOND Ocean Literacy Centre at Venice Biennale

Here’s a preview of an upcoming presentation at the Venice Architecture Biennale designed by Carlo Ratti Associati in collaboration with the UNESCO Intergovernmental Oceanographic Commission (IOC) and Prada Group. The SEA BEYOND Ocean Literacy Centre is to be located on San Servolo Island and will educate the public on issues related to water, ocean science, and their relationship to the human environment during climate change.

Multiple rooms equipped with data-driven storytelling abilities courtesy of design studio Dotdotdot will visualize a greater understanding of challenges that affect, among other bodies of water, the Venice Lagoon. The experience unfolds exponentially, based on the Powers of Ten concept from Charles and Ray Eames’ 1977 film.

The Biennale opens on May 10th, 2025.

Images courtesy Dotdotdot


GAMINGBOLT.COM
Death Stranding 2’s Development is “95 Percent Complete” – Kojima

The big flagship single-player PlayStation title that we’ve been awaiting for a while is finally coming soon in the form of Death Stranding 2: On the Beach, and as its release date draws nearer, creative director and Kojima Productions boss Hideo Kojima has offered an update on how development is going.

As spotted by Twitter user @Genki_JPN, Kojima revealed during a recent episode of the official Kojima Productions podcast that Death Stranding 2’s development is roughly at the 95 percent mark. Likening the project to a 24-hour cycle, Kojima went on to say that it is currently at 10 PM, which means it’s within touching distance of the finish line.

Of course, given how close to launch the game is, this is what most would have expected (or at least hoped for), but it’s good to have confirmation nonetheless, especially for those who may have been concerned about potential delays.

Death Stranding 2: On the Beach launches exclusively for the PS5 on June 26.

Hideo Kojima says Death Stranding 2 is currently 95% complete while speaking on his Koji10 radio show! #DS2 “It’s at about 95% now… It feels like it is 10 o’clock (PM), speaking of 24 hours, Koji Pro’s DS2 is at 10 o’clock (PM), there are 2 hours left” via @koji10_tbs pic.twitter.com/sOZjY7ZLg7
Genki✨ (@Genki_JPN), April 10, 2025


WWW.THEVERGE.COM
This algorithm wasn’t supposed to keep people in jail, but it does in Louisiana

A new report from ProPublica published Thursday showed how the Louisiana government is using TIGER (Targeted Interventions to Greater Enhance Re-entry), a computer program developed by Louisiana State University to prevent recidivism, to approve or deny parole applications based on a score calculating an applicant’s risk of returning to prison. Though the algorithm was initially designed to be used as a tool to help rehabilitate inmates by taking their background into account, a TIGER score – which uses data from an inmate’s time before prison, such as work history, criminal convictions, and age at first arrest – is now the sole measure of one’s eligibility.

In interviews, several prisoners revealed that their scheduled parole hearings had been abruptly canceled after their TIGER score determined that they were at “moderate risk” of returning to prison. No factor in a TIGER score takes into account an inmate’s behavior in prison or attempts at rehab, and criminal justice activists argue that the score penalizes one’s racial and demographic background. (According to current state Department of Corrections data, half of Louisiana’s prison population of roughly 13,000 would automatically fall in the moderate or high risk categories.)

One of those prisoners was Calvin Alexander, a 70-year-old partially blind man in a wheelchair, who had been in prison for 20 years, but had spent his time in drug rehab, anger management therapy, and professional skills development, and had a clean disciplinary record. “People in jail have … lost hope in being able to do anything to reduce their time,” he told ProPublica.

Parole via algorithm is not just legal in Louisiana, but a deliberate element in Republican Governor Jeff Landry’s crusade against parole. Last year, he signed a law eliminating parole for all prisoners who committed a crime after August 1st, 2024, making Louisiana the first state to eliminate parole in 24 years. A subsequent law decreed that currently-incarcerated prisoners would only be eligible for parole if the algorithm determined they were “low risk”.


WWW.MARKTECHPOST.COM
Step by Step Coding Guide to Build a Neural Collaborative Filtering (NCF) Recommendation System with PyTorch

This tutorial will walk you through using PyTorch to implement a Neural Collaborative Filtering (NCF) recommendation system. NCF extends traditional matrix factorisation by using neural networks to model complex user-item interactions.

Introduction

Neural Collaborative Filtering (NCF) is a state-of-the-art approach for building recommendation systems. Unlike traditional collaborative filtering methods that rely on linear models, NCF utilizes deep learning to capture non-linear relationships between users and items.

In this tutorial, we’ll:

- Prepare and explore the MovieLens dataset
- Implement the NCF model architecture
- Train the model
- Evaluate its performance
- Generate recommendations for users

Setup and Environment

First, let’s install the necessary libraries and import them:

    !pip install torch numpy pandas matplotlib seaborn scikit-learn tqdm

    import os
    import numpy as np
    import pandas as pd
    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.utils.data import Dataset, DataLoader
    import matplotlib.pyplot as plt
    import seaborn as sns
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import LabelEncoder
    from tqdm import tqdm
    import random

    # Fix the random seeds so runs are repeatable
    torch.manual_seed(42)
    np.random.seed(42)
    random.seed(42)

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print(f"Using device: {device}")

Data Loading and Preparation

We’ll use the MovieLens 100K dataset, which contains 100,000 movie ratings from users:

    !wget -nc https://files.grouplens.org/datasets/movielens/ml-100k.zip
    !unzip -q -n ml-100k.zip

    # u.data is tab-separated: user_id, item_id, rating, timestamp
    ratings_df = pd.read_csv('ml-100k/u.data', sep='\t',
                             names=['user_id', 'item_id', 'rating', 'timestamp'])

    # u.item is pipe-separated and Latin-1 encoded
    movies_df = pd.read_csv('ml-100k/u.item', sep='|', encoding='latin-1',
                            names=['item_id', 'title', 'release_date', 'video_release_date',
                                   'IMDb_URL', 'unknown', 'Action', 'Adventure',
                                   'Animation', 'Children', 'Comedy', 'Crime',
                                   'Documentary', 'Drama', 'Fantasy', 'Film-Noir',
                                   'Horror', 'Musical', 'Mystery', 'Romance',
                                   'Sci-Fi', 'Thriller', 'War', 'Western'])

    print("Ratings data:")
    print(ratings_df.head())

    print("\nMovies data:")
    print(movies_df[['item_id', 'title']].head())

    print(f"\nTotal number of ratings: {len(ratings_df)}")
    print(f"Number of unique users: {ratings_df['user_id'].nunique()}")
    print(f"Number of unique movies: {ratings_df['item_id'].nunique()}")
    print(f"Rating range: {ratings_df['rating'].min()} to {ratings_df['rating'].max()}")
    print(f"Average rating: {ratings_df['rating'].mean():.2f}")

    plt.figure(figsize=(10, 6))
    sns.countplot(x='rating', data=ratings_df)
    plt.title('Distribution of Ratings')
    plt.xlabel('Rating')
    plt.ylabel('Count')
    plt.show()

    # Binary target: ratings of 4 or 5 count as a positive interaction
    ratings_df['label'] = (ratings_df['rating'] >= 4).astype(np.float32)

Data Preparation for NCF

Now, let’s prepare the data for our NCF model:

    train_df, test_df = train_test_split(ratings_df, test_size=0.2, random_state=42)

    print(f"Training set size: {len(train_df)}")
    print(f"Test set size: {len(test_df)}")

    # IDs are 1-based, so the maximum ID is used as the count and the
    # embedding tables below are sized with +1
    num_users = ratings_df['user_id'].max()
    num_items = ratings_df['item_id'].max()

    print(f"Number of users: {num_users}")
    print(f"Number of items: {num_items}")

    class NCFDataset(Dataset):
        def __init__(self, df):
            self.user_ids = torch.tensor(df['user_id'].values, dtype=torch.long)
            self.item_ids = torch.tensor(df['item_id'].values, dtype=torch.long)
            self.labels = torch.tensor(df['label'].values, dtype=torch.float)

        def __len__(self):
            return len(self.user_ids)

        def __getitem__(self, idx):
            return {
                'user_id': self.user_ids[idx],
                'item_id': self.item_ids[idx],
                'label': self.labels[idx]
            }

    train_dataset = NCFDataset(train_df)
    test_dataset = NCFDataset(test_df)

    batch_size = 256
    train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

Model Architecture

Now we’ll implement the Neural Collaborative Filtering (NCF) model, which combines Generalized Matrix Factorization (GMF) and Multi-Layer Perceptron (MLP) components:

    class NCF(nn.Module):
        def __init__(self, num_users, num_items, embedding_dim=32, mlp_layers=[64, 32, 16]):
            super(NCF, self).__init__()

            # Separate embedding tables for the GMF and MLP branches
            self.user_embedding_gmf = nn.Embedding(num_users + 1, embedding_dim)
            self.item_embedding_gmf = nn.Embedding(num_items + 1, embedding_dim)

            self.user_embedding_mlp = nn.Embedding(num_users + 1, embedding_dim)
            self.item_embedding_mlp = nn.Embedding(num_items + 1, embedding_dim)

            # MLP tower: Linear + ReLU for each entry in mlp_layers
            mlp_input_dim = 2 * embedding_dim
            self.mlp_layers = nn.ModuleList()
            for idx, layer_size in enumerate(mlp_layers):
                if idx == 0:
                    self.mlp_layers.append(nn.Linear(mlp_input_dim, layer_size))
                else:
                    self.mlp_layers.append(nn.Linear(mlp_layers[idx-1], layer_size))
                self.mlp_layers.append(nn.ReLU())

            # Final layer consumes the concatenated GMF and MLP outputs
            self.output_layer = nn.Linear(embedding_dim + mlp_layers[-1], 1)
            self.sigmoid = nn.Sigmoid()

            self._init_weights()

        def _init_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Embedding):
                    nn.init.normal_(m.weight, mean=0.0, std=0.01)
                elif isinstance(m, nn.Linear):
                    nn.init.kaiming_uniform_(m.weight)
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)

        def forward(self, user_ids, item_ids):
            # GMF branch: element-wise product of user and item embeddings
            user_embedding_gmf = self.user_embedding_gmf(user_ids)
            item_embedding_gmf = self.item_embedding_gmf(item_ids)
            gmf_vector = user_embedding_gmf * item_embedding_gmf

            # MLP branch: concatenated embeddings passed through the tower
            user_embedding_mlp = self.user_embedding_mlp(user_ids)
            item_embedding_mlp = self.item_embedding_mlp(item_ids)
            mlp_vector = torch.cat([user_embedding_mlp, item_embedding_mlp], dim=-1)

            for layer in self.mlp_layers:
                mlp_vector = layer(mlp_vector)

            concat_vector = torch.cat([gmf_vector, mlp_vector], dim=-1)

            # squeeze(-1) keeps the batch dimension even for a batch of one
            prediction = self.sigmoid(self.output_layer(concat_vector)).squeeze(-1)

            return prediction

    embedding_dim = 32
    mlp_layers = [64, 32, 16]
    model = NCF(num_users, num_items, embedding_dim, mlp_layers).to(device)

    print(model)

Training the Model

Let’s train our NCF model:

    criterion = nn.BCELoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001, weight_decay=1e-5)

    def train_epoch(model, data_loader, criterion, optimizer, device):
        model.train()
        total_loss = 0

        for batch in tqdm(data_loader, desc="Training"):
            user_ids = batch['user_id'].to(device)
            item_ids = batch['item_id'].to(device)
            labels = batch['label'].to(device)

            optimizer.zero_grad()
            outputs = model(user_ids, item_ids)
            loss = criterion(outputs, labels)

            loss.backward()
            optimizer.step()

            total_loss += loss.item()

        return total_loss / len(data_loader)

    def evaluate(model, data_loader, criterion, device):
        model.eval()
        total_loss = 0
        predictions = []
        true_labels = []

        with torch.no_grad():
            for batch in tqdm(data_loader, desc="Evaluating"):
                user_ids = batch['user_id'].to(device)
                item_ids = batch['item_id'].to(device)
                labels = batch['label'].to(device)

                outputs = model(user_ids, item_ids)
                loss = criterion(outputs, labels)
                total_loss += loss.item()

                predictions.extend(outputs.cpu().numpy())
                true_labels.extend(labels.cpu().numpy())

        from sklearn.metrics import roc_auc_score, average_precision_score
        auc = roc_auc_score(true_labels, predictions)
        ap = average_precision_score(true_labels, predictions)

        return {
            'loss': total_loss / len(data_loader),
            'auc': auc,
            'ap': ap
        }

    num_epochs = 10
    history = {'train_loss': [], 'val_loss': [], 'val_auc': [], 'val_ap': []}

    for epoch in range(num_epochs):
        train_loss = train_epoch(model, train_loader, criterion, optimizer, device)

        # The held-out test split doubles as the validation set here
        eval_metrics = evaluate(model, test_loader, criterion, device)

        history['train_loss'].append(train_loss)
        history['val_loss'].append(eval_metrics['loss'])
        history['val_auc'].append(eval_metrics['auc'])
        history['val_ap'].append(eval_metrics['ap'])

        print(f"Epoch {epoch+1}/{num_epochs} - "
              f"Train Loss: {train_loss:.4f}, "
              f"Val Loss: {eval_metrics['loss']:.4f}, "
              f"AUC: {eval_metrics['auc']:.4f}, "
              f"AP: {eval_metrics['ap']:.4f}")

    plt.figure(figsize=(12, 4))

    plt.subplot(1, 2, 1)
    plt.plot(history['train_loss'], label='Train Loss')
    plt.plot(history['val_loss'], label='Validation Loss')
    plt.title('Loss During Training')
    plt.xlabel('Epoch')
    plt.ylabel('Loss')
    plt.legend()

    plt.subplot(1, 2, 2)
    plt.plot(history['val_auc'], label='AUC')
    plt.plot(history['val_ap'], label='Average Precision')
    plt.title('Evaluation Metrics')
    plt.xlabel('Epoch')
    plt.ylabel('Score')
    plt.legend()

    plt.tight_layout()
    plt.show()

    torch.save(model.state_dict(), 'ncf_model.pth')
    print("Model saved successfully!")

Generating Recommendations

Now let’s create a function to generate recommendations for users:

    def generate_recommendations(model, user_id, n=10):
        model.eval()

        # Score every item (IDs 1..num_items) for this one user
        user_ids = torch.tensor([user_id] * num_items, dtype=torch.long).to(device)
        item_ids = torch.tensor(range(1, num_items + 1), dtype=torch.long).to(device)

        with torch.no_grad():
            predictions = model(user_ids, item_ids).cpu().numpy()

        items_df = pd.DataFrame({
            'item_id': range(1, num_items + 1),
            'score': predictions
        })

        # Drop items the user has already rated
        user_rated_items = set(ratings_df[ratings_df['user_id'] == user_id]['item_id'].values)
        items_df = items_df[~items_df['item_id'].isin(user_rated_items)]

        top_n_items = items_df.sort_values('score', ascending=False).head(n)
        recommendations = pd.merge(top_n_items, movies_df[['item_id', 'title']], on='item_id')

        return recommendations[['item_id', 'title', 'score']]

    test_users = [1, 42, 100]

    for user_id in test_users:
        print(f"\nTop 10 recommendations for user {user_id}:")
        recommendations = generate_recommendations(model, user_id, n=10)
        print(recommendations)

        print(f"\nMovies that user {user_id} has rated highly (4-5 stars):")
        user_liked = ratings_df[(ratings_df['user_id'] == user_id) & (ratings_df['rating'] >= 4)]
        user_liked = pd.merge(user_liked, movies_df[['item_id', 'title']], on='item_id')
        # Print explicitly: a bare expression inside a loop displays nothing
        print(user_liked[['item_id', 'title', 'rating']])

Evaluating the Model Further

Let’s evaluate our model further by computing some additional metrics:

    def evaluate_model_with_metrics(model, test_loader, device):
        model.eval()
        predictions = []
        true_labels = []

        with torch.no_grad():
            for batch in tqdm(test_loader, desc="Evaluating"):
                user_ids = batch['user_id'].to(device)
                item_ids = batch['item_id'].to(device)
                labels = batch['label'].to(device)

                outputs = model(user_ids, item_ids)

                predictions.extend(outputs.cpu().numpy())
                true_labels.extend(labels.cpu().numpy())

        from sklearn.metrics import roc_auc_score, average_precision_score, precision_recall_curve, accuracy_score

        # Threshold the scores at 0.5 for accuracy
        binary_preds = [1 if p >= 0.5 else 0 for p in predictions]

        auc = roc_auc_score(true_labels, predictions)
        ap = average_precision_score(true_labels, predictions)
        accuracy = accuracy_score(true_labels, binary_preds)

        precision, recall, thresholds = precision_recall_curve(true_labels, predictions)

        plt.figure(figsize=(10, 6))
        plt.plot(recall, precision, label=f'AP={ap:.3f}')
        plt.xlabel('Recall')
        plt.ylabel('Precision')
        plt.title('Precision-Recall Curve')
        plt.legend()
        plt.grid(True)
        plt.show()

        return {
            'auc': auc,
            'ap': ap,
            'accuracy': accuracy
        }

    metrics = evaluate_model_with_metrics(model, test_loader, device)
    print(f"AUC: {metrics['auc']:.4f}")
    print(f"Average Precision: {metrics['ap']:.4f}")
    print(f"Accuracy: {metrics['accuracy']:.4f}")

Cold Start Analysis

Let’s analyze how our model performs for new users or users with few ratings (the cold start problem):

    # Bucket users by how many ratings they have
    user_rating_counts = ratings_df.groupby('user_id').size().reset_index(name='count')
    user_rating_counts['group'] = pd.cut(user_rating_counts['count'],
                                         bins=[0, 10, 50, 100, float('inf')],
                                         labels=['1-10', '11-50', '51-100', '100+'])

    print("Number of users in each rating frequency group:")
    print(user_rating_counts['group'].value_counts())

    def evaluate_by_user_group(model, ratings_df, user_groups, device):
        results = {}

        for group_name, user_ids in user_groups.items():
            group_ratings = ratings_df[ratings_df['user_id'].isin(user_ids)]

            group_dataset = NCFDataset(group_ratings)
            group_loader = DataLoader(group_dataset, batch_size=256, shuffle=False)

            if len(group_loader) == 0:
                continue

            model.eval()
            predictions = []
            true_labels = []

            with torch.no_grad():
                for batch in group_loader:
                    user_ids = batch['user_id'].to(device)
                    item_ids = batch['item_id'].to(device)
                    labels = batch['label'].to(device)

                    outputs = model(user_ids, item_ids)

                    predictions.extend(outputs.cpu().numpy())
                    true_labels.extend(labels.cpu().numpy())

            from sklearn.metrics import roc_auc_score
            try:
                auc = roc_auc_score(true_labels, predictions)
                results[group_name] = auc
            except ValueError:
                # AUC is undefined when a group contains only one class
                results[group_name] = None

        return results

    user_groups = {}
    for group in user_rating_counts['group'].unique():
        users_in_group = user_rating_counts[user_rating_counts['group'] == group]['user_id'].values
        user_groups[group] = users_in_group

    group_performance = evaluate_by_user_group(model, test_df, user_groups, device)

    plt.figure(figsize=(10, 6))
    groups = []
    aucs = []

    for group, auc in group_performance.items():
        if auc is not None:
            groups.append(group)
            aucs.append(auc)

    plt.bar(groups, aucs)
    plt.xlabel('Number of Ratings per User')
    plt.ylabel('AUC Score')
    plt.title('Model Performance by User Rating Frequency (Cold Start Analysis)')
    plt.ylim(0.5, 1.0)
    plt.grid(axis='y', linestyle='--', alpha=0.7)
    plt.show()

    print("AUC scores by user rating frequency:")
    for group, auc in group_performance.items():
        if auc is not None:
            print(f"{group}: {auc:.4f}")

Business Insights and Extensions

    def analyze_predictions(model, data_loader, device):
        model.eval()
        predictions = []
        true_labels = []

        with torch.no_grad():
            for batch in data_loader:
                user_ids = batch['user_id'].to(device)
                item_ids = batch['item_id'].to(device)
                labels = batch['label'].to(device)

                outputs = model(user_ids, item_ids)

                predictions.extend(outputs.cpu().numpy())
                true_labels.extend(labels.cpu().numpy())

        results_df = pd.DataFrame({
            'true_label': true_labels,
            'predicted_score': predictions
        })

        plt.figure(figsize=(12, 6))

        plt.subplot(1, 2, 1)
        sns.histplot(results_df['predicted_score'], bins=30, kde=True)
        plt.title('Distribution of Predicted Scores')
        plt.xlabel('Predicted Score')
        plt.ylabel('Count')

        plt.subplot(1, 2, 2)
        sns.boxplot(x='true_label',
y='predicted_score', data=results_df) plt.title('Predicted Scores by True Label') plt.xlabel('True Label (0=Disliked, 1=Liked)') plt.ylabel('Predicted Score') plt.tight_layout() plt.show() avg_scores = results_df.groupby('true_label')['predicted_score'].mean() print("Average prediction scores:") print(f"Items user disliked (0): {avg_scores[0]:.4f}") print(f"Items user liked (1): {avg_scores[1]:.4f}") analyze_predictions(model, test_loader, device) This tutorial demonstrates implementing Neural Collaborative Filtering, a deep learning recommendation system combining matrix factorization with neural networks. Using the MovieLens dataset and PyTorch, we built a model that generates personalized content recommendations. The implementation addresses key challenges, including the cold start problem and provides performance metrics like AUC and precision-recall curves. This foundation can be extended with hybrid approaches, attention mechanisms, or deployable web applications for various business recommendation scenarios. Here is the Colab Notebook. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 85k+ ML SubReddit. Mohammad AsjadAsjad is an intern consultant at Marktechpost. He is persuing B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. 
Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.
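As a companion to the tutorial's cold start analysis, the following is a minimal, self-contained sketch of how `pd.cut` assigns users to rating-frequency groups. It reuses the tutorial's bin edges and labels; the function name `bucket_users_by_activity` and the toy ratings table are assumptions made purely for illustration. The point worth noticing is that `pd.cut` intervals are closed on the right by default, so a user with exactly 10 ratings lands in '1-10' and a user with exactly 100 ratings lands in '51-100', not '100+'.

```python
import pandas as pd


def bucket_users_by_activity(ratings: pd.DataFrame) -> pd.DataFrame:
    """Count ratings per user and assign each user to a frequency group.

    Hypothetical helper: mirrors the tutorial's grouping, but on any
    DataFrame that has a 'user_id' column.
    """
    counts = ratings.groupby("user_id").size().reset_index(name="count")
    # Intervals are (0, 10], (10, 50], (50, 100], (100, inf).
    counts["group"] = pd.cut(
        counts["count"],
        bins=[0, 10, 50, 100, float("inf")],
        labels=["1-10", "11-50", "51-100", "100+"],
    )
    return counts


# Toy data (made up): user 1 has 10 ratings, user 2 has 11, user 3 has 101.
toy_ratings = pd.DataFrame({
    "user_id": [1] * 10 + [2] * 11 + [3] * 101,
    "item_id": list(range(10)) + list(range(11)) + list(range(101)),
})

buckets = bucket_users_by_activity(toy_ratings)
print(buckets)
```

Because the edges are inclusive on the right, moving a single rating across a boundary changes a user's group, which is worth keeping in mind when comparing AUC between adjacent groups.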

-

WWW.IGN.COM
SpongeBob Tower Defense Codes (April 2025)
Last updated on April 10, 2025 - Added new SpongeBob Tower Defense codes!

Looking for SpongeBob Tower Defense codes? This is where we'll serve them up! You won't find any Krabby Patties here, but you will find working codes you can redeem for rewards. These include chests, Magic Conches for summoning exclusive units, gems, and more.

Working SpongeBob Tower Defense Codes (April 2025)
Below are all the active codes we've found for SpongeBob TD as of this month:
AChallenge2Open - 2x Challenge Crate (NEW)
FUNonaTuesday - 10x Rare+ Chest, 1x Challenge Crate, 2x Double Faction Points (1 Hour), 2x Double Challenge Tokens (1 Hour) (NEW)
500KFeelingOkay - 2,000 Gems, 10x Rare+ Chests, 1x Magic Conch

Expired SpongeBob Tower Defense Codes
Unfortunately, you've missed the chance to use the codes below, as they've now expired:
OnlytheBestUnitRefinementFactionGrind4RealCrateOfPossibilitiesStreamLoyalistOnlytheBestDelayisOkayFoolMeTwiceOPCode4RealRealOPCodeVeryOP200MILLIPLAYSWOAHAcceptingTheChallengeWStreamChatXMarksTheSpotSummonMeASecretPLS EDUCATEDCHALLENGERBugCrushers THANKSFORSUPPORTINGDoubleItTheHuntBegins1MillionLikesOPLetsRidePiratesLife4MeStacksonStacksWPatchChat

How to Redeem SpongeBob TD Codes
Log in to the SpongeBob Tower Defense experience on Roblox.
Play the game until you reach Level 10, which unlocks the codes feature.
On the left side of the screen, you'll see several colorful boxes.
The purple box with the clam icon in the bottom left corner is for codes.
Click Codes, then copy and paste your code into the box.
Redeem it and enjoy the rewards!

Why Isn't My SpongeBob TD Code Working?
There are two main reasons why your code might not be working when you submit it. Some codes for Roblox are case-sensitive, so you'll want to make sure you're copying each one directly from this article and pasting it in. We test each and every one of the codes before adding them here, to make sure they're working. Just be sure when you are copying them from here that you're not accidentally including any extra spaces. If one has snuck in there, just remove it and try the code again.

If you're taking them directly from the article and they're still not working, the other possibility is that they've expired. When a code has expired, the game will say so as soon as you hit redeem. But if the code has been entered incorrectly, it will say "invalid" instead.

How to Get More SpongeBob Tower Defense Codes
We check daily for new Roblox codes, so be sure to come back here regularly to see if new SpongeBob TD codes have dropped. Alternatively, you can drop by the Krabby Krew Discord server and scan for codes yourself.

What is SpongeBob Tower Defense in Roblox?
Roblox has its fair share of Tower Defense games, and even SpongeBob is included in the action. Whether you choose to initially recruit SpongeBob, Patrick, or Squidward, you'll need to protect Bikini Bottom at all costs. Just like other TD games, the aim is to position units carefully along a set route, where they'll automatically attack and wipe out a constant stream of enemies. With careful strategy, you'll complete a series of waves for each location and prevent enemies from ever reaching your base. Unlock new units, pick your favorites to take into battle, and unleash them when the time is right.

Lauren Harper is an Associate Guides Editor. She loves a variety of games but is especially fond of puzzles, horrors, and point-and-click adventures.
